Was there any resolution to this issue? I am facing the same problem and it's causing daily production failures. @KranthiPakala-MSFT and @Alina Sheiko

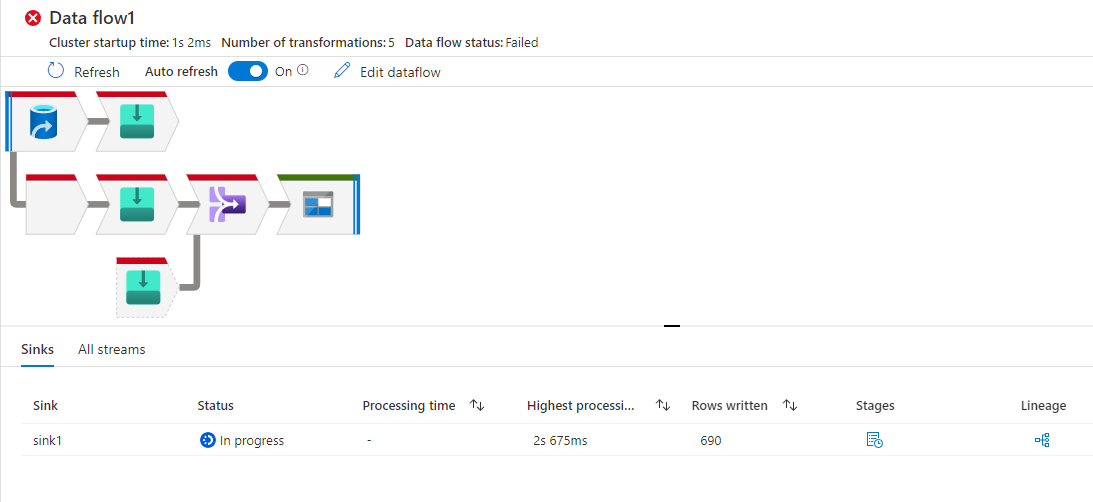

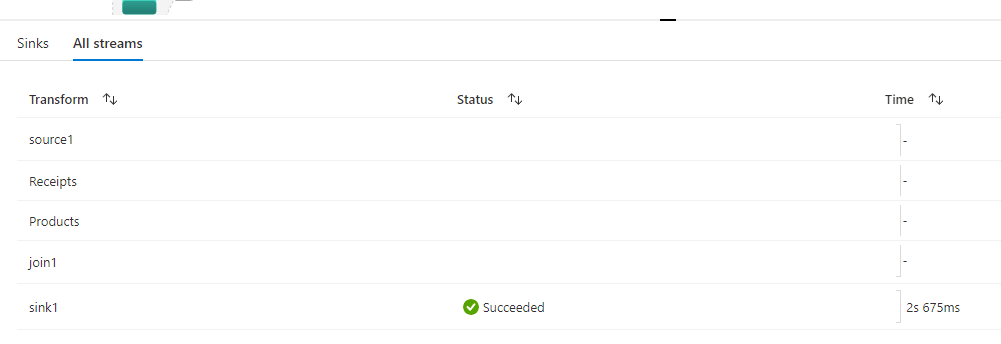

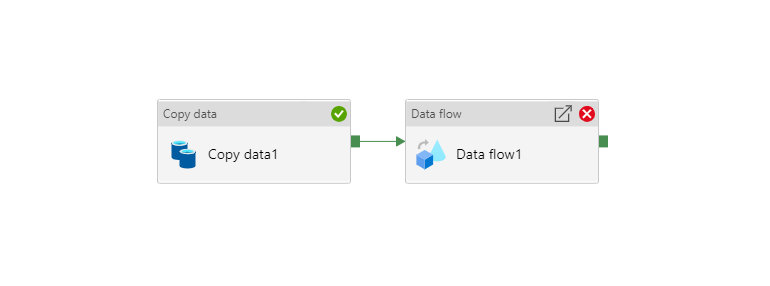

ADF dataflow failing during the pipeline run

Hello everyone!

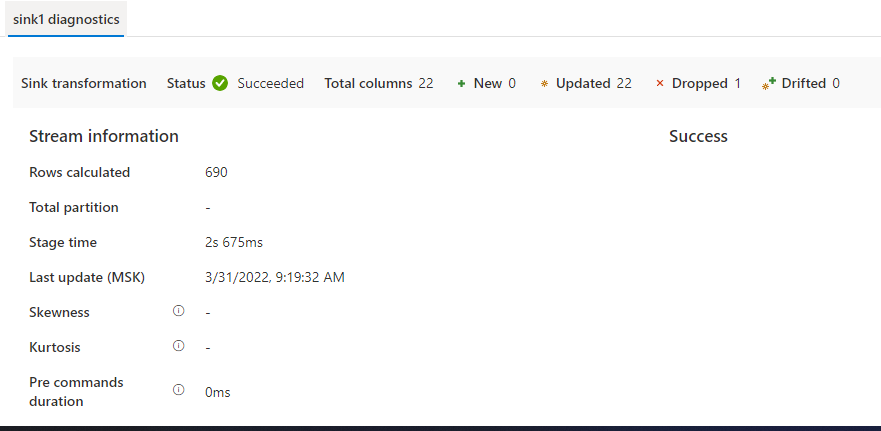

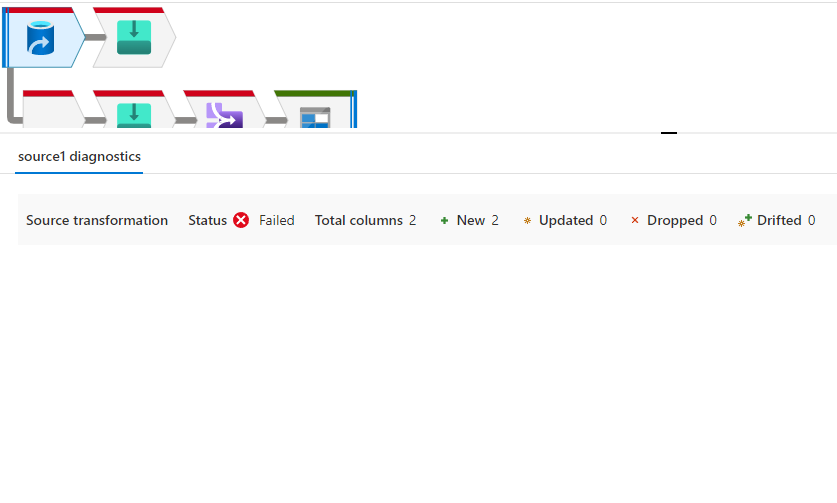

Every time I debug my pipeline in ADF, I get the following error on the data flow activity: "{"StatusCode":"DFExecutorUserError","Message":"Job failed due to reason: at Sink 'sink1': org.apache.hadoop.fs.azure.AzureException: com.microsoft.azure.storage.StorageException: This operation is not permitted on a non-empty directory.""

The process is: I load an XML file via an API into one folder in Azure Blob Storage (ADLS Gen2) using a Copy Data activity, then parse it to CSV using a data flow and write the final CSV file to the same container, but to a different folder.

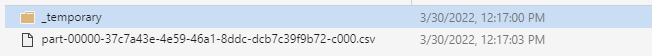

The Copy Data activity runs successfully, and the XML file does appear in Azure Storage. But when the data flow starts processing, it creates a folder inside my target folder containing a strangely named file and an empty folder, and then fails with the error.

In the data flow sink I set 'single partition' and 'output to single file', but it doesn't seem to work correctly. I also tried setting "clear the folder", but it didn't help.

I hope you have some ideas on how to deal with this issue.

Thanks for your help!

Azure Blob Storage

Azure Data Factory

2 answers


MarkKromer-MSFT 5,231 Reputation points Microsoft Employee Moderator
2022-06-13T05:17:16.003+00:00

Are you using Blob or ADLS Gen 2 for the files? Make sure you are using the correct Linked Service type.
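One way to check the linked service type is to open its JSON definition in ADF and look at `properties.type`. As far as I know, an Azure Blob Storage linked service shows `AzureBlobStorage`, while an ADLS Gen2 linked service shows `AzureBlobFS` and points at a `dfs.core.windows.net` endpoint. The snippet below is only a minimal sketch of that check: the function name, the linked service name, and the sample definition are hypothetical and not taken from the pipeline in the question.

```python
import json

# Linked service "type" values as they appear in ADF JSON definitions.
KNOWN_TYPES = {
    "AzureBlobStorage": "Azure Blob Storage",
    "AzureBlobFS": "Azure Data Lake Storage Gen2",
}


def describe_linked_service(definition_json: str) -> str:
    """Return a readable storage kind for a linked service JSON definition."""
    definition = json.loads(definition_json)
    # A missing "properties" or "type" key falls through to the "other" branch.
    ls_type = definition.get("properties", {}).get("type", "")
    return KNOWN_TYPES.get(ls_type, f"other ({ls_type or 'unknown'})")


# Hypothetical definition, for illustration only; paste your own JSON here.
example = """
{
  "name": "ExampleStorageLinkedService",
  "properties": {
    "type": "AzureBlobFS",
    "typeProperties": {"url": "https://<account>.dfs.core.windows.net"}
  }
}
"""

print(describe_linked_service(example))
```

If the storage account is ADLS Gen2 but the sink dataset's linked service reports Azure Blob Storage, that mismatch would be the first thing to correct before retrying the data flow.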