@René Tersteeg Yes, this should be possible with the SSML voice element. A single SSML document can contain several voice elements, and each one can use a voice in the same language or in a different one.

For example, I have used English and Hindi to test the scenario:

<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-IN">

<voice name="en-IN-NeerjaNeural">

This is how you say "Thank you very much" in Hindi.

</voice>

<voice name="hi-IN-SwaraNeural">

आपका बहुत बहुत धन्यवाद

</voice>

</speak>
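If you want to generate this kind of SSML from your application instead of writing it by hand, a minimal sketch in Python could look like the one below. The function name build_multivoice_ssml and its parameters are my own choices for illustration, not part of any SDK. It only uses the Python standard library and produces one voice element per (voice name, text) pair.

```python
from xml.sax.saxutils import escape, quoteattr

SSML_NS = "http://www.w3.org/2001/10/synthesis"


def build_multivoice_ssml(segments, lang="en-IN"):
    """Return an SSML document with one <voice> element per (voice_name, text) pair.

    build_multivoice_ssml is a hypothetical helper written for this answer.
    """
    parts = [f'<speak version="1.0" xmlns="{SSML_NS}" xml:lang={quoteattr(lang)}>']
    for voice_name, text in segments:
        # escape() protects &, < and > in the spoken text;
        # quoteattr() quotes and escapes the attribute value.
        parts.append(f"<voice name={quoteattr(voice_name)}>{escape(text)}</voice>")
    parts.append("</speak>")
    return "\n".join(parts)


if __name__ == "__main__":
    ssml = build_multivoice_ssml(
        [
            ("en-IN-NeerjaNeural", 'This is how you say "Thank you very much" in Hindi.'),
            ("hi-IN-SwaraNeural", "आपका बहुत बहुत धन्यवाद"),
        ]
    )
    print(ssml)
```

Escaping the text matters as soon as the sentences come from user input, because a stray & or < would otherwise make the SSML invalid.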

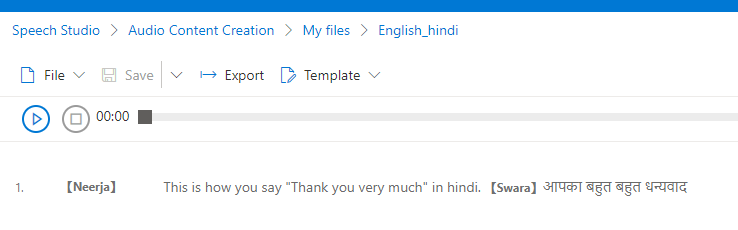

Perhaps the easiest way to test this is with the Audio Content Creation tool in Azure Speech Studio. Enter the sentences in the tool, select the text that should be spoken in English, and choose a voice for it. Then select the text that is in the other language (French, for example) and choose a voice for that as well. Save the file and listen to the audio before deciding which voices sound right. Later you can build your own SSML in your application and call the speech API directly to get the speech output.
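For the last step, calling the speech API directly, here is a rough sketch in Python that posts SSML to the text to speech REST endpoint. Please treat the details as assumptions to verify against the current documentation: the endpoint URL pattern, the header names, and the output format value are written as I understand the REST API, and the function names, the SPEECH_KEY and SPEECH_REGION environment variables, and the output file name are only placeholders chosen for this example.

```python
import os
import urllib.request


def build_tts_request(ssml, subscription_key, region):
    """Build (but do not send) a POST request carrying the SSML.

    Hypothetical helper; endpoint and headers are assumptions to check
    against the Azure text to speech REST documentation.
    """
    url = f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1"
    headers = {
        "Ocp-Apim-Subscription-Key": subscription_key,
        "Content-Type": "application/ssml+xml",
        "X-Microsoft-OutputFormat": "audio-16khz-128kbitrate-mono-mp3",
        "User-Agent": "multi-voice-ssml-sketch",
    }
    return urllib.request.Request(
        url, data=ssml.encode("utf-8"), headers=headers, method="POST"
    )


def synthesize_to_file(ssml, subscription_key, region, out_path):
    """Send the request and write the returned audio bytes to out_path."""
    request = build_tts_request(ssml, subscription_key, region)
    with urllib.request.urlopen(request) as response, open(out_path, "wb") as audio:
        audio.write(response.read())


if __name__ == "__main__":
    sample_ssml = (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-IN">'
        '<voice name="en-IN-NeerjaNeural">This is how you say "Thank you very much" in Hindi.</voice>'
        '<voice name="hi-IN-SwaraNeural">आपका बहुत बहुत धन्यवाद</voice>'
        "</speak>"
    )
    key = os.environ.get("SPEECH_KEY")
    region = os.environ.get("SPEECH_REGION")
    if key and region:
        synthesize_to_file(sample_ssml, key, region, "multi_voice.mp3")
    else:
        print("Set SPEECH_KEY and SPEECH_REGION to run the request.")
```

Building the request separately from sending it makes it easy to inspect the URL and headers before spending any quota. The Speech SDK is an alternative if you prefer not to handle HTTP yourself.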

The Audio Content Creation tool in the studio produces the same SSML as the English and Hindi example above.

If an answer is helpful, please accept or upvote it, which might help other community members reading this thread.