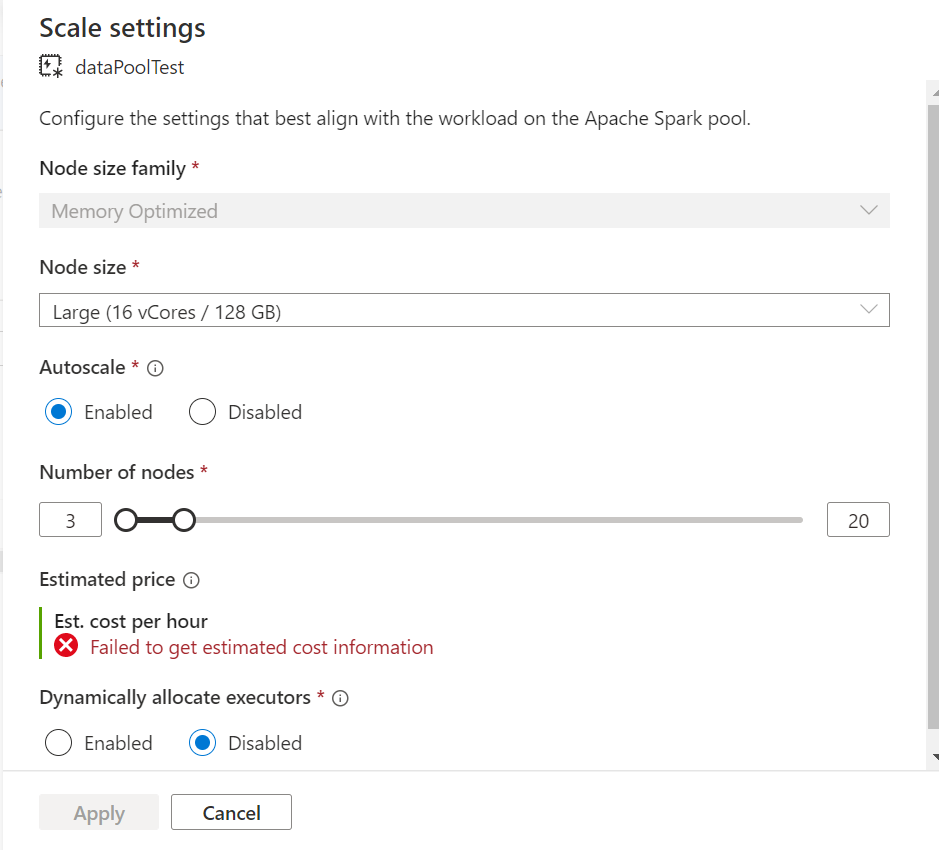

I think that @ShaikMaheer-MSFT is correct. I reduced my number of nodes back to the default of 3 and everything is working now. I assume this was caused by the quota limit that he mentions.
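For anyone who wants to sanity-check their own pool size against a quota, here is a rough sketch of the arithmetic. It assumes that a Large node uses 16 vCores and that the quota is counted in vCores. The quota value of 50 and the 10-node pool are made-up numbers for illustration only, so substitute the figures shown for your own workspace.

```python
# Rough check of whether a Spark pool's vCore demand fits under a quota.
# ASSUMPTIONS: 16 vCores per Large node, and a HYPOTHETICAL quota of 50 vCores.
VCORES_PER_LARGE_NODE = 16      # assumed node size, verify for your pool
HYPOTHETICAL_QUOTA_VCORES = 50  # placeholder, not a real limit


def required_vcores(node_count, vcores_per_node):
    """Total vCores the pool would request at the given node count."""
    return node_count * vcores_per_node


def fits_quota(node_count, vcores_per_node, quota_vcores):
    """True if the requested vCores do not exceed the quota."""
    return required_vcores(node_count, vcores_per_node) <= quota_vcores


for nodes in (3, 10):  # 3 is the default mentioned above; 10 is illustrative
    needed = required_vcores(nodes, VCORES_PER_LARGE_NODE)
    ok = fits_quota(nodes, VCORES_PER_LARGE_NODE, HYPOTHETICAL_QUOTA_VCORES)
    print(f"{nodes} nodes need {needed} vCores, fits quota: {ok}")
```

With these placeholder numbers, 3 nodes fit and 10 do not, which would match the behaviour I saw if the quota really was the cause.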

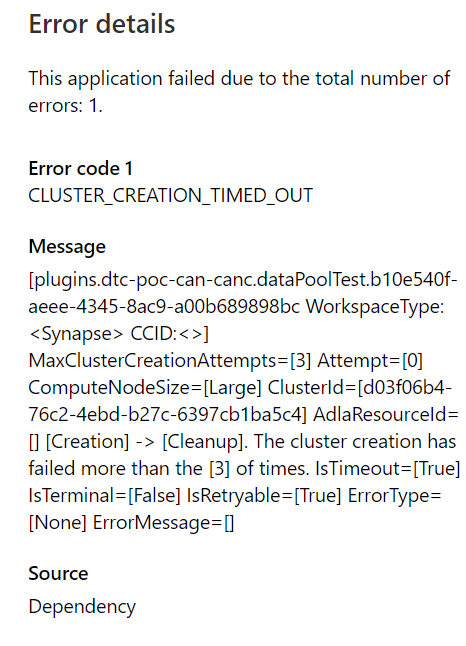

CLUSTER_CREATION_TIMED_OUT

Hi all, I am trying to read some data from storage accounts we have in PRD in our product, as part of a POC on that data. It is not going well: I can't even start a basic Spark session that just prints "test" to the console.

This is the basic command I am trying to run. After about 12 minutes of trying to start the session, it fails and I get this error message:

AggregateException: Failed to recover livy session in RecoverLivySessionAsync. (Livy session has failed. Session state: Error. Error code: CLUSTER_CREATION_TIMED_OUT. [plugins.dtc-poc-can-canc.dataPoolTest.b10e540f-aeee-4345-8ac9-a00b689898bc WorkspaceType:<Synapse> CCID:<>] MaxClusterCreationAttempts=[3] Attempt=[0] ComputeNodeSize=[Large] ClusterId=[d03f06b4-76c2-4ebd-b27c-6397cb1ba5c4] AdlaResourceId=[] [Creation] -> [Cleanup]. The cluster creation has failed more than the [3] of times. IsTimeout=[True] IsTerminal=[False] IsRetryable=[True] ErrorType=[None] ErrorMessage=[] Source: Dependency.)

--> CLUSTER_CREATION_TIMED_OUT: Livy session has failed. Session state: Error. Error code: CLUSTER_CREATION_TIMED_OUT. [plugins.dtc-poc-can-canc.dataPoolTest.b10e540f-aeee-4345-8ac9-a00b689898bc WorkspaceType:<Synapse> CCID:<>] MaxClusterCreationAttempts=[3] Attempt=[0] ComputeNodeSize=[Large] ClusterId=[d03f06b4-76c2-4ebd-b27c-6397cb1ba5c4] AdlaResourceId=[] [Creation] -> [Cleanup]. The cluster creation has failed more than the [3] of times. IsTimeout=[True] IsTerminal=[False] IsRetryable=[True] ErrorType=[None] ErrorMessage=[] Source: Dependency.
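The error text packs its details into `Name=[value]` pairs, which are easier to read once pulled apart. The snippet below is only a sketch for inspecting the message: `parse_fields` is a helper name made up here, not part of any Synapse or Livy API, and `ERROR_TEXT` is a shortened copy of the message above.

```python
import re

# Shortened copy of the error message above, keeping the Name=[value] pairs.
ERROR_TEXT = (
    "Error code: CLUSTER_CREATION_TIMED_OUT. "
    "MaxClusterCreationAttempts=[3] Attempt=[0] ComputeNodeSize=[Large] "
    "ClusterId=[d03f06b4-76c2-4ebd-b27c-6397cb1ba5c4] AdlaResourceId=[] "
    "IsTimeout=[True] IsTerminal=[False] IsRetryable=[True] "
    "ErrorType=[None] ErrorMessage=[]"
)


def parse_fields(text):
    """Return a dict of every Name=[value] pair found in the text.

    Hypothetical helper for reading the message; values stay as strings.
    """
    return dict(re.findall(r"(\w+)=\[([^\]]*)\]", text))


fields = parse_fields(ERROR_TEXT)
for name in ("ComputeNodeSize", "IsTimeout", "IsRetryable", "IsTerminal"):
    print(f"{name}: {fields[name]}")
```

Read this way, the message says the request was for Large nodes and that the failure was a timeout flagged as retryable rather than terminal.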

I can also see a message in the Manage tab, which looks like this:

sparkPool:

Does anyone have any suggestions?

Thanks.