Irregular behavior with Azure Data Factory Data Flows: debug results differ from pipeline run results

Hello,

I am building some pipelines for production in Data Factory. For one of my transformations I am using a Data Flow.

I am basically reading data from Parquet, doing some processing, and sinking the data into Azure SQL.

In my debug session, when I preview data at each step, everything seems normal and the output is as I expect.

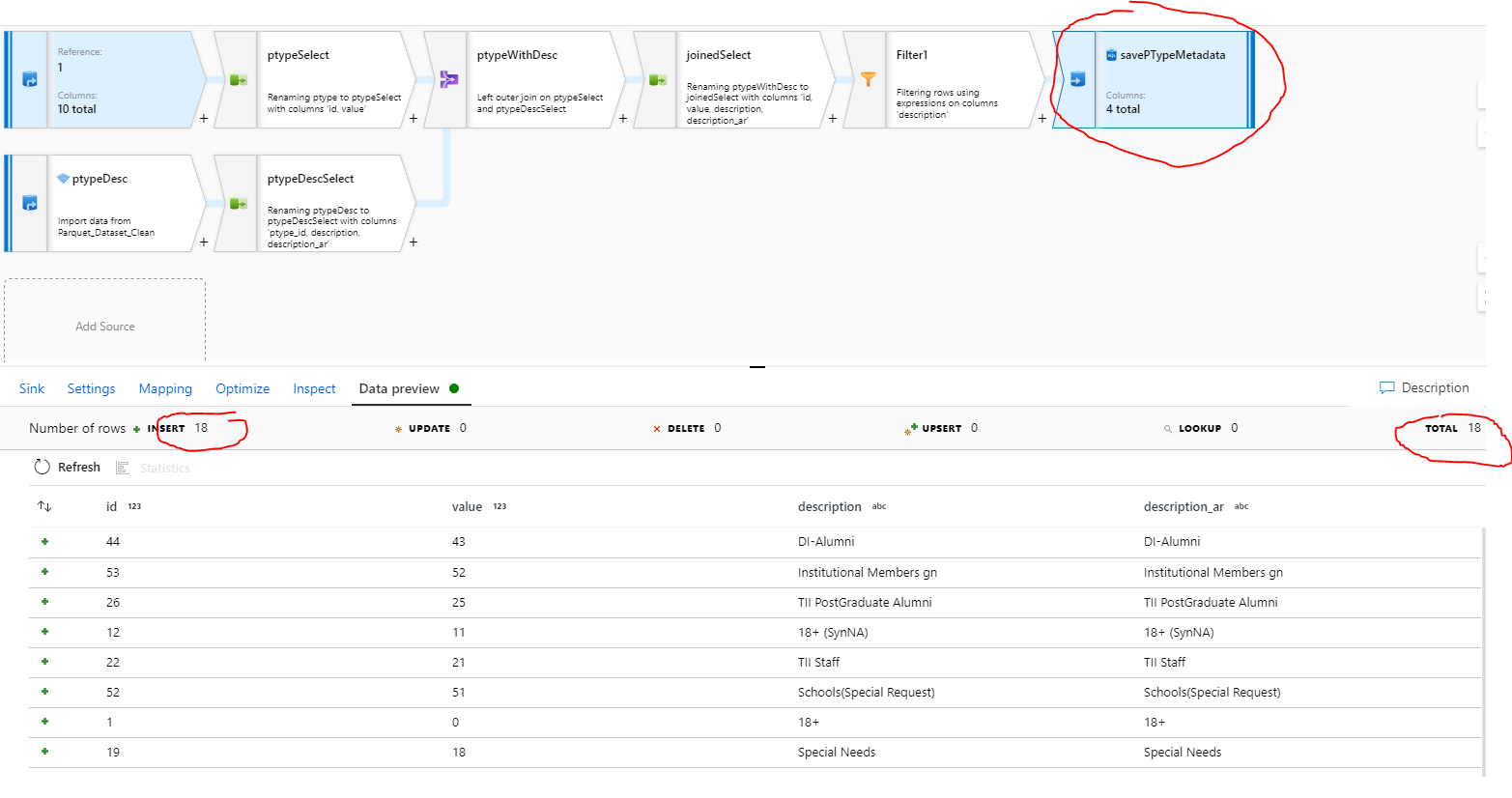

Below you can see an image of the data flow and its output inside the debug session. (Here I am showing the data preview of the last activity, which is the sink, so I expect this data to be written to the database when I run the pipeline.)

As shown, there are 18 records to be inserted at the end.

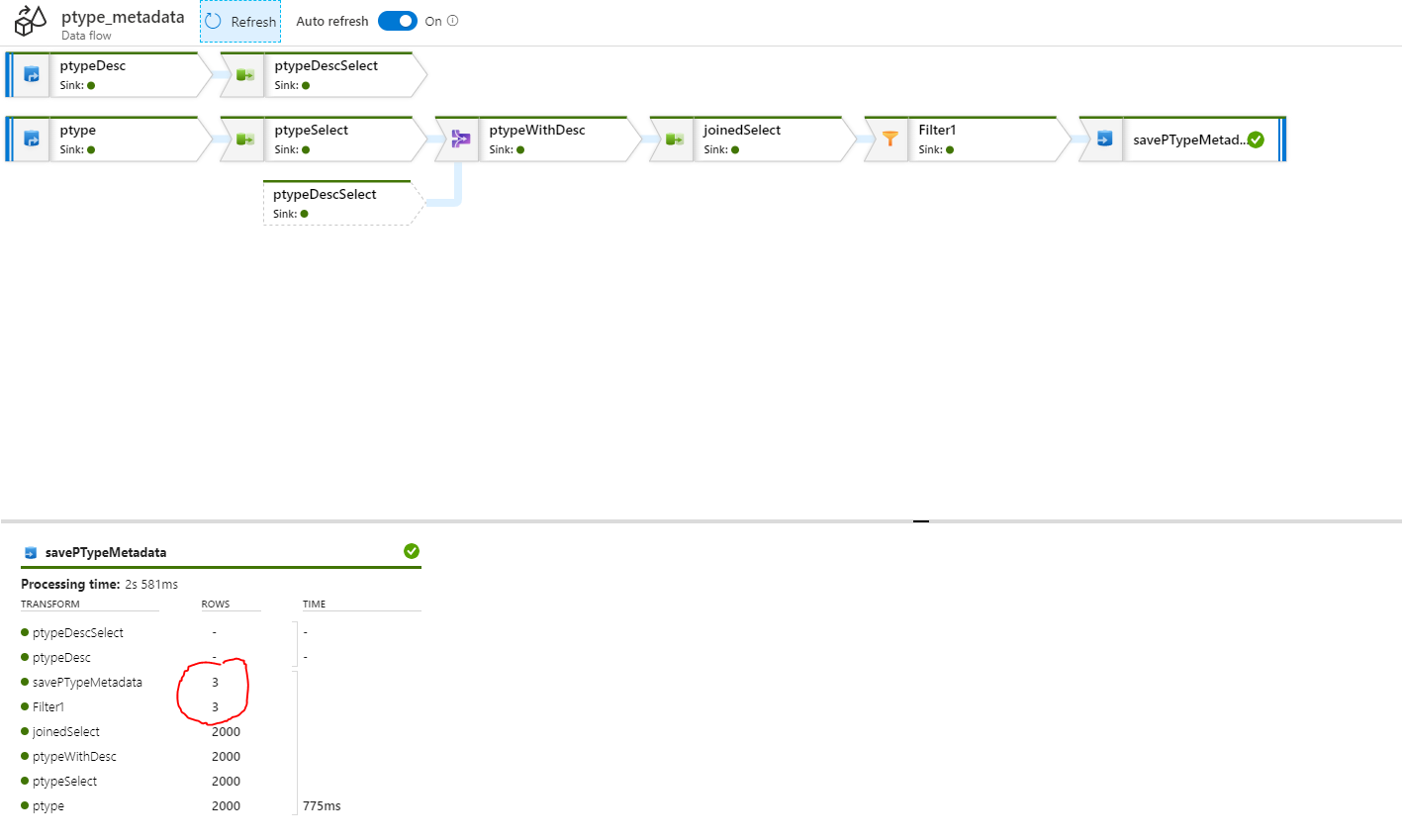

However, when I run the pipeline and check the results of the data flow, for some reason the 18 records become 3 records at the end, and only 3 records are inserted into my Azure SQL DB. You can check the picture below to see the execution of the data flow.

There are no parameters coming from the pipeline whatsoever; the run inside the debug session and the pipeline run are exactly the same. I just can't understand how the outputs of these two runs can differ. It just doesn't make any sense.