Cannot enable autorepair for VM scale set

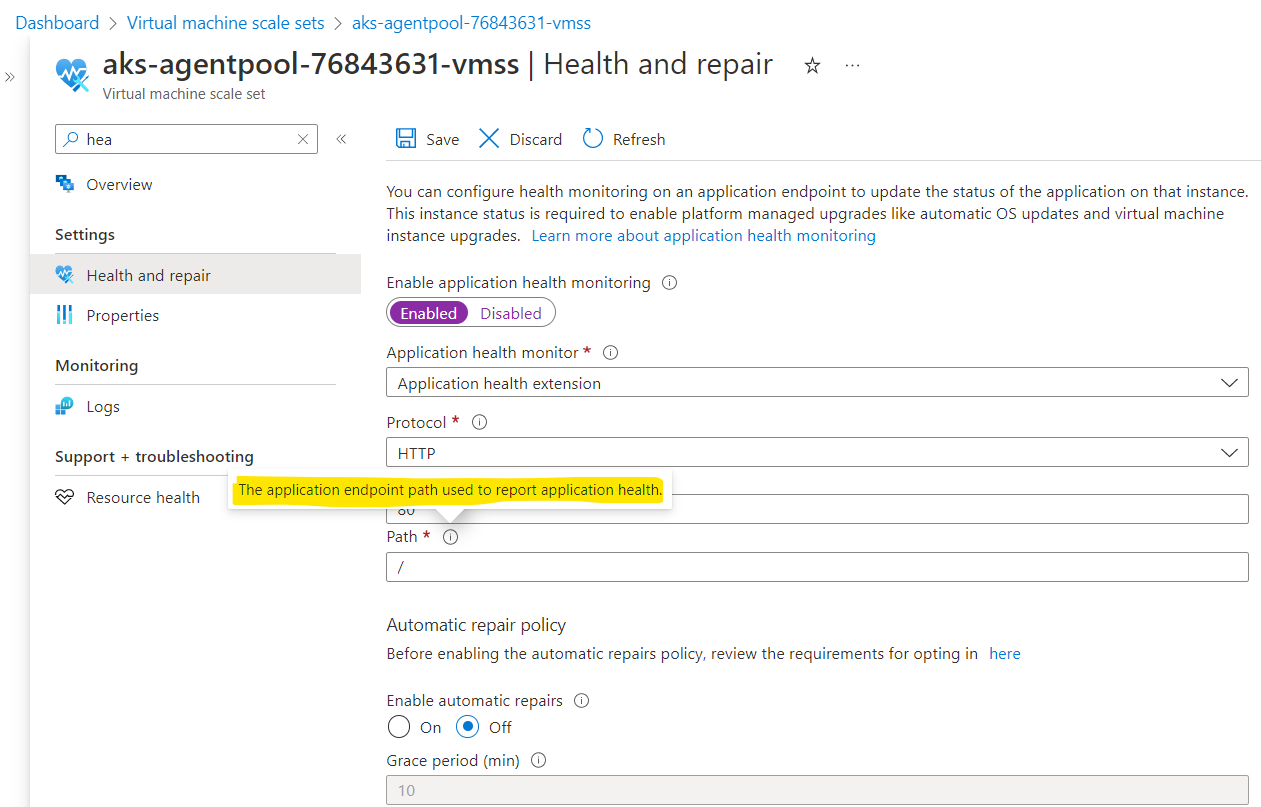

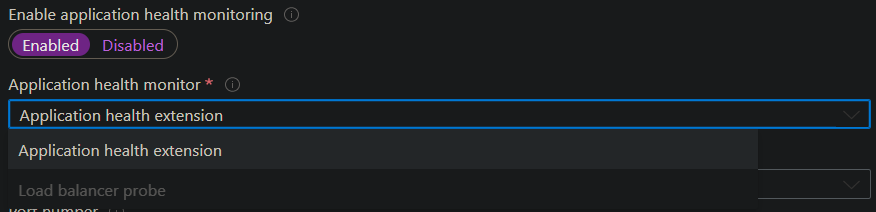

I can't enable autorepair for my VM scale set, whether I configure it through Terraform or manually in the Azure Portal. Despite setting `automatic_instance_repair` and `health_probe_id`, autorepair is still shown as disabled in the "Health and repair" tab. When I try to enable it manually in the Azure Portal, it won't let me assign a load balancer rule, whether the one created by Terraform or one I created manually: the load balancer probe is simply grayed out in the dropdown.

I've also tried to work around it by configuring a health check extension. Unfortunately, Azure complains that the VMs can't have both the probe and the extension configured. Health checks work fine for the instances; only autorepair can't be enabled. Am I missing something here?
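For context, the extension-based alternative would look roughly like this. It is a minimal sketch, assuming the Linux Application Health extension (publisher `Microsoft.ManagedServices`, type `ApplicationHealthLinux`, handler version `1.0`) pointed at the same HTTP endpoint as the load balancer probe (port 8080, path `/`); the extension name `HealthExtension` is hypothetical. Because a scale set uses either a load balancer probe or the extension for health monitoring, not both, this fragment replaces `health_probe_id` rather than sitting next to it.

```hcl
# Sketch only: fragment for the inside of the
# azurerm_linux_virtual_machine_scale_set "example" resource.
# It replaces health_probe_id; do not set both.
extension {
  name                 = "HealthExtension" # hypothetical name
  publisher            = "Microsoft.ManagedServices"
  type                 = "ApplicationHealthLinux"
  type_handler_version = "1.0"

  # Probe the same endpoint the load balancer probe checks
  settings = jsonencode({
    protocol    = "http"
    port        = 8080
    requestPath = "/"
  })
}

# Repair policy stays the same; health now comes from the extension
automatic_instance_repair {
  enabled      = true
  grace_period = "PT10M"
}
```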

Here is the complete Terraform script:

```hcl
variable "vm_password" {
  description = "Linux virtual machine root password"
  type        = string
  sensitive   = true
}

variable "frontend_ip_configuration_name" {
  description = "Frontend IP configuration name referenced by the load balancer"
  type        = string
  default     = "example-frontend-ip"
}

# Azure Provider source and version being used
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "=3.0.0"
    }
  }
}

# Configure the Microsoft Azure Provider
provider "azurerm" {
  features {}
}

# Configure a resource group
#
# All Azure resources have to be part of a resource group.
resource "azurerm_resource_group" "example" {
  name     = "example-resource-group"
  location = "UAE North"
}

# Configure a VM scale set
resource "azurerm_linux_virtual_machine_scale_set" "example" {
  admin_username                  = "exampleadmin"
  admin_password                  = var.vm_password
  disable_password_authentication = false # Use password auth instead of SSH keys
  instances                       = 2     # Default number of instances
  location                        = azurerm_resource_group.example.location
  name                            = "example-linux-virtual-machine-scale-set"
  resource_group_name             = azurerm_resource_group.example.name
  sku                             = "Standard_B1ls" # Smallest VM for testing purposes

  network_interface {
    name    = "example-network-interface"
    primary = true

    ip_configuration {
      name      = "internal"
      subnet_id = azurerm_subnet.example.id
      load_balancer_backend_address_pool_ids = [
        azurerm_lb_backend_address_pool.example.id
      ]
    }
  }

  os_disk {
    caching              = "None"
    storage_account_type = "Standard_LRS" # Lowest storage tier
  }

  # Find available images in region with command 'az vm image list' eg.
  # az vm image list --publisher "Canonical" --offer "Ubuntu" --sku "20_04-lts" --location "UAE North" --all
  source_image_reference {
    offer     = "0001-com-ubuntu-server-focal"
    publisher = "Canonical"
    sku       = "20_04-lts"
    version   = "latest"
  }

  custom_data = filebase64("start_web_server.sh") # Base64 encoded startup script

  # For some reason this currently does not enable self-healing
  automatic_instance_repair {
    enabled      = true
    grace_period = "PT10M"
  }

  health_probe_id = azurerm_lb_probe.example.id # Healthcheck used to determine if instance is healthy
}

# Configure a subnet
resource "azurerm_subnet" "example" {
  name                 = "example-subnet"
  resource_group_name  = azurerm_resource_group.example.name
  virtual_network_name = azurerm_virtual_network.example.name
  address_prefixes     = ["10.0.0.0/24"] # Occupied part of the address space (10.0.0.0 - 10.0.0.255)
}

# Configure a virtual network
resource "azurerm_virtual_network" "example" {
  address_space       = ["10.0.0.0/16"] # Address spaces available for subnets (10.0.0.0 - 10.0.255.255)
  location            = azurerm_resource_group.example.location
  name                = "example-virtual-network"
  resource_group_name = azurerm_resource_group.example.name
}

# Configure a load balancer
resource "azurerm_lb" "example" {
  location            = azurerm_resource_group.example.location
  name                = "example-lb"
  resource_group_name = azurerm_resource_group.example.name

  frontend_ip_configuration {
    name                 = var.frontend_ip_configuration_name
    public_ip_address_id = azurerm_public_ip.example.id
  }
}

# Configure a public IP
resource "azurerm_public_ip" "example" {
  allocation_method   = "Dynamic"
  location            = azurerm_resource_group.example.location
  name                = "example-public-ip"
  resource_group_name = azurerm_resource_group.example.name
}

# Configure a backend address pool
#
# This pool manages IPs that will be accessed by the load balancer.
resource "azurerm_lb_backend_address_pool" "example" {
  loadbalancer_id = azurerm_lb.example.id
  name            = "example-backend-address-pool"
}

# Configure a load balancer rule
#
# This rule tells which ports should be used on load balancer and VMs.
resource "azurerm_lb_rule" "example" {
  backend_port                   = 8080 # Port of application on the VM
  frontend_ip_configuration_name = var.frontend_ip_configuration_name
  frontend_port                  = 80 # Port on which load balancer will receive requests
  loadbalancer_id                = azurerm_lb.example.id
  name                           = "example-lb-rule"
  protocol                       = "Tcp"
  backend_address_pool_ids = [
    azurerm_lb_backend_address_pool.example.id
  ]
  probe_id = azurerm_lb_probe.example.id # Healthcheck used to determine if instance is healthy
}

# Configure a healthcheck probe
resource "azurerm_lb_probe" "example" {
  loadbalancer_id = azurerm_lb.example.id
  name            = "example-lb-probe"
  port            = 8080
  protocol        = "Http"
  request_path    = "/"
}

# Output variables printed to the console after apply
output "lb_public_ip" {
  description = "Public IP address of the load balancer"
  value       = azurerm_public_ip.example.ip_address
}
```
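One possibility worth ruling out, stated as an assumption rather than a confirmed diagnosis: my understanding is that automatic instance repair does not accept health probes from a Basic SKU load balancer, and in azurerm 3.0.0 both `azurerm_lb` and `azurerm_public_ip` default to `sku = "Basic"` when `sku` is omitted, as it is in the script above. A sketch of the same two resources on the Standard SKU, with names unchanged:

```hcl
# Sketch only: Standard SKU variants of the public IP and load balancer.
# Assumption: the Basic SKU (the provider default) is what blocks autorepair.
resource "azurerm_public_ip" "example" {
  allocation_method   = "Static" # Standard SKU public IPs require static allocation
  location            = azurerm_resource_group.example.location
  name                = "example-public-ip"
  resource_group_name = azurerm_resource_group.example.name
  sku                 = "Standard"
}

resource "azurerm_lb" "example" {
  location            = azurerm_resource_group.example.location
  name                = "example-lb"
  resource_group_name = azurerm_resource_group.example.name
  sku                 = "Standard" # omitted sku defaults to "Basic" in azurerm 3.0.0

  frontend_ip_configuration {
    name                 = var.frontend_ip_configuration_name
    public_ip_address_id = azurerm_public_ip.example.id
  }
}
```

Standard SKU resources are closed to inbound traffic by default, so a network security group allowing port 8080 to the instances may also be needed (not shown).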