Hi @arkiboys ,

Thank you for posting your query on the Microsoft Q&A platform.

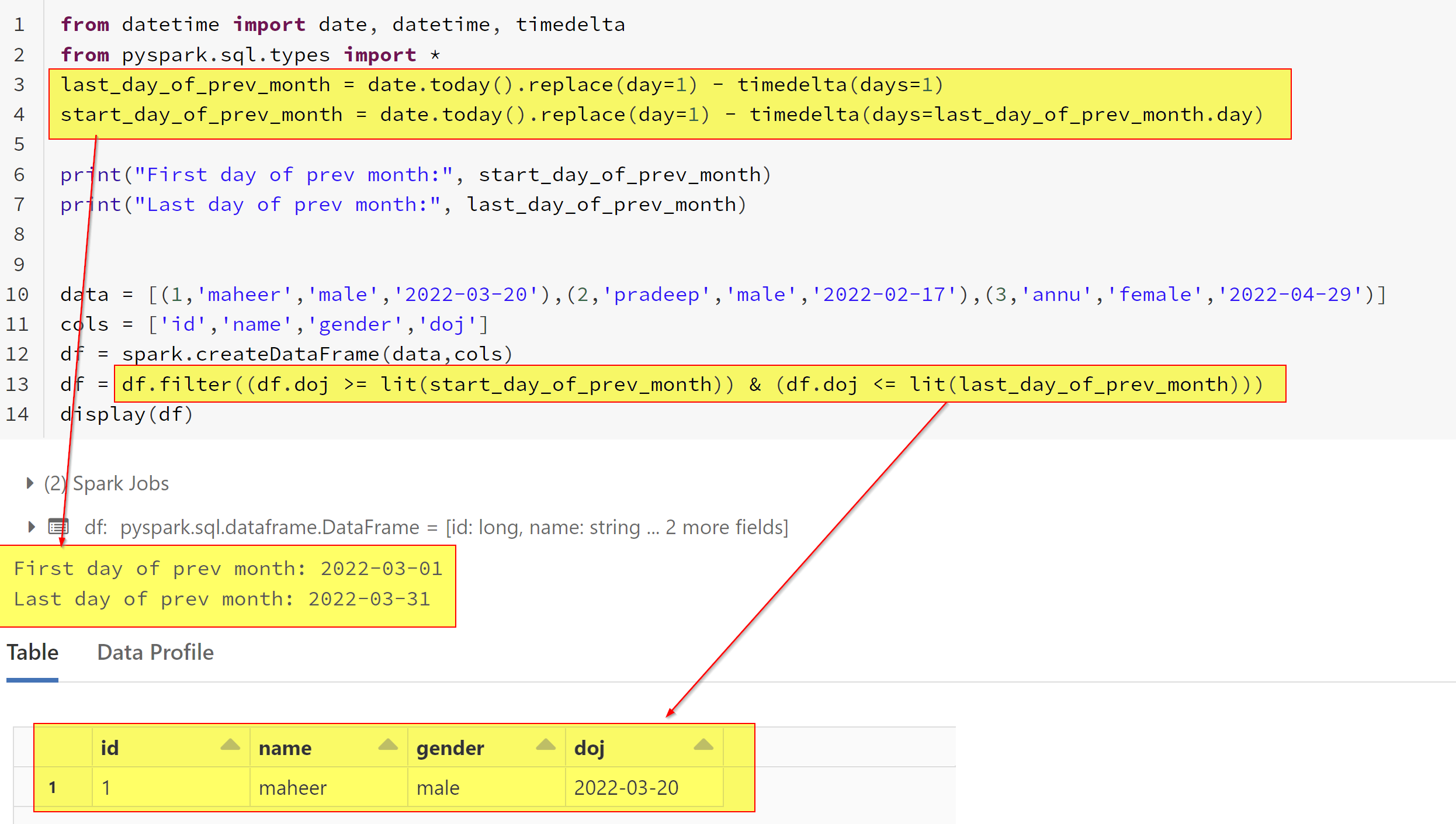

As per my understanding, you are trying to filter a dataframe based on a date field, keeping only the rows whose date falls in the previous month. Please correct me if I am wrong.

You should compute the first and last day of the previous month, and then filter the dataframe so that the date column lies between those two dates (inclusive).

Please check the screenshot. The sample code shown in it is reproduced here as text.

from datetime import date, datetime, timedelta

from pyspark.sql.functions import lit

last_day_of_prev_month = date.today().replace(day=1) - timedelta(days=1)

start_day_of_prev_month = last_day_of_prev_month.replace(day=1)

print("First day of prev month:", start_day_of_prev_month)

print("Last day of prev month:", last_day_of_prev_month)

data = [(1,'maheer','male','2022-03-20'),(2,'pradeep','male','2022-02-17'),(3,'annu','female','2022-04-29')]

cols = ['id','name','gender','doj']

df = spark.createDataFrame(data,cols)

df = df.filter(df.doj.cast("date").between(lit(start_day_of_prev_month), lit(last_day_of_prev_month)))

display(df)
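The date arithmetic above can also be checked on its own, without Spark. The following is a minimal sketch, assuming a hypothetical helper named `previous_month_bounds` (not part of the original answer) that takes the reference date as a parameter, so that edge cases such as January or a leap-year February are easy to verify.

```python
from datetime import date, timedelta
from typing import Tuple


def previous_month_bounds(ref: date) -> Tuple[date, date]:
    """Return (first_day, last_day) of the month before `ref`.

    Hypothetical helper for illustration only.
    """
    # Stepping back one day from the 1st of the current month lands on the
    # last day of the previous month, including across a year boundary.
    last_day = ref.replace(day=1) - timedelta(days=1)
    # The first day is that same month with the day reset to 1.
    first_day = last_day.replace(day=1)
    return first_day, last_day


# Reference dates chosen to exercise a year rollover and a leap-year February.
print(previous_month_bounds(date(2022, 1, 15)))  # bounds of December 2021
print(previous_month_bounds(date(2024, 3, 31)))  # bounds of February 2024
```

Calling `previous_month_bounds(date.today())` should give the same two dates that the filter uses.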

Hope this helps. Please let us know if you have any further queries.

----------

Please consider hitting the Accept Answer button and upvoting if this resolved your issue. Accepted answers help the community as well.