Hi @Anonymous,

Thank you for posting your query on the Microsoft Q&A platform.

The Synapse notebook activity runs on the Spark pool selected for the Synapse notebook. When the notebook activity runs, the Spark pool takes some time to start a Spark session, and data processing is only triggered once that session has started.

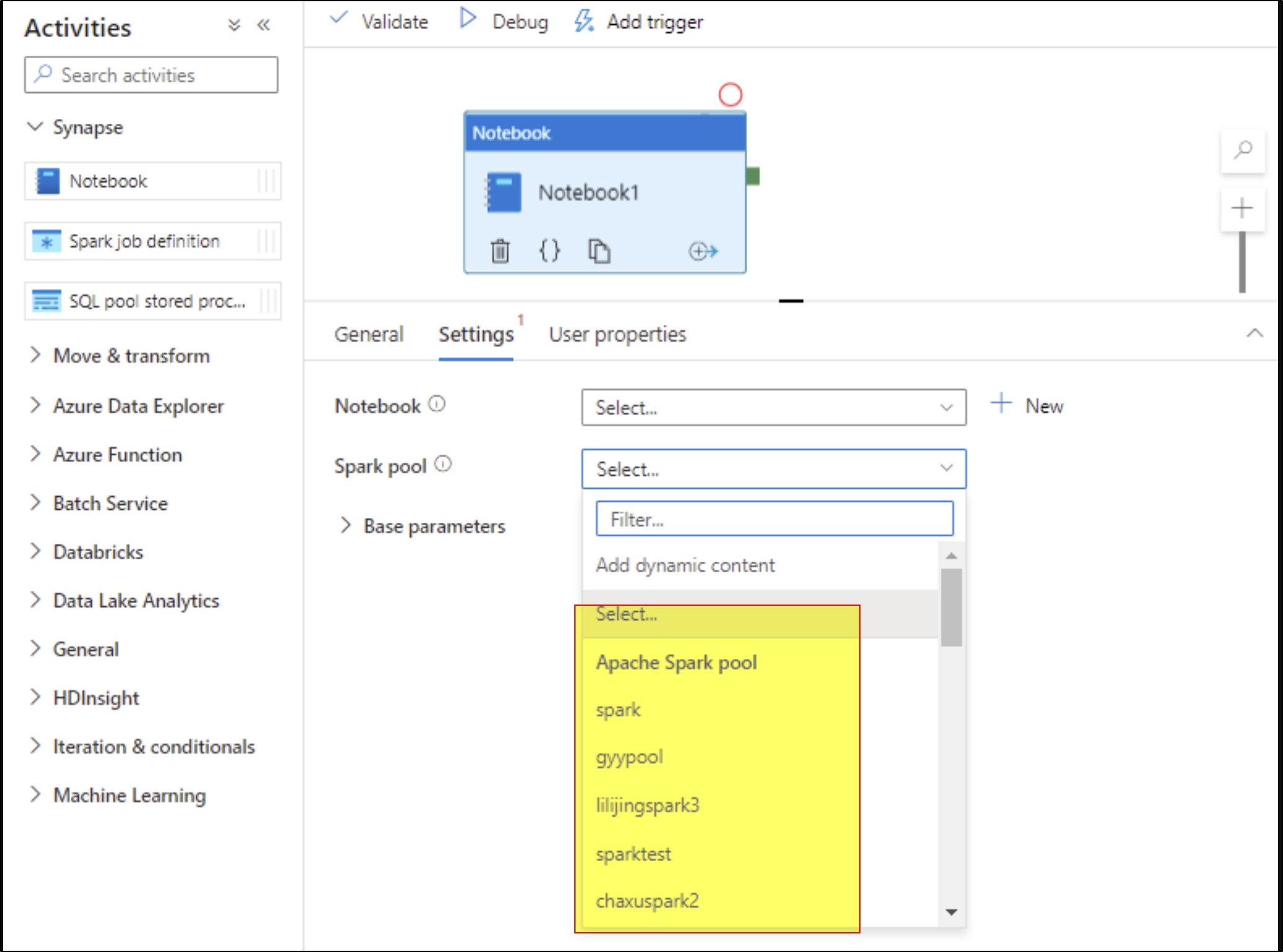

You can select an Apache Spark pool in the activity settings. Note that the Apache Spark pool set here overrides the Apache Spark pool used in the notebook. If no Apache Spark pool is selected in the settings of the current notebook activity, the Apache Spark pool selected in the notebook itself is used to run it.
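To make the precedence rule concrete, here is a small Python sketch that models it. `resolve_spark_pool` and the pool names are hypothetical illustrations, not a Synapse API, and treating "no pool selected anywhere" as an error is an assumption made only for this sketch.

```python
from typing import Optional


def resolve_spark_pool(activity_pool: Optional[str], notebook_pool: Optional[str]) -> str:
    """Model of which Spark pool a notebook activity runs on (illustrative only)."""
    if activity_pool:
        # A pool set in the notebook activity settings overrides the notebook's pool.
        return activity_pool
    if notebook_pool:
        # No pool in the activity settings: fall back to the notebook's own pool.
        return notebook_pool
    # Assumption for this sketch: with no pool anywhere, the run cannot proceed.
    raise ValueError("No Spark pool selected in the activity or in the notebook")


print(resolve_spark_pool("activityPool", "notebookPool"))  # activityPool
print(resolve_spark_pool(None, "notebookPool"))            # notebookPool
```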

If you have multiple notebooks to run, try chaining them within a single notebook, that is, call the other notebooks from one notebook. That way you don't need multiple Synapse notebook activities, and you don't spend time waiting for a new Spark session to start for each one.
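As a rough back-of-the-envelope model of why chaining helps, the sketch below compares total runtime when each notebook gets its own notebook activity versus when one notebook calls the others. It assumes the activities run one after another and that every notebook activity pays the full session start-up cost; the function name and the numbers in the usage lines are hypothetical, not measured Synapse timings.

```python
from typing import Sequence


def total_runtime(startup_seconds: float, processing_seconds: Sequence[float], chained: bool) -> float:
    """Rough end-to-end runtime estimate for several notebooks run sequentially."""
    work = sum(processing_seconds)
    if chained:
        # One notebook activity: a single Spark session start, then all notebooks run.
        return startup_seconds + work
    # One notebook activity per notebook: each one waits for its own session to start.
    return len(processing_seconds) * startup_seconds + work


# Hypothetical numbers: 180 s session start, three notebooks of 60, 120 and 30 s.
print(total_runtime(180, [60, 120, 30], chained=False))  # 750
print(total_runtime(180, [60, 120, 30], chained=True))   # 390
```

The saving grows with the number of notebooks: every extra notebook activity adds one more session start-up, while a chained run pays it once.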

We can use `%run <notebook>` or `mssparkutils.notebook.run("<notebook>")` to run one notebook from another.
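Below is a minimal sketch of a parent notebook that runs several child notebooks in sequence with `mssparkutils.notebook.run(path, timeoutSeconds, arguments)`. The runner is passed in as a parameter so the logic can be tried outside Synapse with a fake; inside a Synapse notebook you would pass `mssparkutils.notebook.run` (imported via `from notebookutils import mssparkutils`). The helper name, the notebook paths, and the 600-second default timeout are assumptions for illustration. `%run` is a notebook magic command rather than a Python function, so it cannot be wrapped this way.

```python
from typing import Any, Callable, Dict, List, Optional

# Shape of mssparkutils.notebook.run: (notebook_path, timeout_seconds, arguments) -> exit value.
Runner = Callable[[str, int, Dict[str, Any]], str]


def run_notebooks_in_sequence(
    runner: Runner,
    notebook_paths: List[str],
    timeout_seconds: int = 600,  # hypothetical default, tune per workload
    arguments: Optional[Dict[str, Any]] = None,
) -> List[str]:
    """Run child notebooks one after another from a single parent notebook."""
    exit_values = []
    for path in notebook_paths:
        # Each call blocks until the child notebook finishes and returns its exit value.
        exit_values.append(runner(path, timeout_seconds, arguments or {}))
    return exit_values


# Inside a Synapse notebook (hypothetical notebook names):
# from notebookutils import mssparkutils
# results = run_notebooks_in_sequence(mssparkutils.notebook.run, ["Notebook1", "Notebook2"])
```

Each child can hand a value back with `mssparkutils.notebook.exit(value)`, which is what `mssparkutils.notebook.run` returns to the caller.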

Below are a few useful videos that explain the above commands:

- %run command to reference another notebook with in current notebook in Azure Synapse Analytics

- run() function of notebook module in MSSparkUtils package in Azure Synapse

Hope this helps. Please let us know if you have any further queries.

-------------

Please consider hitting Accept Answer and upvoting for the same. Accepted answers help the community as well.