Hello @BartoszWachocki-4076 and welcome to Microsoft Q&A and Data Factory. Thank you for your question.

I was able to reproduce your issue... until I hooked up the SQL. However, I think I know how to fix this anyway.

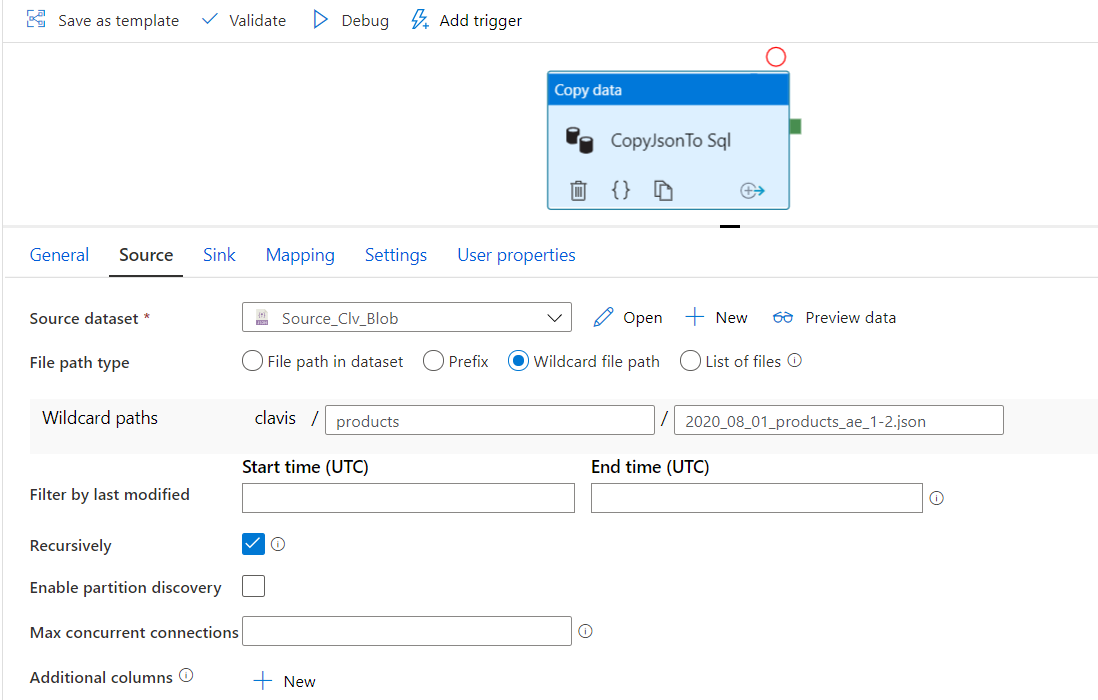

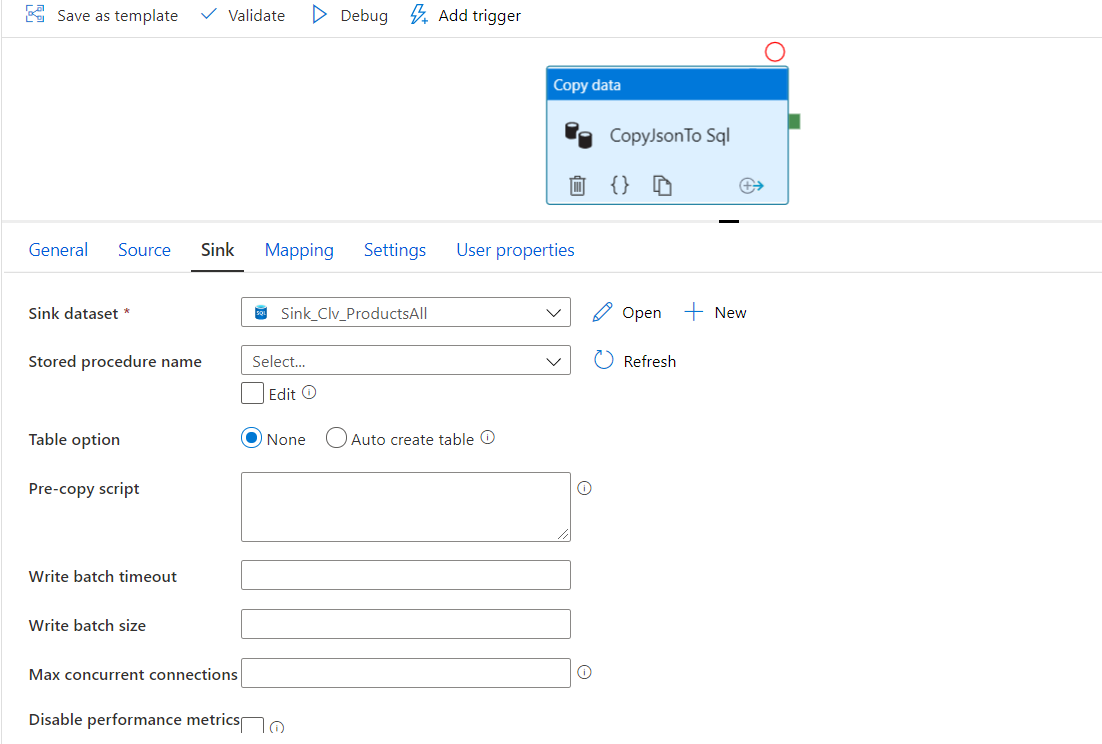

First, go to your sink dataset and import the schema.

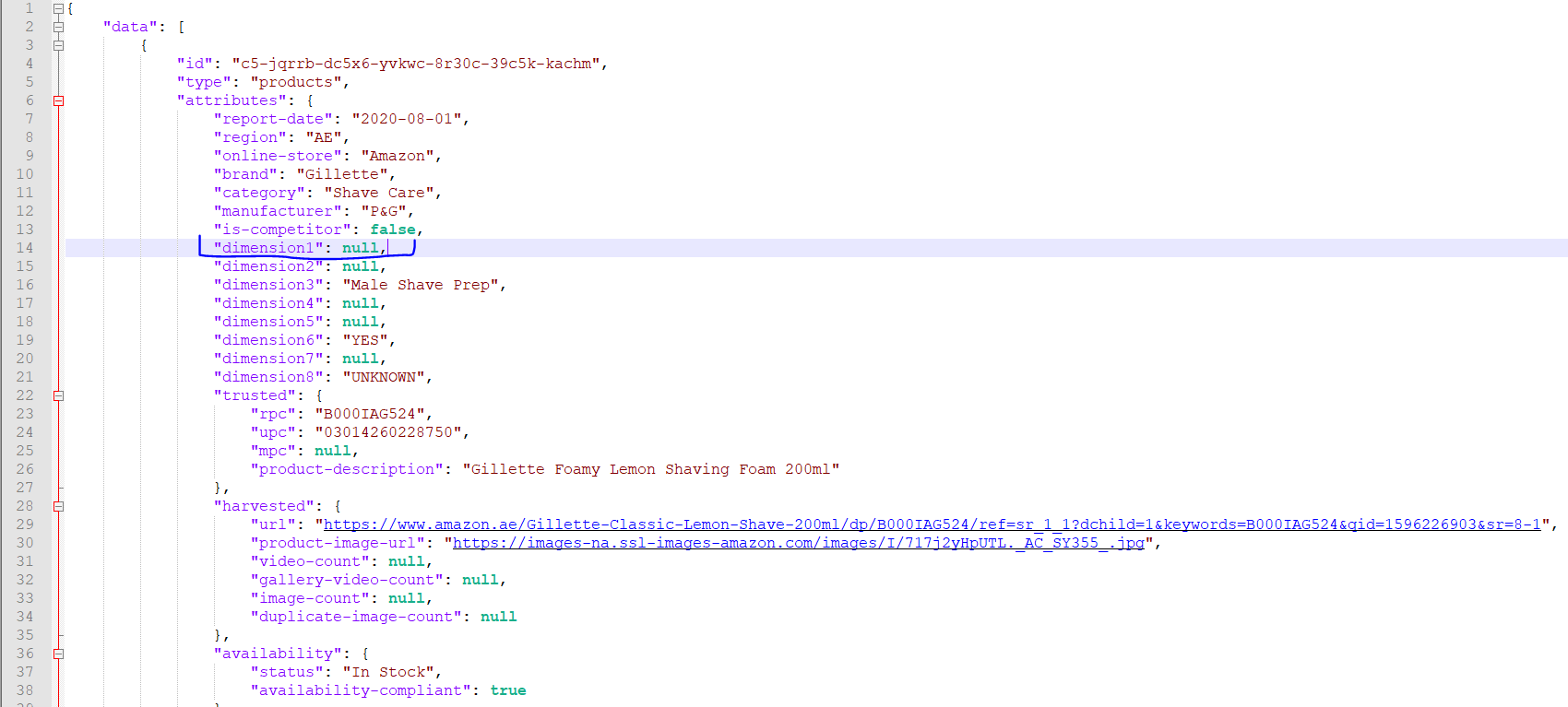

Then go back to the copy activity's Mapping tab and import schemas again (to ensure they are up to date). If you click Clear first, don't forget to check the collection reference afterwards.

The idea behind this is to make the mapping explicit to Data Factory, so it doesn't try to guess the data types from the data.
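For reference, here is a rough sketch of what an explicit mapping looks like once it lands in the copy activity's JSON (the `translator` property). I'm assuming a hierarchical (JSON) source here, since you have a collection reference. The column names, paths, and types below are made up for illustration, and `build_translator` is just a hypothetical helper of mine, not part of any Data Factory SDK. Your real mapping will have whatever columns Import schemas picks up.

```python
import json


def build_translator(columns, collection_reference=None):
    """Build a copy activity 'translator' dict with explicit column mappings.

    columns: list of (source_path, sink_column_name, sink_type) tuples.
    All values passed in below are hypothetical examples.
    """
    translator = {
        "type": "TabularTranslator",
        "mappings": [
            {
                "source": {"path": path},
                # Stating the sink type explicitly avoids type guessing.
                "sink": {"name": name, "type": sink_type},
            }
            for path, name, sink_type in columns
        ],
    }
    if collection_reference is not None:
        # Array to iterate over; paths without a leading '$' are relative to it.
        translator["collectionReference"] = collection_reference
    return translator


translator = build_translator(
    [
        ("$['number']", "OrderNumber", "String"),  # outside the array
        ("['prod']", "Product", "String"),         # relative to the array item
        ("['price']", "Price", "Decimal"),
    ],
    collection_reference="$['orders']",
)

print(json.dumps(translator, indent=2))
```

You can compare the printed JSON with what you see in the pipeline's code view after importing schemas. If the sink types or the `collectionReference` are missing there, that is a sign the mapping is not fully explicit yet.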

Please let me know if this solves your issue. If not, we can try the same on the source side, and if that doesn't work, let me know and I'll figure something out.

Welcome, and thank you.

Martin