Hello @Prateek Narula and welcome to Microsoft Q&A. Thank you for providing such detailed background information and context. I had never heard of DICOM or pydicom before.

So here is my hunch.

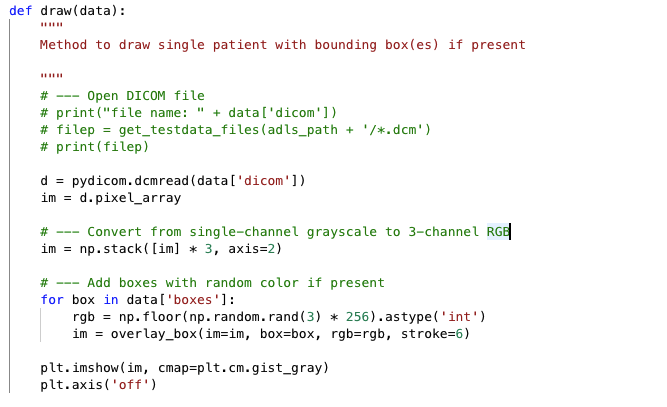

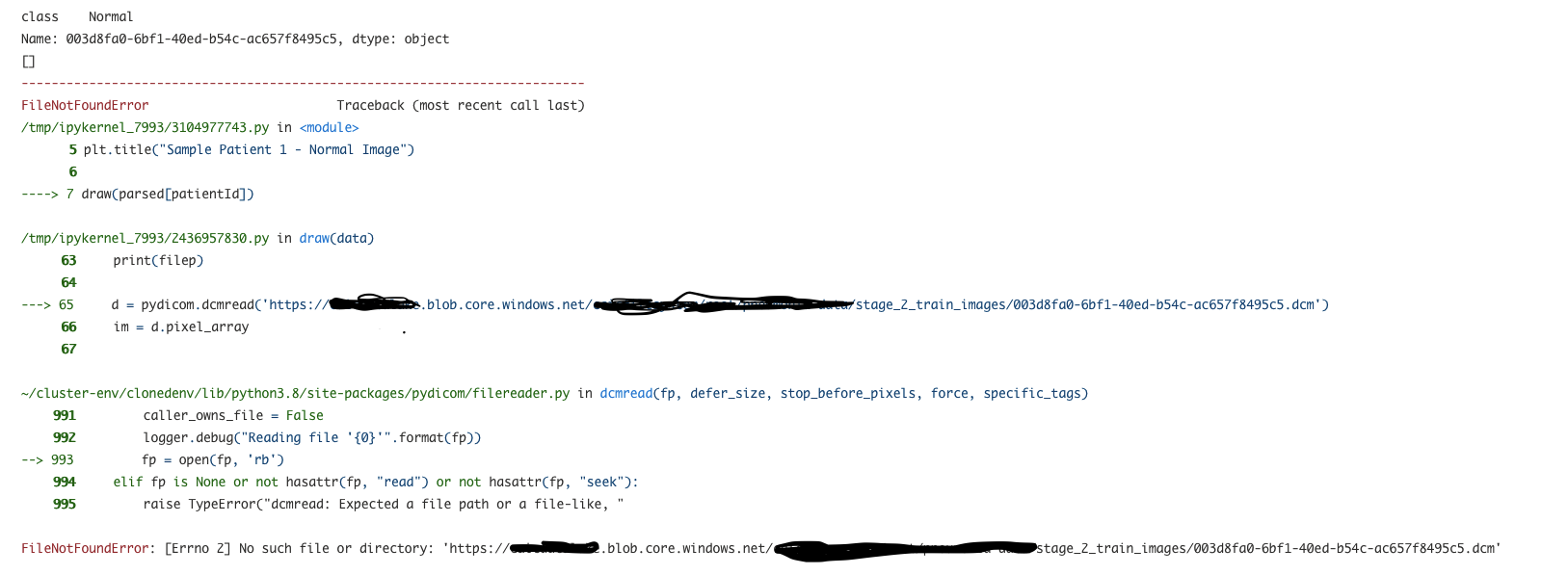

At the lowest level of your error traceback, `filereader.py` uses low-level operating system calls to open the file. Those calls expect a normal environment, where the file is stored on a local disk drive. The URI you have given uses the https protocol, rather than a local disk path like `C:\`.
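To illustrate, here is a small Python sketch of what happens when a plain `open()` call is handed an https URL. This is not pydicom's actual code, just the same kind of operating system file open that its reader relies on when given a path string. The storage account URL below is a made-up example.

```python
import os
import tempfile


def can_open_locally(path: str) -> bool:
    """Return True if the built-in open() can read `path` as a local file."""
    try:
        with open(path, "rb") as f:
            f.read(1)  # read one byte to prove the file is really readable
        return True
    except OSError:
        # FileNotFoundError (Linux/macOS) and invalid-path errors (Windows)
        # are both subclasses of OSError.
        return False


if __name__ == "__main__":
    # Hypothetical blob URL, for illustration only.
    url = "https://myaccount.blob.core.windows.net/mycontainer/scan.dcm"
    print(can_open_locally(url))  # False: open() treats this as a file path, not a web address

    # A real file on local disk opens fine.
    with tempfile.NamedTemporaryFile(suffix=".dcm", delete=False) as tmp:
        tmp.write(b"\x00" * 4)
    print(can_open_locally(tmp.name))  # True
    os.remove(tmp.name)
```

The operating system has no idea what "https" means; to it, the URL is just a file name that does not exist.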

I think we should try downloading the file to the cluster first, and then running pydicom on that local copy.
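A minimal sketch of the download approach, assuming the blob URL is readable over HTTPS from the cluster (for example, a public container or a URL that includes a SAS token). The helper name `download_to_local` is hypothetical. Once the file is on local disk, pydicom can open it by path like any other file.

```python
import os
import shutil
import tempfile
import urllib.parse
import urllib.request
from typing import Optional


def download_to_local(url: str, dest_dir: Optional[str] = None) -> str:
    """Copy the resource at `url` into a local file and return that file's path."""
    dest_dir = dest_dir or tempfile.mkdtemp()  # a fresh local temp directory by default
    # Keep the original file name (e.g. "scan.dcm"); the query string, such as a SAS token, is dropped.
    name = os.path.basename(urllib.parse.unquote(urllib.parse.urlparse(url).path))
    local_path = os.path.join(dest_dir, name or "download.bin")
    with urllib.request.urlopen(url) as response, open(local_path, "wb") as out:
        shutil.copyfileobj(response, out)  # stream in chunks instead of reading it all into memory
    return local_path


# Hypothetical usage; replace the placeholders with your own account, container, and SAS token:
# import pydicom
# path = download_to_local("https://<account>.blob.core.windows.net/<container>/scan.dcm?<sas-token>")
# ds = pydicom.dcmread(path)
```

This works for a handful of files, but every file is copied onto the driver's disk, so it does not scale as nicely as mounting.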

Another option is to mount the storage account, so its files can be referred to by an operating-system-style path. Actually, this second option is probably what you want more. Mounting lets Spark treat the storage account as though it were an attached disk drive, rather than some web URL.
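If you are on Azure Databricks (an assumption on my part), mounting looks roughly like the sketch below. It calls `dbutils.fs.mount` with a storage account key. The helper names `mount_blob_container` and `local_path`, the mount point `/mnt/dicom`, and the secret scope are hypothetical placeholders. After mounting, the files should also be visible under `/dbfs/mnt/...`, which is an ordinary path that `open()` and pydicom can use, provided your cluster configuration supports the `/dbfs` local mount.

```python
def local_path(mount_point: str, relative: str = "") -> str:
    """Translate a DBFS mount point such as /mnt/dicom into the path the OS sees under /dbfs."""
    base = "/dbfs/" + mount_point.strip("/")
    return f"{base}/{relative.lstrip('/')}" if relative else base


def mount_blob_container(dbutils, account: str, container: str,
                         mount_point: str, account_key: str) -> str:
    """Mount a Blob Storage container at `mount_point` and return its local (/dbfs) directory."""
    source = f"wasbs://{container}@{account}.blob.core.windows.net"
    extra_configs = {f"fs.azure.account.key.{account}.blob.core.windows.net": account_key}
    # Note: mounting onto a mount point that is already in use fails; unmount it first if needed.
    dbutils.fs.mount(source=source, mount_point=mount_point, extra_configs=extra_configs)
    return local_path(mount_point)


# Hypothetical usage in a Databricks notebook, where `dbutils` is predefined:
# key = dbutils.secrets.get(scope="<scope>", key="<key-name>")
# mount_blob_container(dbutils, "<account>", "<container>", "/mnt/dicom", key)
# import pydicom
# ds = pydicom.dcmread(local_path("/mnt/dicom", "scan.dcm"))  # reads /dbfs/mnt/dicom/scan.dcm
```

`dbutils` is passed in as a parameter so the helper can be tried outside a notebook with a stand-in object; in Databricks you simply pass the built-in one.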

Does this make sense? You were trying to use a local file system operation on a web resource. It's like trying to get to a campsite by passenger train instead of by car. Both the car and the train are modes of transportation, but trains only go to train stations, so the train can't get you to the campsite.