For about 2 weeks now, we are now seeing a DF-MSSQL-InvalidFirewallSetting error in a Data Flow. We have not had a change to our ADF configuration in a few months and it has been working fine until recently.

Error message is: "Job failed due to reason: The TCP/IP connection to the host has failed. Make sure that an instance of SQL Server is running on the host and accepting TCP/IP connections at the port. Make sure that TCP connections to the port are not blocked by a firewall."

I figured this was a blip in the underlying framework, but it has persisted.

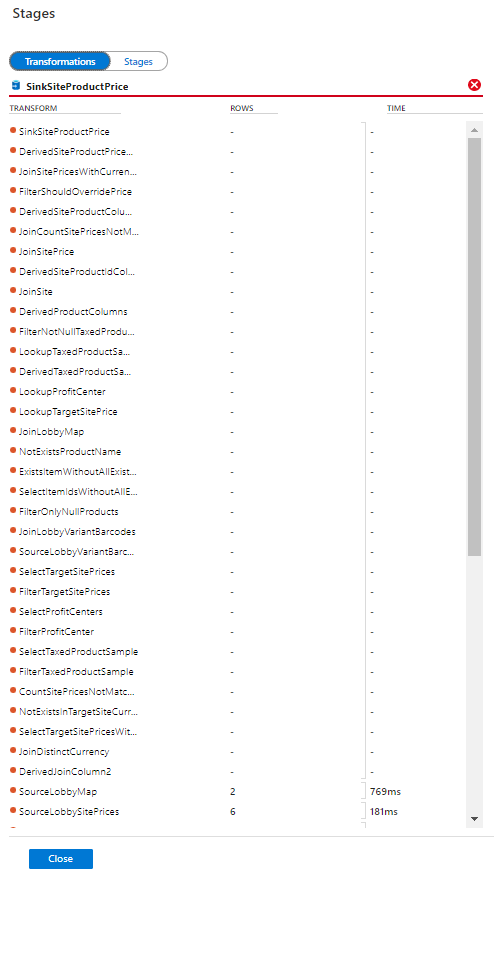

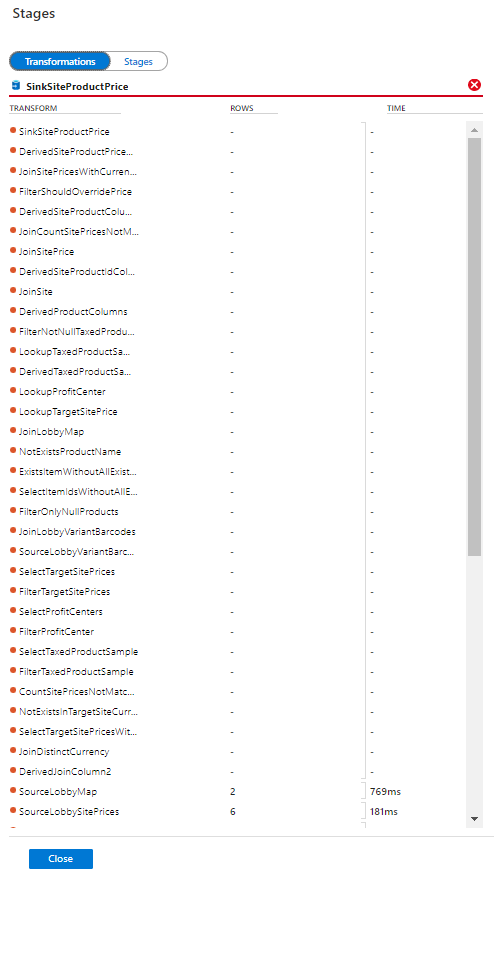

The error happens in the same data flow and marks a failure in the same sink every time.

We have allowed the "Data Factory" address range through any firewalls to the Azure SQL Server.

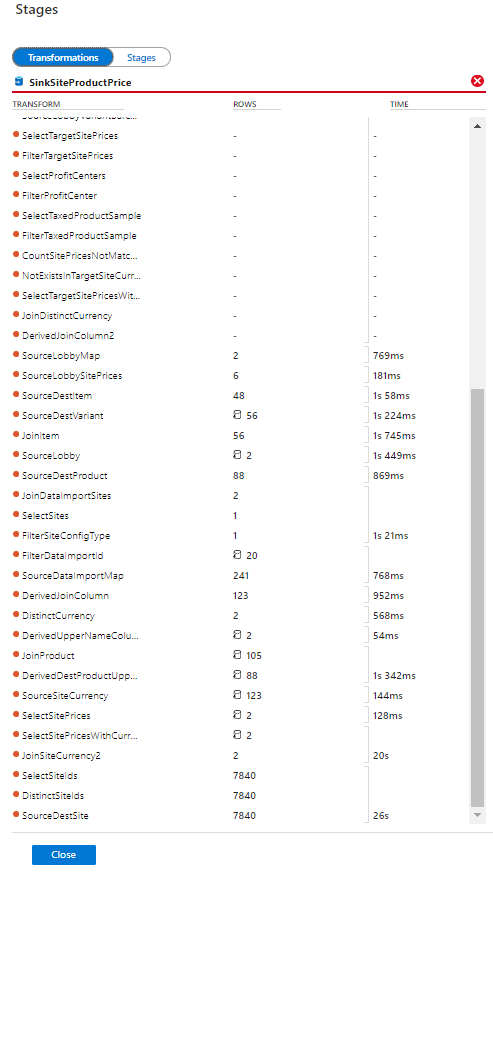

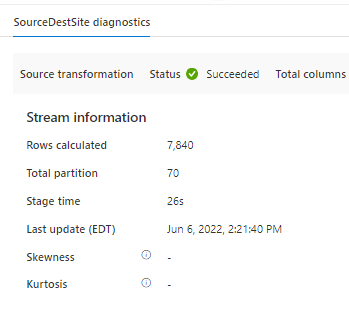

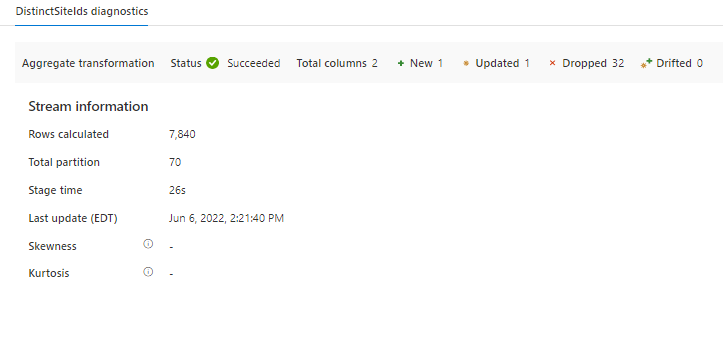

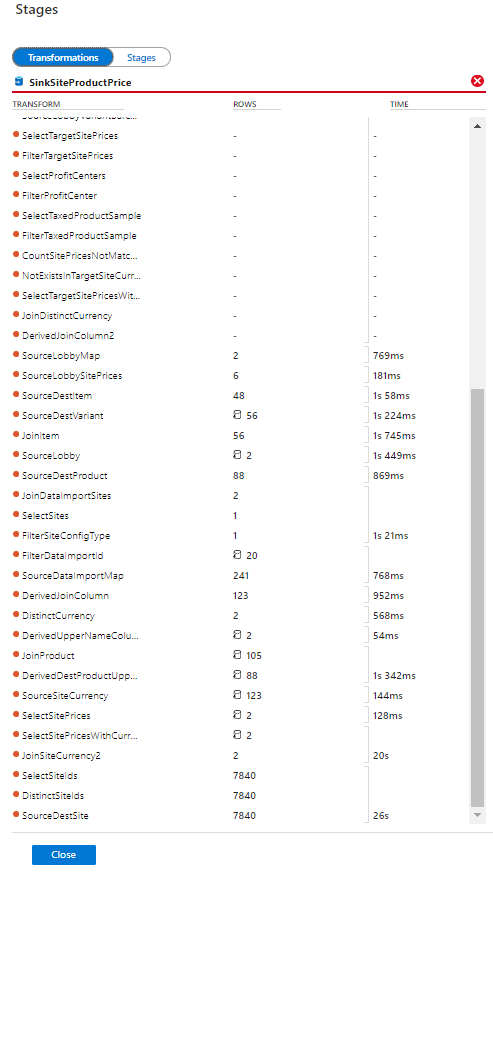

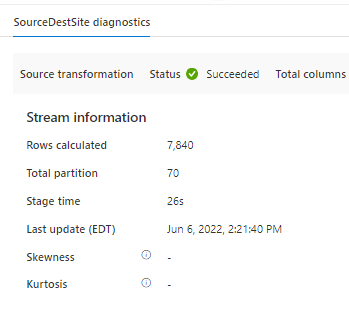

Reviewing the Data Flow, I noticed a high number of records for one of the Azure SQL source tables (SourceDestSite).

It appears it is querying this table in multiple partitions. Other data flows use this table and it is always read in a single partition with the default partitioning settings.

Note in this example, the source table only has 112 records.

I proceeded to set the partitioning for the source to Single Partition, but it is still reading multiple partitions and the dataflow fails.

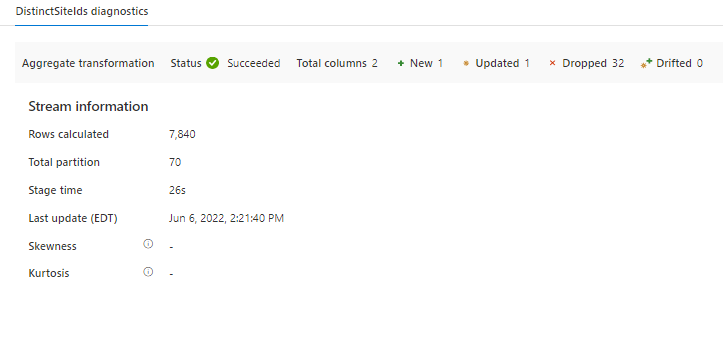

I think proceeded to put an aggregate function after this source to group by Site.Id and just count(), but it still read multiple partitions and the dataflow fails.

Finally, I decided to copy the source table in the pipeline to a parquet just before the dataflow and then use the parquet in the dataflow and I was able to work around this problem. However, this does not seem like the ideal approach.

Does anyone know of a better solution to this?