Hello @Manoj Ashvin ,

Thanks for the question and using MS Q&A platform.

Make sure to whitelist Databricks workspace VNet on Oracle firewall.

For more details, refer to the SO thread addressing similar issue.

This browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

Hello MSFT,

I am currently migrating my onpremise python codes to Azure DAtabricks. One of the final activity in the pipeline is to perform DB2 actions on a Oracle table.

Actions involve, delete, insert and update.

I read that Oracle connector support is not present in ADF Data flow, However I can perform a copy activity to copy all (insert and update type) records with a pre copy script to delete records. I use a lookup activity to filter the records to be deleted as an array. However, populating the records as an array itself takes more than 10 minutes. My table contains 50k rows and 5 columns with some character columns with max length of 3 character.

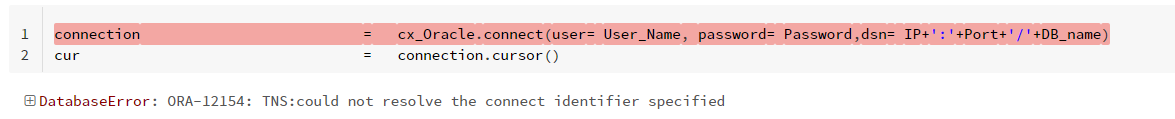

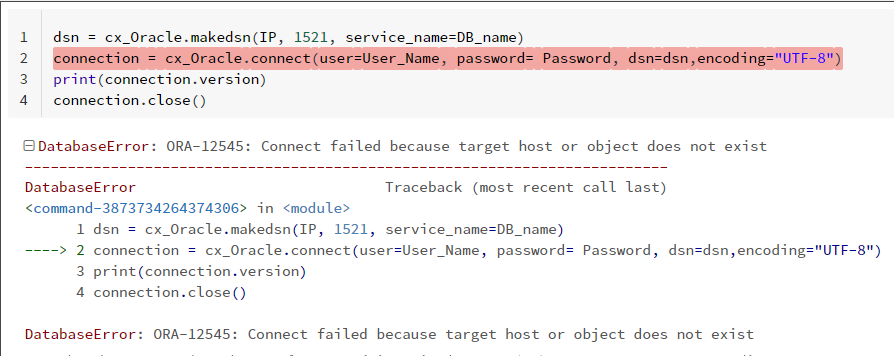

So I thought of using Python in Databricks as the next option which can be much faster than using ADF connectors. I use the same settings as in ADF. I followed this link from MSFT but I think the link is bit outdated w.r.t oracle client library path. I was able to overcome the issue and posted the outcome in this link.

Now the client is installed correctly but still there is some connection/network issue. I am wondering why there is a difference between ADF and Databricks, although both works on same principle just the interface is different.

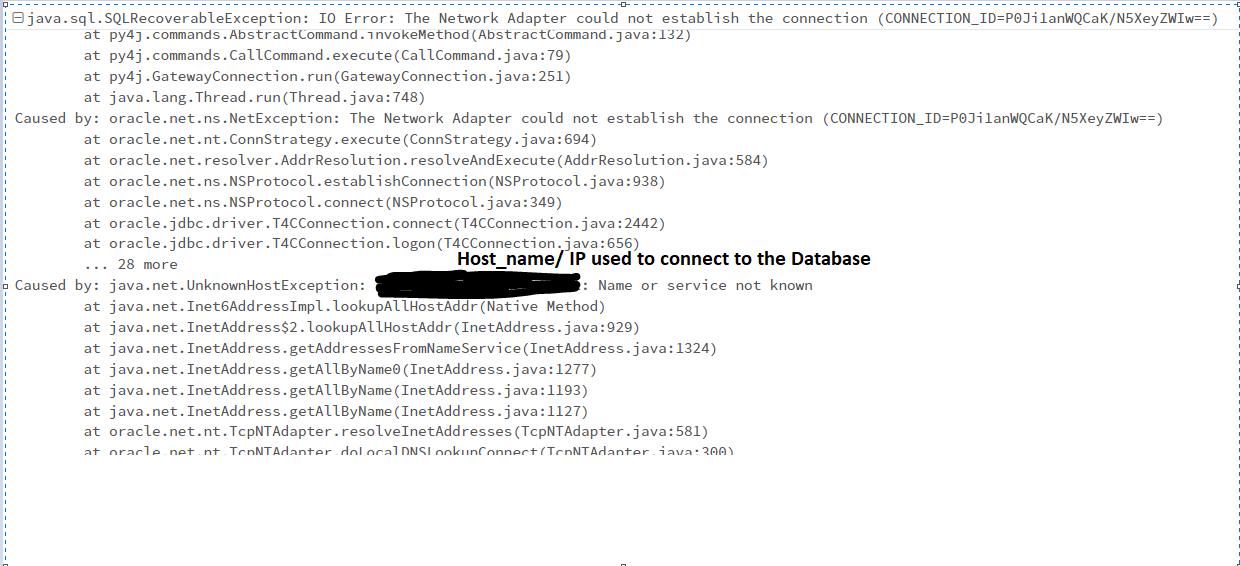

Next, I tried to connect using Pyspark and it also failed with below error. Also installed OJDBC into the cluster, where I used OJDBC version compatible with Oracle DB version.

URL = "jdbc:oracle:thin:" + User_Name + "/" + Password + "@//" + IP + ":" + Port + "/" + DB_name

DbTable = DataBase_name + "." + Table_Name

Table_data = spark.read.format("jdbc").option("url", URL).option("dbtable", DbTable).option("user", User_Name).option("password", Password).option("driver", "oracle.jdbc.driver.OracleDriver").load()

I even tried the new library for cx_Oracle --> oracledb, still the same issue,

without_config, with config_dir (I guess the path is different in databricks), with conn string, Creating DNS, Connect Descriptor string

Can you advise why the connection is not working in Databricks for the same settings as in ADF?

Hello @Manoj Ashvin ,

Thanks for the question and using MS Q&A platform.

Make sure to whitelist Databricks workspace VNet on Oracle firewall.

For more details, refer to the SO thread addressing similar issue.