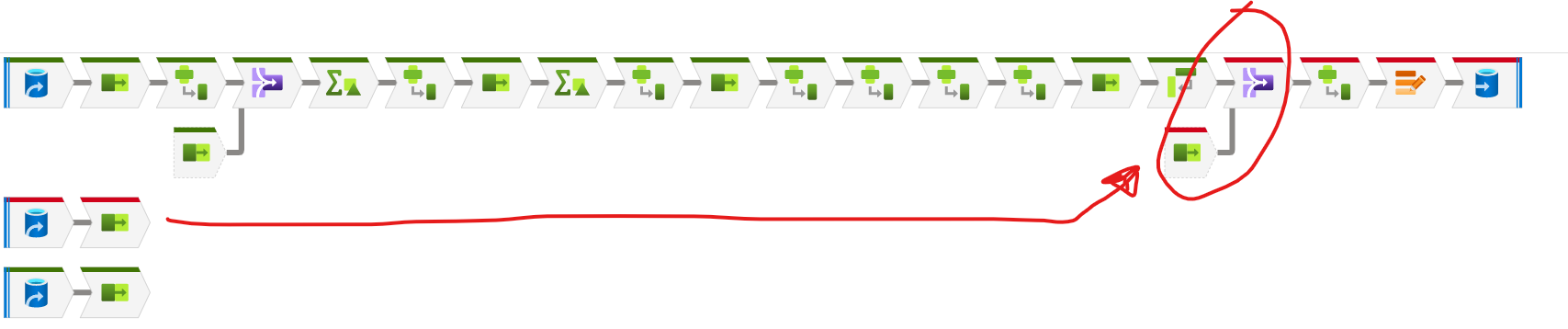

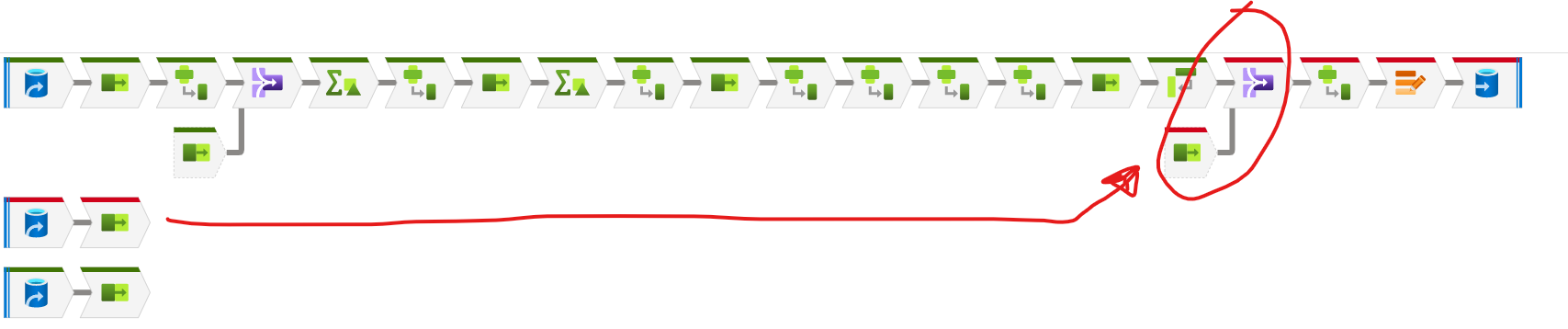

In our project we use a data flow to ingest data from a CSV file into a PostgreSQL database. Before inserting, we join the incoming data with the target table itself to detect which items have been updated, so that we can assign them an update timestamp.
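To make the intent of that join concrete, here is a minimal Python sketch of the same logic outside the data flow. It is only an illustration, not the actual pipeline: the function name `detect_updates`, the `id` key column, the `updated_at` column, and the choice to also stamp brand-new rows are all assumptions made for the example.

```python
import csv
import io
from datetime import datetime, timezone


def detect_updates(csv_text, existing, key="id", now=None):
    """Left-join incoming CSV rows to existing rows by key and stamp changed ones.

    `existing` is a hypothetical in-memory stand-in for the target table:
    a dict mapping key value -> row dict (including its current `updated_at`).
    """
    now = now or datetime.now(timezone.utc)
    result = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        current = existing.get(row[key])
        if current is None:
            # No match in the table: a new row (assumption: stamp it as well).
            result.append({**row, "updated_at": now})
        elif any(current.get(col) != val for col, val in row.items()):
            # Match found, but at least one column differs: the row was updated.
            result.append({**row, "updated_at": now})
        else:
            # Match found and nothing changed: keep the old timestamp.
            result.append({**row, "updated_at": current.get("updated_at")})
    return result
```

The point of the sketch is that every incoming row has to be matched against the whole target table, which is the step that fails for us once the table is large.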

The problem occurs in this join: if I remove it, the job runs without problems. The database is larger than 100 GB. The error does not give me much information about what might be happening:

{"StatusCode":"DFInternalServerError","Message":"Job failed due to reason: Failed to execute dataflow with internal server error, please retry later. If issue persists, please contact Microsoft support for further assistance","Details":"org.apache.spark.SparkException: Job aborted.\n\tat org.apache.spark.sql.execution.datasources.FileFormatWriter$.write(FileFormatWriter.scala:202)\n\tat org.apache.spark.sql.execution.datasources.InsertIntoHadoopFsRelationCommand.run(InsertIntoHadoopFsRelationCommand.scala:159)\n\tat org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult$lzycompute(commands.scala:104)\n\tat org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult(commands.scala:102)\n\tat org.apache.spark.sql.execution.command.DataWritingCommandExec.doExecute(commands.scala:122)\n\tat org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:157)\n\tat org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:153)\n\tat org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:181)\n\tat org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)\n\tat org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:1"}

Has anyone run into the same problem recently?