Hello @P Sharma and welcome to Microsoft Q&A.

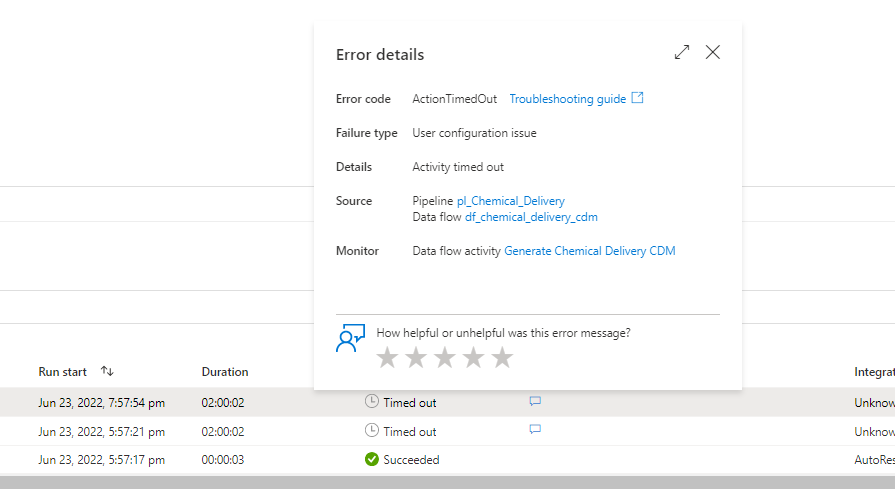

I checked for similar historical cases, based on the error message.

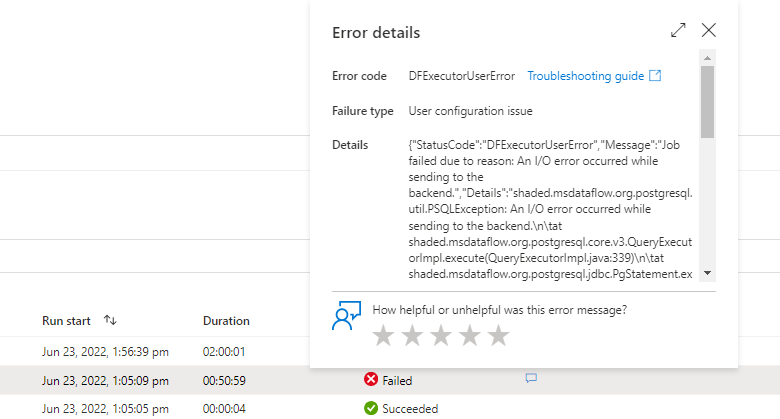

shaded.msdataflow.org.postgresql.util.PSQLException: An I/O error occurred while sending to the backend

The cases I found discussed connection pooling and having too many connections open on the PostgreSQL server.

In those cases, too many users or applications were accessing the database at the same time, and the contention made operations take too long. When an operation took too long, the connection was dropped. Once the connection was dropped, data could no longer be sent to the backend, which is what the error message reports.
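To check whether connection pressure is a plausible cause, you can compare the number of open connections against the server limit. The sketch below is only an illustration: the two SQL statements use the standard `pg_stat_activity` view and the `max_connections` setting, while the helper `connection_usage`, the 80% warning threshold, and the sample numbers are my own assumptions, not something taken from your environment. Note that `pg_stat_activity` can also list background processes, so treat the total as a rough estimate.

```python
# SQL to run against the PostgreSQL server (for example from psql).
# pg_stat_activity and max_connections are standard PostgreSQL features.
CONNECTIONS_BY_STATE_SQL = """
SELECT state, count(*) AS connections
FROM pg_stat_activity
GROUP BY state
ORDER BY connections DESC;
"""
MAX_CONNECTIONS_SQL = "SHOW max_connections;"


def connection_usage(rows, max_connections, warn_ratio=0.8):
    """Summarise (state, count) rows returned by CONNECTIONS_BY_STATE_SQL.

    warn_ratio is an arbitrary illustrative threshold, not an official limit.
    """
    if max_connections <= 0:
        raise ValueError("max_connections must be positive")
    total = sum(count for _state, count in rows)
    ratio = total / max_connections
    return {"total": total, "ratio": ratio, "near_limit": ratio >= warn_ratio}


# Hypothetical sample values, standing in for real query results.
sample_rows = [("idle", 70), ("active", 15), (None, 5)]
summary = connection_usage(sample_rows, max_connections=100)
print(summary)
```

If the total is close to the limit around the time the pipeline runs, that supports the connection-pressure explanation. If it is far below the limit, another cause is more likely.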

What I find interesting is that the Data Flow debug worked fine. If your Data Flow writes back to the same DB you read from, I have a theory. The Data Flow debug preview does not write back to the DB. It only reads the data and then caches the source data, so it does not have to fetch the source data multiple times. On a database, write operations usually take more effort than read operations. That is my theory for why the Data Flow debug preview had less trouble.

There might be other causes, so after you check your peak number of connections, I can offer you a one-time free support ticket for a more in-depth investigation.