For ease of rapid prototyping, I downloaded the model.json and wrote some Python on my local machine.

Below is not a final version, but it seems to work. Some changes would be needed to run it on Synapse, namely changing the model.json location from a local file to a blob address, or mounting the storage so the file can still be read by path.

Take a look and tell me what you think. @Elshan Chalabiyev

import json

model_location = 'C:\\Users\\XXXX\\Downloads\\model.json'

# CREATE EXTERNAL FILE FORMAT and CREATE EXTERNAL DATA SOURCE are separate things, not covered here.
# However, the file format and external data source are used in the SQL expression, so I need names for them.
file_format = "myFileFormat"
data_source = "myDataSource"

# If data types in model.json are not valid SQL types, make substitutions with the mapping below.
# int64 maps to bigint because a SQL int is only 32 bits wide.
typemapping = {'string': 'varchar(256)', 'int64': 'bigint', 'decimal': 'decimal'}

# magic SQL writer: builds one CREATE EXTERNAL TABLE statement per entity
def writeSQL(tablename, filelocation, columns, datasource, fileformat):
    statement = 'CREATE EXTERNAL TABLE ' + tablename + " (\n\t"
    for col in columns:
        statement += col['name'] + ' '
        if col['dataType'] not in typemapping:
            # no substitution defined, pass the model.json type through unchanged
            statement += col['dataType']
        else:
            statement += typemapping[col['dataType']]
        statement += ", \n\t"
    # drop the separator left after the last column
    statement = statement.rstrip(", \n\t")
    statement += ")\nWITH (\n Location = '" + filelocation + "',\n DATA_SOURCE = " + datasource + ",\n FILE_FORMAT = " + fileformat + ");\n"
    print(statement)
    return statement

# do the stuff
with open(model_location, 'r') as raw_model:
    model = json.load(raw_model)

for table in model['entities']:
    # uses the name of the first partition as the table location
    writeSQL(table['name'], table['partitions'][0]['name'], table['attributes'], data_source, file_format)
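For the Synapse case mentioned above, here is a minimal sketch of a loader that accepts either a local (or mounted) path or an HTTP(S) address. The helper name `load_model` is hypothetical, and the sketch assumes the blob can be read with a plain HTTPS GET (for example a URL that already carries a SAS token). Authenticated access through a storage SDK is not covered here.

```python
import json
from urllib.request import urlopen


def load_model(location):
    """Load model.json from a local path or an HTTP(S) URL (hypothetical helper)."""
    if location.lower().startswith(("http://", "https://")):
        # Assumption: the blob is readable with a plain GET request,
        # e.g. the URL includes a SAS token.
        with urlopen(location) as response:
            return json.loads(response.read().decode("utf-8"))
    # Local or mounted path, same as the open() call in the script.
    with open(location, "r", encoding="utf-8") as raw_model:
        return json.load(raw_model)
```

With something like this, `model = load_model(model_location)` could stand in for the `with open(...)` block, and only `model_location` would change between the local run and Synapse.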

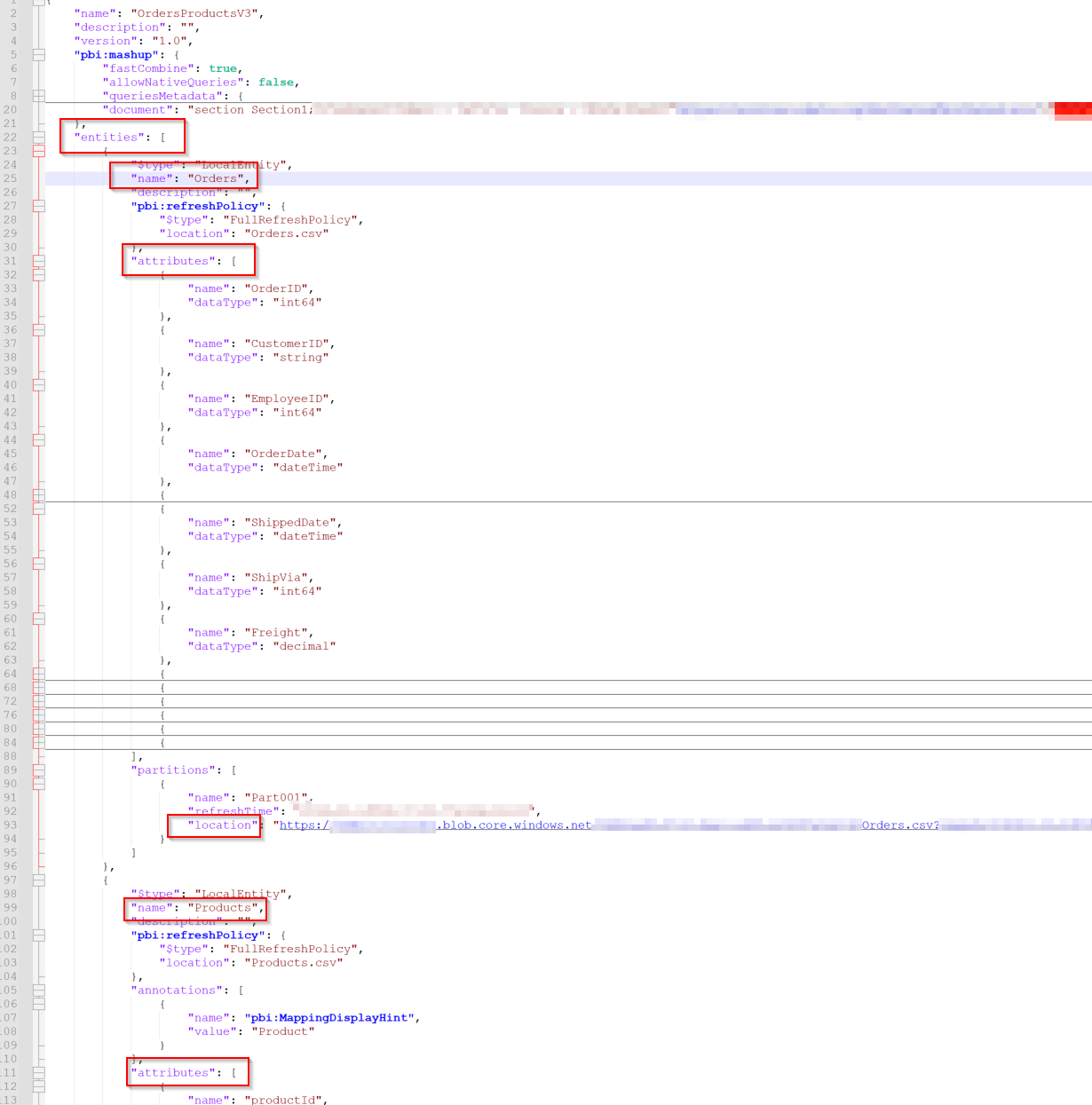
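To try the type substitution without the real file, here is a small sketch that runs the same lookup against an in-memory sample. The sample entity, its attribute names, and the helper `column_definitions` are all made up for illustration. The mapping sends `int64` to `bigint` on the assumption that a 64-bit column is wanted.

```python
# Hypothetical sample shaped like the entities the script reads;
# a real model.json will carry more fields than this.
sample_model = {
    "entities": [
        {
            "name": "Customer",
            "attributes": [
                {"name": "Id", "dataType": "int64"},
                {"name": "Name", "dataType": "string"},
                {"name": "IsActive", "dataType": "boolean"},
            ],
            "partitions": [{"name": "Customer/part-0000.csv"}],
        }
    ]
}

# Illustrative mapping; extend it as new model.json types show up.
type_mapping = {"string": "varchar(256)", "int64": "bigint", "decimal": "decimal"}


def column_definitions(attributes, mapping):
    """Return one 'name type' string per attribute, substituting mapped types."""
    return [
        f"{attr['name']} {mapping.get(attr['dataType'], attr['dataType'])}"
        for attr in attributes
    ]


for entity in sample_model["entities"]:
    print(entity["name"], column_definitions(entity["attributes"], type_mapping))
```

Types with no entry in the mapping, such as `boolean` here, pass through unchanged, so anything SQL does not accept would need its own entry.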

The model.json took the form of (see below). Is yours vaguely similar?