Hi @kiran machan,

Thanks for reaching out and for using the Microsoft Q&A forum.

Based on the requirement image shared in your original query, this can be done in a single ADF pipeline using parameterization. First, you need a ForEach activity to iterate through an input array, which holds the source/destination folder and file mapping details. Inside the ForEach activity (Sequential = true), add a Copy activity that copies the file for each iteration.

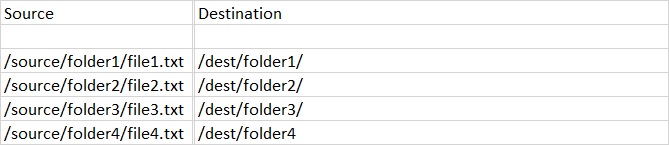
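For reference, here is a trimmed sketch of how such a pipeline could look in its JSON (Code view) definition. The names `CopyFilesByMapping`, `fileMappings`, `ForEachMapping` and `CopyFile` are hypothetical placeholders, and the Copy activity's inputs, outputs and source/sink settings are left out so the focus stays on the loop. JSON has no comments, so the `description` property carries that note.

```json
{
    "name": "CopyFilesByMapping",
    "properties": {
        "description": "Sketch only: pipeline, parameter and activity names are hypothetical placeholders",
        "parameters": {
            "fileMappings": {
                "type": "array"
            }
        },
        "activities": [
            {
                "name": "ForEachMapping",
                "type": "ForEach",
                "typeProperties": {
                    "isSequential": true,
                    "items": {
                        "value": "@pipeline().parameters.fileMappings",
                        "type": "Expression"
                    },
                    "activities": [
                        {
                            "name": "CopyFile",
                            "type": "Copy",
                            "description": "Inputs, outputs and source/sink settings omitted in this sketch"
                        }
                    ]
                }
            }
        ]
    }
}
```

With `isSequential` set to `true`, the iterations run one at a time; setting it to `false` lets them run in parallel, with the degree of parallelism controlled by `batchCount`.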

Note: To map each source folder/file to its destination folder/file, you need to pre-define the mapping, either in a database table or as a JSON array passed to the pipeline as a parameter, as shown in the example below.

SQL table example:

If you prefer a database table, your pipeline needs a Lookup activity to fetch the source/destination folder mapping details. Pass the Lookup output array to a ForEach activity (Sequential = true), and inside the ForEach add a Copy activity that copies the files for each iteration.

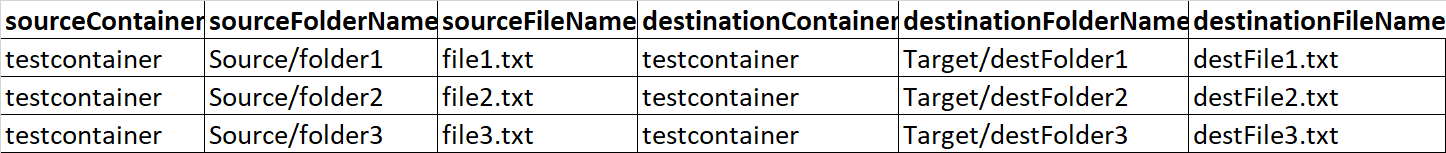
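A minimal sketch of the `activities` array for the table-driven variant, assuming the mapping lives in an Azure SQL Database table. The table `dbo.FileMapping`, the dataset `FileMappingTable` and the activity names are hypothetical. `firstRowOnly` must be `false` so the Lookup returns every row, and the ForEach then reads them from `output.value`. The inner `activities` array is left empty here; the Copy activity goes there.

```json
[
    {
        "name": "LookupFileMappings",
        "type": "Lookup",
        "description": "Sketch only: table, dataset and activity names are hypothetical",
        "typeProperties": {
            "source": {
                "type": "AzureSqlSource",
                "sqlReaderQuery": "SELECT sourceContainer, sourceFolderName, sourceFileName, destinationContainer, destinationFolderName, destinationFileName FROM dbo.FileMapping"
            },
            "dataset": {
                "referenceName": "FileMappingTable",
                "type": "DatasetReference"
            },
            "firstRowOnly": false
        }
    },
    {
        "name": "ForEachMapping",
        "type": "ForEach",
        "dependsOn": [
            {
                "activity": "LookupFileMappings",
                "dependencyConditions": [ "Succeeded" ]
            }
        ],
        "typeProperties": {
            "isSequential": true,
            "items": {
                "value": "@activity('LookupFileMappings').output.value",
                "type": "Expression"
            },
            "activities": []
        }
    }
]
```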

In this sample, I used the JSON object array below as the pipeline's input parameter, iterated through each object in the ForEach activity, and processed the files accordingly.

Since we are using parameterization, we have to define source and sink dataset parameters so that the input parameters (sourceContainer, sourceFolderName, sourceFileName, destinationContainer, destinationFolderName, destinationFileName) can be mapped to them.
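As an illustration, a parameterized dataset could look like the sketch below. It assumes the files are copied as-is between Azure Blob Storage locations, so it uses the Binary format; the dataset name `SourceBinaryDataset`, the linked service `AzureBlobStorageLS` and the parameter names `container`, `folderName` and `fileName` are hypothetical. A sink dataset (for example a hypothetical `SinkBinaryDataset`) would have the same shape.

```json
{
    "name": "SourceBinaryDataset",
    "properties": {
        "description": "Sketch only: dataset, linked service and parameter names are hypothetical",
        "linkedServiceName": {
            "referenceName": "AzureBlobStorageLS",
            "type": "LinkedServiceReference"
        },
        "parameters": {
            "container": { "type": "string" },
            "folderName": { "type": "string" },
            "fileName": { "type": "string" }
        },
        "type": "Binary",
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": { "value": "@dataset().container", "type": "Expression" },
                "folderPath": { "value": "@dataset().folderName", "type": "Expression" },
                "fileName": { "value": "@dataset().fileName", "type": "Expression" }
            }
        }
    }
}
```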

Here is the JSON object array used as the input parameter:

```json
[
    {
        "sourceContainer": "testcontainer",
        "sourceFolderName": "Source/folder1",
        "sourceFileName": "file1.txt",
        "destinationContainer": "testcontainer",
        "destinationFolderName": "Target/destFolder1",
        "destinationFileName": "destFile1.txt"
    },
    {
        "sourceContainer": "testcontainer",
        "sourceFolderName": "Source/folder2",
        "sourceFileName": "file2.txt",
        "destinationContainer": "testcontainer",
        "destinationFolderName": "Target/destFolder2",
        "destinationFileName": "destFile2.txt"
    },
    {
        "sourceContainer": "testcontainer",
        "sourceFolderName": "Source/folder3",
        "sourceFileName": "file3.txt",
        "destinationContainer": "testcontainer",
        "destinationFolderName": "Target/destFolder3",
        "destinationFileName": "destFile3.txt"
    }
]
```
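Inside the ForEach, each object in this array is available as `@item()`, so the Copy activity can pass its fields to the dataset parameters. The sketch below assumes two parameterized Binary datasets on Azure Blob Storage, hypothetically named `SourceBinaryDataset` and `SinkBinaryDataset`, each exposing `container`, `folderName` and `fileName` parameters; adjust the names to match your own datasets.

```json
{
    "name": "CopyFile",
    "type": "Copy",
    "description": "Sketch only: dataset and parameter names are hypothetical; runs inside the ForEach",
    "inputs": [
        {
            "referenceName": "SourceBinaryDataset",
            "type": "DatasetReference",
            "parameters": {
                "container": { "value": "@item().sourceContainer", "type": "Expression" },
                "folderName": { "value": "@item().sourceFolderName", "type": "Expression" },
                "fileName": { "value": "@item().sourceFileName", "type": "Expression" }
            }
        }
    ],
    "outputs": [
        {
            "referenceName": "SinkBinaryDataset",
            "type": "DatasetReference",
            "parameters": {
                "container": { "value": "@item().destinationContainer", "type": "Expression" },
                "folderName": { "value": "@item().destinationFolderName", "type": "Expression" },
                "fileName": { "value": "@item().destinationFileName", "type": "Expression" }
            }
        }
    ],
    "typeProperties": {
        "source": {
            "type": "BinarySource",
            "storeSettings": { "type": "AzureBlobStorageReadSettings" }
        },
        "sink": {
            "type": "BinarySink",
            "storeSettings": { "type": "AzureBlobStorageWriteSettings" }
        }
    }
}
```

For the first object above, this resolves to copying `Source/folder1/file1.txt` in `testcontainer` to `Target/destFolder1/destFile1.txt` in the same container.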

Please see the GIF below for the implementation.

Hope this helps. If your requirement is different from this, please let me know with a few additional details or clarifications so that I can assist accordingly.

Thank you.

----------

Please consider clicking "Accept Answer" and "Upvote" on the post that helps you, as this can benefit other community members.