Hi @Diego Poggioli,

I think you have misunderstood the limit of 1000 events per second. I believe it applies only to ingress: each API call to Event Hubs can send either up to 1000 events or up to 1 MB. For egress, on the other hand, each call can return up to 2 MB (Event Hubs packs as many events as fit into roughly 2 MB per call), and there is no upper limit on the number of events you can read. In your case, I assume that about 7000 events add up to roughly 2 MB. Since the query uses a trigger-once, it pulled only those 7000 events when the job started and then stopped.

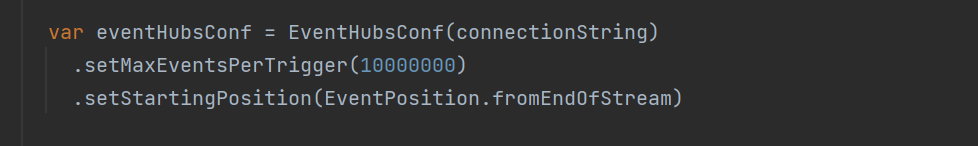
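The arithmetic behind that assumption can be sketched quickly. If 7000 events fill one 2 MB egress call, the average event is about 300 bytes; conversely, events of about 300 bytes fit roughly 6990 to a call. Reading "2 MB" as 2 MiB is an assumption here, and the function names are illustrative only.

```python
# Assumption: "2 MB" is read as 2 MiB (2 * 1024 * 1024 bytes).
EGRESS_CALL_LIMIT_BYTES = 2 * 1024 * 1024


def avg_event_size(total_bytes: int, event_count: int) -> float:
    """Average size of one event when event_count events fill total_bytes."""
    return total_bytes / event_count


def events_per_call(avg_size_bytes: int, limit_bytes: int = EGRESS_CALL_LIMIT_BYTES) -> int:
    """How many whole events of avg_size_bytes fit into one call of limit_bytes."""
    return limit_bytes // avg_size_bytes


print(round(avg_event_size(EGRESS_CALL_LIMIT_BYTES, 7000)))  # 300
print(events_per_call(300))  # 6990
```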

That said, setting `maxEventsPerTrigger` to a higher value lets the connector create micro-batches behind the scenes (each batch under 2 MB) and deliver the events to the caller. However, there is no guarantee that you will only ever have 10000000 events; what happens when more than that arrive?
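To make that concern concrete, here is a small pure-Python model (not Spark code). It assumes, as described above, that a single trigger-once run processes at most `maxEventsPerTrigger` events and that the backlog does not grow between runs; `plan_runs` is a hypothetical name.

```python
from typing import List


def plan_runs(backlog: int, max_events_per_trigger: int) -> List[int]:
    """Hypothetical model: events processed by each successive trigger-once run.

    Assumes every run reads at most max_events_per_trigger events and that
    no new events arrive between runs.
    """
    if max_events_per_trigger <= 0:
        raise ValueError("max_events_per_trigger must be positive")
    runs = []
    remaining = backlog
    while remaining > 0:
        taken = min(remaining, max_events_per_trigger)
        runs.append(taken)
        remaining -= taken
    return runs


# A backlog larger than the cap needs more than one run to drain.
print(plan_runs(25_000_000, 10_000_000))  # [10000000, 10000000, 5000000]
```

With one trigger-once run per job execution, only the first entry is processed; the remainder waits for the next scheduled run.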

To handle a backlog larger than any fixed limit, I would still keep the trigger as once, but instead of letting the SDK do the batching for you, implement the batching logic in your own code: when the job is triggered, pull X events at a time and keep looping until a pull returns fewer events than the batch size, then end the run there. If you want to keep development simple, tuning the `maxEventsPerTrigger` attribute will help.
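A minimal sketch of that loop in plain Python follows. `receive_batch` stands in for whatever receive call your Event Hubs client exposes; it, `drain`, and `make_fake_source` are hypothetical names, and the in-memory fake source exists only to make the example runnable. A real receiver may also return a short batch for other reasons (for example, a wait timeout), so "fewer than `batch_size`" is only a heuristic end-of-backlog signal.

```python
from collections import deque
from typing import Callable, List


def drain(receive_batch: Callable[[int], List[str]],
          batch_size: int,
          handle: Callable[[List[str]], None]) -> int:
    """Pull batch_size events at a time until a pull comes back short.

    Returns the total number of events handled in this run.
    """
    total = 0
    while True:
        batch = receive_batch(batch_size)  # hypothetical receive call
        if batch:
            handle(batch)
            total += len(batch)
        if len(batch) < batch_size:  # short (or empty) pull: assume backlog drained
            break
    return total


def make_fake_source(events: List[str]) -> Callable[[int], List[str]]:
    """In-memory stand-in for an Event Hubs receiver, for illustration only."""
    queue = deque(events)

    def receive_batch(max_events: int) -> List[str]:
        count = min(max_events, len(queue))
        return [queue.popleft() for _ in range(count)]

    return receive_batch


batches: List[List[str]] = []
source = make_fake_source([f"event-{i}" for i in range(25)])
print(drain(source, 10, batches.append))  # 25
print([len(b) for b in batches])  # [10, 10, 5]
```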

Mark this as an accepted answer if it helps you.