Hi @Amit Sopan Shendge ,

Thank you for posting your query on the Microsoft Q&A platform.

The Pandas documentation for Synapse notebooks includes an example that reads data using the path URL together with a linked service. You could consider doing the same in your case instead of using a mount point.

Below is the sample code.

#Read data file from URI of secondary Azure Data Lake Storage Gen2

import pandas

#read data file

df = pandas.read_csv('abfs[s]://file_system_name@account_name.dfs.core.windows.net/file_path', storage_options = {'linked_service' : 'linked_service_name'})

print(df)

#write data file

data = pandas.DataFrame({'Name':['A', 'B', 'C', 'D'], 'ID':[20, 21, 19, 18]})

data.to_csv('abfs[s]://file_system_name@account_name.dfs.core.windows.net/file_path', storage_options = {'linked_service' : 'linked_service_name'})
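
In case it helps, here is a small sketch of how the URL and storage options from the sample above could be put together. Note that `abfs[s]` in the sample is a placeholder meaning either `abfs` or `abfss`, so you need to pick one in real code. The helper names (`build_abfs_url`, `linked_service_options`, `read_csv_via_linked_service`) are hypothetical and only for illustration, and I am assuming the `linked_service` storage option is resolved only when the code runs inside a Synapse notebook.

```python
def build_abfs_url(file_system, account, file_path, secure=True):
    """Build an ADLS Gen2 URL (hypothetical helper, not part of pandas)."""
    # 'abfs[s]' in the sample stands for 'abfs' or 'abfss'; choose one here.
    scheme = "abfss" if secure else "abfs"
    # Drop leading slashes so the URL has exactly one '/' before the path.
    clean_path = file_path.lstrip("/")
    return f"{scheme}://{file_system}@{account}.dfs.core.windows.net/{clean_path}"


def linked_service_options(linked_service_name):
    """Return the storage_options dict in the shape used by the sample above."""
    return {"linked_service": linked_service_name}


def read_csv_via_linked_service(file_system, account, file_path, linked_service_name):
    """Read a CSV through a linked service (assumes a Synapse notebook session)."""
    import pandas  # imported here so the helpers above work without pandas

    url = build_abfs_url(file_system, account, file_path)
    return pandas.read_csv(url, storage_options=linked_service_options(linked_service_name))
```

You would then call `read_csv_via_linked_service('file_system_name', 'account_name', 'file_path', 'linked_service_name')` with your own values in place of the placeholders.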

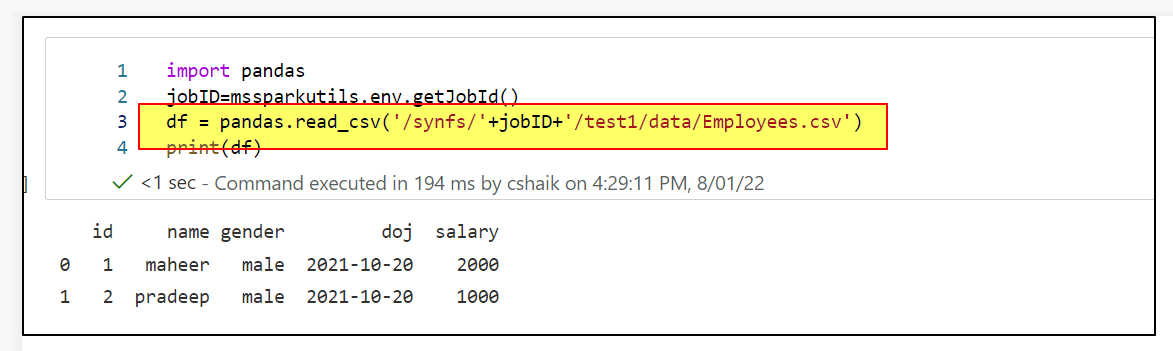

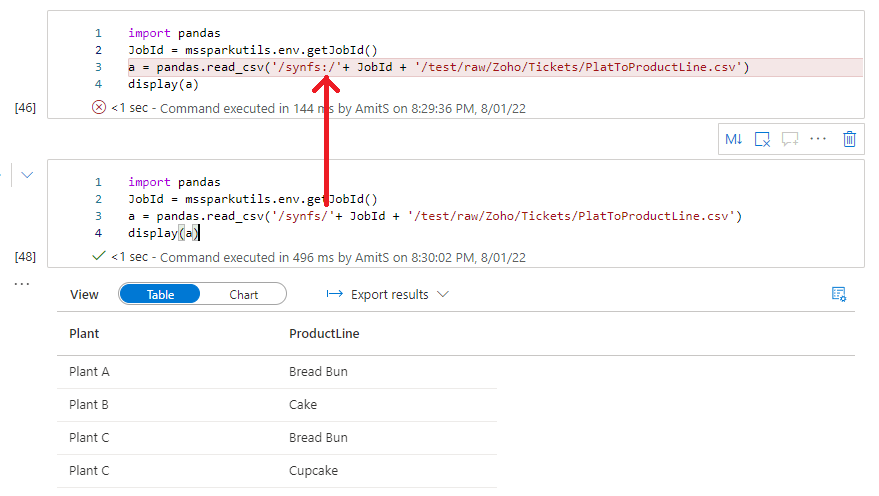

I tried using a mount point and ended up with an issue similar to yours. I suspect that mount points may not work with pandas. I am checking on this internally and will share updates soon.

Hope this helps. Please let us know if you have any further queries.

------------

Please consider hitting the Accept Answer button. Accepted answers help the community as well.