I've followed this document but am still running into issues.

I have deployed the function app infrastructure with an ARM template, run from a YAML IaC pipeline.

I am now trying to deploy the Python code itself, which contains a simple function that converts a UTC time to the current time zone.

The requirements.txt file is as follows:

    # DO NOT include azure-functions-worker in this file
    # The Python Worker is managed by Azure Functions platform
    # Manually managing azure-functions-worker may cause unexpected issues

    azure-functions
    azure-servicebus
    azure-storage-file-datalake
    python-dateutil
    pytest
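
Note that the distribution names in `requirements.txt` do not always match the module names the code imports: the error below mentions `dateutil`, which is provided by the `python-dateutil` distribution. A small stdlib sketch that lists the modules this file is expected to provide; the `IMPORT_NAMES` table is a hand-written, hypothetical mapping for these five packages, and the parser only handles bare names like the ones here (no version pins or pip options).

```python
# Hypothetical, hand-written mapping from distribution names in requirements.txt
# to the top-level module each one is imported as.
IMPORT_NAMES = {
    "azure-functions": "azure.functions",
    "azure-servicebus": "azure.servicebus",
    "azure-storage-file-datalake": "azure.storage.filedatalake",
    "python-dateutil": "dateutil",
    "pytest": "pytest",
}


def parse_requirements(text):
    """Return bare requirement names, skipping comments and blank lines."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            names.append(line)
    return names


def expected_imports(text):
    """Map each requirement to its import name, guessing '-' -> '_' if unknown."""
    return [IMPORT_NAMES.get(n, n.replace("-", "_")) for n in parse_requirements(text)]
```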

My YAML pipeline is as follows:

    trigger:
      branches:
        include:
          - releases/*
          - refs/tags/func-sbtools*
      paths:
        include:
          - function-app/func-sbtools/*

    pool:
      vmImage: 'ubuntu-latest'

    variables:
      buildPlatform: 'Any CPU'
      buildConfiguration: 'Release'
      pythonVersion: '3.9'

    stages:
      - stage: publish_artifacts
        displayName: Publish deployment artifacts
        jobs:
          - job: sbtools_drop
            displayName: Create build artifacts for sbTools deployment
            steps:
              - checkout: self

              - task: UsePythonVersion@0
                inputs:
                  versionSpec: '$(pythonVersion)'
                displayName: 'Use Python $(pythonVersion)'

              - task: CopyFiles@2
                displayName: Copy function app files to staging directory
                inputs:
                  SourceFolder: '$(System.DefaultWorkingDirectory)/function-app/func-sbtools/'
                  Contents: '**'
                  TargetFolder: '$(Build.ArtifactStagingDirectory)'

              - script: |
                  python -m venv antenv
                  source antenv/bin/activate
                  python -m pip install --upgrade pip
                  pip install setup
                  pip install -r requirements.txt
                workingDirectory: '$(Build.ArtifactStagingDirectory)'
                displayName: "Install requirements"

              - task: ArchiveFiles@2
                displayName: 'Create sbTools function app drop zip'
                inputs:
                  rootFolderOrFile: '$(Build.ArtifactStagingDirectory)'
                  includeRootFolder: false
                  archiveType: 'zip'
                  archiveFile: '$(Build.ArtifactStagingDirectory)/sbtools_$(Build.BuildId).zip'
                  replaceExistingArchive: true

              - task: PublishBuildArtifacts@1
                displayName: Publish sbTools function app drop
                inputs:
                  PathtoPublish: '$(Build.ArtifactStagingDirectory)/sbtools_$(Build.BuildId).zip'
                  ArtifactName: 'sbtools-drop'
                  publishLocation: 'Container'

      - stage: sit_deploy
        displayName: SIT deployment
        dependsOn: [publish_artifacts]
        jobs:
          - job: sbtools_sit
            displayName: Create build artifacts
            steps:
              - checkout: self

              - download: current
                displayName: Download func-sbtools drop
                artifact: sbtools-drop

              - task: UsePythonVersion@0
                inputs:
                  versionSpec: '$(pythonVersion)'
                displayName: 'Use Python version $(pythonVersion)'

              - task: AzureFunctionApp@1
                displayName: 'Deploy sbTools code'
                inputs:
                  azureSubscription: 'Azure DevOps (Dev/Test)'
                  appType: 'functionAppLinux'
                  appName: 'func-sbtools-sit'
                  package: '$(Pipeline.Workspace)/sbtools-drop/sbtools_$(Build.BuildId).zip'

The pipeline completes successfully when run.
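
Since the build succeeds but the function cannot import `dateutil`, one way to narrow this down is to open the published zip and see *where* `dateutil` ended up. A minimal stdlib sketch; `locate_package` and `is_deployable` are hypothetical helpers, and the expected folder `.python_packages/lib/site-packages/` is an assumption based on Microsoft's Azure Pipelines sample for Python function apps. Given the install step above, which creates a venv named `antenv` inside the staging directory that gets zipped, I would expect the package to show up under `antenv/lib/python3.9/site-packages/` instead.

```python
import zipfile
from pathlib import PurePosixPath

# Assumption: the Functions Python worker looks for third-party packages in this
# folder at the root of the deployed zip (the layout produced by
# `pip install --target` in Microsoft's Azure Pipelines sample).
EXPECTED_FOLDER = ".python_packages/lib/site-packages/"


def locate_package(zip_file, import_name):
    """Return the sorted, distinct zip folders that contain a package dir `import_name`.

    `zip_file` may be a path or a binary file object.
    """
    folders = set()
    with zipfile.ZipFile(zip_file) as zf:
        for entry in zf.namelist():
            # PurePosixPath collapses "./" prefixes that some archivers add.
            parts = PurePosixPath(entry).parts
            # Only match directory components, never the final file name.
            for i, part in enumerate(parts[:-1]):
                if part == import_name:
                    folders.add("".join(p + "/" for p in parts[:i]))
                    break
    return sorted(folders)


def is_deployable(zip_file, import_name):
    """True if the package sits directly in the assumed worker package folder."""
    return EXPECTED_FOLDER in locate_package(zip_file, import_name)
```

For example, `locate_package("sbtools_123.zip", "dateutil")` (hypothetical file name) returns the folder(s) holding `dateutil`; an empty list means it was not packaged at all.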

When I test the function in Postman, I get a 500 Internal Server Error response. I traced the call through App Insights, and it reports that the `dateutil` module cannot be found, which I think means the packages from `requirements.txt` are not being deployed correctly. Here is the exception message from App Insights:

    Exception while executing function: Functions.calcUtcToLocaltime <--- Result: Failure
    Exception: ModuleNotFoundError: No module named 'dateutil'. Troubleshooting Guide: https://aka.ms/functions-modulenotfound
    Stack:
      File "/azure-functions-host/workers/python/3.9/LINUX/X64/azure_functions_worker/dispatcher.py", line 315, in _handle__function_load_request
        func = loader.load_function(
      File "/azure-functions-host/workers/python/3.9/LINUX/X64/azure_functions_worker/utils/wrappers.py", line 42, in call
        raise extend_exception_message(e, message)
      File "/azure-functions-host/workers/python/3.9/LINUX/X64/azure_functions_worker/utils/wrappers.py", line 40, in call
        return func(*args, **kwargs)
      File "/azure-functions-host/workers/python/3.9/LINUX/X64/azure_functions_worker/loader.py", line 85, in load_function
        mod = importlib.import_module(fullmodname)
      File "/usr/local/lib/python3.9/importlib/__init__.py", line 127, in import_module
        return _bootstrap._gcd_import(name[level:], package, level)
      File "/home/site/wwwroot/calcUtcToLocaltime/__init__.py", line 3, in <module>
        from dateutil import parser, tz
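
The trace shows the failure happens while the worker loads the function module (`_handle__function_load_request` calling `importlib.import_module`), i.e. at the top-level `from dateutil import parser, tz`. Python only finds a package that sits directly inside one of the searched directories, so a copy under `antenv/lib/python3.9/site-packages` is invisible unless that folder is on the path. The sketch below reproduces this with `importlib.machinery.PathFinder`; `resolves_from` is a hypothetical helper, and the search list used in the example (app root plus `.python_packages/lib/site-packages`) is an assumption about what the Functions host searches, not something taken from its source.

```python
import importlib
import importlib.machinery
import os


def resolves_from(import_name, search_dirs):
    """Return the directory `import_name` would be imported from, or None.

    PathFinder is the finder that normally walks sys.path; passing an explicit
    list restricts the search to `search_dirs` only.
    """
    # Drop cached directory listings so recently created folders are noticed.
    importlib.invalidate_caches()
    spec = importlib.machinery.PathFinder.find_spec(import_name, search_dirs)
    if spec is None:
        return None  # this is the case that surfaces as ModuleNotFoundError
    if spec.submodule_search_locations:
        # Package: the search location is <dir>/<name>, so its parent is <dir>.
        return os.path.dirname(list(spec.submodule_search_locations)[0])
    # Plain module: origin is <dir>/<name>.py.
    return os.path.dirname(spec.origin)
```

With an assumed host path such as `["/home/site/wwwroot", "/home/site/wwwroot/.python_packages/lib/site-packages"]`, a package installed only into `antenv` would return `None`.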

I noticed in the docs that, because of how I deployed the infrastructure, I might need to add the app setting `SCM_DO_BUILD_DURING_DEPLOYMENT=true` to the function app's configuration. I have done so, and it made no difference.

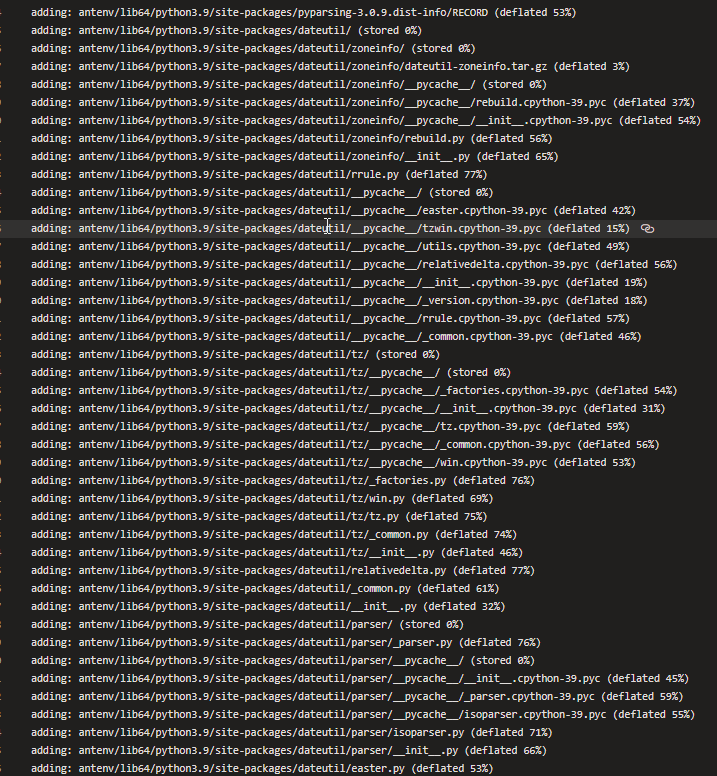

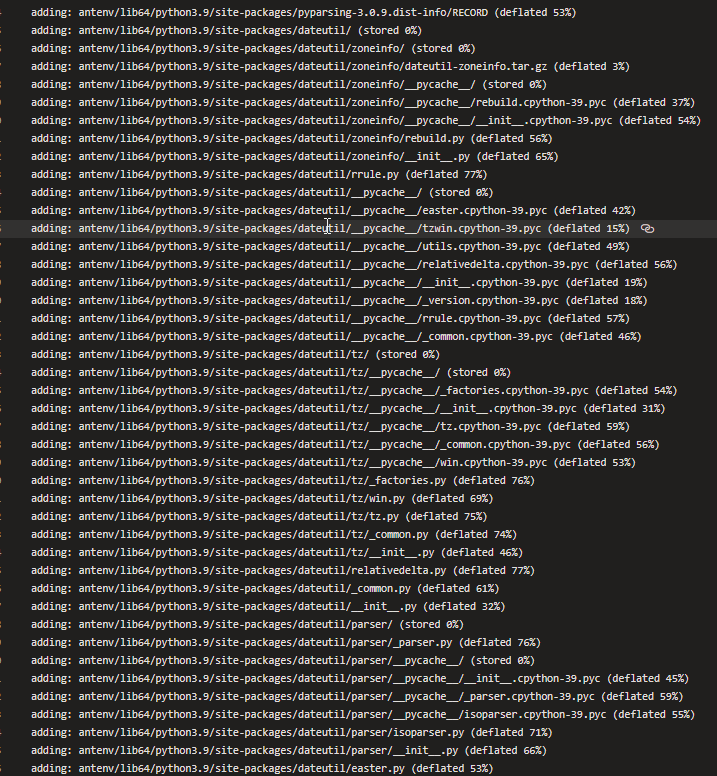

I've checked the pipeline logs for the build and I can see dateutil being downloaded:

At this point I am stuck and would appreciate any pointers on where I am going wrong in installing the requirements.
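
For comparison, Microsoft's documentation on deploying Python function apps with Azure Pipelines installs dependencies with `pip install --target="./.python_packages/lib/site-packages" -r ./requirements.txt` rather than into a virtual environment, so the packages end up inside the zip at the path the worker is expected to search. A minimal Python sketch of that install step, assuming it runs against the folder that gets zipped (here `$(Build.ArtifactStagingDirectory)`); `pip_target_command` and `install_requirements` are hypothetical helper names, and `dry_run` defaults to printing the command instead of running it.

```python
import subprocess
import sys
from pathlib import Path

# Assumed package folder, relative to the function app root, matching the
# `pip install --target` layout in Microsoft's Azure Pipelines sample.
PACKAGES_DIR = Path(".python_packages") / "lib" / "site-packages"


def pip_target_command(app_root, requirements="requirements.txt"):
    """Build a pip command that installs into the app folder instead of a venv."""
    root = Path(app_root)
    return [
        sys.executable, "-m", "pip", "install",
        "--target", str(root / PACKAGES_DIR),
        "-r", str(root / requirements),
    ]


def install_requirements(app_root, dry_run=True):
    """Run (or, by default, just print) the install command; returns pip's exit code."""
    cmd = pip_target_command(app_root)
    if dry_run:
        print(" ".join(cmd))
        return 0
    # check=True raises CalledProcessError if pip fails, so a broken install fails the build.
    return subprocess.run(cmd, check=True).returncode
```

Using `sys.executable` ties the install to the interpreter selected by `UsePythonVersion@0`, which matters because compiled wheels are built for a specific Python version.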