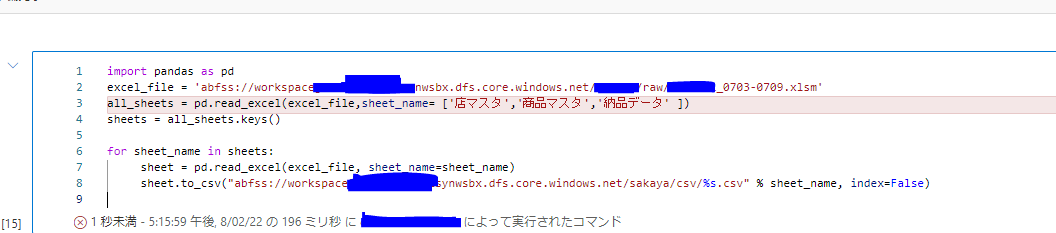

I can't resolve the Python error shown below, so I would appreciate some help.

I am using Python in a Synapse notebook.

I'm trying to convert each of the three sheets in one macro-enabled Excel file (.xlsm) to a CSV file.

I have already granted the Contributor and blob permissions, but the read still fails with an authorization error. I would like to know how to solve this.
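For context, the conversion I am aiming for can be sketched roughly as follows. This is only a minimal sketch: `sheets_to_csv` and the output directory are hypothetical names I made up for illustration, and it assumes `pd.read_excel` has already returned a dict of DataFrames (which is what it does when `sheet_name` is a list). It does not touch the authorization problem itself.

```python
from pathlib import Path
from typing import Dict

import pandas as pd


def sheets_to_csv(sheets: Dict[str, pd.DataFrame], out_dir: str) -> Dict[str, Path]:
    """Write each DataFrame in `sheets` to <out_dir>/<sheet name>.csv.

    `sheets` is the dict returned by pd.read_excel(..., sheet_name=[...]).
    Returns a mapping of sheet name -> path of the CSV that was written.
    """
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)

    written = {}
    for name, frame in sheets.items():
        csv_path = target / f"{name}.csv"
        # utf-8-sig writes a BOM so that Excel detects the encoding
        # when the CSV contains Japanese text.
        frame.to_csv(csv_path, index=False, encoding="utf-8-sig")
        written[name] = csv_path
    return written


# Intended usage (not executed here; it needs the real file and an
# Excel engine such as openpyxl):
# all_sheets = pd.read_excel(excel_file, sheet_name=['店マスタ', '商品マスタ', '納品データ'])
# sheets_to_csv(all_sheets, "csv_out")  # "csv_out" is a placeholder directory
```

The reading step is the part that currently fails, so the helper only covers what should happen once the file can be opened.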

Error message:

---------------------------------------------------------------------------

HttpResponseError Traceback (most recent call last)

~/cluster-env/env/lib/python3.8/site-packages/azure/storage/blob/aio/_list_blobs_helper.py in _get_next_cb(self, continuation_token)

70 try:

---> 71 return await self._command(

72 prefix=self.prefix,

~/cluster-env/env/lib/python3.8/site-packages/azure/storage/blob/_generated/aio/operations/_container_operations.py in list_blob_hierarchy_segment(self, delimiter, prefix, marker, maxresults, include, timeout, request_id_parameter, **kwargs)

1557 error = self._deserialize(_models.StorageError, response)

-> 1558 raise HttpResponseError(response=response, model=error)

1559

HttpResponseError: Operation returned an invalid status 'This request is not authorized to perform this operation.'

Content: <?xml version="1.0" encoding="utf-8"?><Error><Code>AuthorizationFailure</Code><Message>This request is not authorized to perform this operation.

RequestId:a47a70fb-501e-00c0-3148-a60032000000

Time:2022-08-02T08:15:59.8037930Z</Message></Error>

During handling of the above exception, another exception occurred:

HttpResponseError Traceback (most recent call last)

<ipython-input-8-569e8557> in <module>

1 import pandas as pd

2 excel_file = 'abfss://workspaceXXXXXXXsynwsbx.dfs.core.windows.net/XXXXXX/raw/XXXXXX_0703-0709.xlsm'

----> 3 all_sheets = pd.read_excel(excel_file,sheet_name= ['店マスタ','商品マスタ','納品データ' ])

4 sheets = all_sheets.keys()

5

~/cluster-env/env/lib/python3.8/site-packages/pandas/util/_decorators.py in wrapper(*args, **kwargs)

297 )

298 warnings.warn(msg, FutureWarning, stacklevel=stacklevel)

--> 299 return func(*args, **kwargs)

300

301 return wrapper

~/cluster-env/env/lib/python3.8/site-packages/pandas/io/excel/_base.py in read_excel(io, sheet_name, header, names, index_col, usecols, squeeze, dtype, engine, converters, true_values, false_values, skiprows, nrows, na_values, keep_default_na, na_filter, verbose, parse_dates, date_parser, thousands, comment, skipfooter, convert_float, mangle_dupe_cols, storage_options)

334 if not isinstance(io, ExcelFile):

335 should_close = True

--> 336 io = ExcelFile(io, storage_options=storage_options, engine=engine)

337 elif engine and engine != io.engine:

338 raise ValueError(

~/cluster-env/env/lib/python3.8/site-packages/pandas/io/excel/_base.py in __init__(self, path_or_buffer, engine, storage_options)

1069 ext = "xls"

1070 else:

-> 1071 ext = inspect_excel_format(

1072 content=path_or_buffer, storage_options=storage_options

1073 )

~/cluster-env/env/lib/python3.8/site-packages/pandas/io/excel/_base.py in inspect_excel_format(path, content, storage_options)

947 assert content_or_path is not None

948

--> 949 with get_handle(

950 content_or_path, "rb", storage_options=storage_options, is_text=False

951 ) as handle:

~/cluster-env/env/lib/python3.8/site-packages/pandas/io/common.py in get_handle(path_or_buf, mode, encoding, compression, memory_map, is_text, errors, storage_options)

556

557 # open URLs

--> 558 ioargs = _get_filepath_or_buffer(

559 path_or_buf,

560 encoding=encoding,

~/cluster-env/env/lib/python3.8/site-packages/pandas/io/common.py in _get_filepath_or_buffer(filepath_or_buffer, encoding, compression, mode, storage_options)

331

332 try:

--> 333 file_obj = fsspec.open(

334 filepath_or_buffer, mode=fsspec_mode, **(storage_options or {})

335 ).open()

~/cluster-env/env/lib/python3.8/site-packages/fsspec/core.py in open(self)

133 been deleted; but a with-context is better style.

134 """

--> 135 out = self.__enter__()

136 closer = out.close

137 fobjects = self.fobjects.copy()[:-1]

~/cluster-env/env/lib/python3.8/site-packages/fsspec/core.py in __enter__(self)

100 mode = self.mode.replace("t", "").replace("b", "") + "b"

101

--> 102 f = self.fs.open(self.path, mode=mode)

103

104 self.fobjects = [f]

~/cluster-env/env/lib/python3.8/site-packages/fsspec_wrapper/core.py in hooked(*args, **kwargs)

86 super().do_connect()

87 def hooked(*args, **kwargs):

---> 88 return orig_attr(*args, **kwargs)

89 return hooked

90 else:

~/cluster-env/env/lib/python3.8/site-packages/fsspec/spec.py in open(self, path, mode, block_size, cache_options, **kwargs)

960 else:

961 ac = kwargs.pop("autocommit", not self._intrans)

--> 962 f = self._open(

963 path,

964 mode=mode,

~/cluster-env/env/lib/python3.8/site-packages/adlfs/spec.py in _open(self, path, mode, block_size, autocommit, cache_options, cache_type, metadata, **kwargs)

1605 """

1606 logger.debug(f"_open: {path}")

-> 1607 return AzureBlobFile(

1608 fs=self,

1609 path=path,

~/cluster-env/env/lib/python3.8/site-packages/adlfs/spec.py in __init__(self, fs, path, mode, block_size, autocommit, cache_type, cache_options, metadata, **kwargs)

1720 if self.mode == "rb":

1721 if not hasattr(self, "details"):

-> 1722 self.details = self.fs.info(self.path)

1723 self.size = self.details["size"]

1724 self.cache = caches[cache_type](

~/cluster-env/env/lib/python3.8/site-packages/fsspec_wrapper/core.py in hooked(*args, **kwargs)

86 super().do_connect()

87 def hooked(*args, **kwargs):

---> 88 return orig_attr(*args, **kwargs)

89 return hooked

90 else:

~/cluster-env/env/lib/python3.8/site-packages/adlfs/spec.py in info(self, path, refresh, **kwargs)

573 fetch_from_azure = True

574 if fetch_from_azure:

--> 575 return sync(self.loop, self._info, path, refresh)

576 return super().info(path)

577

~/cluster-env/env/lib/python3.8/site-packages/fsspec/asyn.py in sync(loop, func, timeout, *args, **kwargs)

66 raise FSTimeoutError

67 if isinstance(result[0], BaseException):

---> 68 raise result[0]

69 return result[0]

70

~/cluster-env/env/lib/python3.8/site-packages/fsspec/asyn.py in _runner(event, coro, result, timeout)

22 coro = asyncio.wait_for(coro, timeout=timeout)

23 try:

---> 24 result[0] = await coro

25 except Exception as ex:

26 result[0] = ex

~/cluster-env/env/lib/python3.8/site-packages/adlfs/spec.py in _info(self, path, refresh, **kwargs)

594 invalidate_cache = False

595 path = self._strip_protocol(path)

--> 596 out = await self._ls(

597 self._parent(path), invalidate_cache=invalidate_cache, **kwargs

598 )

~/cluster-env/env/lib/python3.8/site-packages/adlfs/spec.py in _ls(self, path, invalidate_cache, delimiter, return_glob, **kwargs)

780 outblobs = []

781 try:

--> 782 async for next_blob in blobs:

783 if depth in [0, 1] and path == "":

784 outblobs.append(next_blob)

~/cluster-env/env/lib/python3.8/site-packages/azure/core/async_paging.py in __anext__(self)

152 if self._page_iterator is None:

153 self._page_iterator = self.by_page()

--> 154 return await self.__anext__()

155 if self._page is None:

156 # Let it raise StopAsyncIteration

~/cluster-env/env/lib/python3.8/site-packages/azure/core/async_paging.py in __anext__(self)

155 if self._page is None:

156 # Let it raise StopAsyncIteration

--> 157 self._page = await self._page_iterator.__anext__()

158 return await self.__anext__()

159 try:

~/cluster-env/env/lib/python3.8/site-packages/azure/core/async_paging.py in __anext__(self)

97 raise StopAsyncIteration("End of paging")

98 try:

---> 99 self._response = await self._get_next(self.continuation_token)

100 except AzureError as error:

101 if not error.continuation_token:

~/cluster-env/env/lib/python3.8/site-packages/azure/storage/blob/aio/_list_blobs_helper.py in _get_next_cb(self, continuation_token)

76 use_location=self.location_mode)

77 except HttpResponseError as error:

---> 78 process_storage_error(error)

79

80 async def _extract_data_cb(self, get_next_return):

~/cluster-env/env/lib/python3.8/site-packages/azure/storage/blob/_shared/response_handlers.py in process_storage_error(storage_error)

148 error.error_code = error_code

149 error.additional_info = additional_data

--> 150 error.raise_with_traceback()

151

152

~/cluster-env/env/lib/python3.8/site-packages/azure/core/exceptions.py in raise_with_traceback(self)

245 def raise_with_traceback(self):

246 try:

--> 247 raise super(AzureError, self).with_traceback(self.exc_traceback)

248 except AttributeError:

249 self.traceback = self.exc_traceback

~/cluster-env/env/lib/python3.8/site-packages/azure/storage/blob/aio/_list_blobs_helper.py in _get_next_cb(self, continuation_token)

69 async def _get_next_cb(self, continuation_token):

70 try:

---> 71 return await self._command(

72 prefix=self.prefix,

73 marker=continuation_token or None,

~/cluster-env/env/lib/python3.8/site-packages/azure/storage/blob/_generated/aio/operations/_container_operations.py in list_blob_hierarchy_segment(self, delimiter, prefix, marker, maxresults, include, timeout, request_id_parameter, **kwargs)

1556 map_error(status_code=response.status_code, response=response, error_map=error_map)

1557 error = self._deserialize(_models.StorageError, response)

-> 1558 raise HttpResponseError(response=response, model=error)

1559

1560 response_headers = {}

HttpResponseError: This request is not authorized to perform this operation.

RequestId:a47a70fb-501e-00c0-3148-a60032000000

Time:2022-08-02T08:15:59.8037930Z

ErrorCode:AuthorizationFailure

Error:None

Content: <?xml version="1.0" encoding="utf-8"?><Error><Code>AuthorizationFailure</Code><Message>This request is not authorized to perform this operation.

RequestId:a47a70fb-501e-00c0-3148-a60032000000

Time:2022-08-02T08:15:59.8037930Z</Message></Error>

Note: XXXX replaces personal information that I have masked.
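One thing I am unsure about is the path itself. As posted, the URI in the traceback has no `<container>@` part before the account host, though that may simply be a side effect of my masking. ADLS Gen2 URIs normally take the form `abfss://<container>@<account>.dfs.core.windows.net/<path>`. Below is a minimal sketch, using only the standard library, for checking that shape; `split_abfss_uri` is a hypothetical helper name and the example values are invented. It only checks the format of the string and says nothing about whether the permissions are correct.

```python
from urllib.parse import urlsplit


def split_abfss_uri(uri: str) -> dict:
    """Split an abfs(s) URI into container, account and path.

    Raises ValueError when the URI does not follow
    abfss://<container>@<account>.dfs.core.windows.net/<path>.
    """
    parts = urlsplit(uri)
    if parts.scheme not in ("abfs", "abfss"):
        raise ValueError(f"unexpected scheme: {parts.scheme!r}")
    if "@" not in parts.netloc:
        raise ValueError("missing '<container>@' before the account host")

    container, host = parts.netloc.split("@", 1)
    if not container:
        raise ValueError("container name is empty")

    return {
        "container": container,
        # The account name is the first label of the host name.
        "account": host.split(".", 1)[0],
        "path": parts.path.lstrip("/"),
    }


# Example with made-up names:
info = split_abfss_uri("abfss://raw@exampleaccount.dfs.core.windows.net/in/book.xlsm")
print(info)
```

If the real path fails this check, the string passed to `pd.read_excel` would be worth correcting before looking further at role assignments.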