Hi @Sagar,

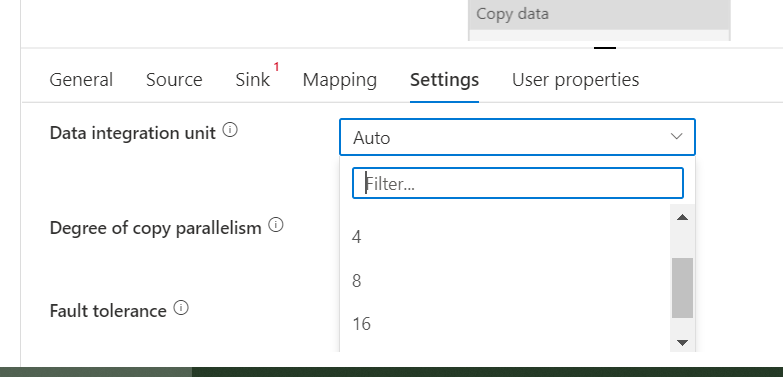

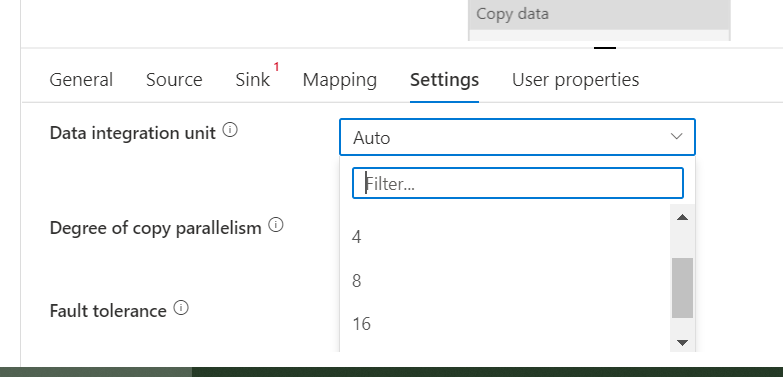

What is the current Data Integration Unit (DIU) setting for the Copy activity? Could you please increase the DIUs and try again? Thanks!
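For reference, here is a minimal sketch of where that setting lives, assuming the pipeline is edited as JSON and the copy runs on an Azure integration runtime (as far as I know, DIUs do not apply to a self-hosted one). The activity name, the source and sink types, and the value 16 are illustrative assumptions, not taken from your pipeline; `with_dius` is a hypothetical helper.

```python
import json


def with_dius(copy_activity: dict, dius: int) -> dict:
    """Return a copy of a Copy activity definition with dataIntegrationUnits set."""
    updated = json.loads(json.dumps(copy_activity))  # deep copy via JSON round trip
    updated.setdefault("typeProperties", {})["dataIntegrationUnits"] = dius
    return updated


# Hypothetical Copy activity: Parquet files in Blob storage to Azure SQL.
activity = {
    "name": "CopyParquetToSql",  # assumed name, for illustration only
    "type": "Copy",
    "typeProperties": {
        "source": {"type": "ParquetSource"},
        "sink": {"type": "AzureSqlSink"},
    },
}

# 16 is an arbitrary example value; pick what suits your workload.
updated = with_dius(activity, 16)
print(json.dumps(updated, indent=2))
```

The same value can also be set in the Settings tab of the Copy activity in the authoring UI, so editing the JSON by hand is optional.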

Could you please help me solve this issue?

ErrorCode=ParquetJavaInvocationException,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=An error occurred when invoking java, message: java.lang.OutOfMemoryError:Unable to retrieve Java exception.

total entry:6

sun.misc.Unsafe.allocateMemory(Native Method)

java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:127)

java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311)

com.microsoft.datatransfer.bridge.parquet.ParquetBatchReaderBridge.<init>(ParquetBatchReaderBridge.java:87)

com.microsoft.datatransfer.bridge.parquet.ParquetBatchReaderBridge.open(ParquetBatchReaderBridge.java:63)

com.microsoft.datatransfer.bridge.parquet.ParquetFileBridge.createReader(ParquetFileBridge.java:22)

.,Source=Microsoft.DataTransfer.Richfile.ParquetTransferPlugin,''Type=Microsoft.DataTransfer.Richfile.JniExt.JavaBridgeException,Message=,Source=Microsoft.DataTransfer.Richfile.HiveOrcBridge,'

Today it's showing the same error, as below:

ErrorCode=ParquetJavaInvocationException,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=An error occurred when invoking java, message: java.io.IOException:Could not read footer: java.lang.OutOfMemoryError

total entry:7

org.apache.parquet.hadoop.ParquetFileReader.readAllFootersInParallel(ParquetFileReader.java:269)

org.apache.parquet.hadoop.ParquetFileReader.readAllFootersInParallelUsingSummaryFiles(ParquetFileReader.java:210)

org.apache.parquet.hadoop.ParquetReader.<init>(ParquetReader.java:112)

org.apache.parquet.hadoop.ParquetReader.<init>(ParquetReader.java:45)

org.apache.parquet.hadoop.ParquetReader$Builder.build(ParquetReader.java:202)

com.microsoft.datatransfer.bridge.parquet.ParquetBatchReaderBridge.open(ParquetBatchReaderBridge.java:62)

com.microsoft.datatransfer.bridge.parquet.ParquetFileBridge.createReader(ParquetFileBridge.java:22)

java.lang.OutOfMemoryError:null

total entry:16

sun.misc.Unsafe.allocateMemory(Native Method)

java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:127)

java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311)

com.microsoft.datatransfer.bridge.io.parquet.BridgeInputFileStream.<init>(BridgeInputFileStream.java:21)

com.microsoft.datatransfer.bridge.io.parquet.CSharpStreamFile.getInputStream(CSharpStreamFile.java:29)

com.microsoft.datatransfer.bridge.io.parquet.BridgeFileSystem.open(BridgeFileSystem.java:167)

com.microsoft.datatransfer.bridge.io.parquet.BridgeFileSystem.open(BridgeFileSystem.java:174)

org.apache.parquet.hadoop.util.HadoopInputFile.newStream(HadoopInputFile.java:65)

org.apache.parquet.hadoop.ParquetFileReader.readFooter(ParquetFileReader.java:459)

org.apache.parquet.hadoop.ParquetFileReader.readFooter(ParquetFileReader.java:437)

org.apache.parquet.hadoop.ParquetFileReader$2.call(ParquetFileReader.java:259)

org.apache.parquet.hadoop.ParquetFileReader$2.call(ParquetFileReader.java:255)

java.util.concurrent.FutureTask.run(FutureTask.java:266)

java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

java.lang.Thread.run(Thread.java:745)

.,Source=Microsoft.DataTransfer.Richfile.ParquetTransferPlugin,''Type=Microsoft.DataTransfer.Richfile.JniExt.JavaBridgeException,Message=,Source=Microsoft.DataTransfer.Richfile.HiveOrcBridge,'

I have the same issue with .parquet files. Is there any solution, or are there any tutorial videos on copying Parquet files from Blob storage to Azure SQL?