We have a Front Door instance that intermittently responds with 503s. In this particular case, the origin configured in Front Door is an API Management instance in eastus2.

I went through https://learn.microsoft.com/en-us/azure/frontdoor/troubleshoot-issues and have already tried the following:

- The configured timeout is set to 240 seconds, not the default 30 seconds

- I disabled EnforceCertificateNameCheck

- I created a rule to remove Accept-Encoding from the request for byte range requests

But I still see intermittent 503 responses.

I also found this answer about range requests, although it does not mention DNSNameNotResolved: https://learn.microsoft.com/en-us/answers/questions/273558/azure-front-door-returns-a-503-service-unavailable.html. In any case, I'm already removing the Accept-Encoding header from requests, per the troubleshooting article linked above.

I also came across this: https://stackoverflow.com/questions/68160400/azure-front-door-route-to-api-m-returns-dnsnamenotresolved-errorinfo, which says:

"according to Microsoft, the TTL on the DNS records on Front-Door is really short and thus it is very DNS aggressive, this falls within the 99.9% uptime. When this falls within the said uptime they will look into adjustments for Front-Door." I don't think that is an official statement from Microsoft, though.

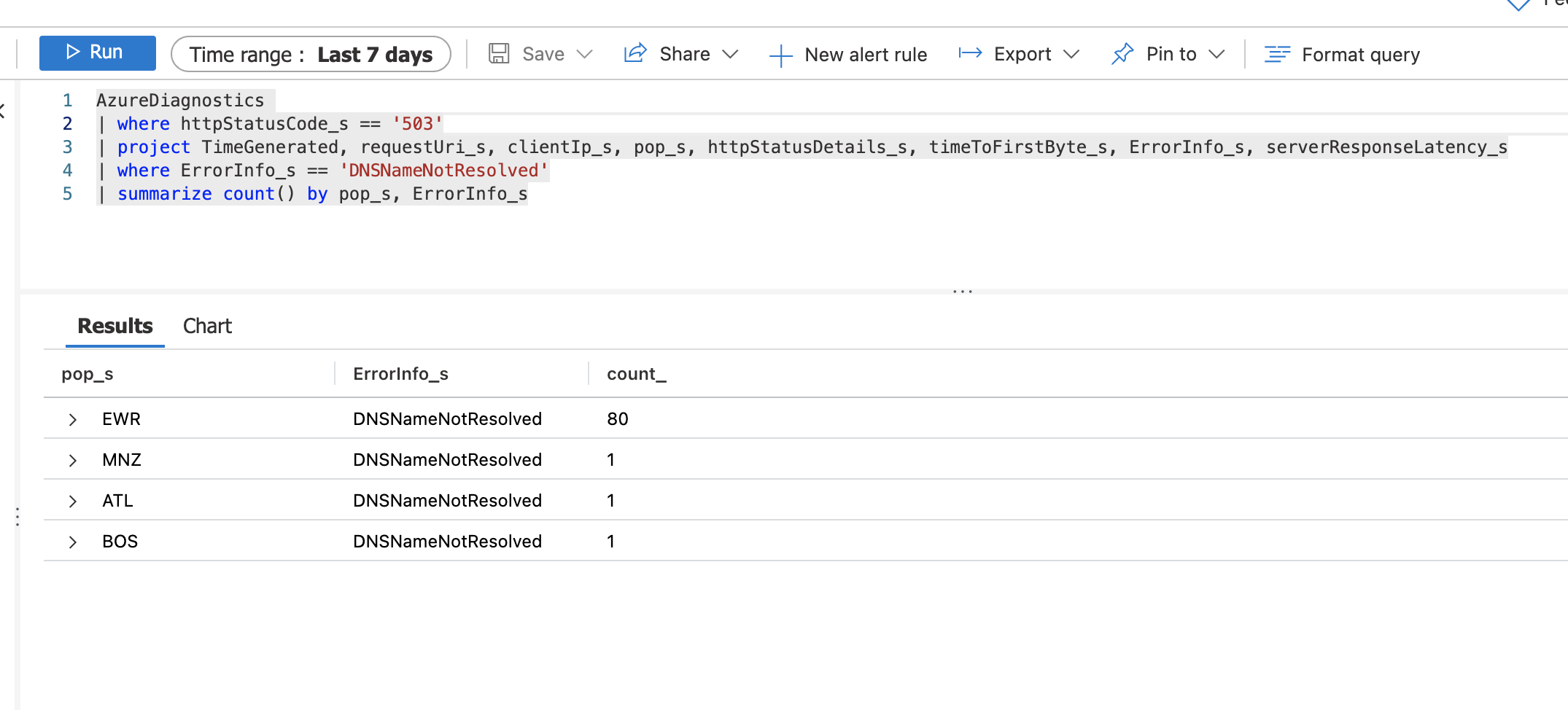

If I examine the ErrorInfo_s field of the 503 responses, I can see that:

- 80% of the 503s have the DNSNameNotResolved error.

- 20% of the 503s have the OriginTimeout error.
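
For reference, that breakdown is just a count of ErrorInfo_s values over the 503 rows. A minimal Python sketch of the tally, assuming the log rows have been exported as dictionaries. The `rows` sample is made up to mirror the 80/20 split, and the `httpStatusCode_s` field name is my assumption about the export, not something shown in this post:

```python
from collections import Counter

def error_breakdown(rows):
    """Return {ErrorInfo_s: percentage} over the rows whose status is 503."""
    errors = [r["ErrorInfo_s"] for r in rows if r["httpStatusCode_s"] == "503"]
    counts = Counter(errors)
    total = sum(counts.values())
    if not total:
        return {}
    return {name: 100.0 * n / total for name, n in counts.items()}

# Made-up sample rows (not real log data), shaped to mirror the 80/20 split.
rows = (
    [{"httpStatusCode_s": "503", "ErrorInfo_s": "DNSNameNotResolved"}] * 8
    + [{"httpStatusCode_s": "503", "ErrorInfo_s": "OriginTimeout"}] * 2
    + [{"httpStatusCode_s": "200", "ErrorInfo_s": "NoError"}] * 5
)
print(error_breakdown(rows))
```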

For the sake of this Q&A, I'm more concerned about the DNSNameNotResolved error. According to https://learn.microsoft.com/en-us/azure/frontdoor/front-door-diagnostics:

"DNSNameNotResolved: The server name or address couldn't be resolved."

If I look at timeToFirstByte_s for these 503 DNSNameNotResolved requests, the value is greater than 11 seconds for every one of them.

If I look at what the origin FQDN resolves to from a DNS lookup, I see something like this (I replaced any identifying IP addresses or DNS records with x's):

;; ANSWER SECTION:

xxx.azure-api.net. 800 IN CNAME apimgmttmhzxxxx.trafficmanager.net.

apimgmttmhzxxxx.trafficmanager.net. 200 IN CNAME xxx-01.regional.azure-api.net.

xxx-01.regional.azure-api.net. 800 IN CNAME apixxx.eastus2.cloudapp.azure.com.

apixxx.eastus2.cloudapp.azure.com. 10 IN A 20.x.x.x (WHERE 20.x.x.x is the IP of our API Management proxy)

You can see that resolving xxx.azure-api.net means following a chain of four DNS records (three CNAMEs and one A record) before a request can reach our API Management service.
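
To make the TTL concern concrete, here is a minimal Python sketch that walks the chain from the answer section above and reports the shortest TTL. It only parses the redacted text pasted in this post (the `ANSWER` string), so the names are the same placeholders, and the helper names `parse_answer` and `walk_chain` are my own:

```python
ANSWER = """\
xxx.azure-api.net. 800 IN CNAME apimgmttmhzxxxx.trafficmanager.net.
apimgmttmhzxxxx.trafficmanager.net. 200 IN CNAME xxx-01.regional.azure-api.net.
xxx-01.regional.azure-api.net. 800 IN CNAME apixxx.eastus2.cloudapp.azure.com.
apixxx.eastus2.cloudapp.azure.com. 10 IN A 20.x.x.x
"""

def parse_answer(text):
    """Parse dig-style answer lines into (name, ttl, rtype, target) tuples."""
    records = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 5 or parts[2] != "IN":
            continue  # skip blank lines and anything that is not a record
        name, ttl, _cls, rtype, target = parts[:5]
        records.append((name, int(ttl), rtype, target))
    return records

def walk_chain(records, start):
    """Follow CNAMEs from `start` until a non-CNAME record; return the hops."""
    by_name = {rec[0]: rec for rec in records}
    hops, current = [], start
    # The length guard stops the walk if the records ever form a loop.
    while current in by_name and len(hops) < len(records):
        hop = by_name[current]
        hops.append(hop)
        if hop[2] != "CNAME":
            break
        current = hop[3]
    return hops

hops = walk_chain(parse_answer(ANSWER), "xxx.azure-api.net.")
shortest = min(ttl for _name, ttl, _rtype, _target in hops)
print(f"{len(hops)} records in the chain, shortest TTL {shortest}s")
```

If I read this correctly, the full chain can only stay cached for as long as its shortest-lived record, which is the 10-second A record here, so a resolver that honours TTLs has to re-query at least the last hop roughly every 10 seconds.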

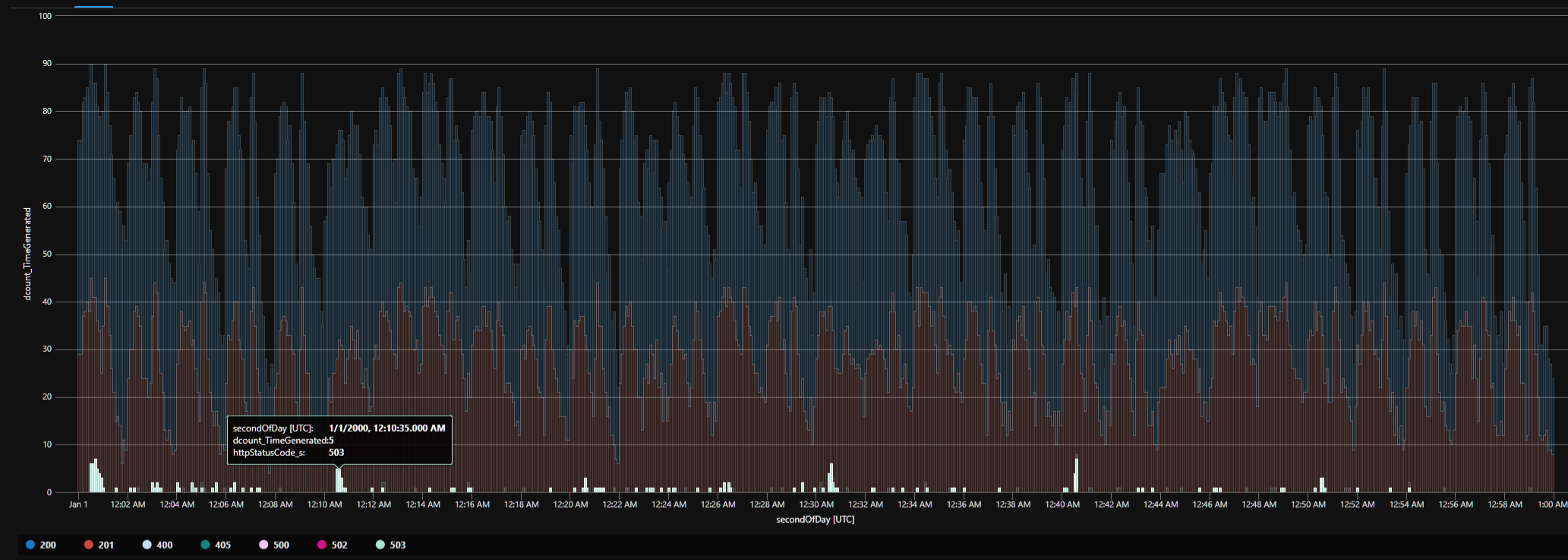

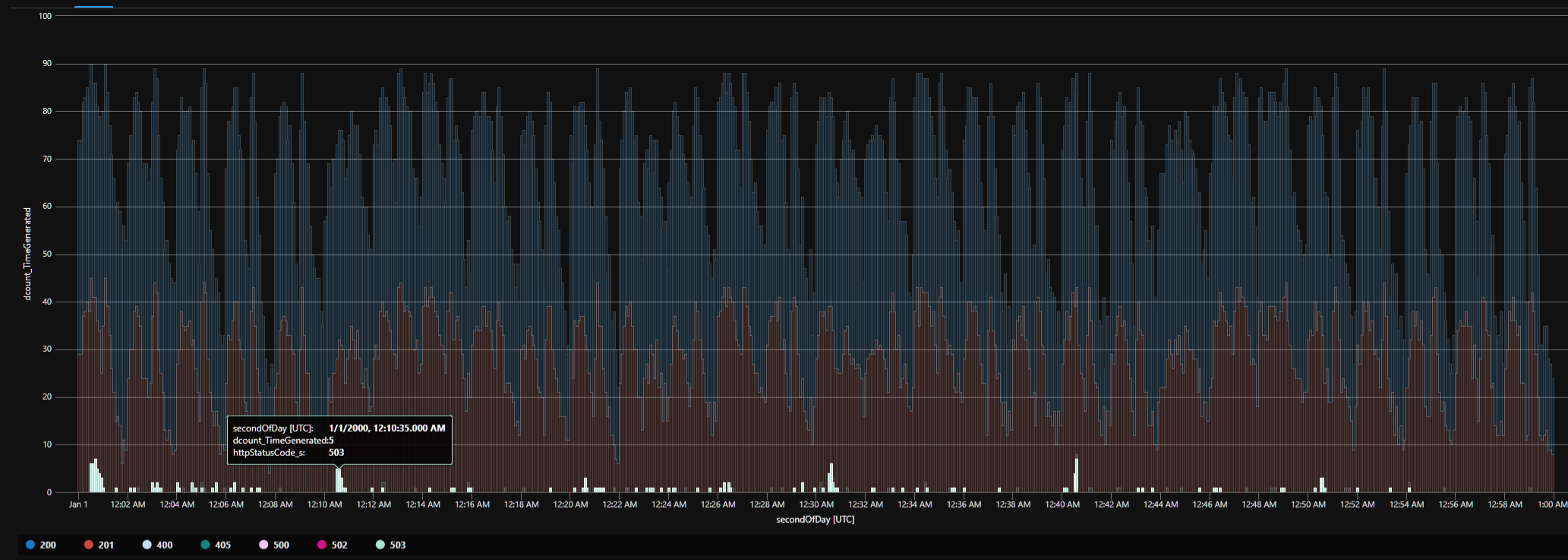

If I chart the data with a KQL query, I can see that the request volume remains flat, yet the 503s still spike every 10 minutes.
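
To double-check the ten-minute spacing outside of the KQL query, the same idea can be sketched in Python: bucket the 503 timestamps per minute and measure the gap between spike buckets. This assumes the timestamps have been exported from the logs. The `sample` data and the `threshold` value below are synthetic and purely illustrative:

```python
from collections import Counter
from datetime import datetime, timedelta

def per_minute(timestamps):
    """Count events per one-minute bucket."""
    return Counter(ts.replace(second=0, microsecond=0) for ts in timestamps)

def spike_gaps(counts, threshold):
    """Return gaps in minutes between consecutive buckets at or above threshold."""
    spikes = sorted(bucket for bucket, n in counts.items() if n >= threshold)
    return [int((b - a).total_seconds() // 60) for a, b in zip(spikes, spikes[1:])]

# Synthetic timestamps (not real log data): five errors at minutes 0, 10 and 20,
# plus a single stray error at minute 3 that stays below the threshold.
base = datetime(2024, 1, 1, 12, 0)
sample = [base + timedelta(minutes=m, seconds=s) for m in (0, 10, 20) for s in range(5)]
sample.append(base + timedelta(minutes=3))

print(spike_gaps(per_minute(sample), threshold=5))
```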

I considered using the IP address of the API Management service as the origin instead, to bypass the DNS lookups entirely. But I think that introduces a different problem with TLS termination: Front Door would then connect to the backend at https://<ip-address>, which fails with TLS errors caused by a certificate name mismatch (even though I have the certificate name check disabled). That is for a different Q&A to iron out, though.

Is Azure DNS not fast enough to respond to these requests from Azure Front Door? What else should I look at? What are my options here to eliminate these DNSNameNotResolved errors?
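
One check I can run myself is a small probe that resolves the origin FQDN repeatedly and records how long each lookup takes and whether it fails. Below is a minimal Python sketch of that idea using the standard `socket.getaddrinfo` call. The `probe` and `summarize` helpers and their parameters are my own naming. It measures resolution from wherever the script runs, not from the Front Door edge, so at best it shows whether the DNS chain itself is slow or flaky:

```python
import socket
import time

def probe(hostname, attempts=5, interval=0.0, resolver=socket.getaddrinfo):
    """Resolve `hostname` repeatedly; return one (elapsed_seconds, ok) per attempt."""
    results = []
    for _ in range(attempts):
        start = time.monotonic()
        try:
            resolver(hostname, 443)
            ok = True
        except socket.gaierror:
            ok = False  # the name could not be resolved on this attempt
        results.append((time.monotonic() - start, ok))
        time.sleep(interval)
    return results

def summarize(results):
    """Return (failure_count, slowest_seconds) for a list of probe results."""
    failures = sum(1 for _elapsed, ok in results if not ok)
    slowest = max((elapsed for elapsed, _ok in results), default=0.0)
    return failures, slowest

# Example call, using the placeholder name from the DNS output above:
# print(summarize(probe("xxx.azure-api.net", attempts=60, interval=1.0)))
```

The `resolver` parameter exists only so the probe can be exercised with a stub instead of real DNS. With an interval shorter than the 10-second TTL on the last hop, some of the attempts should hit a cold cache.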

Thank you