Hello @Ram,

Thanks for the response. As I understand it, you are using Logic Apps to trigger your ADF pipelines when a new file arrives in Blob Storage. Please correct me if I have misunderstood.

I'm not sure if you are already aware of this, but I'd like to share it because it might help you redesign your flow. You can trigger ADF pipelines based on events in a storage account, such as the arrival or deletion of a file in an Azure Blob Storage account. Azure Data Factory and Synapse pipelines integrate natively with Azure Event Grid, which lets you trigger pipelines on such events. By using this native functionality in ADF, you can remove Logic Apps from your application flow entirely.
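As a rough illustration of what a storage event trigger looks like, the sketch below builds a trigger definition as a Python dict and prints it as JSON. It assumes the `BlobEventsTrigger` JSON shape used by ADF storage event triggers (`blobPathBeginsWith`, `blobPathEndsWith`, `ignoreEmptyBlobs`, `scope`, `events`). The trigger name `NewFileTrigger`, the pipeline `ProcessNewFile`, its parameters `sourceFolder`/`sourceFile`, the container and folder names, and the `<...>` placeholders in the scope are all hypothetical. In practice you would usually create the trigger in the ADF UI.

```python
import json

# Hypothetical storage account scope; replace the <...> placeholders with your own values.
SCOPE = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Storage/storageAccounts/<storage-account>"
)


def blob_created_trigger(name, pipeline_name, container, folder, suffix):
    """Build a storage event trigger definition that starts `pipeline_name`
    whenever a blob ending in `suffix` is created under container/folder."""
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                # Path filter format is /<container>/blobs/<folder>/
                "blobPathBeginsWith": f"/{container}/blobs/{folder}/",
                "blobPathEndsWith": suffix,
                "ignoreEmptyBlobs": True,
                "scope": SCOPE,
                # Fire only on file arrival, not on deletion.
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    # Hand the new blob's folder and file name to the pipeline.
                    "parameters": {
                        "sourceFolder": "@triggerBody().folderPath",
                        "sourceFile": "@triggerBody().fileName",
                    },
                }
            ],
        },
    }


trigger = blob_created_trigger("NewFileTrigger", "ProcessNewFile", "input", "incoming", ".csv")
print(json.dumps(trigger, indent=2))
```

Passing `@triggerBody().folderPath` and `@triggerBody().fileName` as parameters lets a single pipeline process whichever file caused the event.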

For more information about storage event triggers that run a pipeline when a blob is created or deleted, please refer to this document: Create a trigger that runs a pipeline in response to a storage event

Here is a demo video on the same topic by a community volunteer: Event based Triggers in Azure Data Factory

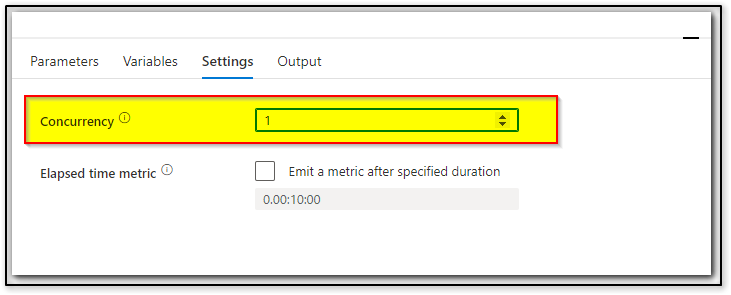

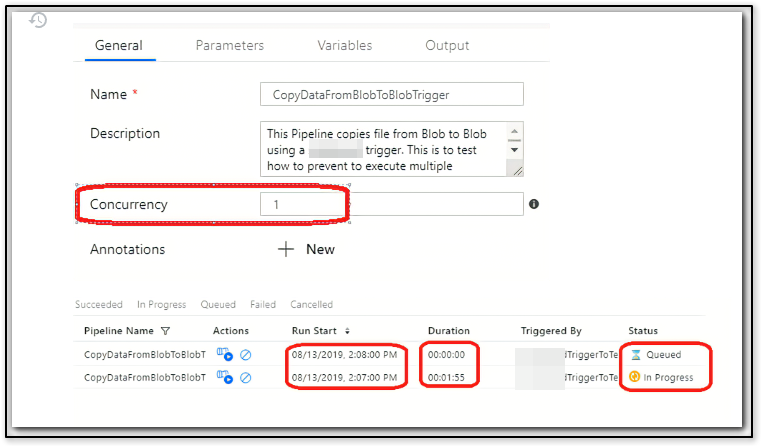

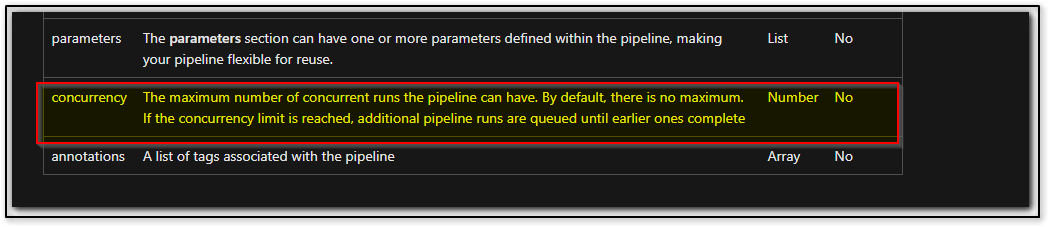

For the second part, where you would like each pipeline run to start only after the previous run has completed: to achieve this, set Concurrency to 1 in the pipeline's Settings tab. This setting limits the number of simultaneous runs of the pipeline. With concurrency set to 1, if you receive 3 files at the same time, only 1 run will be active (in progress); the other two runs will be queued and started only after the first run completes. The same applies between pipeline run 2 and pipeline run 3.
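To make the queueing behaviour concrete, here is a small Python model (not ADF code) of what a concurrency limit does to runs that are triggered at the same time. It assumes queued runs start in arrival order as soon as a slot frees up, and it ignores queue limits and trigger latency; `schedule_runs` is a hypothetical helper for illustration only.

```python
import heapq


def schedule_runs(arrivals, durations, max_concurrency=1):
    """Toy model of a pipeline concurrency limit.

    arrivals[i] is when run i is triggered and durations[i] is how long it
    takes. Returns a (start, end) tuple per run, assuming queued runs start
    in arrival order as soon as a slot frees up.
    """
    order = sorted(range(len(arrivals)), key=lambda i: arrivals[i])
    running = []  # min-heap of end times of runs currently in progress
    result = [None] * len(arrivals)
    for i in order:
        # Release slots whose runs have finished by the time run i is triggered.
        while running and running[0] <= arrivals[i]:
            heapq.heappop(running)
        start = arrivals[i]
        if len(running) >= max_concurrency:
            # All slots busy: run i is queued until the earliest active run ends.
            start = heapq.heappop(running)
        end = start + durations[i]
        heapq.heappush(running, end)
        result[i] = (start, end)
    return result


# Three files arrive at time 0; with concurrency 1 the runs execute one after another.
for n, (start, end) in enumerate(schedule_runs([0, 0, 0], [5, 3, 4]), 1):
    print(f"run {n}: start={start}, end={end}")
```

With `max_concurrency=1` the three runs come out as (0, 5), (5, 8) and (8, 12): each run waits for the previous one, which is the dependency behaviour described above.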

This concurrency setting will help you in both cases, whether you use the ADF event trigger or keep triggering the pipeline from your Logic Apps.

For more information about pipeline concurrency, please refer to this document: https://learn.microsoft.com/en-us/azure/data-factory/concepts-pipelines-activities?tabs=data-factory#pipeline-json
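In the pipeline JSON, the setting from the Settings tab appears as the `concurrency` property under `properties`. The sketch below builds such a definition in Python to show where it sits. The pipeline name `ProcessNewFile` and the placeholder `Wait` activity are hypothetical stand-ins for your real pipeline and activities.

```python
import json


def pipeline_with_concurrency(name, activities, concurrency=1):
    """Return a pipeline definition whose 'concurrency' property caps the
    number of simultaneous runs; further runs are queued by the service."""
    return {
        "name": name,
        "properties": {
            "activities": activities,
            "concurrency": concurrency,
        },
    }


# Placeholder activity; replace with your real Copy or Data Flow activities.
wait = {
    "name": "PlaceholderWait",
    "type": "Wait",
    "typeProperties": {"waitTimeInSeconds": 1},
}
pipeline = pipeline_with_concurrency("ProcessNewFile", [wait])
print(json.dumps(pipeline, indent=2))
```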

Related Stack Overflow thread: Azure Data Factory V2 Trigger Pipeline only when current execution finished

Hope this helps. Let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or click the upvote button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how