Expose Spark metrics to Prometheus

I want to expose Spark cluster metrics in Azure Databricks to Prometheus using the PrometheusServlet sink. I tried editing the metrics.properties file to look like this:

*.sink.prometheusServlet.class=org.apache.spark.metrics.sink.PrometheusServlet

*.sink.prometheusServlet.path=/metrics/prometheus

master.sink.prometheusServlet.path=/metrics/master/prometheus

applications.sink.prometheusServlet.path=/metrics/applications/prometheus

I copy the file into place with this init script:

#!/bin/bash

cp /dbfs/databricks/metrics-conf/metrics.properties /databricks/spark/conf/

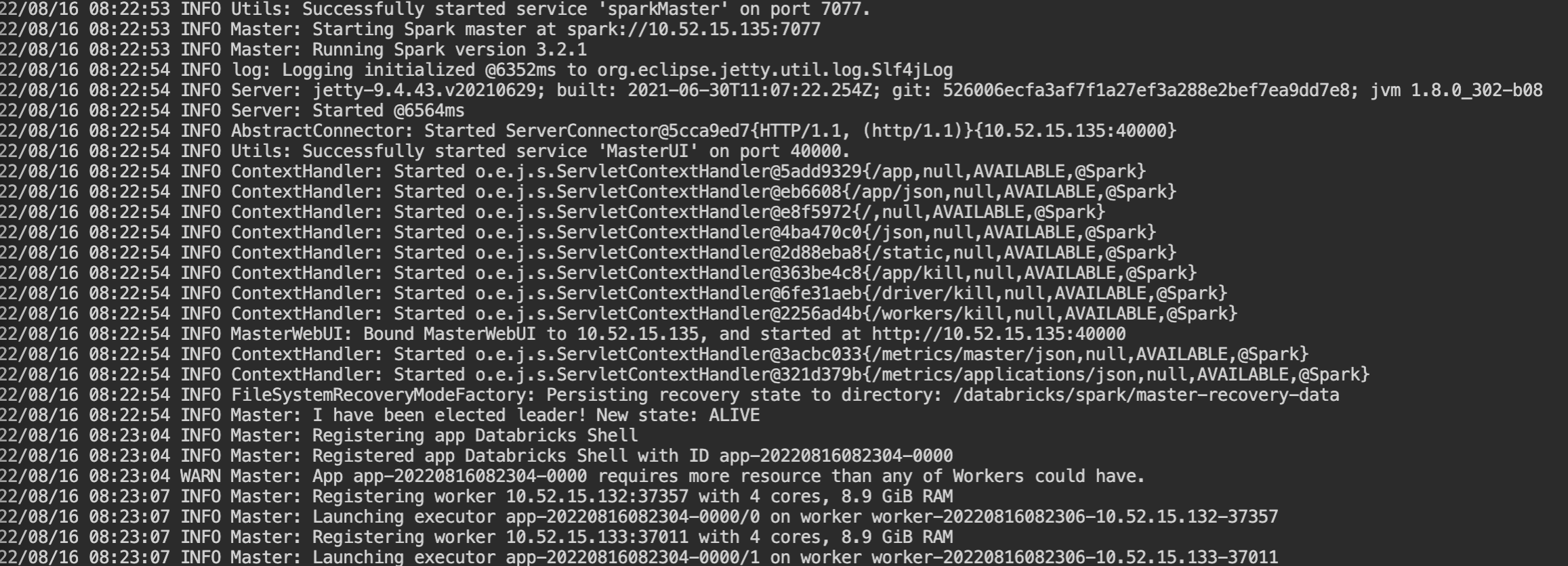

The file is edited successfully, but I still cannot access the Prometheus endpoint. When I checked the Spark logs, I saw no sign that a Prometheus servlet was created.
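For reference, this is roughly how the "file is edited" check could be scripted rather than done by eye. It is only a sketch: `has_prometheus_sink` is a hypothetical helper name, not part of Spark or Databricks, and it only confirms that an uncommented sink class line is present in the file. It says nothing about whether Spark actually loaded that file.

```shell
#!/bin/bash

# Hypothetical helper (not a Spark or Databricks tool): succeeds if the given
# metrics.properties file contains an uncommented line that registers the
# PrometheusServlet sink for some instance (e.g. "*", "master", "driver").
has_prometheus_sink() {
  local conf_file="$1"
  grep -Eq '^[[:space:]]*[^#[:space:]]+\.sink\.prometheusServlet\.class=org\.apache\.spark\.metrics\.sink\.PrometheusServlet[[:space:]]*$' "$conf_file"
}
```

It could be called at the end of the init script, for example `has_prometheus_sink /databricks/spark/conf/metrics.properties && echo "sink line present"`, so the result shows up in the init script log.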