Calling the Databricks from ADF does not change where the code is executed/run. If the Databricks is hosted in Azure (Azure Databricks), then it will run in Azure.

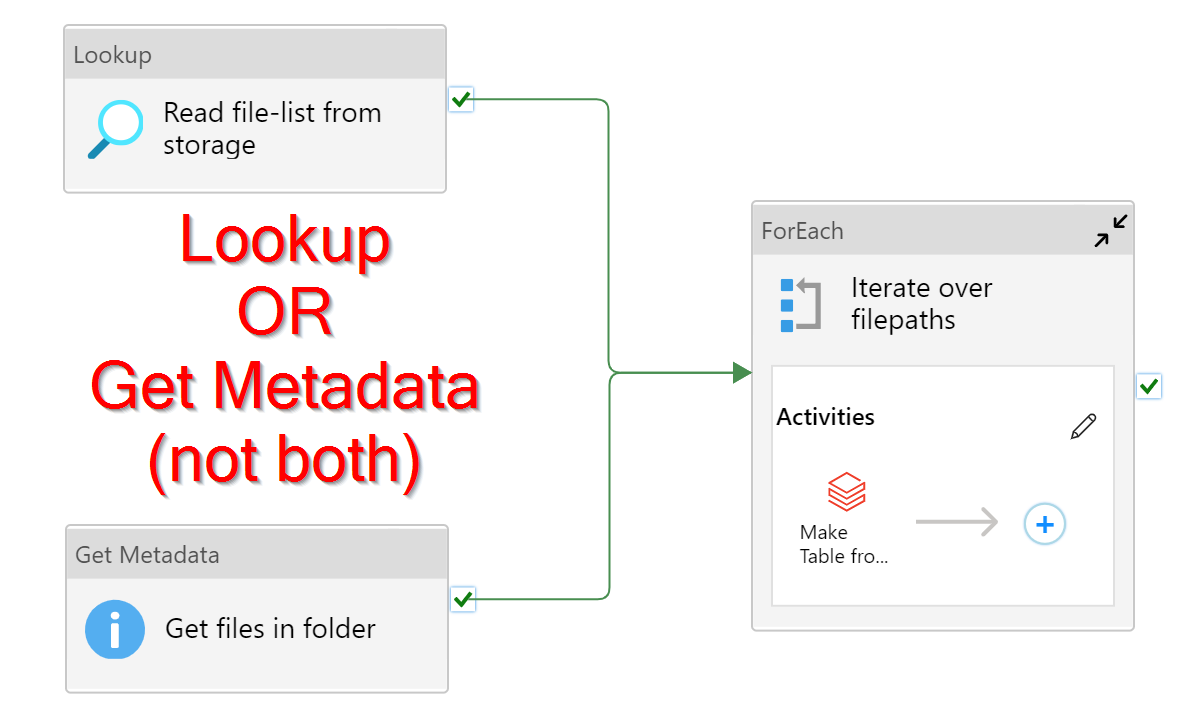

So, to do this, the notebook for reading the file and creating the table, must take a parameter. This parameter is how we will pass in the filepath. How we get the filepath depends on where it is stored. Below I explain the two possibilities. Only do one of them, not both.

- If the location of these files are not in the default dbfs, but instead are in mounted dbfs folder, then we may be able to get at them from Data Factory. For example, the files are stored in Data Lake gen 2, but you mounted them so it is dbfs:/mymount/tables . We can use Data Factory Get Metadata on the Data Lake storage to list the files.

- However if the files are kept in the default dbfs, then I am not aware of any way for Data Factory to see them. Have something (could be a Databricks notebook, or just 1-off effort) write a file to Azure storage (blob storage or Data Lake gen 2). This file should contain/list the paths of each table-file, 1 per line. We will use a Data Factory Lookup Activity to read these.

Be aware, both Get Metadata and Lookup will return at most 4MB. If your list of files/tables is more than 5000 records or more than 4 MB, then neither method will work. Let me know if this is the case.

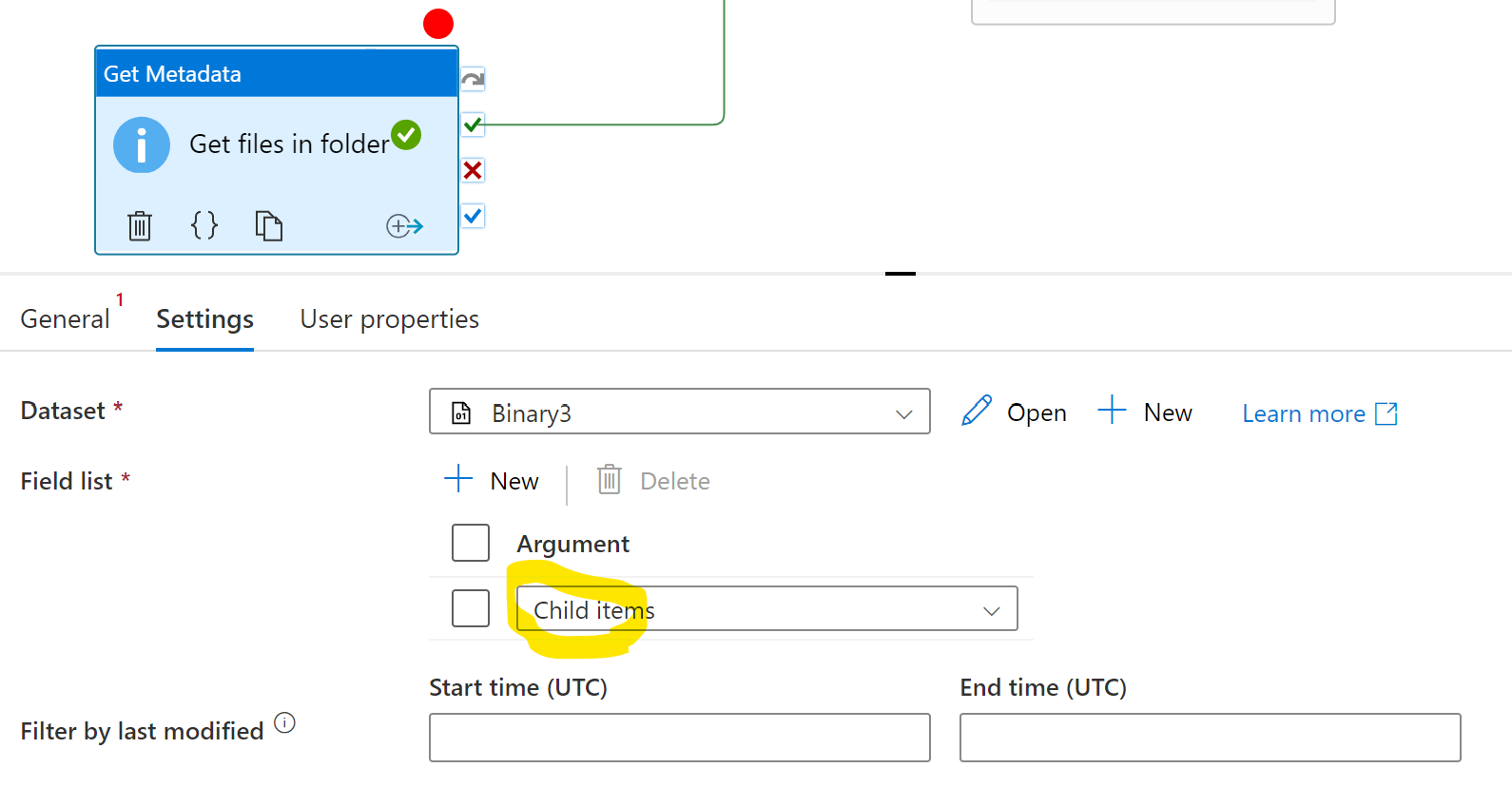

For option #1, create a linked service for that storage service. Create a dataset for that linked service. I recommend a binary dataset type, as we only care about the name, not contents. The dataset should point to the folder containing the table-files.

The Get Metadata activity's Field List should be Child items **.

** this operation gets both files and folders. If you have sub-folders, add Item type to the Get Metadata activity's Field list. We can use a Filter activity to screen out the subfolders.

Do a debug run to test whether all is well so far.

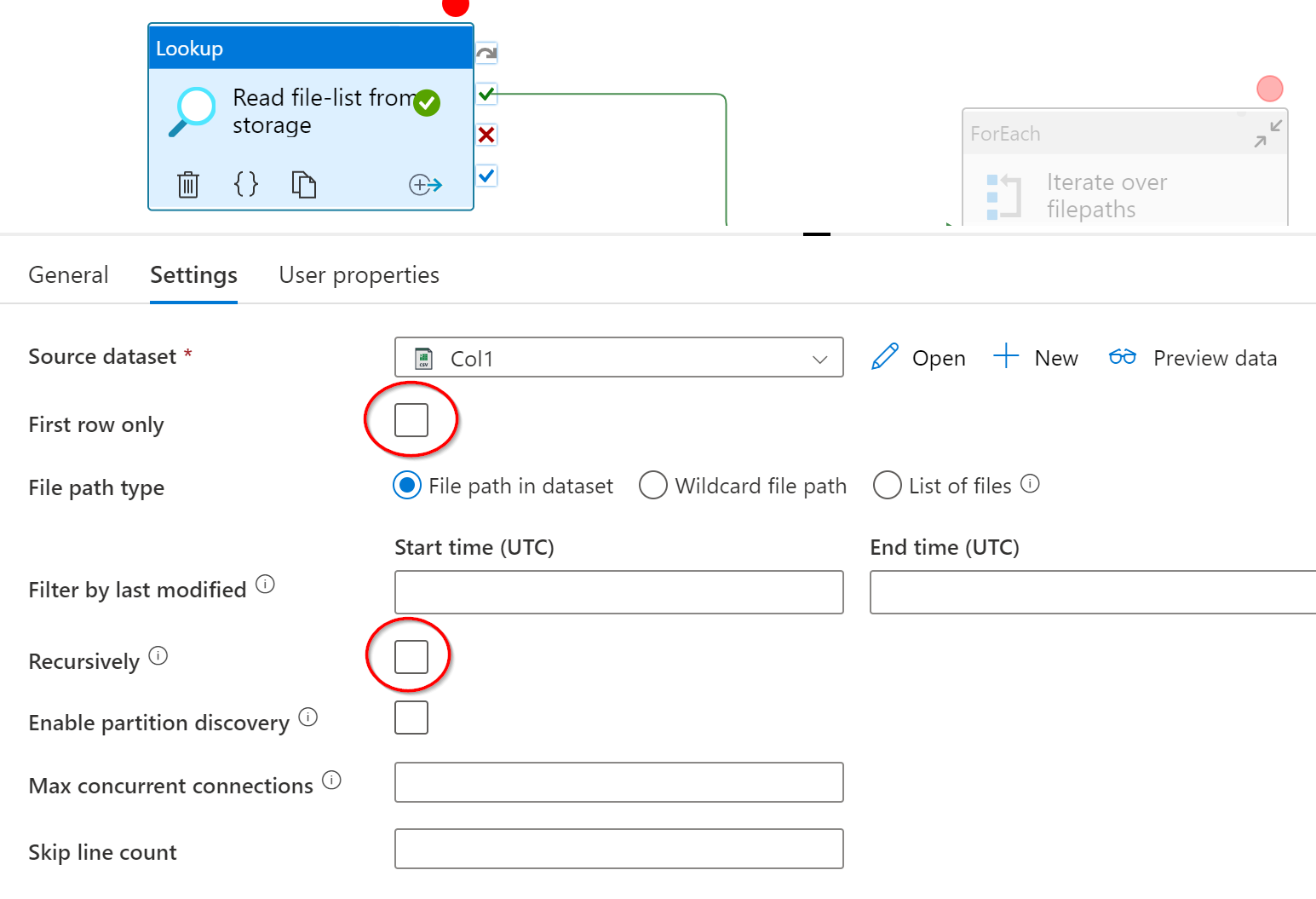

For option #2, create a linked service for where you stored the list-file. Create a delimited text dataset for that linked service. Point directly to that list-file. If you gave the file a header, check the dataset's First row as header options, otherwise uncheck it. Given we have only 1 column, the rest of the dataset options should be fine as-is, unless you put commas in filenames.

The Lookup activity should use our new dataset. Make sure the First row only and Recursively are turned OFF. Do a "preview data" to check all is working properly.

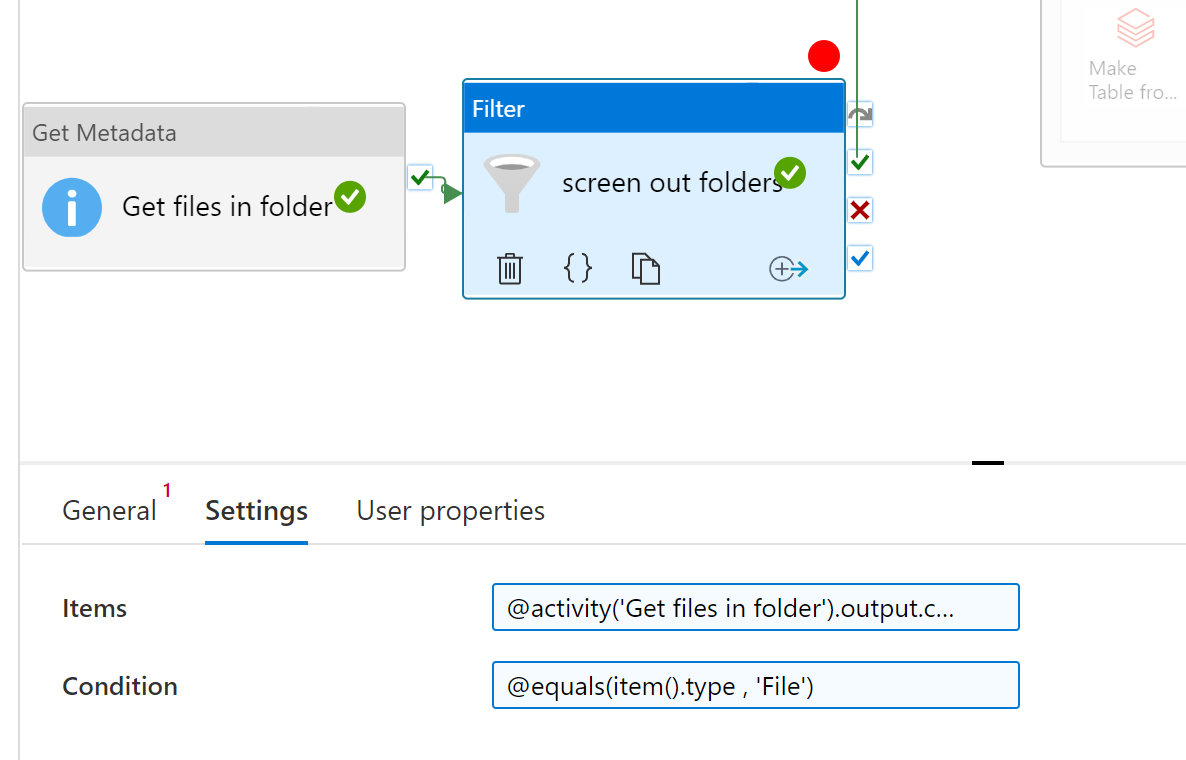

If option #1 AND you have subfolders, I'm sure you don't want to give subfolder name to the databricks. Add a filter activity between the Get Metadata and ForEach. The Filter's items would be @activity('Get files in folder').output.childItems The condition would be @equals(item().type , 'File')

Click the red circle above the filter activity to turn on a breakpoint. This allows you to do a debug run and only run everythign before and including this activity, skipping everything after. Do a debug run to test.

If option #1, then the ForEach 's items should be @activity('Get files in folder').output.childItems

If option #1 AND you have subfolders, then the ForEach 's items should be @activity('screen out folders').output.Value

If option #2, then the ForEach 's items should be @activity('Read file-list from storage').output.value

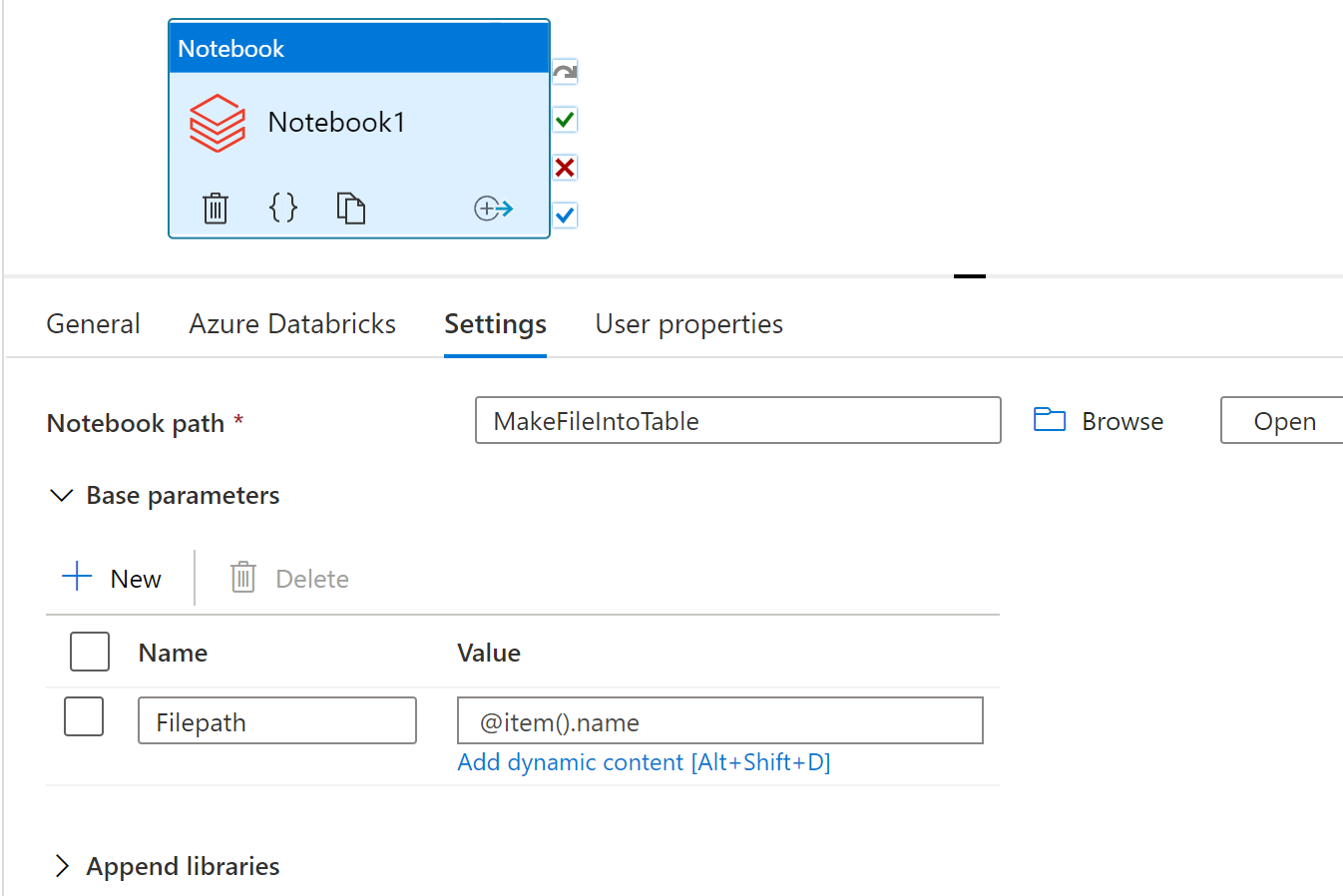

Now go inside the ForEach and add an Azure Databricks activity (Notebook / python / jar). If you do not already have a Databricks linked service, make one.

If option #1, the parameter value should be @item().name

If option #2 WITHOUT header, the value should be @item().prop_0

If option #2 WITH header, the value should be @item().myColumnNameGoesHere

Have I missed anything @Vinodh247 ?