I've struggled a lot with storage triggers, and they can be really tough to get to the bottom of (from a support point of view).

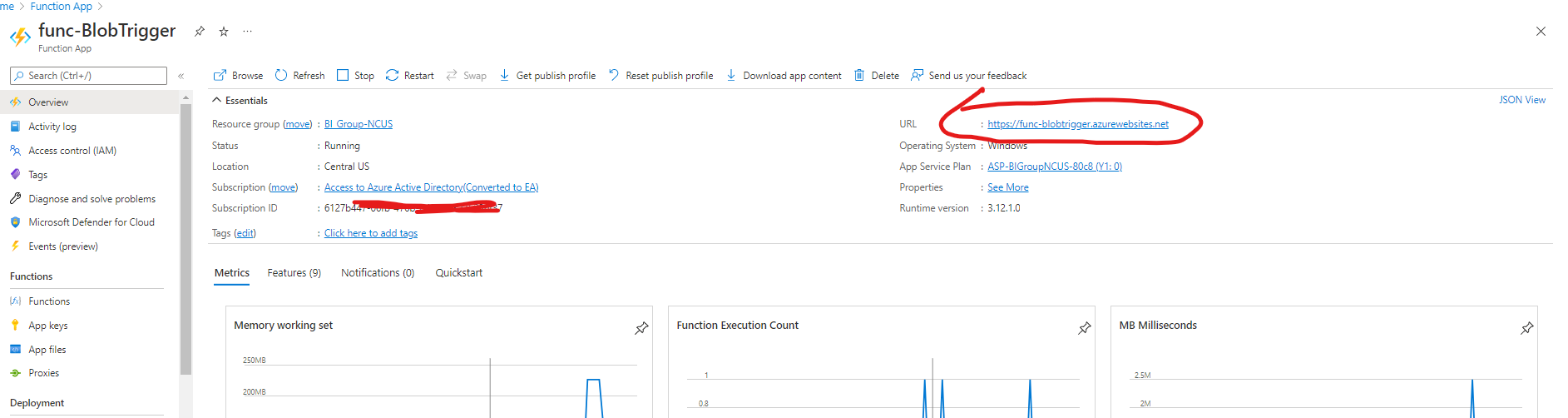

I'd recommend stopping and starting your function as a first step. Depending on how you deploy, the trigger can require this. If it seems to work right away, wait a day and try again to be sure. In many of my cases the trigger worked fine during active testing but stopped firing after a few hours.

For testing purposes, I'd take a look at your storage account. I've had issues with storage account versions (I always use v2 storage now because I found it more stable - the storage created when setting up the function is v1 by default). If you can, stand up a new storage account of the other version from the one you're using and point your trigger there, just to see if it behaves differently.

Also on the storage side, we had to switch to Locally Redundant Storage (LRS), but I can't recall whether this fixed the issue or was just left over from testing. If you're testing the storage layer, this is another variation to try.

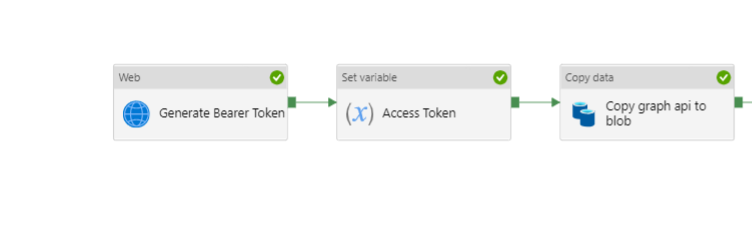

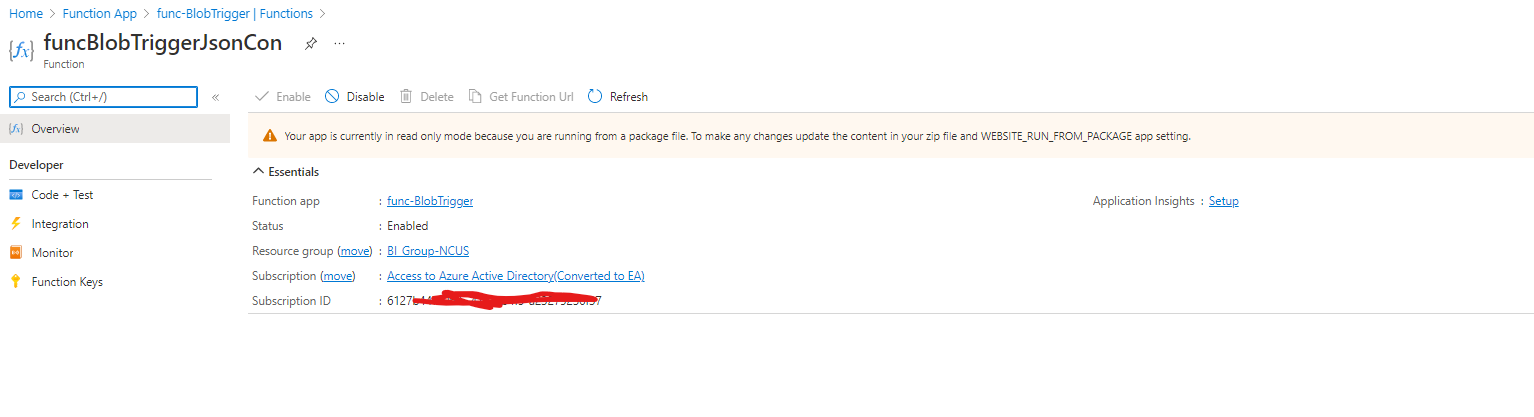

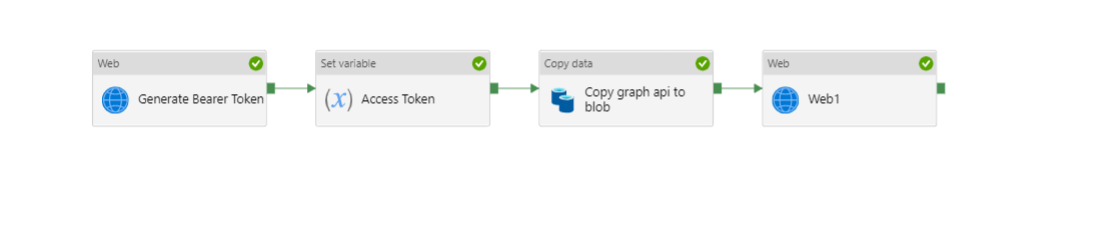

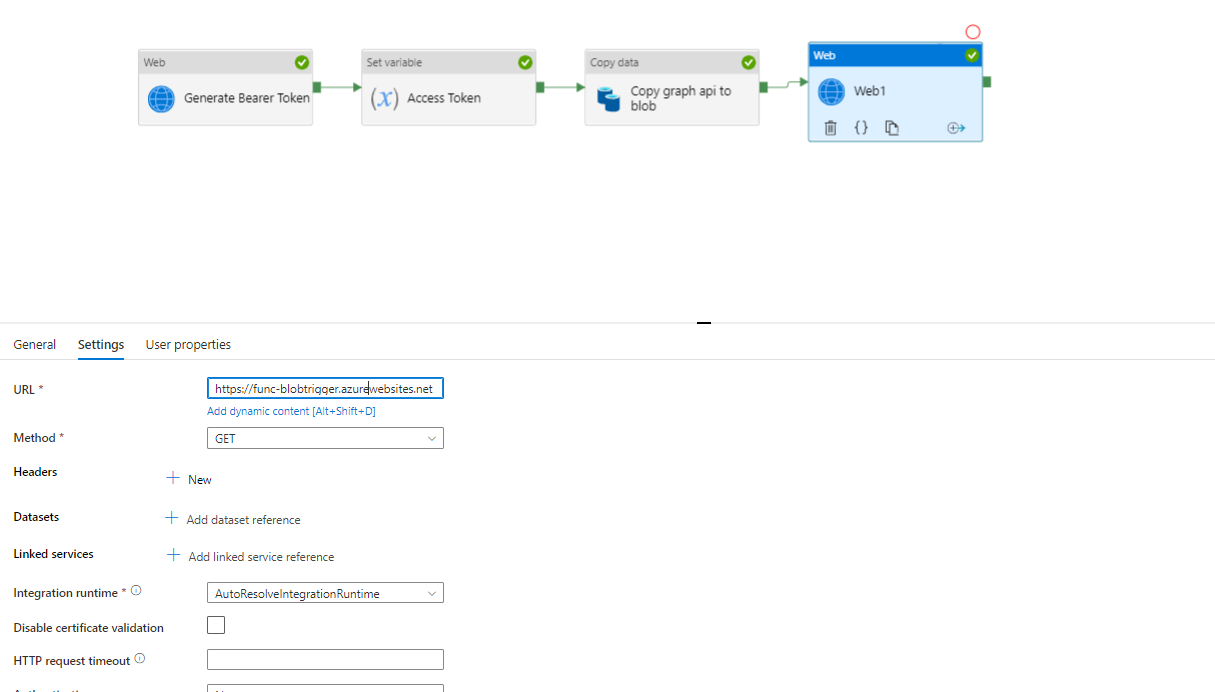

As an alternative approach, you could use an HTTP trigger on your function, add an Azure Function action to your pipeline, and pass in the filename as a parameter. This is what I tend to do now, partly because I've lost trust in storage triggers after odd issues just like this one, and partly because I prefer explicit triggering actions that I can see over implicit ones that can be fired inadvertently.
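To make the HTTP approach concrete, here is a minimal sketch of what the caller sends, written in plain Python so you can test the function by hand before wiring up the pipeline. The URL, the route name `process-file`, and the `filename` field in the JSON body are all placeholders I've made up for illustration; your function decides what it actually expects. It also assumes the function uses function-level authorization, in which case the key can go in the `x-functions-key` header.

```python
import json
import urllib.request


def build_function_request(function_url: str, function_key: str, filename: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that hands a filename to an HTTP-triggered function.

    The {"filename": ...} body shape is an assumption for this sketch;
    match it to whatever your function actually parses.
    """
    body = json.dumps({"filename": filename}).encode("utf-8")
    return urllib.request.Request(
        function_url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Function-level key; assumes the function is not set to anonymous access.
            "x-functions-key": function_key,
        },
    )


if __name__ == "__main__":
    # Hypothetical URL and key, replace with your own values.
    req = build_function_request(
        "https://example.azurewebsites.net/api/process-file",
        "<function-key>",
        "incoming/report.csv",
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually call the function:
    # urllib.request.urlopen(req, timeout=30)
```

The pipeline's Azure Function action does the equivalent of this call for you, so the filename shows up as an explicit input in the pipeline run rather than relying on a storage event being raised.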

The bottom line, though, is that the issue is unlikely to be with the trigger itself and more likely with the event that gets raised on the storage account when the file is created. In other words, it's a storage event issue rather than a trigger issue.

Hope this helps.