Hello,

I have been developing a pipeline that runs a data flow as an activity at the very end. We have a DEV and a PROD environment.

Everything worked in the DEV environment, but when I moved to PROD the data flow failed with the following error:

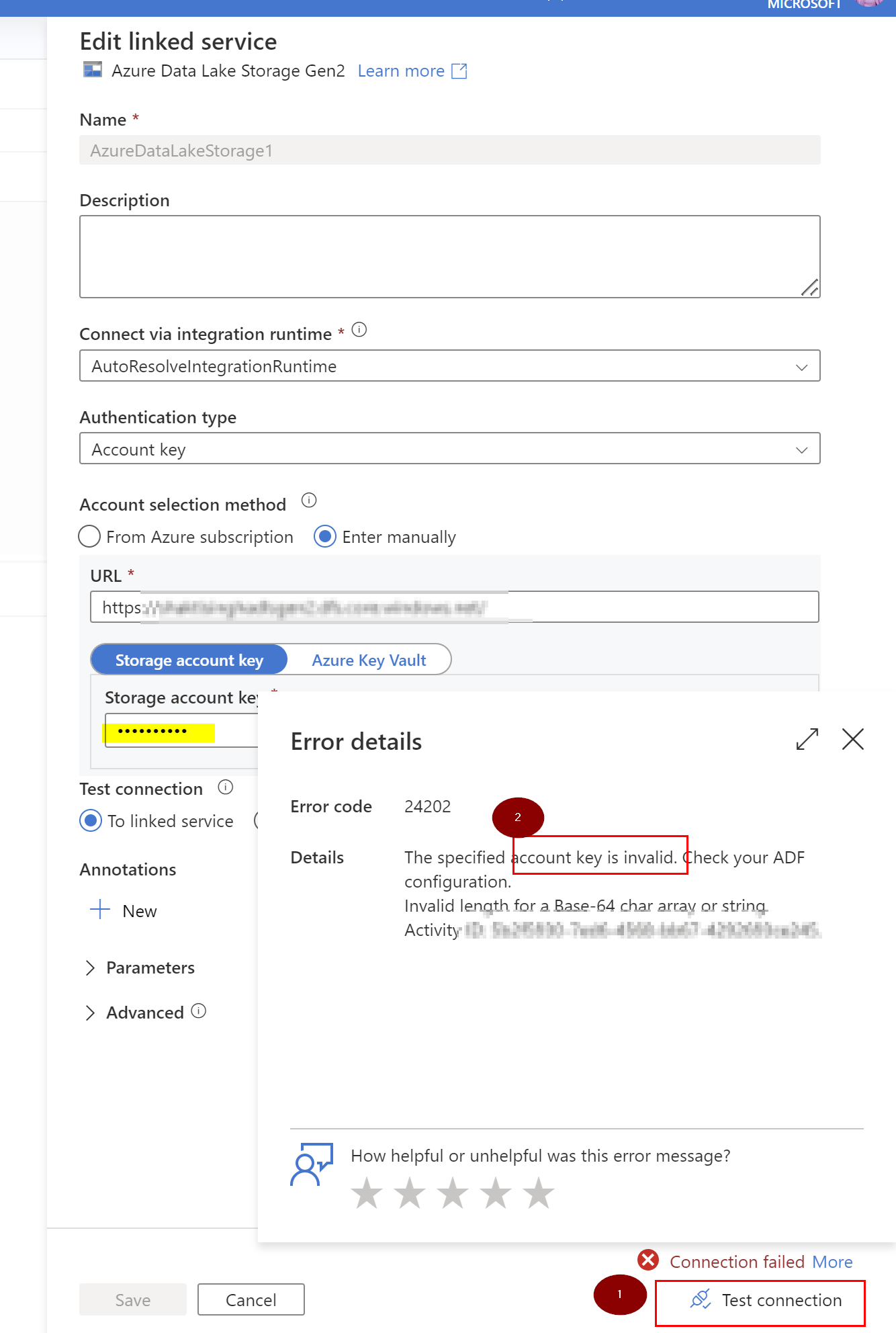

Error Code: DFExecutorUserError

Failure Type: User configuration issue

Details: Job failed due to reason: at source 'Australia' invalid account key.

The error clearly comes from the sources of the data flow. Is there a reason why everything works in the development environment but fails in production?
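
One way to narrow down a "works in DEV, fails in PROD" difference is to export the JSON definition of the STL linked service (and anything it references, such as a Key Vault secret reference) from each factory and compare them field by field. The Python sketch below does a recursive diff of two JSON-like definitions. It is a minimal sketch: the function name `diff_definitions` and the sample definitions (account URLs, secret names) are hypothetical placeholders, not taken from this factory, and real secrets should be redacted before comparing.

```python
from typing import Any


def diff_definitions(dev: Any, prod: Any, path: str = "") -> list[str]:
    """Recursively list the paths where two JSON-like definitions differ."""
    differences: list[str] = []
    if isinstance(dev, dict) and isinstance(prod, dict):
        for key in sorted(set(dev) | set(prod)):
            child = f"{path}.{key}" if path else key
            if key not in dev:
                differences.append(f"{child}: only in PROD")
            elif key not in prod:
                differences.append(f"{child}: only in DEV")
            else:
                differences.extend(diff_definitions(dev[key], prod[key], child))
    elif isinstance(dev, list) and isinstance(prod, list):
        if len(dev) != len(prod):
            differences.append(f"{path}: list length {len(dev)} vs {len(prod)}")
        # Compare the overlapping items position by position.
        for i, (d, p) in enumerate(zip(dev, prod)):
            differences.extend(diff_definitions(d, p, f"{path}[{i}]"))
    elif dev != prod:
        differences.append(f"{path}: {dev!r} vs {prod!r}")
    return differences


if __name__ == "__main__":
    # Hypothetical, redacted linked service definitions for illustration only.
    dev_ls = {"name": "STL", "properties": {"type": "AzureBlobFS", "typeProperties": {
        "url": "https://devaccount.dfs.core.windows.net/",
        "accountKey": {"type": "AzureKeyVaultSecret", "secretName": "stl-key"}}}}
    prod_ls = {"name": "STL", "properties": {"type": "AzureBlobFS", "typeProperties": {
        "url": "https://prodaccount.dfs.core.windows.net/",
        "accountKey": {"type": "AzureKeyVaultSecret", "secretName": "stl-connection-string"}}}}
    for line in diff_definitions(dev_ls, prod_ls):
        print(line)
```

Differences in URLs are expected between environments; the interesting entries are the ones that change how the key is supplied.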

I checked the linked services referenced by the sources, and other pipelines in the PROD environment use them without any issues.
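
Since the error text is "invalid account key", a quick sanity check is what the PROD key value actually looks like, for example whether it is a bare storage account key or something else such as a full connection string. Azure storage account keys are base64 strings that decode to 64 bytes (512 bits). The Python sketch below classifies a candidate value on that basis. It assumes you can read the value (for example from wherever the PROD linked service takes it, which the post does not say); the helper names `classify_storage_secret` and `extract_account_key` are hypothetical, and this is a heuristic, not a check that the key matches the account.

```python
import base64
import binascii
from typing import Optional


def classify_storage_secret(value: str) -> str:
    """Roughly classify a value that is supposed to be a storage account key."""
    candidate = value.strip()
    # A connection string carries the key inside an "AccountKey=..." segment.
    if "AccountKey=" in candidate:
        return "connection_string"
    try:
        # validate=True rejects characters outside the base64 alphabet.
        raw = base64.b64decode(candidate, validate=True)
    except (binascii.Error, ValueError):
        return "not_base64"
    # Storage account keys are 512-bit values, i.e. 64 bytes once decoded.
    return "account_key" if len(raw) == 64 else "unexpected_length"


def extract_account_key(connection_string: str) -> Optional[str]:
    """Return the AccountKey segment of a connection string, or None if absent."""
    for segment in connection_string.split(";"):
        # partition() splits on the first "=", so base64 padding ("==") is kept.
        name, _, val = segment.partition("=")
        if name.strip() == "AccountKey":
            return val.strip()
    return None
```

A result of `connection_string`, `not_base64`, or `unexpected_length` for the PROD value (but `account_key` for DEV) would be worth following up on.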

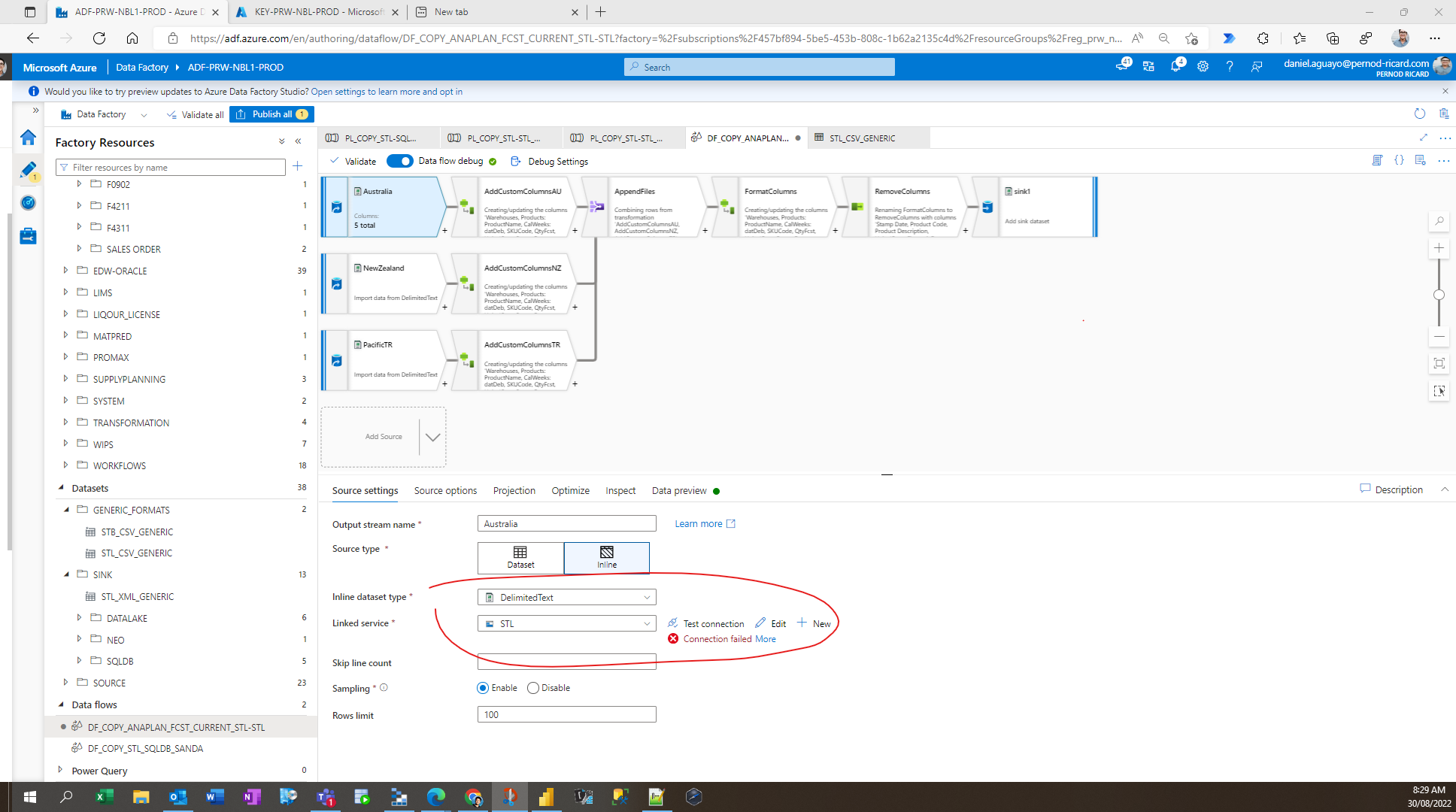

Source screenshots below:

The data flow script is also below:

    {
      "name": "DF_COPY_ANAPLAN_FCST_CURRENT_STL-STL",
      "properties": {
        "type": "MappingDataFlow",
        "typeProperties": {
          "sources": [
            {
              "linkedService": {
                "referenceName": "STL",
                "type": "LinkedServiceReference"
              },
              "name": "Australia"
            },
            {
              "linkedService": {
                "referenceName": "STL",
                "type": "LinkedServiceReference"
              },
              "name": "NewZealand"
            },
            {
              "linkedService": {
                "referenceName": "STL",
                "type": "LinkedServiceReference"
              },
              "name": "PacificTR"
            }
          ],
          "sinks": [
            {
              "linkedService": {
                "referenceName": "STL",
                "type": "LinkedServiceReference"
              },
              "name": "sink1"
            }
          ],
          "transformations": [
            { "name": "AddCustomColumnsAU" },
            { "name": "AddCustomColumnsNZ" },
            { "name": "AddCustomColumnsTR" },
            { "name": "AppendFiles" },
            { "name": "FormatColumns" },
            { "name": "RemoveColumns" }
          ],
          "scriptLines": [
            "source(output(",
            " Warehouses as string,",
            " {Products: ProductName} as string,",
            " {CalWeeks: datDeb} as string,",
            " SKUCode as string,",
            " QtyFcst as float",
            " ),",
            " allowSchemaDrift: true,",
            " validateSchema: false,",
            " limit: 100,",
            " ignoreNoFilesFound: true,",
            " format: 'delimited',",
            " fileSystem: 'prw-datalake-project-production',",
            " columnDelimiter: '\t',",
            " escapeChar: '\\',",
            " quoteChar: '\\\"',",
            " columnNamesAsHeader: true,",
            " wildcardPaths:['prw/Anaplan/Anaplan_Exports/FcstW txt Export - Neo Output/Australia/.txt']) ~> Australia",
            "source(output(",
            " Warehouses as string,",
            " {Products: ProductName} as string,",
            " {CalWeeks: datDeb} as string,",
            " SKUCode as string,",
            " QtyFcst as float",
            " ),",
            " allowSchemaDrift: true,",
            " validateSchema: false,",
            " ignoreNoFilesFound: true,",
            " format: 'delimited',",
            " fileSystem: 'prw-datalake-project-production',",
            " columnDelimiter: '\t',",
            " escapeChar: '\\',",
            " quoteChar: '\\\"',",
            " columnNamesAsHeader: true,",
            " wildcardPaths:['prw/Anaplan/Anaplan_Exports/FcstW txt Export - Neo Output/NewZealand/.txt']) ~> NewZealand",
            "source(output(",
            " Warehouses as string,",
            " {Products: ProductName} as string,",
            " {CalWeeks: datDeb} as string,",
            " SKUCode as string,",
            " QtyFcst as float",
            " ),",
            " allowSchemaDrift: true,",
            " validateSchema: false,",
            " ignoreNoFilesFound: true,",
            " format: 'delimited',",
            " fileSystem: 'prw-datalake-project-production',",
            " columnDelimiter: '\t',",
            " escapeChar: '\\',",
            " quoteChar: '\\\"',",
            " columnNamesAsHeader: true,",
            " wildcardPaths:['prw/Anaplan/Anaplan_Exports/FcstW txt Export - Neo Output/PacificTR/**.txt']) ~> PacificTR",
            "Australia derive({Units/Case} = '',",
            " {Stamp Date} = currentDate(),",
            " Channel = case(equals(left(Warehouses,1),'A'),'PRA','')) ~> AddCustomColumnsAU",
            "NewZealand derive({Units/Case} = '',",
            " {Stamp Date} = currentDate(),",
            " Channel = case(equals(left(Warehouses,1),'A'),'PNZ','')) ~> AddCustomColumnsNZ",
            "PacificTR derive({Units/Case} = '',",
            " {Stamp Date} = currentDate(),",
            " Channel = case(equals(left(Warehouses,1),'A'),'AUS', case(equals(left(Warehouses,1),'N'),'NZT',''))) ~> AddCustomColumnsTR",
            "AddCustomColumnsAU, AddCustomColumnsNZ, AddCustomColumnsTR union(byName: true)~> AppendFiles",
            "AppendFiles derive({Stamp Date} = {Stamp Date},",
            " {Product Code} = substringIndex(SKUCode,'_',-1),",
            " {Product Description} = {Products: ProductName},",
            " {Units/Case} = {Units/Case},",
            " Channel = Channel,",
            " Date = toDate({CalWeeks: datDeb}, 'yyyy-MM-dd'),",
            " Branch = substringIndex(Warehouses,'-',1),",
            " {Volume (CA)} = QtyFcst) ~> FormatColumns",
            "FormatColumns select(mapColumn(",
            " {Stamp Date},",
            " {Product Code},",
            " {Product Description},",
            " {Units/Case},",
            " Channel,",
            " Date,",
            " Branch,",
            " {Volume (CA)}",
            " ),",
            " skipDuplicateMapInputs: true,",
            " skipDuplicateMapOutputs: true) ~> RemoveColumns",
            "RemoveColumns sink(allowSchemaDrift: true,",
            " validateSchema: false,",
            " format: 'delimited',",
            " fileSystem: 'prw-datalake-project-production',",
            " folderPath: 'prw/Anaplan/Anaplan_Exports/FcstW txt Export - Neo Output/zzDataflow Output/',",
            " columnDelimiter: ',',",
            " escapeChar: '\\',",
            " quoteChar: '\\\"',",
            " columnNamesAsHeader: true,",
            " partitionFileNames:['FcstW_Current_Output.csv'],",
            " umask: 0022,",
            " preCommands: [],",
            " postCommands: [],",
            " skipDuplicateMapInputs: true,",
            " skipDuplicateMapOutputs: true,",
            " partitionBy('hash', 1)) ~> sink1"
          ]
        }
      }
    }
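
All three sources reference the same linked service (STL), so the failure being reported at 'Australia' may simply reflect which source was evaluated first. To compare the sources side by side, the Python sketch below pulls each `source(...) ~> Name` statement out of the `scriptLines` array and lists its `fileSystem` and `wildcardPaths`. It is a regex-based sketch that assumes the script keeps the shape shown above (one `source(` statement ending in `) ~> Name`); the name `summarize_sources` is hypothetical, and this is not a full parser for the data flow script language.

```python
import re


def summarize_sources(script_lines: list[str]) -> dict[str, dict]:
    """Map each source stream name to its fileSystem and wildcard paths."""
    script = "\n".join(script_lines)
    summary: dict[str, dict] = {}
    # Lazily match from "source(" up to the first ")" that is followed by "~> Name".
    for match in re.finditer(r"source\((.*?)\)\s*~>\s*(\w+)", script, re.S):
        body, name = match.group(1), match.group(2)
        fs = re.search(r"fileSystem:\s*'([^']*)'", body)
        paths = re.search(r"wildcardPaths:\s*\[([^\]]*)\]", body)
        summary[name] = {
            "fileSystem": fs.group(1) if fs else None,
            # Each path inside the [...] list is a single-quoted string.
            "wildcardPaths": re.findall(r"'([^']*)'", paths.group(1)) if paths else [],
        }
    return summary


if __name__ == "__main__":
    # Trimmed excerpt in the same shape as the scriptLines above.
    lines = [
        "source(output(",
        " Warehouses as string,",
        " QtyFcst as float",
        " ),",
        " fileSystem: 'prw-datalake-project-production',",
        " wildcardPaths:['prw/Australia/.txt']) ~> Australia",
        "source(output(",
        " Warehouses as string",
        " ),",
        " fileSystem: 'prw-datalake-project-production',",
        " wildcardPaths:['prw/PacificTR/**.txt']) ~> PacificTR",
    ]
    for name, info in summarize_sources(lines).items():
        print(name, info)
```

Run against the full `scriptLines` above, this would show all three sources reading from `prw-datalake-project-production`, with the Australia and NewZealand wildcard paths ending in `/.txt` and the PacificTR path ending in `/**.txt`.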