You don't say how far along you are in getting this done, so I'll need to make some assumptions. The first is that you're using a pipeline with a simple 'Copy data' activity to drop the contents of the file into SQL.

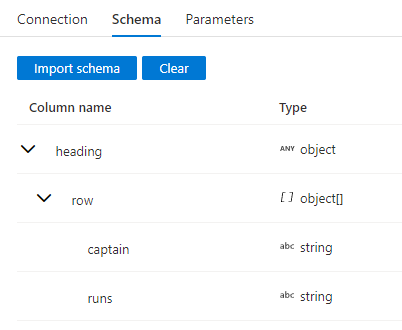

Start by creating your source dataset pointing to your input file. I'm going to assume you know how to do this; when you import the schema, you should have something similar to the following.

From there, create your sink dataset pointing to your SQL server and destination table. Don't forget to select 'Auto create table' if you want the copy to create the destination table for you.

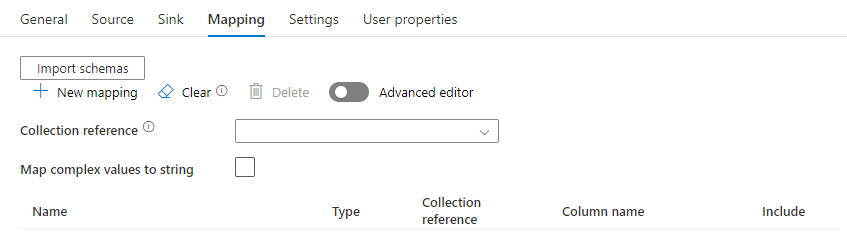

Click on the Mappings tab, and you should see something like this:

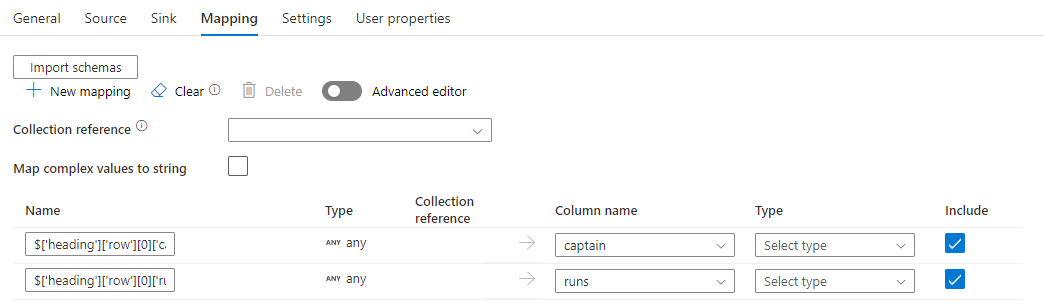

Click 'Import Schemas', and you should get something like the following:

This is starting to look promising - we can see that it is going to expose 'captain' and 'runs' columns.

But if you look at the Name definition, you'll see it is defined as "$['heading']['row'][0]['captain']". The [0] indicates that it will only extract the first row (row zero). We don't want that.

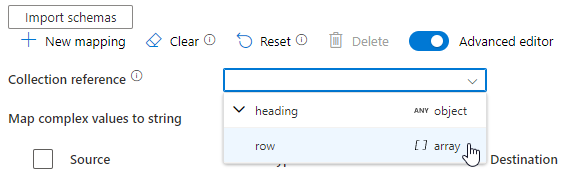

If you turn on the Advanced editor and choose an array from the Collection reference dropdown (in this case 'row'), you are telling the mapping that you want to unroll the array and map each array element to a row.

It should automatically translate those mappings for you, assuming you had the Advanced editor turned on. If you didn't, you can just choose these mappings manually.
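To make the difference concrete, here is a minimal Python sketch of what the two mappings do. It is not how Data Factory implements anything. Only the `heading` / `row` / `captain` / `runs` shape comes from the path above; the sample values and the function names are made up for illustration.

```python
# Hypothetical sample document: the nesting mirrors the mapping path,
# but the values are invented for this illustration.
doc = {
    "heading": {
        "row": [
            {"captain": "A", "runs": 10},
            {"captain": "B", "runs": 20},
            {"captain": "C", "runs": 30},
        ]
    }
}


def first_row_only(d):
    """Mimics $['heading']['row'][0][...]: a single output row from element zero."""
    first = d["heading"]["row"][0]
    return [{"captain": first["captain"], "runs": first["runs"]}]


def unroll_rows(d):
    """Mimics a collection reference on 'row': one output row per array element."""
    return [{"captain": r["captain"], "runs": r["runs"]} for r in d["heading"]["row"]]


print(first_row_only(doc))  # one row
print(unroll_rows(doc))     # one row per element of 'row'
```

The first function is what the `[0]` mapping gives you; the second is the behaviour you get once 'row' is set as the collection reference.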

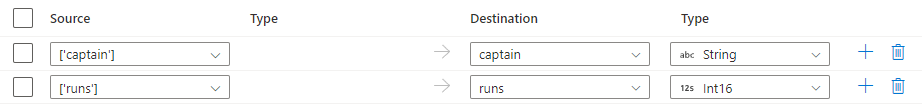

You should now have mappings that look like the above, though I did set those Type entries manually.
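If you open the pipeline's code view, the mapping should end up as a `translator` block on the Copy activity. The sketch below builds roughly what I would expect it to look like and prints it as JSON. Treat it as an assumption rather than a copy of your pipeline: the sink column names are assumed to match the source, and the `String` and `Int32` types are guesses standing in for whatever you set manually.

```python
import json

# Assumed shape of the Copy activity's translator after choosing 'row'
# as the collection reference. Sink names and types are illustrative guesses.
translator = {
    "type": "TabularTranslator",
    "mappings": [
        {
            # Paths under the collection reference are relative to each array element.
            "source": {"path": "['captain']"},
            "sink": {"name": "captain", "type": "String"},
        },
        {
            "source": {"path": "['runs']"},
            "sink": {"name": "runs", "type": "Int32"},
        },
    ],
    # The array to unroll: one sink row per element.
    "collectionReference": "$['heading']['row']",
}

print(json.dumps(translator, indent=2))
```

The thing to check in your own pipeline is that the `[0]` has gone from the column paths and that `collectionReference` points at the array.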

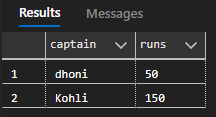

From there, save and run the pipeline, either with debug or by trigger, and it should happily drop the data in the sink table for you.

I hope that answers the question for you.
