Hello @Gokhan Varol,

Welcome to the MS Q&A platform.

Please correct me if my understanding is wrong: you are trying to upsert all columns from the Parquet file (source) into Delta (sink) in the data flow, joining on the primary key to perform the upsert. During the upsert, you get an error message when the columns are detected automatically.

Could you please provide the detailed error message?

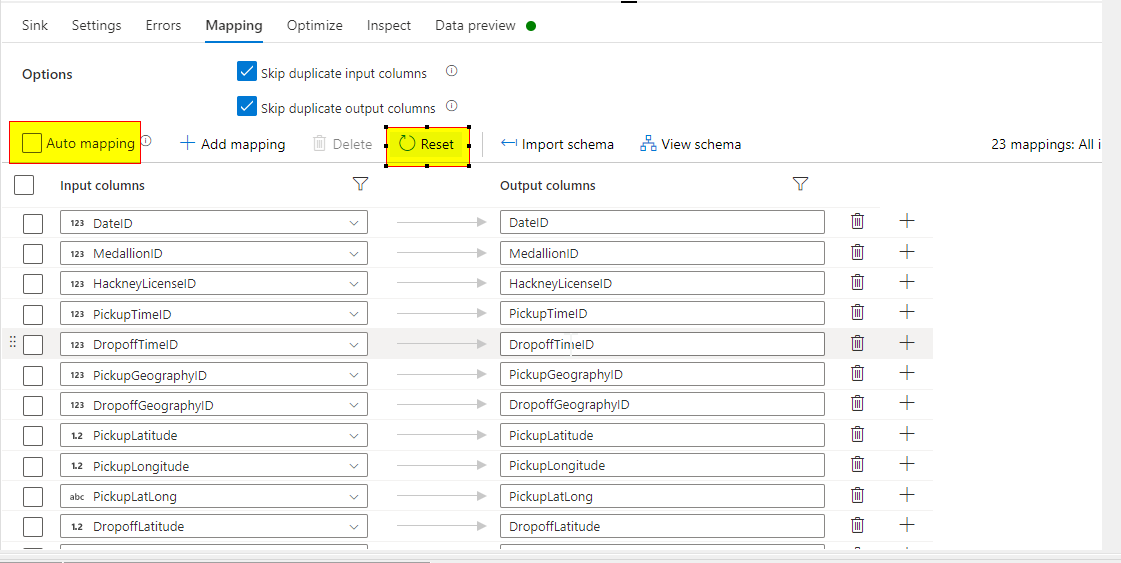

Also, could you please try unchecking Automapping, mapping the columns manually, and checking whether the error still occurs?