Thank you for your post! I did some further research and confirmed that the Application Map feature in Application Insights does in fact use sampled data. Sampling affects every log-based experience in Application Insights (Performance, Failures, Transaction Search, Application Map, and so on), as described in the Application Insights sampling documentation. This is because these experiences use the itemCount field on each ingested telemetry item to determine how many real items that record represents.

The following summary explains how itemCount works and how it influences log-based experiences, using the scenario below:

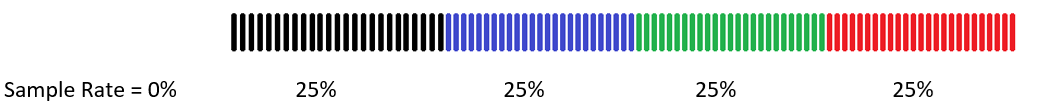

To explain this behavior, it helps to walk through a controlled example. Let's say my web application generated 100 distinct telemetry records. For this hypothetical scenario, let's assume 25 different users each made a single request to my website, and each of those requests generated 1 Request telemetry record, 1 Dependency telemetry record, 1 Trace Message telemetry record, and 1 Exception telemetry record. If all 25 users completed their requests, we'd have the 100 raw telemetry records in the image below: 25 requests in black, 25 dependencies in blue, 25 traces in green, and 25 exceptions in red.

Now, let's assume that Application Insights did NOT need to sample the telemetry: your web application sends all 100 records to our ingestion endpoint. The SDK packages all 100 telemetry records into JSON payloads and sends them to our ingestion service. Every one of those 100 records has its itemCount field set to 1, because no records are dropped for sampling and each record represents only itself. With nothing sampled out, we keep every record, and running sum(itemCount) against the requests telemetry returns 25, which matches the 25 requests (25% of the 100 telemetry records produced by the web application).
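To make the unsampled case concrete, here is a minimal Python sketch. It assumes each telemetry record is a plain dict with hypothetical `operation_Id`, `type`, and `itemCount` keys; this is a stand-in for illustration, not the SDK's actual data model. The `sum_item_count` helper mimics what a log-based `sum(itemCount)` query does per telemetry type.

```python
from collections import Counter

TELEMETRY_TYPES = ["request", "dependency", "trace", "exception"]

def generate_unsampled(users: int = 25) -> list[dict]:
    """Each user makes one request that emits one record of each type.
    With no sampling, every record represents only itself (itemCount = 1)."""
    return [
        {"operation_Id": f"op-{u}", "type": t, "itemCount": 1}
        for u in range(users)
        for t in TELEMETRY_TYPES
    ]

def sum_item_count(records: list[dict]) -> Counter:
    """Log-based estimate per type, similar to: summarize sum(itemCount) by type."""
    totals = Counter()
    for r in records:
        totals[r["type"]] += r["itemCount"]
    return totals

records = generate_unsampled()
totals = sum_item_count(records)
print(len(records))       # 100 raw records
print(totals["request"])  # 25 requests, matching reality
```

Because every itemCount is 1, the log-based totals equal the raw counts for all four types.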

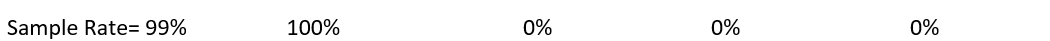

Now that we know how telemetry behaves without sampling, let's look at what happens during extreme sampling. Let's say you use ingestion sampling or fixed-rate sampling because you are getting too much telemetry, and you only want to keep 1% of all records, dropping the other 99%. (Application Insights expresses this as a sampling percentage of 1, since that setting is the share of telemetry that is kept.) Applied to our 100 telemetry records, that means only a single record out of all 100 can be kept. Let's assume the sampler keeps one of the black request records. It has to drop the other 99 records (24 requests, 25 dependencies, 25 traces, and 25 exceptions). Because we keep one record and drop 99, the itemCount field on that single request record is set to 100: this one ingested record represents all 100 telemetry records produced by the web app.
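The arithmetic behind that itemCount can be sketched in a few lines of Python. This is a simplified illustration, not the SDK or ingestion service code: it assumes each retained record stands in for 100 / p records when p percent of telemetry is kept, and it hard-codes the survivor as the first request record purely to mirror the example (a real sampler does not choose this way). The helper name `item_count_for` is hypothetical.

```python
def item_count_for(sampling_percentage: float) -> float:
    """itemCount carried by each retained record when `sampling_percentage`
    percent of telemetry is kept (e.g. 1.0 means keep 1%, drop 99%)."""
    if not 0 < sampling_percentage <= 100:
        raise ValueError("sampling percentage must be in (0, 100]")
    return 100.0 / sampling_percentage

# 100 raw records: 25 users x (request, dependency, trace, exception)
raw = [
    {"type": t}
    for _ in range(25)
    for t in ("request", "dependency", "trace", "exception")
]

# Keep 1% of 100 records: exactly one survivor. To mirror the example,
# we keep the first request record; a real sampler chooses differently.
keep_pct = 1.0
survivor = dict(raw[0], itemCount=item_count_for(keep_pct))
dropped = len(raw) - 1

print(survivor)  # {'type': 'request', 'itemCount': 100.0}
print(dropped)   # 99
```

With no sampling (`keep_pct = 100`), the same formula gives an itemCount of 1, which is the unsampled case described earlier.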

Now, when you run the same log-based sum(itemCount) calculation, the telemetry reports that your web application processed 100 requests, when we know it really processed only 25. This is how extreme sampling can badly skew log-based query results: there is no way to keep just 1 of those 100 records and still represent the true values across the other telemetry types.
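The skew is easy to reproduce with a small Python sketch that compares the ground truth from the example against a log-based total computed from the single surviving record. The record shape and the `log_based_totals` helper are hypothetical simplifications of what a `summarize sum(itemCount) by type` query does.

```python
from collections import Counter

# Ground truth: what the web app actually did in the example.
actual = Counter({"request": 25, "dependency": 25, "trace": 25, "exception": 25})

# What was ingested after keeping 1 of the 100 records.
ingested = [{"type": "request", "itemCount": 100}]

def log_based_totals(records: list[dict]) -> Counter:
    """Rough equivalent of: summarize sum(itemCount) by type."""
    totals = Counter()
    for r in records:
        totals[r["type"]] += r["itemCount"]
    return totals

estimated = log_based_totals(ingested)
for t in ("request", "dependency", "trace", "exception"):
    print(f"{t:<10} actual={actual[t]:>3} estimated={estimated[t]:>3}")
```

Requests are overstated fourfold (100 versus 25), while dependencies, traces, and exceptions drop to zero because no record of those types survived.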

In practice it's not this simple, because the Application Insights SDK samples based on operation ID: it identifies an operation_Id to keep and then collects ALL of the telemetry for that operation, making sure every one of those records is ingested and saved. We do this so that your end-to-end experience is complete. When you review a failing operation in the end-to-end transaction view, you can see all of the telemetry for that single operation, so you know where in the code it was breaking and can get the exception details. If we also dropped telemetry items within each operation, that would help neither the sum(itemCount) computations nor the end-to-end investigation experience.

Because we sample by operation ID, you can also see wild fluctuations from day to day if your operations produce different amounts of telemetry. Say you had a single-page application running on a kiosk all day for multiple users. A single operation could produce 4,000 telemetry records on its own. If that operation ID is the one selected for ingestion, rather than other operations in your web app that produce only 1 to 3 records each, you could see a random spike in your sampling-adjusted counts.
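Here is a rough Python sketch of operation-level sampling. The sampling decision is made once per operation, so every record of a kept operation is ingested together with the same itemCount. The SHA-256 bucketing in `keep_operation` is a hypothetical stand-in; the real SDKs use their own hashing scheme, which this sketch does not reproduce. The workload (200 ordinary operations plus one 4,000-record kiosk operation) is also made up for illustration.

```python
import hashlib
from collections import Counter

def keep_operation(operation_id: str, sampling_percentage: float) -> bool:
    """Hypothetical sampler: hash the operation id into a stable bucket in
    [0, 10000) and keep the operation if the bucket is below the threshold.
    The same id always gets the same decision."""
    digest = hashlib.sha256(operation_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < sampling_percentage * 100

def sample_by_operation(records: list[dict], sampling_percentage: float) -> list[dict]:
    """Keep or drop whole operations; every kept record carries the same itemCount."""
    item_count = 100.0 / sampling_percentage
    return [
        dict(r, itemCount=item_count)
        for r in records
        if keep_operation(r["operation_Id"], sampling_percentage)
    ]

# 200 ordinary operations with 3 trace records each...
records = [{"operation_Id": f"op-{i}", "type": "trace"} for i in range(200) for _ in range(3)]
# ...plus one long-running kiosk SPA operation with 4,000 trace records.
records += [{"operation_Id": "kiosk-spa", "type": "trace"} for _ in range(4000)]

kept = sample_by_operation(records, sampling_percentage=10)
kept_ops = Counter(r["operation_Id"] for r in kept)

actual_traces = len(records)
estimated_traces = sum(r["itemCount"] for r in kept)
print("operations kept:", len(kept_ops))
print("kiosk operation kept:", keep_operation("kiosk-spa", 10))
print("actual traces:", actual_traces)
print("sum(itemCount) estimate:", estimated_traces)
```

At a 10% sampling percentage each kept record carries an itemCount of 10, so if `kiosk-spa` happens to be selected it alone contributes 4,000 × 10 = 40,000 to the estimate, against 4,600 traces actually produced. Whether it is selected depends only on its operation ID, which is why the totals can jump from one period to the next.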

This behavior is hard to describe and explain, but it is by design, and it only affects the accuracy of log-based sum(itemCount) queries when sampling is enabled and the sampling rate gets high. We've seen some customers notice inaccuracies once sample rates reach the 60% range (roughly 60% of telemetry dropped), but the impact varies by customer depending on their telemetry types, the number of telemetry records per operation, and so on.

The product team is well aware of this issue. To help customers, they added pre-aggregated metrics to the SDKs (see "Log-based and pre-aggregated metrics in Azure Application Insights" on Microsoft Learn). These metrics count the 25 requests from the example above and send a metric to your MDM account reporting “this web app processed 25 requests”, while the single request telemetry record with an itemCount of 100 is still sent as before. The pre-aggregated metrics report the correct numbers and can be found on the Metrics blade of Application Insights: select any metric in the Application Insights standard metrics namespace. Those standard metrics chart the correct numbers; log-based sum(itemCount) queries do not when sampling rates get high.
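The difference between the two approaches can be sketched in Python as a pipeline where a metrics aggregator counts every item before the sampler runs, and only sampled items reach the logs. This is a hypothetical, simplified model: the class and method names are illustrative, it samples per item with a seeded random generator for brevity (the SDK samples per operation), and it ignores how real metrics are aggregated over time intervals.

```python
import random
from collections import Counter

class PreAggregatingPipeline:
    """Hypothetical pipeline: the metrics aggregator sees every item *before*
    sampling, so its counts are exact; only sampled items reach the logs."""

    def __init__(self, sampling_percentage: float, seed: int = 0):
        self.sampling_percentage = sampling_percentage
        self.rng = random.Random(seed)
        self.metric_counts = Counter()  # what the standard metrics would chart
        self.ingested_logs = []         # what log-based queries would see

    def track(self, item_type: str) -> None:
        self.metric_counts[item_type] += 1  # pre-aggregated, never sampled
        if self.rng.random() * 100 < self.sampling_percentage:
            self.ingested_logs.append(
                {"type": item_type, "itemCount": 100.0 / self.sampling_percentage}
            )

    def log_based_count(self, item_type: str) -> float:
        """Rough equivalent of sum(itemCount) for one telemetry type."""
        return sum(r["itemCount"] for r in self.ingested_logs if r["type"] == item_type)

pipeline = PreAggregatingPipeline(sampling_percentage=1)
for _ in range(25):
    for t in ("request", "dependency", "trace", "exception"):
        pipeline.track(t)

print("pre-aggregated requests:", pipeline.metric_counts["request"])
print("log-based requests:", pipeline.log_based_count("request"))
```

The pre-aggregated request count is always 25 regardless of the sampling percentage, while the log-based count can only be a multiple of 100 at a 1% sampling percentage, so it is either 0 or a large overestimate here.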

Please let us know should you have any further questions or concerns and we'll be happy to assist you further.

Thanks!

Carlos V.