Problems connecting to real-time transcription

Eduardo Gomez

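I am experimenting with conversation transcription, so I wrote a console app to speed up the process. The request that generates the voice signatures fails with a connection error (see the comment on the `PostAsync` call in `GetVoiceSignatureString`).

I am following this documentation: https://learn.microsoft.com/en-us/azure/cognitive-services/speech-service/how-to-use-conversation-transcription?pivots=programming-language-csharp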

Classes

```csharp
using System.Runtime.Serialization;

[DataContract]
internal class VoiceSignature
{
    [DataMember]
    public string Status { get; private set; }

    [DataMember]
    public VoiceSignatureData Signature { get; private set; }

    [DataMember]
    public string Transcription { get; private set; }
}

[DataContract]
internal class VoiceSignatureData
{
    internal VoiceSignatureData()
    {
    }

    internal VoiceSignatureData(int version, string tag, string data)
    {
        Version = version;
        Tag = tag;
        Data = data;
    }

    [DataMember]
    public int Version { get; private set; }

    [DataMember]
    public string Tag { get; private set; }

    [DataMember]
    public string Data { get; private set; }
}
```
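For reference, here is a minimal sketch of how a signature response maps onto these classes, and how the `Signature` part becomes the string passed to `Participant.From`. It assumes the service returns JSON whose property names match the `[DataMember]` names (`Status`, `Signature`, `Transcription`, and `Version`, `Tag`, `Data` inside `Signature`). To avoid a NuGet dependency, it uses the built-in `DataContractJsonSerializer` in place of Newtonsoft.Json. That serializer also honors `[DataContract]`/`[DataMember]` and private setters. `SignatureJsonDemo` and `ExtractSignature` are hypothetical names.

```csharp
using System.IO;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Json;
using System.Text;

// Mirrors VoiceSignature / VoiceSignatureData above (declared public for this standalone sketch).
[DataContract]
public class VoiceSignature
{
    [DataMember] public string Status { get; private set; }
    [DataMember] public VoiceSignatureData Signature { get; private set; }
    [DataMember] public string Transcription { get; private set; }
}

[DataContract]
public class VoiceSignatureData
{
    [DataMember] public int Version { get; private set; }
    [DataMember] public string Tag { get; private set; }
    [DataMember] public string Data { get; private set; }
}

// Hypothetical helper: not part of the Speech SDK or the linked documentation.
public static class SignatureJsonDemo
{
    // Deserializes the whole response, then re-serializes only its Signature object,
    // which is the string later passed to Participant.From(...).
    public static string ExtractSignature(string responseJson)
    {
        var responseSerializer = new DataContractJsonSerializer(typeof(VoiceSignature));
        using var input = new MemoryStream(Encoding.UTF8.GetBytes(responseJson));
        var response = (VoiceSignature)responseSerializer.ReadObject(input);

        var signatureSerializer = new DataContractJsonSerializer(typeof(VoiceSignatureData));
        using var output = new MemoryStream();
        signatureSerializer.WriteObject(output, response.Signature);
        return Encoding.UTF8.GetString(output.ToArray());
    }
}
```

Suppose you feed it a response with made-up values such as `{"Status":"OK","Signature":{"Version":0,"Tag":"tag-placeholder","Data":"data-placeholder"}}`. It returns a JSON object that contains only `Version`, `Tag` and `Data`. The member order may differ from what Newtonsoft.Json produces.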

VoiceGenerator

public static async Task<string> GetVoiceSignatureString()

{

var subscriptionKey = "c5ba659727324477b4b34e14bec0cee6";

var region = "westeurope";

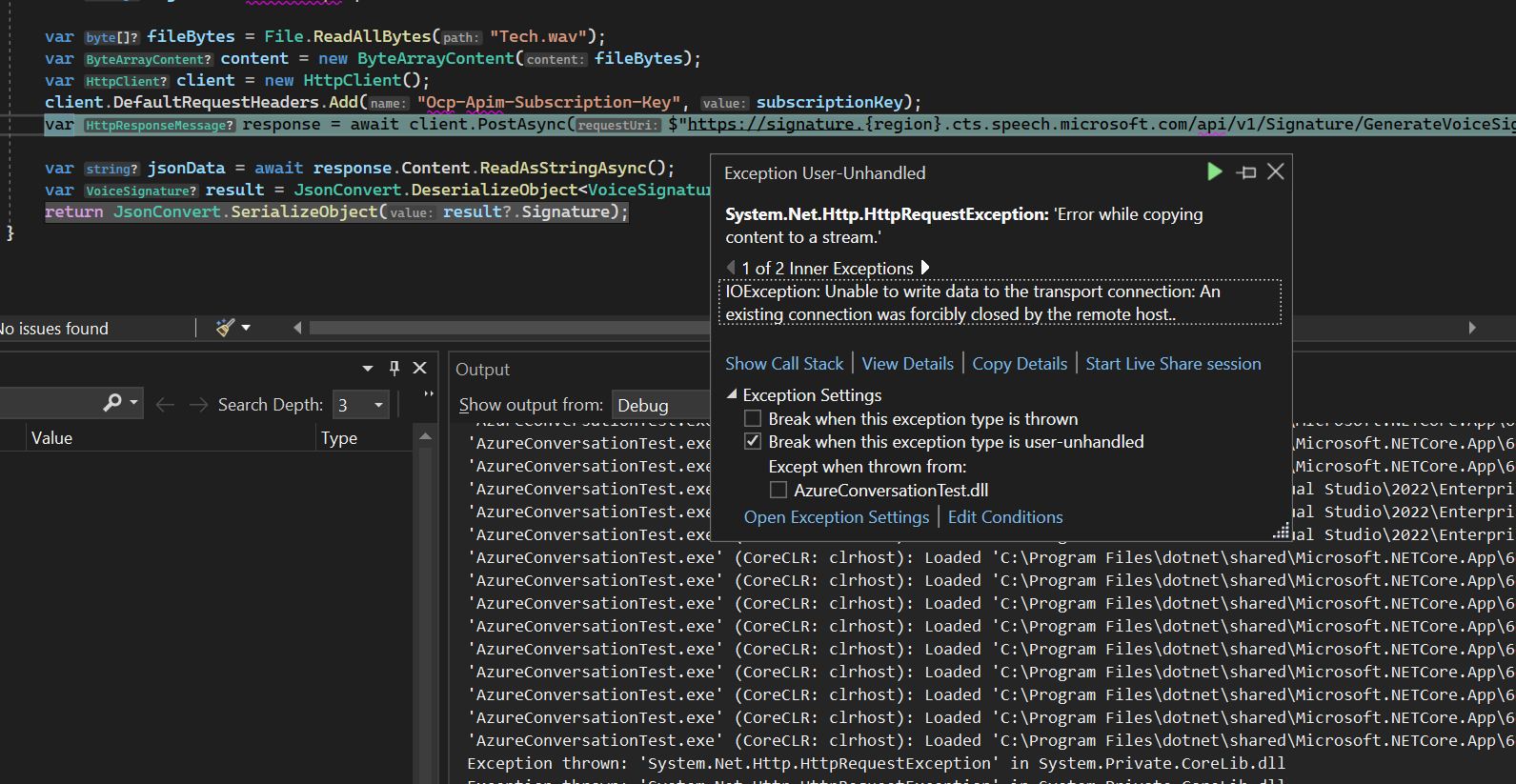

var fileBytes = File.ReadAllBytes("Tech.wav");

var content = new ByteArrayContent(fileBytes);

var client = new HttpClient();

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

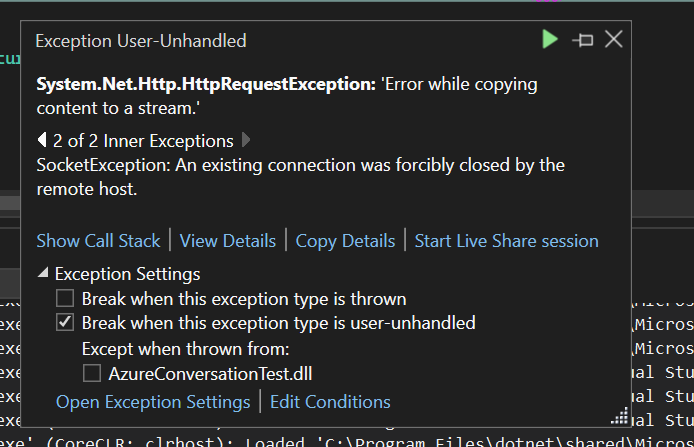

var response = await client.PostAsync($"https://signature.{region}.cts.speech.microsoft.com/api/v1/Signature/GenerateVoiceSignatureFromByteArray", content); // Unable to write data to the transport connection: An existing connection was forcibly closed by the remote host..

var jsonData = await response.Content.ReadAsStringAsync();

var result = JsonConvert.DeserializeObject<VoiceSignature>(jsonData);

return JsonConvert.SerializeObject(result?.Signature);

}

}
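Two checks can help narrow down a `PostAsync` failure like the one in the comment.

* **Which layer failed.** Printing the whole `InnerException` chain shows whether the error came from the HTTP, I/O or socket layer.
* **What is being uploaded.** You can confirm the format of the audio before sending it.

The sketch below adds two hypothetical helpers that are not part of the Speech SDK:

* `SignatureDiagnostics.DescribeExceptionChain` flattens an exception and its inner exceptions into one line.
* `SignatureDiagnostics.ReadWavInfo` reads the format tag, channel count, sample rate, bit depth and duration from a RIFF/WAVE file. You can compare the result for `Tech.wav` with the audio requirements in the linked documentation.

The helpers only report information; they don't fix anything.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical diagnostic helpers; not part of the Speech SDK.
public static class SignatureDiagnostics
{
    // Flattens an exception and its InnerException chain into one line, for example
    // "HttpRequestException: ... --> IOException: ... --> SocketException: ...".
    public static string DescribeExceptionChain(Exception ex)
    {
        var sb = new StringBuilder();
        for (Exception e = ex; e != null; e = e.InnerException)
        {
            if (sb.Length > 0) sb.Append(" --> ");
            sb.Append(e.GetType().Name).Append(": ").Append(e.Message);
        }
        return sb.ToString();
    }

    // Walks the RIFF chunks of a WAV file and reports the "fmt " fields plus the
    // duration implied by the "data" chunk size and the byte rate.
    public static (int FormatTag, int Channels, int SampleRate, int BitsPerSample, double Seconds) ReadWavInfo(byte[] wav)
    {
        using var reader = new BinaryReader(new MemoryStream(wav));
        if (Encoding.ASCII.GetString(reader.ReadBytes(4)) != "RIFF")
            throw new InvalidDataException("Not a RIFF file.");
        reader.ReadUInt32(); // total RIFF size, not needed here
        if (Encoding.ASCII.GetString(reader.ReadBytes(4)) != "WAVE")
            throw new InvalidDataException("Not a WAVE file.");

        int formatTag = 0, channels = 0, sampleRate = 0, bits = 0, byteRate = 0;
        long dataBytes = -1;
        var stream = reader.BaseStream;
        while (stream.Position + 8 <= stream.Length)
        {
            string id = Encoding.ASCII.GetString(reader.ReadBytes(4));
            uint size = reader.ReadUInt32();
            long next = stream.Position + size + (size % 2); // chunks are padded to an even size
            if (id == "fmt ")
            {
                formatTag = reader.ReadUInt16(); // 1 = PCM
                channels = reader.ReadUInt16();
                sampleRate = reader.ReadInt32();
                byteRate = reader.ReadInt32();
                reader.ReadUInt16();             // block align
                bits = reader.ReadUInt16();
            }
            else if (id == "data")
            {
                dataBytes = size;
            }
            stream.Position = next;
        }

        if (byteRate == 0 || dataBytes < 0)
            throw new InvalidDataException("Missing fmt or data chunk.");
        return (formatTag, channels, sampleRate, bits, (double)dataBytes / byteRate);
    }
}
```

For example:

* Wrap the `PostAsync` call in `try { ... } catch (HttpRequestException ex) { Console.WriteLine(SignatureDiagnostics.DescribeExceptionChain(ex)); }`.
* Print `SignatureDiagnostics.ReadWavInfo(fileBytes)` before sending the request.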

Program.cs

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Audio;
using Microsoft.CognitiveServices.Speech.Transcription;

internal class Program
{
    public static async Task TranscribeConversationsAsync(string voiceSignatureStringUser1, string voiceSignatureStringUser2)
    {
        var filepath = "Tech.wav";
        var config = SpeechConfig.FromSubscription(VoiceGenerator.subscriptionKey, VoiceGenerator.region);
        config.SetProperty("ConversationTranscriptionInRoomAndOnline", "true");

        // The recognition language is en-US by default. Uncomment to use another language, such as zh-CN.
        // config.SpeechRecognitionLanguage = "zh-cn";

        var stopRecognition = new TaskCompletionSource<int>();

        using (var audioInput = AudioConfig.FromWavFileInput(filepath))
        {
            var meetingID = Guid.NewGuid().ToString();
            using (var conversation = await Conversation.CreateConversationAsync(config, meetingID))
            {
                // Create a conversation transcriber that reads from the WAV file.
                using (var conversationTranscriber = new ConversationTranscriber(audioInput))
                {
                    conversationTranscriber.Transcribing += (s, e) =>
                    {
                        Console.WriteLine($"TRANSCRIBING: Text={e.Result.Text} SpeakerId={e.Result.UserId}");
                    };

                    conversationTranscriber.Transcribed += (s, e) =>
                    {
                        if (e.Result.Reason == ResultReason.RecognizedSpeech)
                        {
                            Console.WriteLine($"TRANSCRIBED: Text={e.Result.Text} SpeakerId={e.Result.UserId}");
                        }
                        else if (e.Result.Reason == ResultReason.NoMatch)
                        {
                            Console.WriteLine("NOMATCH: Speech could not be recognized.");
                        }
                    };

                    conversationTranscriber.Canceled += (s, e) =>
                    {
                        Console.WriteLine($"CANCELED: Reason={e.Reason}");
                        if (e.Reason == CancellationReason.Error)
                        {
                            Console.WriteLine($"CANCELED: ErrorCode={e.ErrorCode}");
                            Console.WriteLine($"CANCELED: ErrorDetails={e.ErrorDetails}");
                            Console.WriteLine("CANCELED: Did you set the speech resource key and region values?");
                            stopRecognition.TrySetResult(0);
                        }
                    };

                    conversationTranscriber.SessionStarted += (s, e) =>
                    {
                        Console.WriteLine($"\nSession started event. SessionId={e.SessionId}");
                    };

                    conversationTranscriber.SessionStopped += (s, e) =>
                    {
                        Console.WriteLine($"\nSession stopped event. SessionId={e.SessionId}");
                        Console.WriteLine("\nStop recognition.");
                        stopRecognition.TrySetResult(0);
                    };

                    // Add participants to the conversation.
                    var speaker1 = Participant.From("User1", "en-US", voiceSignatureStringUser1);
                    var speaker2 = Participant.From("User2", "en-US", voiceSignatureStringUser2);
                    await conversation.AddParticipantAsync(speaker1);
                    await conversation.AddParticipantAsync(speaker2);

                    // Join the conversation and start transcribing.
                    await conversationTranscriber.JoinConversationAsync(conversation);
                    await conversationTranscriber.StartTranscribingAsync().ConfigureAwait(false);

                    // Wait asynchronously (instead of blocking with Task.WaitAny) until the session stops or fails, then stop transcribing.
                    await stopRecognition.Task;
                    await conversationTranscriber.StopTranscribingAsync().ConfigureAwait(false);
                }
            }
        }
    }
}
```
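In this method, the wait on `stopRecognition.Task` finishes in only two cases:

* `SessionStopped` fires.
* A `Canceled` event arrives with `CancellationReason.Error`.

If neither happens, the wait never returns. Below is a minimal sketch of the same `TaskCompletionSource` pattern with an upper bound on the wait. It needs no SDK. `FakeSession` is a hypothetical stand-in for the transcriber's events, and `CompletionDemo.WaitForStopAsync` is an illustrative name, not an SDK API.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for the transcriber's SessionStopped event (no Speech SDK needed).
public class FakeSession
{
    public event EventHandler Stopped;
    public void RaiseStopped() => Stopped?.Invoke(this, EventArgs.Empty);
}

public static class CompletionDemo
{
    // Completes a TaskCompletionSource from an event handler and awaits it,
    // but gives up after `timeout` instead of waiting indefinitely.
    // Returns true if the event fired in time, false if the timeout won.
    public static async Task<bool> WaitForStopAsync(FakeSession session, Action start, TimeSpan timeout)
    {
        var stopped = new TaskCompletionSource<int>(TaskCreationOptions.RunContinuationsAsynchronously);
        session.Stopped += (s, e) => stopped.TrySetResult(0);

        start(); // in the real code this would be StartTranscribingAsync()

        var first = await Task.WhenAny(stopped.Task, Task.Delay(timeout));
        return first == stopped.Task;
    }
}
```

Applied to `TranscribeConversationsAsync`, the wait on `stopRecognition.Task` would become `await Task.WhenAny(stopRecognition.Task, Task.Delay(...))`. `StopTranscribingAsync()` would still be called in either case. The timeout length is your choice.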