The networking model changed significantly in Windows Server 2008 Failover Clustering. First and foremost, when we talk about networking in 2008 Failover Clusters, we need to drop some of the older concepts that have been around for a while. The first one to go is the concept of a 'Private' network. This term no longer applies in W2K8. All networks detected by the cluster service, and hence by the cluster network driver, are classified by default as 'mixed' networks and are automatically configured for use by the cluster. Here is a sample of the default configuration for a network in a cluster -

Whether the cluster gets to use this network for cluster-related communications (e.g. health checking, GUM updates, etc.) is determined by the check box next to "Allow the cluster to use this network." You will also note that the box for "Allow clients to connect through this network" is checked. This is the default configuration provided the network interface supporting the network has a default gateway (DG) configured. So, no DG configured...no client access is allowed unless a cluster administrator changes the default setting in the Failover Cluster Management interface. Inspecting the cluster registry settings for this network shows the 'Role' set to 3, which is a 'Mixed' network. So, the cluster either gets to use the network or it does not...no more 'Private' network.

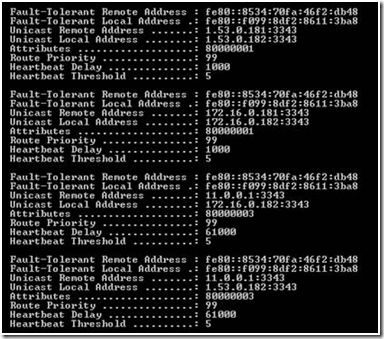
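The relationship between the two check boxes, the default gateway, and the 'Role' value can be sketched in a few lines of Python. This is only an illustration: the post itself mentions just Role = 3 ('Mixed'). The values 0 and 1 are taken from the cluster API's network role enumeration, and the helper names `role_from_checkboxes` and `default_role` are hypothetical, not part of any cluster tool.

```python
from enum import IntEnum


class ClusterNetworkRole(IntEnum):
    """Role values for a cluster network (0 and 1 are not shown in the post)."""
    NONE = 0                 # the cluster does not use the network
    INTERNAL_ONLY = 1        # cluster communication only
    INTERNAL_AND_CLIENT = 3  # 'mixed': cluster communication plus client access


def role_from_checkboxes(allow_cluster: bool, allow_clients: bool) -> ClusterNetworkRole:
    """Map the two check boxes to a Role value (hypothetical helper)."""
    if not allow_cluster:
        # Assumption: client access is only offered when cluster use is enabled.
        return ClusterNetworkRole.NONE
    if allow_clients:
        return ClusterNetworkRole.INTERNAL_AND_CLIENT
    return ClusterNetworkRole.INTERNAL_ONLY


def default_role(has_default_gateway: bool) -> ClusterNetworkRole:
    """Default described above: cluster use is on, client access needs a default gateway."""
    return role_from_checkboxes(allow_cluster=True, allow_clients=has_default_gateway)


if __name__ == "__main__":
    for has_gateway in (True, False):
        role = default_role(has_gateway)
        print(f"default gateway={has_gateway}: Role={int(role)} ({role.name})")
```

The point of the sketch is that there is no separate 'Private' value to choose: a network is either unused by the cluster, used by the cluster only, or used by the cluster and by clients.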

In Windows Server 2008 Failover Clustering, the cluster network driver underwent a complete rewrite because it had to support new features, including allowing cluster nodes to be placed on separate, routed networks. The new driver is 'netft.sys' (Network Fault-Tolerant driver). When loaded, it shows up as a network adapter (Microsoft Failover Cluster Virtual Adapter), and when you run 'ipconfig /all' it is listed in the output with a MAC address and an AutoNet (APIPA, 169.254.x.x) address. No modifications are needed or desired. As a 'fault-tolerant' driver, one of its main functions is to determine all the network paths to all nodes in the cluster. As part of this process, netft.sys builds its own internal routing table to find each node in the cluster on port 3343 -

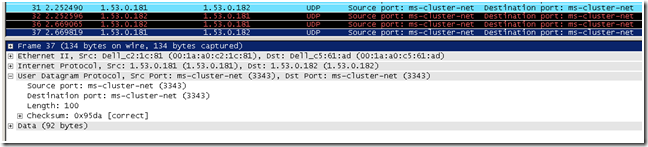

Hence the requirement for a minimum of two network interfaces; otherwise you get a Warning message when running the networking validation tests. A single-NIC cluster node violates a 'best practice' because it is a single point of failure for cluster communications, and it is therefore not a supported configuration. Cluster network communications have changed from UDP Broadcast to UDP Unicast to accommodate the routing required, since most routers, by default, will not route broadcast traffic. Plus, Unicast is more efficient. Here is an example packet in a network trace -

But wait...UDP communications are not reliable! Not true! Provided the network these packets travel on is of good quality (no excessive packet loss), UDP communications are fine. In fact, they are more efficient, since they do not carry the overhead of TCP, which has to wait around for ACKs and, if they are not received, sends retransmissions that clog the network. Since there are no acknowledgements associated with UDP traffic itself, the cluster has some built-in 'enhancements' that track the messages sent to all nodes in the cluster. There is even a capability for retransmitting messages if they are not acknowledged by a cluster node (but this topic is beyond the scope of this post)...suffice it to say, there is built-in reliability in cluster communications.
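To make the idea of tracking and retransmitting over an unacknowledged transport concrete, here is a minimal Python sketch. It is not the cluster's actual mechanism, which this post does not describe. It is a generic stop-and-wait scheme, and `LossyLink` and `send_reliably` are hypothetical names. The link is simulated in memory rather than with real UDP sockets so the behaviour is repeatable.

```python
from dataclasses import dataclass, field


@dataclass
class LossyLink:
    """In-memory stand-in for a UDP path; drops the sends whose index is in drop_sends."""
    drop_sends: set
    sent: int = 0
    delivered: list = field(default_factory=list)

    def send(self, seq: int, payload: str) -> bool:
        """Return True if the datagram arrived and was acknowledged by the receiver."""
        index = self.sent
        self.sent += 1
        if index in self.drop_sends:
            return False  # datagram lost, so no acknowledgement comes back
        self.delivered.append((seq, payload))
        return True


def send_reliably(link: LossyLink, messages: list, max_attempts: int = 3) -> dict:
    """Send each message with a sequence number, retransmitting until acknowledged."""
    attempts = {}
    for seq, payload in enumerate(messages):
        for attempt in range(1, max_attempts + 1):
            attempts[seq] = attempt
            if link.send(seq, payload):
                break  # acknowledged, move on to the next message
        else:
            raise TimeoutError(f"message {seq} not acknowledged after {max_attempts} attempts")
    return attempts


if __name__ == "__main__":
    link = LossyLink(drop_sends={1})  # the second datagram on the wire is lost
    print(send_reliably(link, ["hb-0", "hb-1", "hb-2"]))
    print(link.delivered)
```

On a good-quality network almost every message goes through on the first attempt, so the sender pays for a retransmission only when a datagram is actually lost.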

Not all cluster communications use UDP-Unicast. There are some communications that use TCP. For example, during the 'join' process, all initial communications use TCP. Once a node has successfully joined a cluster, intra-cluster communications can then include UDP as needed.

The cluster log also provides valuable information about how the cluster service builds its 'networking knowledge' as part of its startup routine. In the cluster log, you can follow as the cluster architecture components (Interface Manager (IM) and Topology Manager (TM) for example) validate communications connectivity between nodes in the cluster. Some of the information eventually becomes part of the cluster network driver routing table. Here is an example -

[FTI] Follower: waiting for route to node W2K8-CL1 on virtual IP fe80::8534:70fa:46f2:db48:~3343~ to come up

000007e4.00000644::2008/05/20-12:32:24.308 DBG [NETFTAPI] Signaled NetftRemoteReachable event, local address 172.16.0.182:003853 remote address 172.16.0.181:003853

000007e4.00000644::2008/05/20-12:32:24.308 DBG [NETFTAPI] Signaled NetftRemoteReachable event, local address 172.16.0.182:003853 remote address 172.16.0.181:003853

000007e4.00000644::2008/05/20-12:32:24.308 DBG [NETFTAPI] Signaled NetftRemoteReachable event, local address 172.16.0.182:003853 remote address 172.16.0.181:003853

000007e4.0000057c::2008/05/20-12:32:24.308 INFO [TM] got event: Remote endpoint 172.16.0.181:~3343~ reachable from 172.16.0.182:~3343~

000007e4.000005c0::2008/05/20-12:32:24.308 INFO [FTI] Got remote route reachable from netft evm. Setting state to Up for route from 172.16.0.182:~3343~ to 172.16.0.181:~3343~.

000007e4.000005e0::2008/05/20-12:32:24.308 INFO [IM] got event: Remote endpoint 172.16.0.181:~3343~ reachable from 172.16.0.182:~3343~

000007e4.000005e0::2008/05/20-12:32:24.308 INFO [IM] Marking Route from 172.16.0.182:~3343~ to 172.16.0.181:~3343~
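When a cluster log is long, it can help to pull out just the 'reachable' events to see which routes the Topology Manager and Interface Manager have validated. The following Python sketch does that for the entry format shown above. The regular expression is inferred only from these sample lines, so treat it as an assumption about the log layout rather than a complete parser. `reachable_routes` is a hypothetical helper name.

```python
import re

# Matches entries such as:
#   INFO [TM] got event: Remote endpoint 172.16.0.181:~3343~ reachable from 172.16.0.182:~3343~
REACHABLE = re.compile(
    r"\[(?P<component>TM|IM)\] got event: Remote endpoint "
    r"(?P<remote>\S+):~(?P<remote_port>\d+)~ reachable from "
    r"(?P<local>\S+):~(?P<local_port>\d+)~"
)


def reachable_routes(log_text: str) -> list:
    """Return (component, local address, remote address, port) for each reachable event."""
    return [
        (m["component"], m["local"], m["remote"], int(m["remote_port"]))
        for m in REACHABLE.finditer(log_text)
    ]


SAMPLE = """\
000007e4.0000057c::2008/05/20-12:32:24.308 INFO [TM] got event: Remote endpoint 172.16.0.181:~3343~ reachable from 172.16.0.182:~3343~
000007e4.000005e0::2008/05/20-12:32:24.308 INFO [IM] got event: Remote endpoint 172.16.0.181:~3343~ reachable from 172.16.0.182:~3343~
"""

if __name__ == "__main__":
    for component, local, remote, port in reachable_routes(SAMPLE):
        print(f"[{component}] {local} -> {remote} on port {port}")
```

Because the pattern is applied with `finditer` over the whole text, it also works when several log entries have been pasted onto a single line.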

So, for a cluster network to allow client access by default, its interface must have a default gateway assigned; otherwise, you will have to manually configure the network for client access. And if you do not have multiple networks that can be reached via multiple routes, the cluster will have a difficult time building a routing table with enough information to provide reliable, cluster-wide communications over multiple networks.

There are other considerations when configuring multi-site clusters. Start by reviewing this - https://support.microsoft.com/kb/947048/en-us

Author: Chuck Timon

Support Escalation Engineer

Microsoft Enterprise Platforms Support
