I frequently write code that is meant for public consumption. It might not be perfect code, but I make an effort to make it available for others to peruse either way.

The general rule I use when choosing a repository for a given solution is to push it to GitHub if it is public, and to use Azure DevOps if it's intended for internal usage. (For home at least - for business there are other factors and other solutions.) If I go with a private repo it's also a natural fit to pipe it from Azure DevOps into Azure Container Registry (ACR) if I'm doing containers. This is fine and dandy for most purposes, but I sometimes want "hybrids". I want the code to be public, but I want to easily deploy it to my own Azure services at the same time. I want to provide public container images, but ACR only supports private registries. And I certainly do not want to maintain parallel setups if I can avoid it.

An example scenario would be doing code samples related to Azure AD. The purpose of the code is of course that everyone can test it as easily as possible, but at the same time I obviously have some parameters that I want to keep out of the code while still being able to test things myself. Currently my AAD samples are one big Visual Studio solution with a bunch of projects inside - great for browsing on GitHub. Less scalable for my own QA purposes if I want to actually deploy them to places other than localhost :)

The beauty of Azure DevOps is that while it is one "product" there are several independent modules inside. Sure, you can use everything from A to Z, but you can also be selective and only choose what you like. In my case this means looking closer at the Pipelines feature.

I am aware of things like GitHub Actions and direct integration with Docker Hub, so it's not that I'm confused about that part. It's just that I like features such as the boards in Azure DevOps, as well as being able to easily deploy to my AKS clusters, so I thought I'd take a crack at combining some of these things.

The high level flow would be something like this:

- Write code in Visual Studio. Add the necessary Docker config, Helm charts, etc.

- Push to a GitHub repo.

- Pull said GitHub repo into Azure DevOps.

- Build the code in Azure DevOps, and push images to Docker Hub while in parallel pushing to Azure Container Registry.

- Provided the Docker image was built to support it, I can supply a config file, a Kubernetes secret, or something similar and push it to an AKS cluster for testing and demo purposes.

Easy enough I'd assume. I didn't easily locate any guides on this setup though, so I'm putting it into writing just in case I'm not the only one wondering about this :) Not rocket science to figure out, but still nice to have screenshots of it.
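The last step of the flow above, supplying private configuration and pushing to AKS, could be sketched roughly like this. This is only an illustration under assumptions: the secret name, config file name, namespace, chart path, and the `image.repository` value key are all hypothetical placeholders (the key follows the scaffold that `helm create` generates), and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: store private settings as a Kubernetes secret, then deploy with Helm.
# Every default below is a hypothetical placeholder, not a value from this post.
set -eu

SECRET_NAME="${SECRET_NAME:-hellodocker-config}"
CONFIG_FILE="${CONFIG_FILE:-appsettings.secrets.json}"
NAMESPACE="${NAMESPACE:-demo}"
CHART_PATH="${CHART_PATH:-./charts/hellodocker}"
IMAGE_REPO="${IMAGE_REPO:-myregistry.azurecr.io/hellodocker}"

# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

deploy() {
  # Keep the parameters out of the public repo by loading them from a local file.
  run kubectl create secret generic "$SECRET_NAME" \
    --from-file="$CONFIG_FILE" --namespace "$NAMESPACE"

  # Install or upgrade the release; assumes the chart exposes image.repository.
  run helm upgrade --install hellodocker "$CHART_PATH" \
    --namespace "$NAMESPACE" --set image.repository="$IMAGE_REPO"
}

deploy
```

The image itself still has to be built to read the mounted secret or config file; the sketch only covers getting the values into the cluster.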

I ran through the wizard in Visual Studio to create a HelloDocker web app. Afterwards I added Docker support for Linux containers. The default Dockerfile isn't perfect for Azure DevOps pipelines though, so I created one for that purpose called Dockerfile.CI:

```dockerfile
FROM microsoft/dotnet:2.2-sdk AS build-env
WORKDIR /app

# Copy csproj and restore
COPY *.csproj ./
RUN dotnet restore

# Copy everything else and build
COPY . ./
RUN dotnet publish -c Release -o out

# Build runtime image
FROM microsoft/dotnet:2.2-aspnetcore-runtime
WORKDIR /app
COPY --from=build-env /app/out .
ENTRYPOINT ["dotnet", "HelloDocker.dll"]
```
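Before wiring this into a pipeline it can be worth checking that Dockerfile.CI builds on your own machine. A minimal sketch, assuming the command is run from the folder that holds the .csproj and Dockerfile.CI; the tag `hellodocker:ci` and host port 8080 are hypothetical values picked for illustration, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: build and run the image from Dockerfile.CI locally.
# IMAGE and HOST_PORT are hypothetical values chosen for illustration.
set -eu

IMAGE="${IMAGE:-hellodocker:ci}"
HOST_PORT="${HOST_PORT:-8080}"

# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

build_and_run() {
  # -f selects the CI Dockerfile instead of the default one from Visual Studio.
  run docker build -f Dockerfile.CI -t "$IMAGE" .

  # Assumption: the app listens on port 80 inside the container,
  # which is the usual default for the ASP.NET Core runtime images.
  run docker run --rm -p "${HOST_PORT}:80" "$IMAGE"
}

build_and_run
```

If the build works locally with `-f Dockerfile.CI`, the same file name is what you would point the Docker task at later on.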

Next step is pushing it to GitHub - I'll assume you've got that part covered. I have also made sure I have a Docker Hub ID and the credentials for it ready.

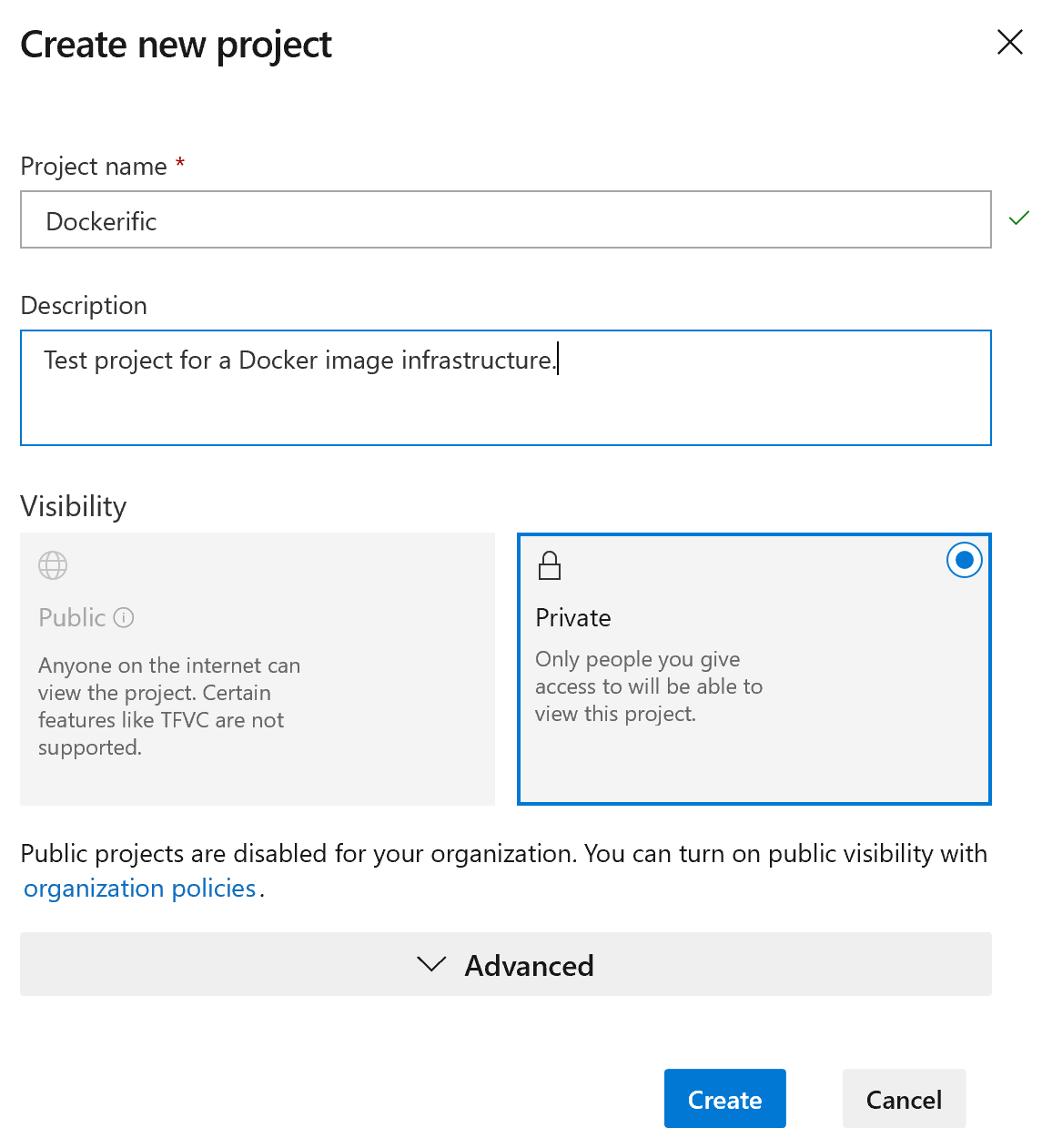

So, let's move into Azure DevOps and create a project:

Then you will want to navigate to the Project Settings, and Service connections for Pipelines:

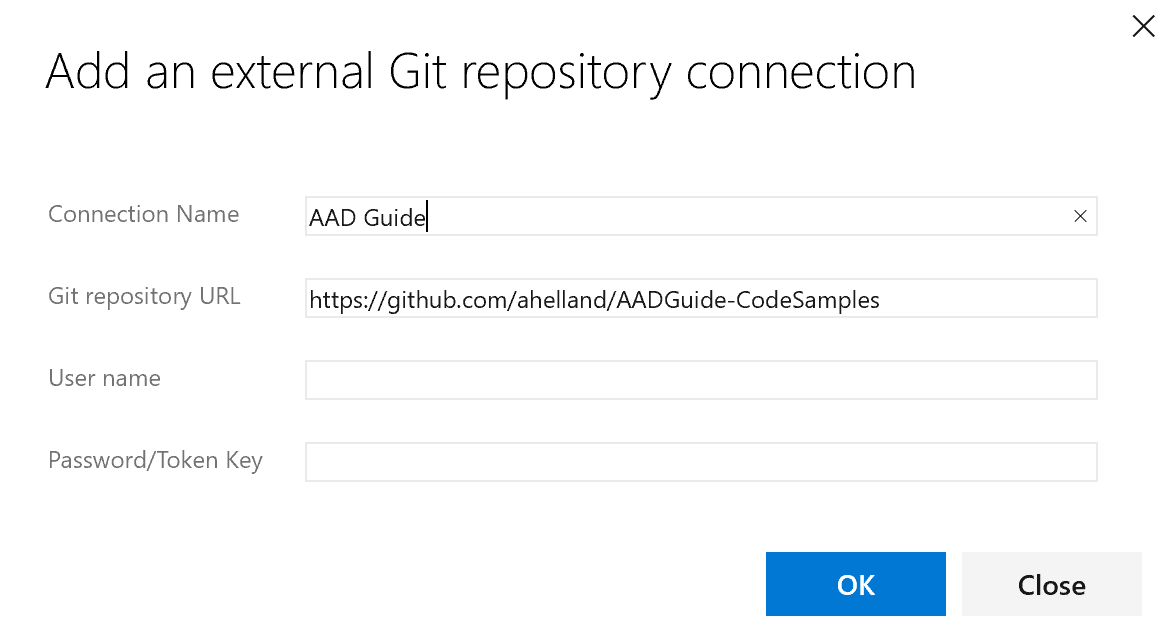

First let's add a GitHub repo - you have two choices: External Git or a GitHub connection.

External Git will let you add any random repo on GitHub, whereas the GitHub connection requires you to have permissions to the GitHub account. (The benefits of the latter are that you can browse the repo instead of typing in paths manually, you can report back to GitHub that releases should be bundled up, etc., so provided it is your repo you should consider using a GitHub connection.)

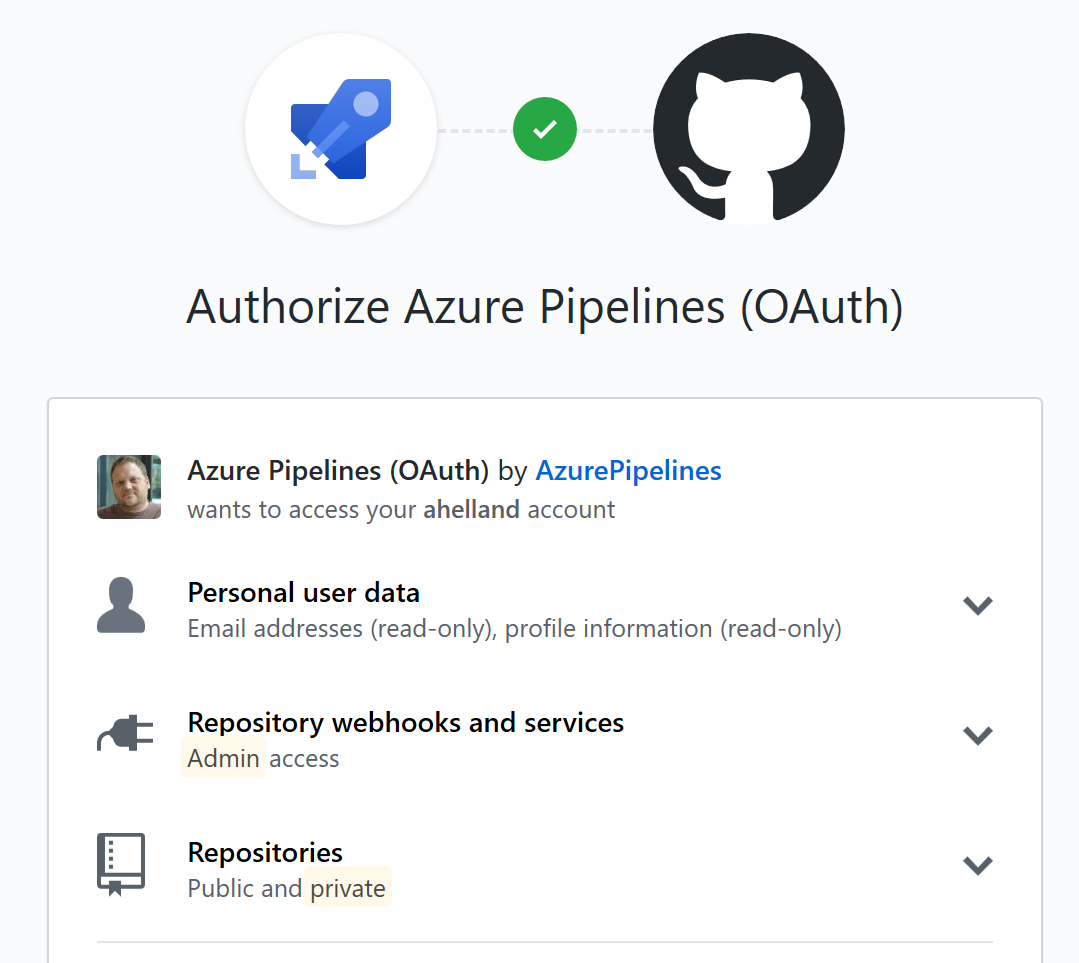

That means you have to log in and consent for the GitHub connection:

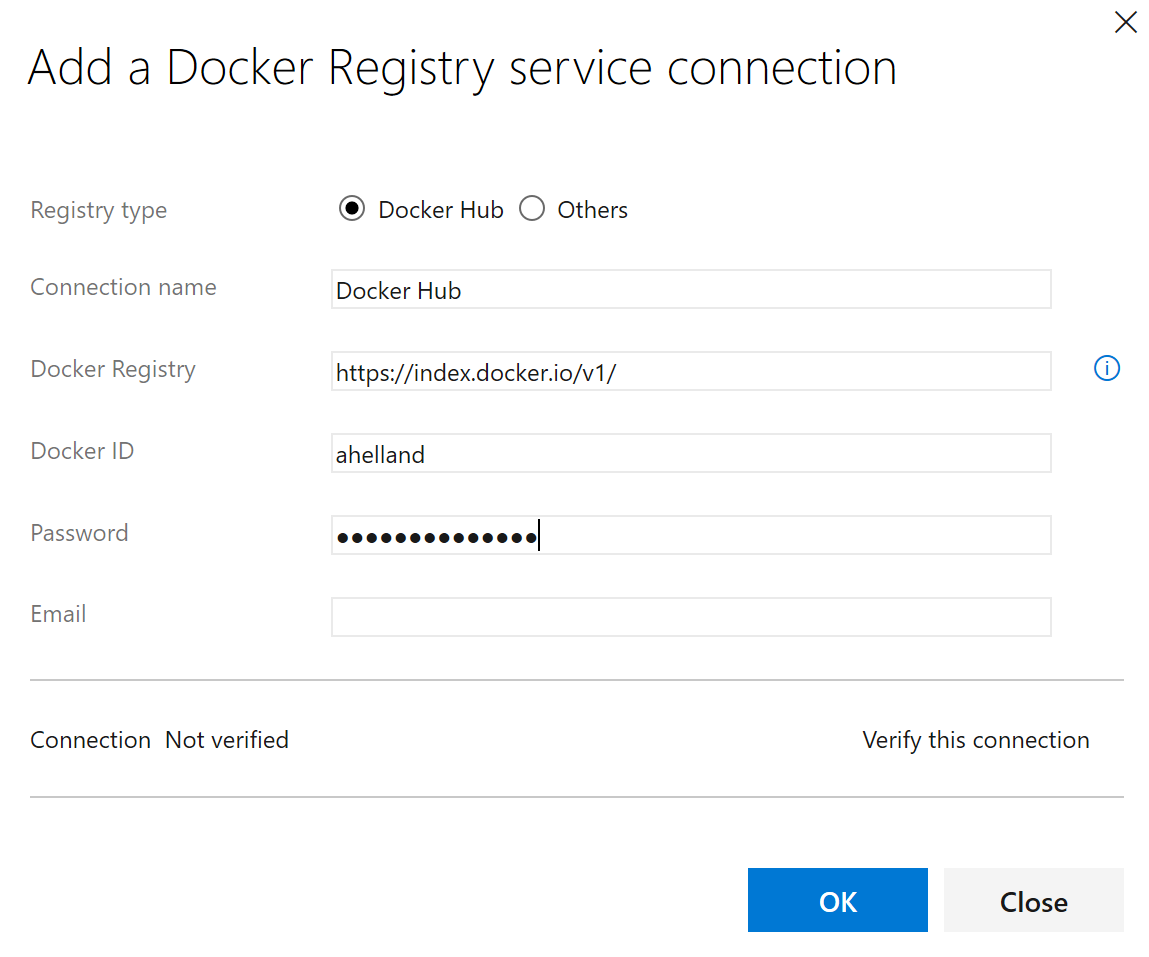

Since we're already in the service connections, let's add a Docker registry as well:

Then we will move on to creating a pipeline.

If you chose an External Git repo choose that as a source:

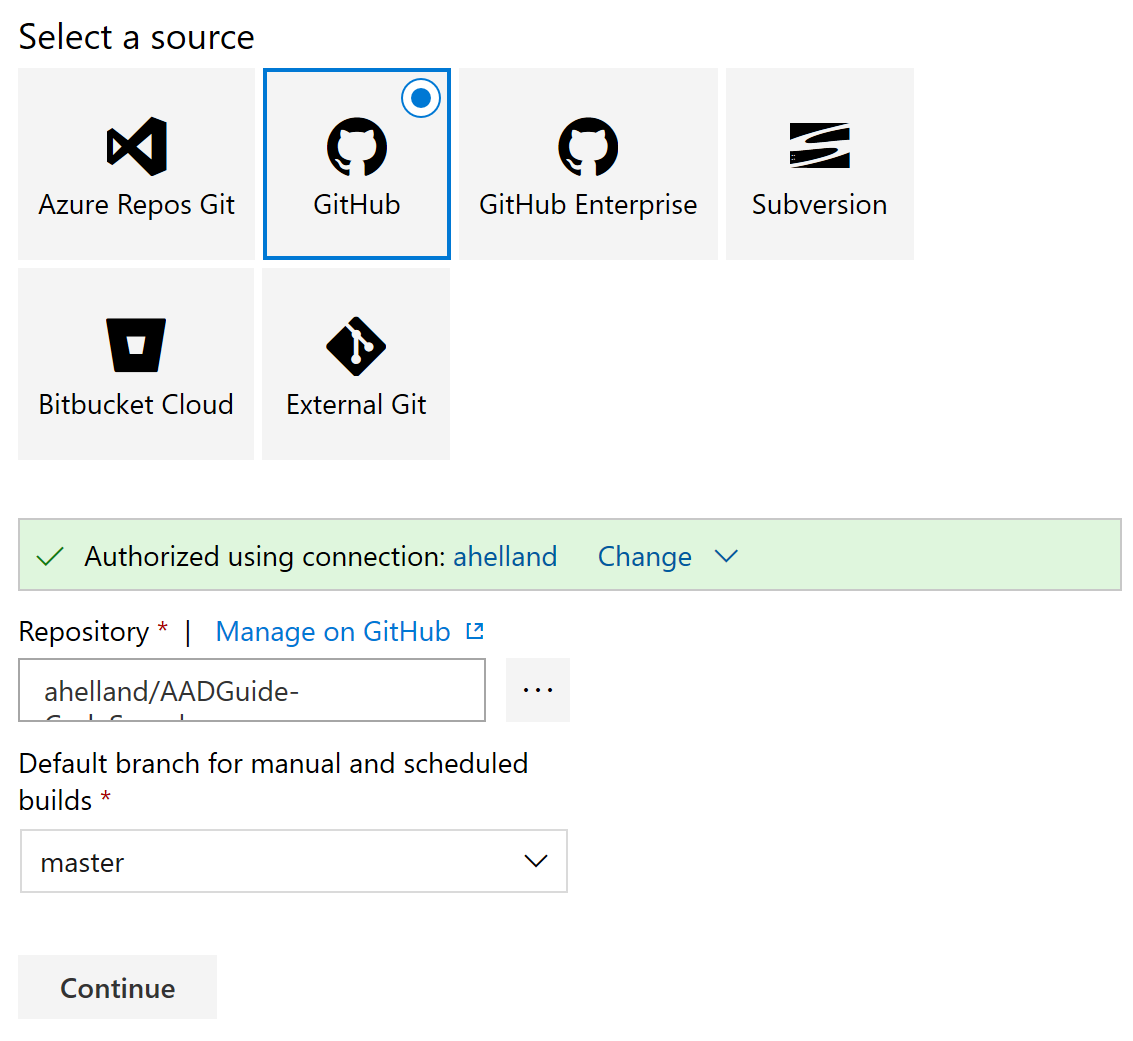

Or GitHub as a source if that's what you set up in the previous step - that requires you to choose the repository you want to work with:

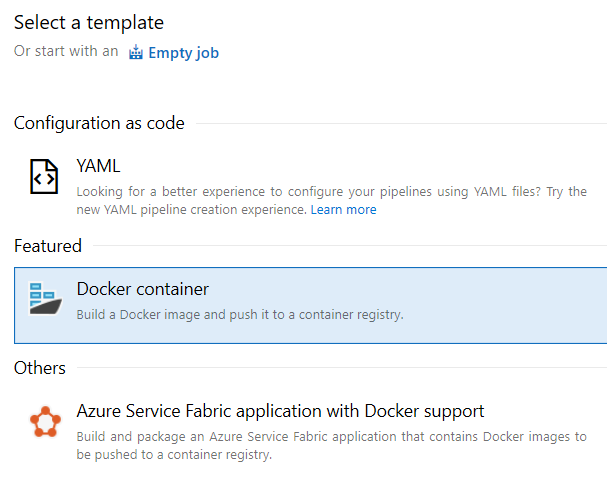

There are a number of templates to choose from, but to make it easy you can opt for the Docker container template:

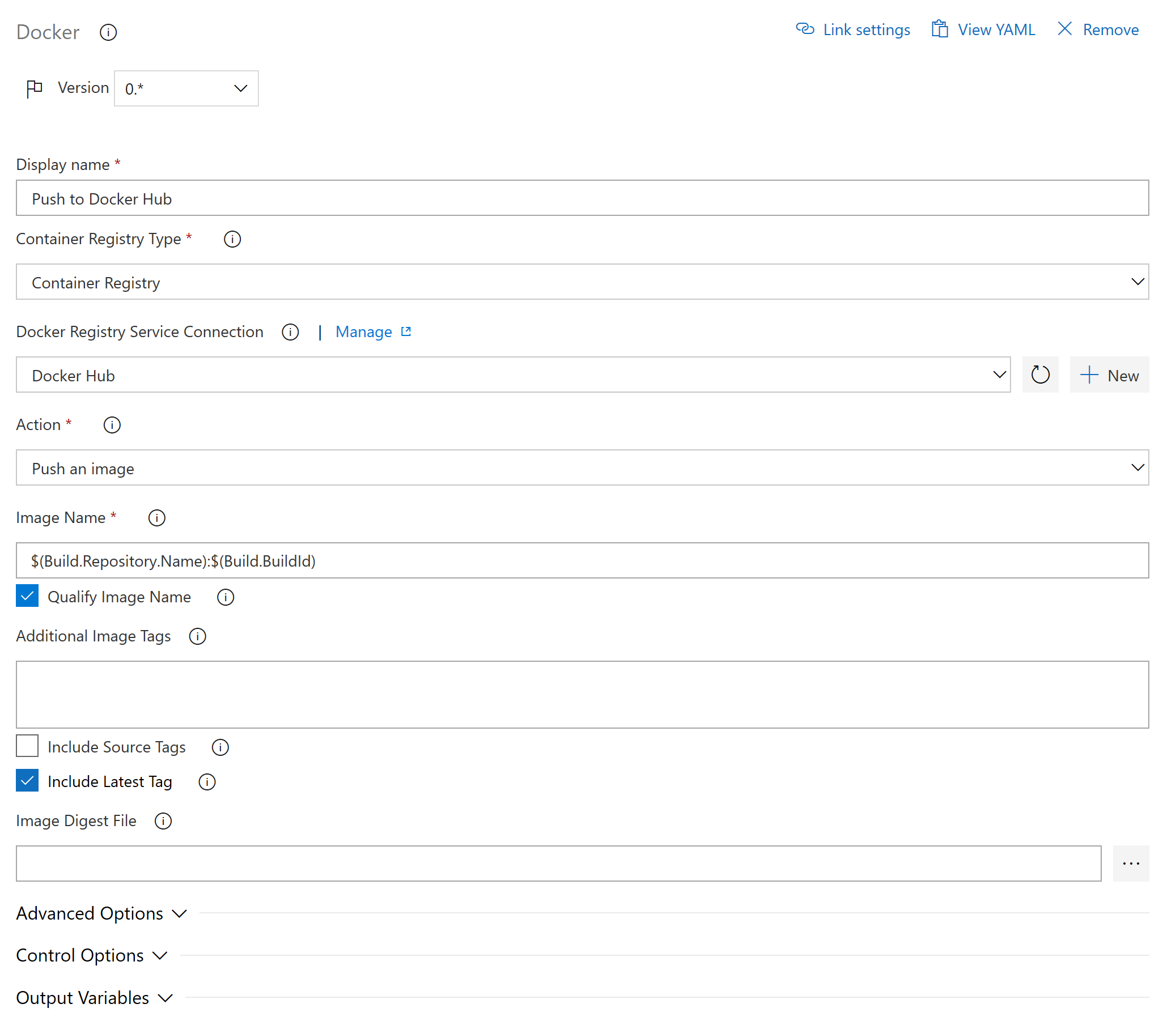

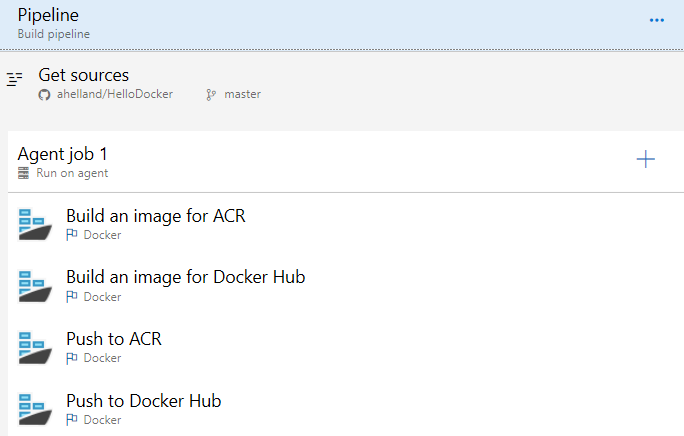

I chose to do builds for both ACR and Docker Hub in the same pipeline by adding two more Docker tasks. (For simple customization needs this might not be required, but options are always great.)
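Outside the pipeline designer, the same idea of building once and publishing to both registries comes down to a handful of Docker commands. The following is a rough sketch rather than what the Docker tasks run internally; `mydockerid`, `myregistry`, `hellodocker`, and the `latest` tag are hypothetical placeholders, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: build once, then tag and push the same image to Docker Hub and ACR.
# DOCKERHUB_USER, ACR_NAME, REPO and TAG are hypothetical placeholders.
set -eu

DOCKERHUB_USER="${DOCKERHUB_USER:-mydockerid}"
ACR_NAME="${ACR_NAME:-myregistry}"
REPO="${REPO:-hellodocker}"
TAG="${TAG:-latest}"

# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Docker Hub references look like <user>/<repo>:<tag>;
# ACR references look like <name>.azurecr.io/<repo>:<tag>.
hub_ref() { printf '%s/%s:%s\n' "$DOCKERHUB_USER" "$REPO" "$TAG"; }
acr_ref() { printf '%s.azurecr.io/%s:%s\n' "$ACR_NAME" "$REPO" "$TAG"; }

publish() {
  run docker build -f Dockerfile.CI -t "$(hub_ref)" .
  # Re-tag the same image for ACR instead of building it a second time.
  run docker tag "$(hub_ref)" "$(acr_ref)"
  # Both pushes assume you are already logged in to each registry,
  # e.g. via `docker login` and `az acr login --name "$ACR_NAME"`.
  run docker push "$(hub_ref)"
  run docker push "$(acr_ref)"
}

publish
```

In the pipeline the service connections take care of the registry logins, so the login step is only relevant when running something like this by hand.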

The build task looks like this:

Kick off the build, and hopefully you will get something along these lines:

Which means you should also have an image available for everyone:

And there you have it - pull from Docker Hub into Azure Web Apps, replace a number of files and push a customized image into ACR only to pull it into AKS afterwards. Well, you catch my drift :)