InDro Robotics, a drone operating outfit based on Salt Spring Island, British Columbia, recently connected with our team at Microsoft to explore how they could better enable their drones for search and rescue efforts. The teams joined forces to create a proof of concept to test whether automatic object detection could transform search and rescue missions in Canada. In the future, this work could assist organizations such as the Canadian Coast Guard in their search and rescue efforts. Before partnering with Microsoft, InDro Robotics had to manually monitor specific environments to recognize emergency situations. In fact, each drone had to be monitored by a person, resulting in a 1:1 ratio of operators to drones. This meant that the organization had to rely heavily on its operators, which hindered its ability to leverage the full potential of the drones.

Leveraging Custom Vision Cognitive Service and other Azure services, including IoT Hub, InDro Robotics developers can now successfully equip their drones to:

- Identify objects in large bodies of water, such as life vests and boats, and determine the severity of the findings

- Recognize emergency situations and notify control stations immediately, before assigning to a rescue squad

- Establish communication between boats, rescue squads and the control stations. The infrastructure supports permanent storage and per-device authentication

The new Custom Vision service, made available in preview at Build 2017, makes it possible to teach machines to identify objects. Prior to this offering, deep learning algorithms would require hundreds of images to train a model that would recognize just one type of object. This created another challenge: a drone cannot run deep learning algorithms on demand because of its limited computing power. With Microsoft Azure and this new offering, objects can now be recognized in the cloud with very little development effort.

Here are some of the steps taken in completing this project…

Training of this new Custom Vision service works best in a closed or static environment. This is to ensure the best result is achieved in training the service for object detection. The service does not require object boundaries to be defined or any specific information regarding object locations. By simply tagging all images, the framework will compare images with different tags to define the differences in objects. While this means that less time is required to prepare data for the service, it is still very important to provide images of objects in real environments for better identification. For example, if you want to find a life vest in the water, the best training environment is the water itself. You should provide images of a life vest in the water, as well as images of the water without any objects. If you use life vest images on a white background, they will not work to train your model due to differences in environment. InDro Robotics elected to launch their drones and capture photos in real-time to properly prepare for the project.

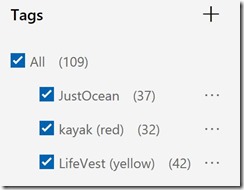

A minimum of 30 images per tag/label is required to train the service. Being able to work from such a small number of images really helps to simplify the workflow, which is especially important when training for many different objects.
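Before uploading, it can help to check that every tag has enough images. The following is a minimal sketch, not part of the original project: it assumes a hypothetical local layout with one sub-folder per tag (for example `life_vest/` and `water/`), and the function names are made up for illustration.

```python
from pathlib import Path

MIN_IMAGES_PER_TAG = 30  # minimum per tag mentioned in this post
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed image file types


def count_images_per_tag(dataset_root):
    """Count image files in each tag sub-folder of dataset_root.

    Assumes a hypothetical layout: dataset_root/<tag_name>/<image files>.
    """
    counts = {}
    for tag_dir in Path(dataset_root).iterdir():
        if tag_dir.is_dir():
            counts[tag_dir.name] = sum(
                1
                for f in tag_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


def tags_needing_more_images(counts, minimum=MIN_IMAGES_PER_TAG):
    """Return {tag: number of images still missing} for tags below the minimum."""
    return {tag: minimum - n for tag, n in counts.items() if n < minimum}
```

Calling `tags_needing_more_images(count_images_per_tag("dataset"))` would then list the tags that still need more photos, including the "empty" environment tag.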

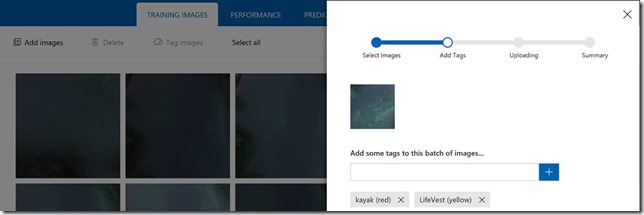

Once the images are ready, you can go ahead and create a new project. Thanks to the “Training Images” tab, you can upload as many images as you like, and assign tags to them:

You can also assign one or more tags per image during this upload process, or even at a later time.

As mentioned earlier, it’s important to create a tag for the “empty” environment. In our case, we uploaded images of the ocean. At an altitude of about 3000 ft., the ocean looks pretty much the same everywhere, so we also used different filters and uploaded deep water as well as shallow water photos:

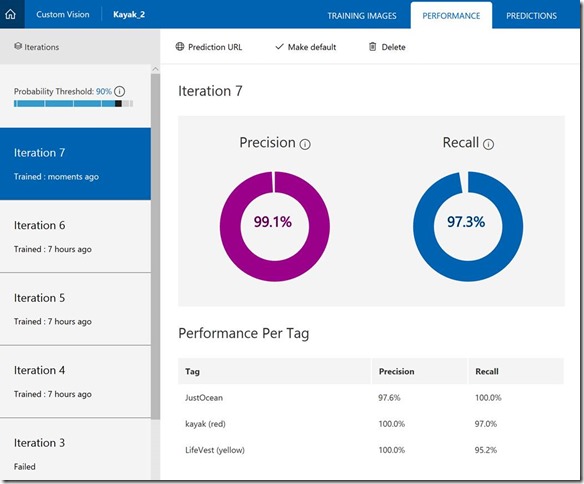

Once all tags are ready, click the “Train” button and, in a matter of seconds, your model will be ready for testing. Using the “Performance” tab, you can also check how many images were accepted per tag, and if you are satisfied with the results, you can make the current iteration the default:

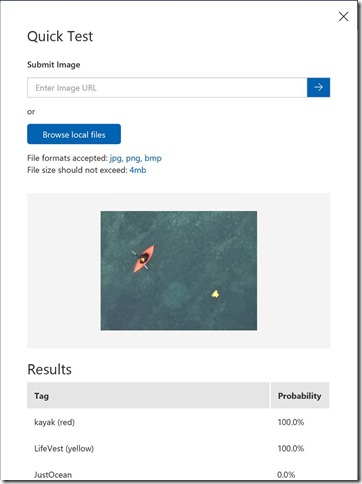

Before you start using the model, the Custom Vision portal provides one more opportunity: you can test images from your dataset directly on the portal. To do this, simply click on the “Quick Test” button and upload an image:

In addition, you can use the “Predictions” tab to see all results on each iteration, and reassign images using existing or new tags to improve the model on future iterations.

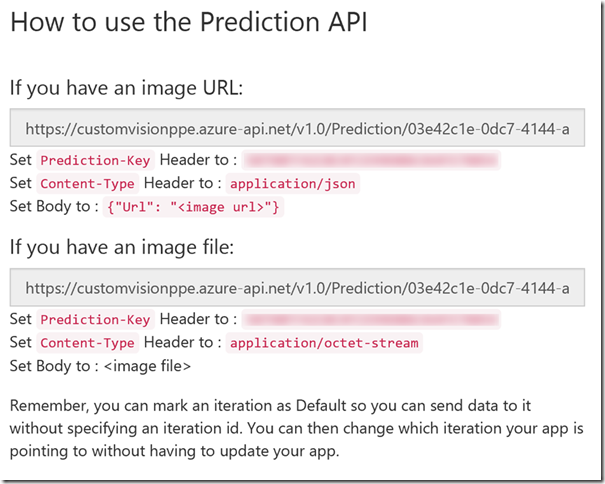

Finally, you can also integrate the model with your application. To do this, select the “Performance” tab and select the Prediction URI menu item:

You can see that it is possible to send either image URIs or the image files themselves. In our case, we used the second approach, as all our images were stored in a private blob and direct URIs would not have worked.
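As a rough illustration of the image-file approach, here is a minimal Python sketch. It is not the project's actual code. It assumes the prediction URL and key are copied from the portal, and that the service expects the raw image bytes with a `Prediction-Key` header and an `application/octet-stream` content type; verify both against what the portal shows for your project. The function names are hypothetical.

```python
import json
import urllib.request


def build_prediction_request(prediction_url, prediction_key, image_bytes):
    """Build a POST request that carries the raw image bytes in the body.

    prediction_url and prediction_key are placeholders for the values
    shown in the portal; the header names are assumptions to verify there.
    """
    return urllib.request.Request(
        prediction_url,
        data=image_bytes,
        headers={
            "Prediction-Key": prediction_key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )


def classify_image(prediction_url, prediction_key, image_bytes, timeout=30):
    """Send the image and return the parsed JSON response unchanged.

    The response shape is not described in this post, so no fields are
    interpreted here.
    """
    request = build_prediction_request(prediction_url, prediction_key, image_bytes)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Because the image travels in the request body, the images can stay in a private blob: the caller downloads the bytes with its own credentials and passes them to `classify_image`.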

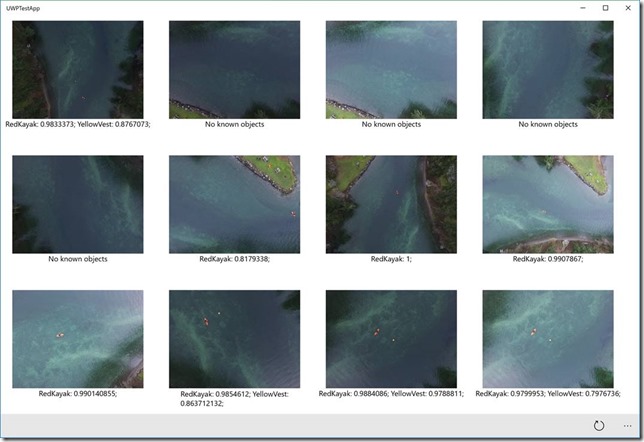

Below you can see some results for randomly selected images from our dataset that were not part of the training process:

In conclusion, it was not sufficient to implement the Custom Vision model alone. We needed to store images somewhere, activate the service once a new image is available, store results in a database, and present the data to operators. To achieve all these goals, and maximize efforts, the following Azure services were utilized:

- Azure IoT Hub: authenticated drones and sent notifications to our infrastructure about new images that were available. The messages contained the images’ locations in Azure Blobs, as well as additional information such as GPS coordinates;

- Azure Blobs: used to store images permanently and make them available to the control center application;

- Azure Functions: triggered by new messages from IoT Hub to invoke the Custom Vision service and update Azure SQL DB;

- Azure SQL: permanent storage to house results;

- Azure Mobile Service: provided required infrastructure to communicate between the control center application and Azure SQL;

- Xamarin: the primary technology which helped to create a cross-platform application for rescue services;
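The flow through these services can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's implementation: the message field names (`deviceId`, `blobUrl`, `latitude`, `longitude`) are hypothetical, `fetch_image` and `classify` are injected stand-ins for the blob download and the Custom Vision call, and `sqlite3` stands in for Azure SQL so the example runs locally.

```python
import json
import sqlite3


def create_results_table(conn):
    """Create a hypothetical results table (sqlite3 stands in for Azure SQL)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS detections ("
        "device_id TEXT, blob_url TEXT, latitude REAL, longitude REAL, "
        "tag TEXT, probability REAL)"
    )


def handle_message(message_body, fetch_image, classify, conn):
    """Process one drone notification, as the function step might do.

    message_body: JSON text with assumed fields deviceId, blobUrl,
                  latitude and longitude.
    fetch_image:  callable(blob_url) -> image bytes (stand-in for blob access).
    classify:     callable(image_bytes) -> (tag, probability)
                  (stand-in for the Custom Vision call).
    """
    message = json.loads(message_body)
    image_bytes = fetch_image(message["blobUrl"])
    tag, probability = classify(image_bytes)
    conn.execute(
        "INSERT INTO detections VALUES (?, ?, ?, ?, ?, ?)",
        (
            message["deviceId"],
            message["blobUrl"],
            message["latitude"],
            message["longitude"],
            tag,
            probability,
        ),
    )
    conn.commit()
    return tag, probability
```

Passing the download and classification steps in as callables keeps the handler easy to test without any cloud resources; the control center application would then read the stored rows.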

Here is a picture of the InDro Robotics / Microsoft team on Salt Spring Island:

Thank you to everyone who participated, and please keep an eye out for our next post, which will go more in-depth on the Azure Functions and Xamarin components of this project.

Comments

- Anonymous

July 29, 2017

Very good, in France we have the Helper drone.But this soft look way better and go much deeper in analysis.Congrat !