In-Role Cache Demystified

Abstract

This article explains some of the concepts behind Windows Azure In-Role Cache: how the cache cluster functions internally, how a client communicates with the cache, and how memory is managed.

This article assumes that the reader is already familiar with In-Role Cache and related concepts. If you are not, please read Caching in Windows Azure and How to Use Windows Azure Caching first.

Introduction

With In-Role Cache, you configure a cloud service role, such as a web role or a worker role, to give up some of its memory for the cache. As the name “In-Role” suggests, the cache lives in the role. When you configure a role for caching, the cache plugin is deployed along with the role on the Windows Azure PaaS VM and runs there.

Cache Service Setup

When caching is enabled on a role at development time, in either the Co-located or the Dedicated topology, Visual Studio imports a plugin named “Caching” into the service definition of the role. The job of this plugin is to run CacheInstaller.exe, which at a high level does the following:

· Install CacheService

· Install CacheDiagnosticsService

· Install CacheStatusIndicator

· Install manifests for Windows events, traces and perfmon counters

· Configure Windows Error Reporting for CacheService crashes

· Add firewall exceptions so that Cache role is able to communicate with other role instances

The Cache Service is the service that stores the objects in memory and communicates with other cache instances. The CacheStatusIndicator service monitors the Cache Service; if the Cache Service is down or unresponsive, it sets the status of the role on the management portal to busy, otherwise to ready/running.

Cache Cluster Management

After the co-located or dedicated cache roles are deployed to Windows Azure, the instances of the cache-enabled role form a cluster, or ring, for resiliency and uptime. This ring can be thought of as a failover cluster, and it maintains its state information (much like a quorum) in a repository. For Cache, this repository is a storage blob, generally referred to as the config blob, which is stored in the container “cacheclusterconfigs”. The blob name is formed from the deployment ID and the role name: <deployment id>_<role name>_ConfigBlob. The config blob typically contains information such as the number of nodes, the partition distribution, IP addresses and ports.
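As a small illustration of the naming pattern above, the sketch below builds a config blob name from its two parts. The deployment ID and role name used here are made up for the example.

```python
def config_blob_name(deployment_id: str, role_name: str) -> str:
    """Build the blob name in the form <deployment id>_<role name>_ConfigBlob."""
    return f"{deployment_id}_{role_name}_ConfigBlob"


# Hypothetical deployment ID and role name, for illustration only.
print(config_blob_name("0123abcd", "CacheWorkerRole"))
```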

When the Cache Service starts up, or when a new node joins the ring, it first connects to storage to check whether the config blob exists. If the blob is already there, another cache instance must have created it; otherwise this instance creates it. As the other Cache Service instances start up, they also connect to storage, use the Azure RoleEnvironment APIs to discover the other instances running in the same deployment, and connect to their neighboring instances to form the ring. All Cache Service instances are configured with internal endpoints, so each instance can discover the entire cache topology, including IP addresses and port numbers.

Each Cache Service node is assigned an ID, and the ring is formed so that the node with the lowest ID is a neighbor of the node with the highest ID. In the accompanying diagram, node 0 is a neighbor of node 250.
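The wrap-around between the lowest and highest IDs can be sketched in a few lines of Python. This is only an illustration: it assumes the ring is ordered by ascending node ID, and every ID other than 0 and 250 is invented for the example.

```python
def ring_neighbors(node_ids):
    """Map each node ID to its (predecessor, successor) in the ring."""
    ordered = sorted(node_ids)
    count = len(ordered)
    return {
        node: (ordered[(i - 1) % count], ordered[(i + 1) % count])
        for i, node in enumerate(ordered)
    }


# IDs 50..200 are hypothetical; only 0 and 250 come from the text.
neighbors = ring_neighbors([0, 50, 100, 150, 200, 250])
print(neighbors[0])    # (250, 50): the lowest ID wraps around to the highest
print(neighbors[250])  # (200, 0): the highest ID wraps around to the lowest
```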

The nodes are placed in a round-robin fashion in accordance with fault domain (FD) and upgrade domain (UD) rules. This ensures that no two consecutive neighbors are placed in the same FD/UD. The same principle applies to the placement of secondary nodes when High Availability is turned on.

To maintain the cluster information in the config blob, the Cache Service takes a lease on the blob so that it can update it from time to time. This lease is called the External Store Lease. If a Cache Service instance cannot access the config blob in storage, it cannot come online (though it will restart and retry).

Because a network or storage outage can make storage unreachable, the cache cluster is made resilient to this failure up to a point. The Cache Service on every role instance updates its state in the config blob every 5 minutes to mark that it is alive. Every successful connection and update of the config blob extends the lifetime of that Cache Service in the ring by an hour. If the Cache Service cannot reach storage, it retries 12 times in an hour, then crashes and starts all over again. If all the nodes in the ring are unable to reach storage, the whole cache cluster is brought down.
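The timing described above (an update every 5 minutes, one more hour of life per success, 12 failed attempts before a crash) can be modeled with a short sketch. It is a simplified model rather than the service's actual logic: it assumes the attempts are evenly spaced at 5 minutes and that the last successful update happened at minute 0.

```python
UPDATE_INTERVAL_MIN = 5      # from the text: state is written every 5 minutes
LIFETIME_EXTENSION_MIN = 60  # from the text: each success buys one more hour


def minutes_until_crash(attempts):
    """Return the minute at which the service crashes, or None if it survives.

    `attempts` holds one boolean per 5-minute update attempt;
    True means the config blob was updated successfully.
    """
    expires_at = LIFETIME_EXTENSION_MIN  # assumed successful update at minute 0
    for i, succeeded in enumerate(attempts, start=1):
        now = i * UPDATE_INTERVAL_MIN
        if succeeded:
            expires_at = now + LIFETIME_EXTENSION_MIN
        elif now >= expires_at:
            return now
    return None


print(minutes_until_crash([False] * 12))  # 12 failed attempts in a row
print(minutes_until_crash([False] * 11))  # one attempt short of a crash
```

In this model, a single successful update in the middle of an outage resets the clock, which is why a brief storage interruption does not take a node out of the ring.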

Data Distribution

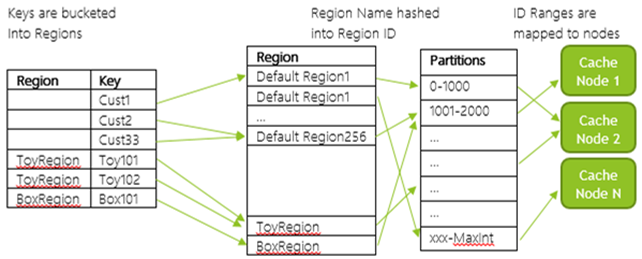

When a user stores an object, its key is hashed and the keys are distributed across regions. These regions contain the key ranges, which are simply partitions that indicate which partition an object belongs to based on its key. Partitions are fixed ranges of integer values. The accompanying diagram depicts how keys are mapped to partitions and partitions are mapped to nodes.

Partitions are not created dynamically; a partition is never created at the moment an object needs to be stored. Key ranges are pre-calculated and distributed across the cache instances in the form of partitions. When an object is stored, it is assigned to a partition based on the hash of its key and is stored on the node hosting that partition.

Partitions are spread across cache nodes. Each node can host multiple partitions, but each partition lives on only one node.
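The key-to-partition-to-node mapping can be sketched as follows. The hash function (CRC32), the partition count and the node names are all assumptions chosen for the example; the text does not say which hash or how many partitions the Cache Service actually uses.

```python
import zlib

HASH_SPACE = 2**32   # crc32 yields values in [0, 2**32)
PARTITION_COUNT = 8  # hypothetical; the real count is not stated in the text


def partition_for_key(key: str) -> int:
    """Hash the key and find the fixed key range (partition) it falls into."""
    key_hash = zlib.crc32(key.encode("utf-8"))
    range_size = HASH_SPACE // PARTITION_COUNT
    return key_hash // range_size


def assign_partitions(node_names):
    """Spread the pre-calculated partitions over nodes; one owner per partition."""
    return {p: node_names[p % len(node_names)] for p in range(PARTITION_COUNT)}


def node_for_key(key, partition_map):
    """Look up the node hosting the partition that owns this key."""
    return partition_map[partition_for_key(key)]


partition_map = assign_partitions(["node-0", "node-1", "node-2"])
print(partition_for_key("customer:42"), node_for_key("customer:42", partition_map))
```

Because the ranges are fixed up front, storing a new object never changes the partition layout; only the lookup runs at store time.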

Failover

When a Cache Service node goes down gracefully, for example during Patch Tuesday updates, it tries to transfer its data to its neighboring nodes on a best-effort basis within 5 minutes. Because the transfer depends on the size of the data, the free memory on the receiving node and that node's responsiveness, there is no guarantee that all data in the cache will be transferred. If a node shuts down abruptly, its data is lost.

Regardless of whether the data is successfully transferred, the neighboring nodes always take over the partitions of a node once it is determined to be down. Partition takeover here means that ownership of the key ranges passes to the neighboring node(s). It does not guarantee that the data itself has moved with them.
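A rough sketch of partition takeover is shown below. How the orphaned partitions are split between the two neighbors is not described in the text, so the alternating assignment here is purely an assumption, as are the node and partition IDs.

```python
def take_over_partitions(partition_map, failed_node):
    """Reassign ownership of the failed node's partitions to its ring neighbors.

    `partition_map` maps partition ID -> owning node ID. Only ownership of the
    key ranges moves here; whether the cached data moved too is a separate matter.
    """
    ring = sorted(set(partition_map.values()))
    if len(ring) < 2:
        raise ValueError("no surviving node to take over the partitions")
    i = ring.index(failed_node)
    # Predecessor and successor in the ring; they coincide in a two-node ring.
    neighbors = list(dict.fromkeys([ring[i - 1], ring[(i + 1) % len(ring)]]))
    new_map = dict(partition_map)
    orphaned = sorted(p for p, owner in partition_map.items() if owner == failed_node)
    for n, partition in enumerate(orphaned):
        new_map[partition] = neighbors[n % len(neighbors)]
    return new_map


# Hypothetical layout: six partitions spread over nodes 0, 50 and 100.
before = {0: 0, 1: 50, 2: 100, 3: 0, 4: 50, 5: 100}
print(take_over_partitions(before, failed_node=50))
```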

Cache also supports high availability (HA). With HA enabled, an object stored in the cache is written synchronously to the primary node and to one secondary node, which increases write latency. All reads and writes are handled by the primary node. If the primary node fails, the adjoining neighbors re-form the ring, promote its secondary to primary and create another secondary.

If a client tries to fetch an object while its partition is being failed over, it gets a retryable error and should retry with exponential back-off logic. Failover of partitions should not take more than 2 minutes.
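A client-side retry with exponential back-off might look like the sketch below. The exception class and the `fetch` callable are hypothetical stand-ins for the real cache client, and the starting delay of 0.5 seconds is an arbitrary choice; only the 2-minute ceiling comes from the text.

```python
import time


class RetryableCacheError(Exception):
    """Hypothetical stand-in for the retryable error the client receives."""


def get_with_backoff(fetch, key, max_wait_s=120.0, base_delay_s=0.5, sleep=time.sleep):
    """Call fetch(key), retrying retryable errors with exponential back-off."""
    waited = 0.0
    delay = base_delay_s
    while True:
        try:
            return fetch(key)
        except RetryableCacheError:
            # Give up once the next wait would exceed the failover ceiling.
            if waited + delay > max_wait_s:
                raise
            sleep(delay)
            waited += delay
            delay *= 2  # double the wait after every failed attempt
```

Passing `sleep` as a parameter keeps the function easy to test without actually waiting.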

Client Communication

When the client first comes up, it reads the role name from the AutoDiscover property of the DataCacheClient section in Web.config/App.config and gets the list of IP addresses of all the cache role instances using the RoleEnvironment class. Once it has the list of IPs, it contacts a cache server (typically starting with the instance with ID 0) to get what is called the routing table.

A routing table contains information about the distribution of partitions across the cache role instances. When the client needs an object, it therefore knows which role instance hosts that object and calls that instance directly through its internal endpoint.

The cache client stores the routing table locally and refreshes it as needed. For example, the client may get a NotPrimary error while fetching an object, in which case it refreshes the routing table. A NotPrimary error can occur when HA is enabled on a cache, the primary node hosting the partition has gone down, and a secondary has not yet been promoted to primary.
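The refresh-on-NotPrimary behavior can be sketched as a small client wrapper. Everything here is a simplified model: the class, the error type and the two callables are hypothetical, and the caller supplies the partition ID directly instead of hashing the key.

```python
class NotPrimaryError(Exception):
    """Hypothetical stand-in for the NotPrimary error described above."""


class RoutingClient:
    """Caches a routing table and refreshes it when a node is not primary."""

    def __init__(self, fetch_routing_table, send_get):
        # fetch_routing_table() -> {partition_id: node}
        # send_get(node, key) -> value, or raises NotPrimaryError
        self._fetch_routing_table = fetch_routing_table
        self._send_get = send_get
        self._routing_table = fetch_routing_table()

    def get(self, key, partition_id):
        node = self._routing_table[partition_id]
        try:
            return self._send_get(node, key)
        except NotPrimaryError:
            # The cached table is stale: refresh it and retry once.
            self._routing_table = self._fetch_routing_table()
            return self._send_get(self._routing_table[partition_id], key)
```

A real client would combine this with a back-off retry, since the refreshed table may still point at a node whose secondary has not been promoted yet.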

Memory Management

Even though the whole memory of the box is at the disposal of the Cache Service, not all of it can be used. Part of the RAM is used by the OS. The rest can be used by the Cache Service to store user objects. It is important to understand that user objects carry a cost: the overhead of managing them, such as internal data structures and items like keys, regions and tags.

The Cache Service generally allocates pages of at least 1 KB to store objects, so some waste is expected. For example, to store an object of 0.5 KB, a 1 KB page is allocated and 0.5 KB is wasted, because the next allocation gets a new page. There is also overhead in managing the pages themselves, so users who closely compare the amount of data stored with the cache usage reported will see a difference between the two figures.
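The page rounding can be worked through with a short calculation. The sketch assumes that an object larger than 1 KB occupies a whole number of 1 KB pages; the text only states the 1 KB minimum, so the behavior for larger objects is an assumption.

```python
import math

PAGE_SIZE_KB = 1.0  # from the text: pages are at least 1 KB


def allocated_kb(object_size_kb: float) -> float:
    """Memory taken by an object when it is rounded up to whole pages."""
    return math.ceil(object_size_kb / PAGE_SIZE_KB) * PAGE_SIZE_KB


def wasted_kb(object_size_kb: float) -> float:
    """Space allocated for the object but not used by it."""
    return allocated_kb(object_size_kb) - object_size_kb


print(wasted_kb(0.5))      # 0.5, matching the example in the text
print(allocated_kb(2.25))  # 3.0 under the whole-page assumption
```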

When a user configures a certain percentage of memory for a co-located cache, or the entire box memory for a dedicated cache, that amount of memory includes the cache overhead. Roughly, of 1 GB of cache memory, 731 MB is available for user objects and the rest goes to overhead. If regions, tags and notifications are configured in the cache, it can consume more memory than the configured size. To track the size of user data there are performance counters such as Total Data Size, Total Primary Data Size and Total Secondary Data Size (in HA), but currently there is no way to measure how much memory is taken by the overhead. You can get a rough estimate of the overhead by subtracting Total Data Size from the Working Set of the cache service process. This is only an estimate and is not guaranteed to be accurate.
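Both rules of thumb above reduce to simple arithmetic, sketched below. Scaling the 731 MB figure linearly to other cache sizes is an assumption made for the example, and the counter values passed in are invented.

```python
USER_DATA_MB_PER_GB = 731  # from the text: rough figure per 1 GB of cache memory


def usable_user_data_mb(configured_cache_mb: float) -> float:
    """Rough space left for user objects, assuming the ratio scales linearly."""
    return configured_cache_mb * USER_DATA_MB_PER_GB / 1024


def estimated_overhead_mb(working_set_mb: float, total_data_size_mb: float) -> float:
    """Rough overhead estimate: Working Set minus Total Data Size."""
    return working_set_mb - total_data_size_mb


print(usable_user_data_mb(1024))        # 731.0
print(estimated_overhead_mb(900, 600))  # 300, using made-up counter readings
```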

Sometimes the Cache Service can show memory usage higher than it is configured for. The reason again can be expired or evicted objects, or heavy use of regions, tags and notifications. You will see the working set of the Cache Service increasing. Don’t be alarmed if you see this, because the GC may simply not have kicked in yet to free up memory. Typically, memory usage drops back to normal after a Gen 2 collection.

The article Windows Azure In-Role Caching has a good explanation of how much RAM is used in both co-located and dedicated deployments. Users can monitor the relevant Cache performance counters to track memory usage.

This is different in DevFabric, where the memory % setting has no effect for either dedicated or co-located roles. The cache emulator process reserves 16% of total box memory per cache role, and this memory is divided among the instances of that role. This memory is only for user objects; overhead is extra.

Consider the following scenarios:

- There are 2 instances of a Co-located or Dedicated cache role in a project. There will be 2 instances of the Cache Emulator process, and both instances will share the same 16% memory allocation.

- There are 3 instances of a Co-located or Dedicated cache role in a project. There will be 3 instances of the Cache Emulator process, and all three instances will share the same 16% memory allocation.

- Unlikely, but for the sake of argument, there are 3 instances of a Co-located cache role and 2 instances of a Dedicated cache role in a project. There will be 3 + 2 Cache Emulator process instances, where the 3 Co-located cache emulators will share one 16% allocation and the 2 Dedicated cache emulators will share their own 16%.
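The scenarios above can be turned into numbers with a small calculation. The sketch assumes the 16% reserved for a role is split evenly among its instances; the role names and the 8000 MB box size are hypothetical.

```python
EMULATOR_PERCENT = 16  # from the text: 16% of total box memory per cache role


def emulator_memory_per_instance_mb(total_box_memory_mb, instances_per_role):
    """Return {role name: MB available to each emulator instance of that role}.

    Assumes the 16% reserved for a role is split evenly among its instances.
    """
    per_role_mb = total_box_memory_mb * EMULATOR_PERCENT / 100
    return {
        role: per_role_mb / count
        for role, count in instances_per_role.items()
    }


# Third scenario above on a hypothetical 8000 MB development box.
shares = emulator_memory_per_instance_mb(
    8000, {"CoLocatedRole": 3, "DedicatedRole": 2}
)
print(shares)
```

Adding instances to a role in the emulator therefore shrinks each instance's share instead of growing the total cache size.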

Connection Management

Instances of DataCacheFactory and DataCache maintain physical connections to the cache servers, which makes them expensive to create and destroy. In general, keep the number of instances to a minimum so that the cache servers are not overwhelmed with too many client connections, and reuse the same instances for subsequent requests to the cache.

As a best practice, use connection pooling if possible and control the number of connections opened to the cache server by tuning the “MaxConnectionsToServer” attribute of the <datacacheclient> setting in configuration.

The article Understanding and Managing Connections in Windows Azure Cache explains this well.

The cache server also manages idle connections internally. If a connection is not used for 10 minutes (the default), it is closed. For subsequent requests, the connection to the server is re-established. You can control how long a connection may go unused before it is marked idle by setting the “ReceiveTimeout” attribute in the <transportProperties> setting of the datacacheclient section in configuration.

Two more settings control timeouts: ChannelOpenTimeout applies while opening a connection to the cache service, and RequestTimeout applies while waiting for the response to an API call on the cache service. The article Configuring Cache Client Timeouts (Windows Server AppFabric Caching) explains both.

Diagnostics

The In-Role Cache service introduces switches for controlling both client-side and server-side traces. They are controlled by the settings “ClientDiagnosticLevel” for the client and “DiagnosticLevel” for the server in the .cscfg file. These settings are added when you enable caching on a role or when you install the caching NuGet package.

The values of these settings control the amount of information collected in logs and performance counters. The client and server logs are kept locally until Cache diagnostics is hooked up with Windows Azure Diagnostics in the role startup code to transfer the logs to storage. The article In-Role Cache Troubleshooting and Diagnostics (Windows Azure Cache) explains this well.

Below is a quick summary of which logs go where:

| Log Type | Storage Type | Container/Table Name |
| --- | --- | --- |
| ETW traces | Blob | wad-custom-logs |
| Performance Counters | Table | WADPerformanceCountersTable |
| Windows Event Logs | Table | WADWindowsEventLogsTable |
| Crash Dumps | Blob | wad-crash-dumps |
| Client Logs | Table | WADLogsTable |

Conclusion

Windows Azure In-Role Cache provides powerful and flexible ways of managing your data in the cache for faster performance. The purpose of this article was to answer frequently asked questions about how In-Role Cache manages itself, its data and its logs.

![clip_image001[4] clip_image001[4]](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/57/80/metablogapi/0412.clip_image0014_thumb_1208FF14.png)