One of my customers recently asked me to help them solve what sounded like a pretty simple problem until we started diving into the details. I believe the solution we came up with is an elegant one, and I thought it might be of interest to others.

PROBLEM: Create and continually maintain an accessible backup of an Azure Storage Account, including all of its contents

SOLUTION: Due to the cost difference, changing the primary storage account setting to Read-Access Geo-Redundant Storage (RA-GRS) was not an option. That leaves only one other option: maintaining a copy of the Storage Account in another region. What makes this solution elegant, though, is the HOW.

This solution uses an Azure Automation Runbook to do an initial copy of the Storage Account, and then a Blob Trigger based Azure Function to copy every new item as it is added to the Storage Account. If the secondary Storage Account is in another region and either is set to the new Cool Storage Tier or uses the Locally Redundant Storage (LRS) setting, then the costs are actually less than if the primary storage account were set to RA-GRS. In addition, the secondary storage account is always accessible in the second region and available for DR purposes.

Assumptions

This solution assumes that there are a few pieces that you already have in place within your Azure subscription and I would recommend that they are all in the same Resource Group.

- All work is done using Azure Resource Manager (ARM)

- An Azure Automation Account exists, and a Service Principal has been created and assigned as the "AzureRunAsConnection" within the account. See the Additional Resources for information on how to do this either automatically or manually.

- Primary LRS based Storage Account already exists (You can easily switch the type to LRS anytime that you like)

- Secondary Cool Tier LRS based Storage Account already exists in a second region

Solution Deployment - Automation Runbook

With the assumptions above in place, there are two major pieces of functionality that need to be deployed. The first is an Azure Automation Runbook, which is used to do the initial copy of the primary storage account and all of its contents. To get started, perform the following steps within the Azure Automation account:

- Create new PowerShell based Runbook

- Create two new Variable based Assets with the values being the names of the Primary and Secondary Storage Accounts: Log-Storage-Primary & Log-Storage-Secondary

- Copy & Paste the following code into the newly created Runbook

- Run Manually or Schedule to Run the Runbook from within the Automation Account
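
The two Variable assets can also be created from PowerShell instead of the portal. The following is a minimal sketch, assuming the AzureRM.Automation module is installed and you are already signed in to the subscription. The resource group, Automation account, and storage account names are placeholders, not values from this solution.

```powershell
# Placeholder names (assumptions): replace with your own values.
$resourceGroup     = 'my-resource-group'
$automationAccount = 'my-automation-account'

# Map each variable asset name used by the runbook to a storage account name.
$variables = @{
    'Log-Storage-Primary'   = 'myprimaryaccount'
    'Log-Storage-Secondary' = 'mysecondaryaccount'
}

foreach ($name in $variables.Keys) {
    # -Encrypted is mandatory; account names are not secrets, so store them unencrypted.
    New-AzureRmAutomationVariable -ResourceGroupName $resourceGroup `
        -AutomationAccountName $automationAccount `
        -Name $name -Value $variables[$name] -Encrypted $false
}
```

The runbook then reads these values back with Get-AutomationVariable, so the asset names must match exactly.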

    $primary = Get-AutomationVariable -Name 'Log-Storage-Primary'
    $secondary = Get-AutomationVariable -Name 'Log-Storage-Secondary'

    $Conn = Get-AutomationConnection -Name AzureRunAsConnection
    Add-AzureRMAccount -ServicePrincipal -Tenant $Conn.TenantID -ApplicationId $Conn.ApplicationID -CertificateThumbprint $Conn.CertificateThumbprint

    $primarykey = Get-AzureRmStorageAccountKey -ResourceGroupName accuweather -Name $primary
    $secondarykey = Get-AzureRmStorageAccountKey -ResourceGroupName accuweather -Name $secondary
    # With AzureRM.Storage v1.1.0 or later, use $primarykey[0].Value and $secondarykey[0].Value instead of .Key1 (see Comments)
    $primaryctx = New-AzureStorageContext -StorageAccountName $primary -StorageAccountKey $primarykey.Key1
    $secondaryctx = New-AzureStorageContext -StorageAccountName $secondary -StorageAccountKey $secondarykey.Key1

    $primarycontainers = Get-AzureStorageContainer -Context $primaryctx

    # Loop through each of the containers
    foreach($container in $primarycontainers)
    {
        # Do a quick check to see if the secondary container exists, if not, create it.
        $secContainer = Get-AzureStorageContainer -Name $container.Name -Context $secondaryctx -ErrorAction SilentlyContinue
        if (!$secContainer)
        {
            $secContainer = New-AzureStorageContainer -Context $secondaryctx -Name $container.Name
            Write-Host "Successfully created Container" $secContainer.Name "in Account" $secondary
        }

        # Loop through all of the objects within the container and copy them to the same container on the secondary account
        $primaryblobs = Get-AzureStorageBlob -Container $container.Name -Context $primaryctx

        foreach($blob in $primaryblobs)
        {
            $copyblob = Get-AzureStorageBlob -Context $secondaryctx -Blob $blob.Name -Container $container.Name -ErrorAction SilentlyContinue

            # Check to see if the blob exists in the secondary account or if it has been updated since the last runtime.
            if (!$copyblob -or $blob.LastModified -gt $copyblob.LastModified) {
                $copyblob = Start-AzureStorageBlobCopy -SrcBlob $blob.Name -SrcContainer $container.Name -Context $primaryctx -DestContainer $secContainer.Name -DestContext $secondaryctx -DestBlob $blob.Name

                $status = $copyblob | Get-AzureStorageBlobCopyState
                while ($status.Status -eq "Pending")
                {
                    $status = $copyblob | Get-AzureStorageBlobCopyState
                    Start-Sleep 10
                }

                Write-Host "Successfully copied blob" $copyblob.Name "to Account" $secondary "in container" $container.Name
            }

        }

    }

Let's take a look at exactly what the code does so that we can understand it because this will then feed into what also needs to be done inside of the Azure Function.

- Lines 1-5: In these first few lines we are pulling in the values of the Azure Automation Variables referencing the Storage Account names as well as the Service Principal Connection and then using that to authenticate against a specific Azure subscription and Azure Active Directory Tenant.

- Lines 7-11: Here we are taking the names of the Storage Accounts and using them to get the access keys for each specific Storage Account so that a Storage Account Context can be created. A Storage Account Context is used to actually manipulate the Storage Account and the objects stored within.

- Lines 13-24: Now that we have the Context for each Storage Account, we loop through each of the Containers within the primary Storage Account. For each one, we check whether a Container with the same name exists in the secondary Storage Account and create it if it does not.

- Lines 27-49: Lastly, we loop through each of the blobs within the container. We first check whether the blob exists in the secondary account and, if it does, whether the primary blob's LastModified date is newer, which determines whether the copy needs to happen. Once a copy is kicked off, we poll its status every 10 seconds until it is no longer pending before moving on to the next blob.
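
The key lookup described for lines 7-11 is sensitive to the AzureRM.Storage module version: older releases return a single object with Key1 and Key2 properties, while v1.1.0 and later return a list of entries that each expose a Value (as one of the comments at the end of this post reports). A small helper can hide the difference. This is only a sketch; Get-FirstStorageKey is a hypothetical name and is not part of any Azure module.

```powershell
# Hypothetical helper: return the first access key from either result shape
# of Get-AzureRmStorageAccountKey.
function Get-FirstStorageKey {
    param(
        [Parameter(Mandatory = $true)]
        $KeyResult
    )

    # Older modules: a single object with Key1/Key2 properties.
    if ($KeyResult.PSObject.Properties['Key1']) {
        return $KeyResult.Key1
    }

    # Newer modules: a list of key entries, each with KeyName and Value.
    return @($KeyResult)[0].Value
}
```

With this in place, the context could be created as `New-AzureStorageContext -StorageAccountName $primary -StorageAccountKey (Get-FirstStorageKey $primarykey)` regardless of which module version the Automation account has loaded.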

NOTE: This script loops through the containers and blobs one by one, which means that as the storage account grows, it will make sense to modify the script to process more than one blob at a time. This is also why the Azure Automation Runbook is not the only piece of this solution.
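
One way to process more than one blob at a time is to queue every copy in a container first and only then wait for them, since the copy itself runs on the storage service once it has been started. The sketch below is one possible approach rather than part of the original runbook. It assumes storage contexts like the $primaryctx and $secondaryctx created earlier, assumes the destination container already exists, and skips the LastModified check for brevity. Copy-ContainerBlobs is a hypothetical function name.

```powershell
function Copy-ContainerBlobs {
    param(
        [Parameter(Mandatory = $true)] [string] $ContainerName,
        [Parameter(Mandatory = $true)] $SourceContext,
        [Parameter(Mandatory = $true)] $DestContext
    )

    # Phase 1: queue a server-side copy for every blob without waiting.
    $blobs = Get-AzureStorageBlob -Container $ContainerName -Context $SourceContext
    $copies = foreach ($blob in $blobs) {
        # -Force overwrites an existing destination blob without prompting.
        Start-AzureStorageBlobCopy -SrcBlob $blob.Name -SrcContainer $ContainerName `
            -Context $SourceContext -DestContainer $ContainerName `
            -DestBlob $blob.Name -DestContext $DestContext -Force
    }

    # Phase 2: wait for each queued copy and report its final status.
    foreach ($copy in $copies) {
        $state = $copy | Get-AzureStorageBlobCopyState -WaitForComplete
        [pscustomobject]@{ Blob = $copy.Name; Status = $state.Status }
    }
}
```

Calling `Copy-ContainerBlobs -ContainerName $container.Name -SourceContext $primaryctx -DestContext $secondaryctx` inside the container loop would replace the inner per-blob loop. Very large containers may still need to be split into smaller batches.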

Solution Deployment - Azure Function

The second piece of functionality for this solution is a C# based Azure Function App, which maintains the replication as new blobs are added to the primary storage account. This is done through a BlobTrigger based Function that takes its input from the first storage account and automatically copies it over to the second storage account. For more information on Azure Functions, and specifically BlobTrigger based functions, please see the Additional Resources section below.

We have to start off with the creation of an Azure Function App, which is the same process as creating an Azure Web App and requires an App Service Plan. One thing to note here, though, is that when choosing/creating the App Service Plan, I would recommend that you use the new D1 Tier, which is designed specifically for Azure Function Apps. Please see the following for more specific information about Azure Function pricing: https://azure.microsoft.com/en-us/pricing/details/functions/.

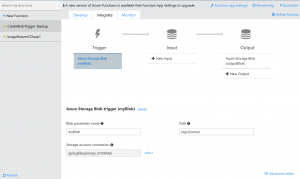

Once the Function App has been created, we can start creating functions and tying them to specific triggers so that they can be called properly. In this case, the function that I created is tied to a BlobTrigger, which means that the function gets called whenever a Blob gets created or updated. The definition/configuration of this Trigger can be found in the Integration settings of the function, which can be viewed graphically, like the image below, or as JSON, which is also shown below.

    {
      "bindings": [
        {
          "path": "logs/{name}",
          "connection": "apilogfilesprimary_STORAGE",
          "name": "myBlob",
          "type": "blobTrigger",
          "direction": "in"
        },
        {
          "path": "logs/{name}",
          "connection": "apilogfilessecondary_STORAGE",
          "name": "outputBlob",
          "type": "blob",
          "direction": "out"
        }
      ],
      "disabled": false
    }

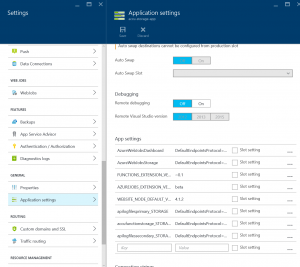

In the settings above, you can see that we are using a blobTrigger, which automatically brings in the Blob object that is being created or updated under a specific container path (i.e. logs) and places it into a variable called "myBlob". In addition, there is a definition for an output variable called "outputBlob", into which the blob will be copied. The last major area of the Integration settings to mention is the connection parameters. These parameters refer to Application Settings that are defined within the App Service that backs the Functions. In this case, both of the connection parameters are Connection Strings that point to each of the Storage Accounts being used. In some cases these can be automatically populated, but it is still good to know where they are actually stored.
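
Each of those Application Settings holds a standard Azure Storage connection string. As a sketch of what the values look like, the hypothetical helper below (New-StorageConnectionString is not an Azure cmdlet) assembles one from an account name and key. The setting names are taken from the binding JSON above; the account names and keys are placeholders.

```powershell
# Hypothetical helper: build a storage connection string from its parts.
function New-StorageConnectionString {
    param(
        [Parameter(Mandatory = $true)] [string] $AccountName,
        [Parameter(Mandatory = $true)] [string] $AccountKey
    )

    "DefaultEndpointsProtocol=https;AccountName=$AccountName;AccountKey=$AccountKey"
}

# Placeholder values for illustration only.
$appSettings = @{
    'apilogfilesprimary_STORAGE'   = New-StorageConnectionString -AccountName 'myprimaryaccount' -AccountKey '<primary-key>'
    'apilogfilessecondary_STORAGE' = New-StorageConnectionString -AccountName 'mysecondaryaccount' -AccountKey '<secondary-key>'
}
```

The "connection" value in each binding is the name of the setting, not the connection string itself, so the keys of this table are what must match the JSON.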

The last piece of this Azure Function is the actual code that does the Blob copy each time a new or updated blob comes into the primary storage account. This is a very simple function: as you can see, it takes the input object "myBlob" and uses the standard CopyTo method of the .NET Stream class to copy it to the output object "outputBlob". You will also notice the use of a TraceWriter object to log data back to the console log that is available for monitoring within Azure Functions.

    using System;
    using System.IO;

    public static void Run(Stream myBlob, Stream outputBlob, TraceWriter log)
    {
        log.Verbose($"C# Blob trigger function processed: {myBlob}");

        // Stream the incoming blob straight into the output binding.
        myBlob.CopyTo(outputBlob);
    }

Conclusion

I hope that you can see that this solution is pretty straightforward and, in my opinion, elegant. It easily maintains two complete, accessible copies of a Storage Account and all of its contents in two distinct regions. There is certainly room for improvement depending on your specific business requirements, but it is a great place to start. In addition, Azure Functions are still very new, so there will certainly be improvements as the service matures. Please don't hesitate to comment should you have any suggestions or questions about the solution.

Additional Resources

Azure Cool Storage Announcement: https://azure.microsoft.com/en-gb/documentation/articles/storage-blob-storage-tiers/

Create new Azure Automation Account with Service Principal: https://azure.microsoft.com/en-us/documentation/articles/automation-sec-configure-azure-runas-account/

Assigning a Role to the Automation Account Service Principal: https://azure.microsoft.com/en-us/documentation/articles/automation-role-based-access-control/

Azure Function Pricing: https://azure.microsoft.com/en-us/pricing/details/functions/

Create your first Azure Function: https://azure.microsoft.com/en-gb/documentation/articles/functions-create-first-azure-function/

Azure Storage Triggers: https://azure.microsoft.com/en-gb/documentation/articles/functions-triggers-bindings/#azure-storage-queues-blobs-tables-triggers-and-bindings

Azure Function C# Reference: https://azure.microsoft.com/en-gb/documentation/articles/functions-reference-csharp/

Comments

- Anonymous

August 26, 2016

I found that when I implemented this using Azure Functions, it processed all of the matching blobs in my input storage account first (I believe this is due to the fact that the blobreceipts folder was empty). In any case, I didn't need to do anything else other than create the function, which made this so much easier. Thanks for the article.

- Anonymous

October 26, 2016

Excellent information, and thanks for the script! I found out after a bit of struggling that the modules in my Runbook needed an update, and after that I found out that the rows with New-AzureStorageContext need to be altered since the key handling changed. If anyone else has the same problem, try changing them as below:

    $primaryctx = New-AzureStorageContext -StorageAccountName $primary -StorageAccountKey $primarykey[0].Value
    $secondaryctx = New-AzureStorageContext -StorageAccountName $secondary -StorageAccountKey $secondarykey[0].Value

I found this article that helped me: http://www.codeisahighway.com/breaking-change-with-get-azurermstorageaccountkey-in-azurerm-storage-v1-1-0-azurerm-v1-4-0/