Over the coming months we want to tell you about ISVs and startups with whom we work on various technologies and run into interesting technical questions along the way. We want to share the resulting solutions with you. Perhaps they will help with your own projects or inspire new approaches.

Levion Technologies GmbH is a startup from Graz with an innovative solution in the field of energy management. The solution, presented here in part, also has a public API, so that developers can build further solutions on top of it.

In Part 1 we started with the highlights. More highlights follow here.

Online Communication with Azure Web Apps and Redis

The online communication between the SEMS control unit and the mobile application runs through a Node.js application hosted on Azure Web Apps. It connects to the backend control unit and to the frontend app via WebSockets, and relays messages between them through the Redis Cache service in Microsoft Azure.
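The message flow described above can be modelled without any network or Redis dependency. The following is a minimal sketch, not the actual service: Node's built-in `EventEmitter` stands in for the Redis publish/subscribe channel, and the helper names `connectBackend` and `connectFrontend` as well as the serial `SEM123` are illustrative assumptions. Only the channel names `to:<serial>` and `from:<serial>` are taken from the relay itself.

```javascript
const { EventEmitter } = require('events');

// Stand-in for the Redis pub/sub channel (assumption: the real relay uses Redis).
const relay = new EventEmitter();

// A backend (control unit) receives everything published on "to:<serial>"
// and publishes its own messages on "from:<serial>".
function connectBackend(serial, onMessage) {
  relay.on('to:' + serial, onMessage);
  return { send: (msg) => relay.emit('from:' + serial, msg) };
}

// A frontend (mobile app) is the mirror image.
function connectFrontend(serial, onMessage) {
  relay.on('from:' + serial, onMessage);
  return { send: (msg) => relay.emit('to:' + serial, msg) };
}

const receivedByDevice = [];
const receivedByApp = [];

const device = connectBackend('SEM123', (msg) => receivedByDevice.push(msg));
const app = connectFrontend('SEM123', (msg) => receivedByApp.push(msg));

app.send('{"cmd":"status"}');   // app -> device
device.send('{"power":1200}');  // device -> app

console.log(receivedByDevice, receivedByApp);
```

Because both sides share nothing but a channel name, neither needs to know where the other is connected. That is presumably what makes a pub/sub service such as Redis a good fit when clients may land on different web app instances.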

Here is how the Node.js application is built.

var ws = require('ws'),

nconf = require('nconf'),

express = require('express'),

url = require('url'),

redispubsub = require('node-redis-pubsub');

/// endpoint definitions (for websocket path matching and authentication)

const regex_websocket_path =

'^\/api\/v1\/relay\/(frontend|backend)\/([a-zA-Z0-9]+)$'

// Setup nconf to use (in-order):

// 1. Command-line arguments

// 2. Environment variables

// 3. config file (read-only)

nconf.argv().env().file({ file: __dirname+'/config/config.json' });

// check for needed VARS

if(!nconf.get('PORT')) {

console.log("FATAL: PORT not defined.");

process.exit(1); // non-zero exit code signals the failure to the host

}

// log only when enabled

const logging = nconf.get('DEBUG_CONSOLE');

function logMessage(message) {

if(logging)

console.log(message);

}

/// INIT main parts

var app = express();

var http = require('http').createServer(app);

// redis publish/subscribe

var tls;

if(nconf.get('REDIS_SSL'))

tls={servername: nconf.get('REDIS_HOST') }

var relay = new redispubsub({

host: nconf.get('REDIS_HOST'),

port: nconf.get('REDIS_PORT'),

scope: nconf.get('REDIS_SCOPE'),

tls: tls,

auth_pass: nconf.get('REDIS_KEY')

});

relay.on('error', function(err) {

logMessage('some redis related error: ' + err);

});

/// authentication within websockets

///

function checkAuthorization(info, cb) {

//* THIS WAS REMOVED *//

cb(true);

};

/// WEBSOCKETS

///

///

var server = new ws.Server({

perMessageDeflate: false,

server: http,

verifyClient: checkAuthorization,

});

/// on each websocket we do ...

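server.on('connection', function(socket, req) {

// get the url (ws >= 3 passes the upgrade request as second argument;

// older versions expose it as socket.upgradeReq)

const location = url.parse((req || socket.upgradeReq).url, true);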

const req_url = location.path;

// callback for unsubscribing

var unsubscribe;

// get type of client and serial of target/source system

const match = location.path.match(regex_websocket_path);

if(!match) { // should never happen

socket.close(); // do not leave an unroutable socket open

return;

}

// extract values from path

const endpoint = match[1];

const serial = match[2];

// BACKEND (a SEM)

if(endpoint === 'backend')

{

logMessage('device ' + serial + ' available');

// message from the relay

unsubscribe = relay.on('to:' +serial, function(data) {

var msg = Buffer.from(data.msg, 'base64').toString('utf8'); // new Buffer() is deprecated

//logMessage('message for '+serial+': '+data.message);

socket.send(msg);

});

}

// FRONTEND (an app)

else if(endpoint === 'frontend')

{

logMessage('client looking for ' + serial +' arrived');

// message from the relay

unsubscribe = relay.on('from:' +serial, function(data) {

var msg = Buffer.from(data.msg, 'base64').toString('utf8'); // new Buffer() is deprecated

//logMessage('message from '+serial+': '+data.message);

socket.send(msg);

});

}

// unknown (auth should not let this happen)

else

{

logMessage("rejecting unknown path");

socket.close();

return;

}

// message from the web sockets

socket.on('message', function(message,flags) {

var msg64 = Buffer.from(message).toString('base64'); // new Buffer() is deprecated

if(endpoint === 'backend') {

//logMessage('from '+serial+': '+message);

relay.emit('from:'+serial, { msg: msg64 } );

} else { // frontend

//logMessage('to '+serial+': '+message);

relay.emit('to:' +serial, { msg: msg64 });

}

});

// handle closes properly

socket.on('close', function(message,flags) {

if(unsubscribe)

unsubscribe();

if(endpoint === 'backend') {

logMessage('device ' + serial + ' left');

} else { // frontend

logMessage('client looking for ' + serial +' gone');

}

});

});

/// handle default location if someone calls that

app.get('/', function (req, res) {

res.send(

'This is the relay service for SEMS. This is an API and no website.<br>'+

'Please visit <a href="https://sems.energy" '+

'target="_blank">https://sems.energy</a> for more information');

});

/// start http server

http.listen( nconf.get('PORT') , function () {

logMessage('starting sems relay service on port '+ nconf.get('PORT') );

});

/// shutdown detection for graceful shutdown

process.on( 'SIGINT', function() {

logMessage( "\nGracefully shutting down from SIGINT (Ctrl-C)" );

relay.quit();

process.exit();

})
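Two details of the relay are easy to try in isolation: how a web socket path is mapped to a client type and a device serial, and how a message is wrapped in base64 before it is handed to Redis. The sketch below reuses the path pattern and the `{ msg: ... }` envelope from the listing; the helper names `parseRelayPath`, `wrap` and `unwrap` and the serial `SEM123` are assumptions made for illustration.

```javascript
// Same pattern as the relay uses for web socket paths.
const relayPath = /^\/api\/v1\/relay\/(frontend|backend)\/([a-zA-Z0-9]+)$/;

// Returns { endpoint, serial } for a valid path, or null otherwise.
function parseRelayPath(path) {
  const match = relayPath.exec(path);
  return match ? { endpoint: match[1], serial: match[2] } : null;
}

// Messages travel through the relay as { msg: <base64 string> }.
function wrap(message) {
  return { msg: Buffer.from(message).toString('base64') };
}

function unwrap(data) {
  return Buffer.from(data.msg, 'base64').toString('utf8');
}

const route = parseRelayPath('/api/v1/relay/frontend/SEM123');
const rejected = parseRelayPath('/api/v1/relay/admin/SEM123');
const roundTrip = unwrap(wrap('{"cmd":"status"}'));

console.log(route, rejected, roundTrip);
```

Note that the pattern is anchored at both ends. Since the `path` property returned by `url.parse` includes the query string, a client that appends query parameters to the URL would not match.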

DevOps with Build and Release Management

Since LEVION wanted to automate build and release management of the app, they used Visual Studio Team Services for this task.

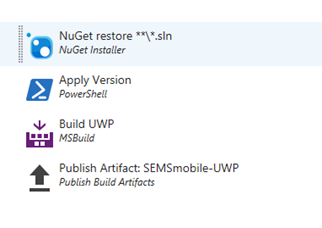

The build definition for the UWP app consists of the following steps.

This build definition is based on the UWP template from the suggested build definitions, with a couple of changes.

In the NuGet Restore step it was important to select NuGet version 3.5.0.

The Apply Version step consists of a PowerShell script that makes sure the UWP package version corresponds to the running build. This is what the script looks like:

#Based on https://www.visualstudio.com/docs/build/scripts/index

# Enable -Verbose option

[CmdletBinding()]

param()

$VersionRegex = "\d+\.\d+\.\d+\.\d+"

$ManifestVersionRegex = " Version=""\d+\.\d+\.\d+\.\d+"""

if (-not $Env:BUILD_BUILDNUMBER)

{

Write-Error ("BUILD_BUILDNUMBER environment variable is missing.")

exit 1

}

Write-Verbose "BUILD_BUILDNUMBER: $Env:BUILD_BUILDNUMBER"

$ScriptPath = $null

try

{

$ScriptPath = (Get-Variable MyInvocation).Value.MyCommand.Path

$ScriptDir = Split-Path -Parent $ScriptPath

}

catch {}

if (!$ScriptPath)

{

Write-Error "Current path not found!"

exit 1

}

# Get and validate the version data

$VersionData = [regex]::matches($Env:BUILD_BUILDNUMBER,$VersionRegex)

switch($VersionData.Count)

{

0

{

Write-Error "Could not find version number data in BUILD_BUILDNUMBER."

exit 1

}

1 {}

default

{

Write-Warning "Found more than one instance of version data in BUILD_BUILDNUMBER."

Write-Warning "Will assume first instance is version."

}

}

$NewVersion = $VersionData[0]

Write-Verbose "Version: $NewVersion"

$AssemblyVersion = $NewVersion

$ManifestVersion = " Version=""$NewVersion"""

Write-Host "Version: $AssemblyVersion"

Write-Host "Manifest: $ManifestVersion"

Write-Host "ScriptDir: " $ScriptDir

# Apply the version to the assembly property files

$assemblyInfoFiles = gci $ScriptDir -recurse -include "*Properties*","My Project" |

?{ $_.PSIsContainer } |

foreach { gci -Path $_.FullName -Recurse -include AssemblyInfo.* }

if($assemblyInfoFiles)

{

Write-Host "Will apply $AssemblyVersion to $($assemblyInfoFiles.count) Assembly Info Files."

foreach ($file in $assemblyInfoFiles) {

$filecontent = Get-Content($file)

attrib $file -r

$filecontent -replace $VersionRegex, $AssemblyVersion | Out-File $file utf8

Write-Host "$($file.FullName) - version applied"

}

}

else

{

Write-Warning "No Assembly Info Files found."

}

# Try Manifests

$manifestFiles = gci .\ -recurse -include "Package.appxmanifest"

if($manifestFiles)

{

Write-Host "Will apply $ManifestVersion to $($manifestFiles.count) Manifests."

foreach ($file in $manifestFiles) {

$filecontent = Get-Content($file)

attrib $file -r

$filecontent -replace $ManifestVersionRegex, $ManifestVersion | Out-File $file utf8

Write-Host "$($file.FullName) - version applied to Manifest"

}

}

else

{

Write-Warning "No Manifest files found."

}

Write-Host ("##vso[task.setvariable variable=AppxVersion;]$NewVersion")
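The manifest pattern in the script starts with a space, which is what keeps attributes such as `MinVersion` untouched while the package version is rewritten. The following sketch reproduces that replacement in JavaScript so it can be tried outside a build. The manifest fragment is a hypothetical, heavily shortened example, and `applyManifestVersion` is an illustrative name. Unlike the PowerShell `-replace` operator, the JavaScript pattern here is case-sensitive.

```javascript
// Mirrors $ManifestVersionRegex from the script; note the leading space.
const manifestVersionRegex = / Version="\d+\.\d+\.\d+\.\d+"/g;

// Replaces every standalone Version="a.b.c.d" attribute with the new version.
function applyManifestVersion(manifestXml, newVersion) {
  return manifestXml.replace(manifestVersionRegex, ` Version="${newVersion}"`);
}

// Hypothetical, heavily shortened manifest fragment.
const manifest = [
  '<Identity Name="Example.App" Publisher="CN=Example" Version="1.0.0.0" />',
  '<TargetDeviceFamily Name="Windows.Universal" MinVersion="10.0.10240.0" />',
].join('\n');

const updated = applyManifestVersion(manifest, '1.2.3.4');
console.log(updated);
```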

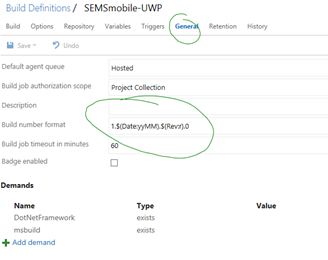

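For this script to run without problems, the build number format must be changed so that it contains a four-part version number, because the script searches the build number for the pattern \d+\.\d+\.\d+\.\d+. The setting can be found on the General tab of the build definition edit screen: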

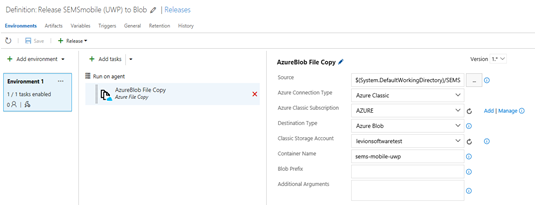
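Why the build number format matters can be checked with the same pattern the script uses. In the sketch below, both build numbers are made-up examples and `extractVersion` is an illustrative helper, not part of the build tooling.

```javascript
// Same pattern as $VersionRegex in the script.
const versionRegex = /\d+\.\d+\.\d+\.\d+/g;

// Returns the first four-part version found in the build number, or null.
function extractVersion(buildNumber) {
  const matches = buildNumber.match(versionRegex);
  return matches ? matches[0] : null;
}

const ok = extractVersion('SEMS-App_1.0.17045.3'); // hypothetical build number
const bad = extractVersion('20170214.3');          // date-and-revision style

console.log(ok, bad);
```

The first build number yields `1.0.17045.3`. The second contains only two numeric parts, so nothing matches, which corresponds to the case where the script stops with "Could not find version number data in BUILD_BUILDNUMBER."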

After the build package has been created, it is taken from the drop location and copied to Azure Blob storage in a release definition:

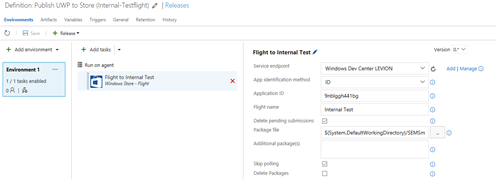

The following release definition shows how the package is submitted to the store in a specific package flight.

Conclusion

Initially the team was not convinced that Xamarin would be the right solution for their mobile application. They wanted to begin with the UWP version only and outsource native development of the Android and iOS versions. After working with the framework, however, they were so convinced of Xamarin's code-sharing benefits that they will now build the Android and iOS versions with Xamarin as well. Effectively, this means they only have to work on the respective UIs for Android and iOS, since the app logic is completely encapsulated in the shared codebase of several Portable Class Libraries (PCLs). The description above highlights some of their solutions for this app. As discussed, their shared logic in the PCLs worked on all platforms without problems.