From the perspective of a WCF developer, the interaction between WCF and ASP.NET can be a black box. But to understand the performance of a system, it is necessary to know how all the components interact. This post covers how threads work when a request is sent to a WCF service hosted in IIS.

This started with the IO thread pool bug I covered earlier. The scenario is that you have a service that performs long-running work and blocks a thread, which can be easily simulated with a Thread.Sleep.

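    using System;
    using System.ServiceModel;
    using System.Threading;

    [ServiceContract]
    public interface IService1
    {
        [OperationContract]
        string GetData(int value);
    }

    public class Service1 : IService1
    {
        public string GetData(int value)
        {
            // Simulate long-running, non-CPU-bound work by blocking the calling thread.
            Thread.Sleep(TimeSpan.FromSeconds(2));
            return string.Format("You entered: {0}", value);
        }
    }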

.NET 3.5

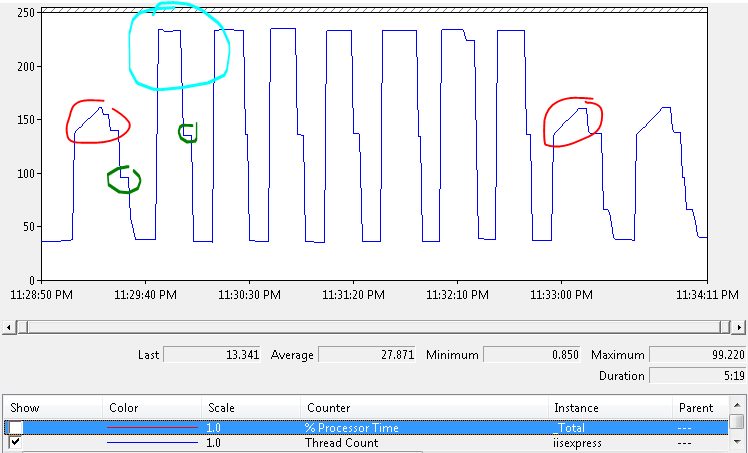

For this test, I hosted the service in IIS and compiled against .NET 3.5. The test client sends 100 simultaneous requests to GetData. To handle that many requests, I set both the minimum worker threads and the minimum IO threads to 100 in <processModel>. The graph below was captured in Performance Monitor, where I watched the thread count of the service process:
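The thread minimums above were set in configuration. As a rough in-process illustration of the same idea, the sketch below raises the pool minimums with ThreadPool.SetMinThreads and then fires a burst of blocking work items. The BurstDemo class and its method names are hypothetical, the burst is simulated with queued work items rather than real HTTP requests, and this is not the harness used for the measurements in this post.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper for illustration; not the original test harness.
public static class BurstDemo
{
    // Ask the CLR to keep at least `min` worker threads and `min` IO
    // completion threads available without the usual ramp-up delay.
    // Never lowers a minimum that is already higher.
    public static bool RaiseMinThreads(int min)
    {
        int worker, io;
        ThreadPool.GetMinThreads(out worker, out io);
        return ThreadPool.SetMinThreads(Math.Max(worker, min), Math.Max(io, min));
    }

    // Queue `count` work items that each block for `blockMs` milliseconds,
    // wait for all of them, and return the elapsed wall-clock milliseconds.
    public static long RunBurst(int count, int blockMs)
    {
        if (count <= 0) return 0;
        int remaining = count;
        using (var done = new ManualResetEvent(false))
        {
            var watch = Stopwatch.StartNew();
            for (int i = 0; i < count; i++)
            {
                ThreadPool.QueueUserWorkItem(_ =>
                {
                    Thread.Sleep(blockMs); // stands in for the blocking service call
                    if (Interlocked.Decrement(ref remaining) == 0) done.Set();
                });
            }
            done.WaitOne();
            return watch.ElapsedMilliseconds;
        }
    }
}
```

Calling BurstDemo.RaiseMinThreads(100) before BurstDemo.RunBurst(100, 2000) should let the burst finish in roughly one blocking interval. Without the raised minimum, the pool is expected to add threads gradually and the burst takes noticeably longer.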

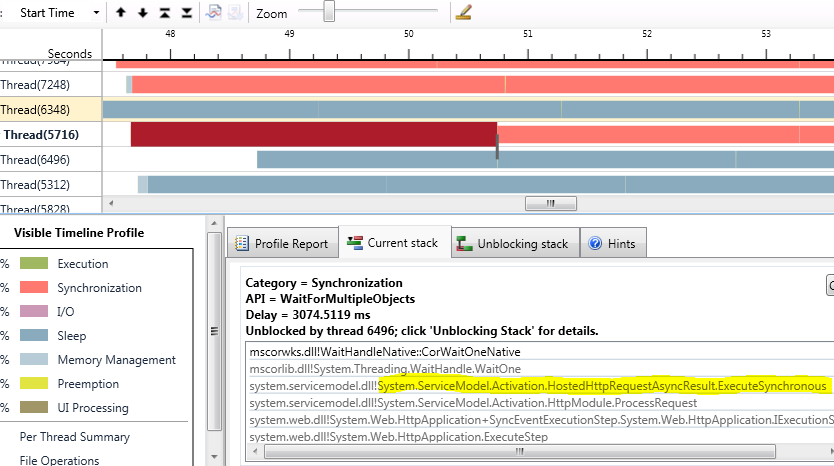

The test harness sends 100 concurrent requests to the middle tier. Each request takes 2 seconds to complete. Since the WCF service is synchronous, an IO thread is blocked for those 2 seconds, so handling 100 requests at the same time requires 100 free threads in the IO thread pool. Circled in light blue above, you can see that 200 threads are created as the burst of requests comes in. Even stranger are the parts highlighted in red. Here 100 threads are created at once, then the thread count slowly increases. During these periods, it takes a lot longer to handle all of the requests (at least 10 seconds). To understand this, we can view the process in the concurrency analyzer:

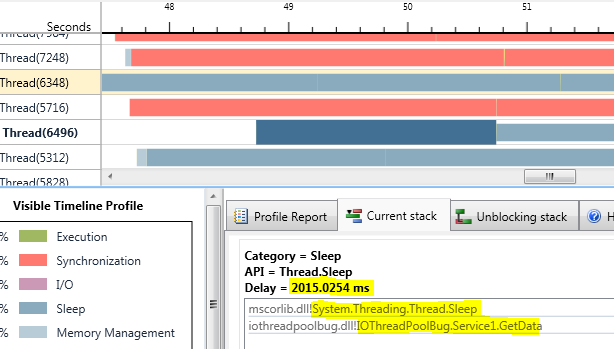

Here you can see that thread 5716 is blocked. The block comes from the highlighted method, System.ServiceModel.Activation.HostedHttpRequestAsyncResult.ExecuteSynchronous. This is a thread from the worker thread pool being used by IIS. The HostedHttpRequestAsyncResult class transfers work from ASP.NET to WCF and then waits for the WCF call to finish. We can look at thread 6496 to see this more clearly:

Highlighted above you can see the call to Service1.GetData. You can also see the call to Thread.Sleep inside and that the thread sleeps for 2 seconds. This thread is from the IO thread pool. You may also have noticed that the ASP.NET thread (5716) is blocked for 3 seconds, not 2. This is because the request had to wait for an IO thread to become available in order to service the request. An IO thread can become available either by another request finishing or by the gate thread adding a new thread to the pool. As mentioned in previous blog posts, it only adds threads to the pool on a 500ms interval.
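To get a feel for how a 500ms injection interval stretches out a burst, here is a deliberately simplified model. It assumes all requests arrive at once, every request holds a thread for a fixed time, and exactly one new thread is added per interval while a request is still waiting. The real thread pool heuristics are more involved, so the GateThreadModel class (a hypothetical name) is only a back-of-the-envelope sketch, not a reproduction of the numbers in the graph.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified model of thread injection; not the CLR's actual algorithm.
public static class GateThreadModel
{
    // Returns the time in milliseconds at which the last request completes.
    //   requests       - requests that all arrive at time 0
    //   initialThreads - threads available immediately
    //   workMs         - how long each request blocks its thread
    //   injectMs       - interval at which one extra thread is added
    public static int TimeToDrain(int requests, int initialThreads, int workMs, int injectMs)
    {
        // freeAt[i] is the time at which thread i can take another request.
        var freeAt = new List<int>();
        for (int i = 0; i < initialThreads; i++) freeAt.Add(0);

        int nextInject = injectMs; // time of the next possible injection
        int lastFinish = 0;

        for (int r = 0; r < requests; r++)
        {
            // Find the existing thread that becomes free first.
            int best = -1;
            for (int i = 0; i < freeAt.Count; i++)
                if (best < 0 || freeAt[i] < freeAt[best]) best = i;

            int start;
            if (best >= 0 && freeAt[best] <= nextInject)
            {
                // An existing thread frees up before the next injection: reuse it.
                start = freeAt[best];
                freeAt[best] = start + workMs;
            }
            else
            {
                // The request is still waiting when the interval elapses,
                // so a new thread is added and picks it up.
                start = nextInject;
                freeAt.Add(start + workMs);
                nextInject += injectMs;
            }
            lastFinish = Math.Max(lastFinish, start + workMs);
        }
        return lastFinish;
    }
}
```

In this model, a pool that already has one thread per request drains the burst in a single work interval, while a pool that starts short has to wait for either a finished request or the next injection tick, which is the extra second of blocking visible on the ASP.NET thread.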

There are a few important things to note. The HostedHttpRequestAsyncResult class shown above is invoked from System.ServiceModel.Activation.HttpModule.ProcessRequest. The HttpModule class is shown in Wenlong's blog as the synchronous handler for WCF requests. As he explains, this handler blocks the ASP.NET thread while waiting for the WCF request to finish. This is why 200 threads are created instead of only 100. ASP.NET uses the worker thread pool to handle requests. WCF uses the IO thread pool. One thread is needed from each to handle the request.
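The two-threads-per-request effect can be sketched without WCF at all. In the example below, the calling thread plays the role of the ASP.NET worker thread that waits synchronously, and a second thread plays the role of the thread that runs the service operation. The HandoffDemo class is hypothetical and uses a dedicated thread rather than the real worker and IO pools, so it only illustrates the shape of the handoff.

```csharp
using System;
using System.Threading;

// Hypothetical illustration of a blocking handoff; not WCF's implementation.
public static class HandoffDemo
{
    // Simulates one request and returns { callerThreadId, serviceThreadId }.
    public static int[] HandleRequest(int workMs)
    {
        int callerId = Thread.CurrentThread.ManagedThreadId;
        int serviceId = 0;

        using (var finished = new ManualResetEvent(false))
        {
            // Stand-in for the thread that executes the service operation.
            var serviceThread = new Thread(() =>
            {
                serviceId = Thread.CurrentThread.ManagedThreadId;
                Thread.Sleep(workMs); // the blocking service call
                finished.Set();
            });
            serviceThread.Start();

            // The caller does nothing useful here. It is simply blocked until
            // the service thread signals, like the synchronous handler.
            finished.WaitOne();
            serviceThread.Join();
        }
        return new[] { callerId, serviceId };
    }
}
```

Each call ties up two distinct threads for the full duration of the work, which is why a burst of 100 requests shows up as 200 threads in the graph.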

Another important point comes from the performance monitor graph above. Circled in red, there are cases where 100 threads are created quickly and then the thread count slowly goes up. The profile above was taken during one of those periods. The cause is the IO thread pool bug discussed earlier. The worker thread pool obeys the min threads setting, but sometimes the IO thread pool does not. As a result, ASP.NET can accept all the requests at once, but WCF is stuck waiting for IO threads to become available.

You can also see areas that are circled in green. This is further proof of the difference between IO and worker thread pools. IO threads will be cleaned up after a shorter period of time than the worker threads. That's why you can see a sudden drop in thread count and then a leveling off followed by another drop. In case you're wondering why the threads are cleaned up, Jeff Richter talked about how expensive threads are on AppFabric.tv recently.

The problem with blocking an ASP.NET thread while waiting for a response from a WCF service is that even if you write your WCF code with the async pattern, you will still be blocking one thread. That will limit your service's ability to handle requests in a timely manner.

.NET 4.0

When we switch to .NET 4, things are a little different. The ServiceModel HTTP module no longer blocks a thread waiting for a response from WCF. You can get the same result in .NET 3.5 by switching from the synchronous HTTP handler to the asynchronous handler. This is covered in Wenlong's blog as well (linked above).

Without changing any code, the service is switched from .NET 3.5 to .NET 4.0 and shows the following behavior with the 100 request burst (circled in red).

The thread count increases by only 100 because the service is still performing synchronous work with the Thread.Sleep. The subsequent bursts do not show the quick scale-up of threads because of the IO thread pool bug (linked earlier). But at least now we are assured that if we use the async pattern in the WCF service, we will no longer be blocking any threads. In that case, the maxConcurrentCalls throttle will most likely be the threshold for how many simultaneous calls the service can handle.
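For reference, here is a minimal sketch of what a non-blocking version of GetData could look like using the Begin/End form of the async pattern. In a WCF contract, the Begin method would carry [OperationContract(AsyncPattern = true)]; the attributes are left out here so the example compiles without a reference to System.ServiceModel. The AsyncService1 class, its configurable delay, and the timer-based wait are assumptions made for illustration and are not code from the test service.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical async counterpart to Service1; not code from the original test.
public class AsyncService1
{
    private readonly int delayMs;

    public AsyncService1(int delayMs)
    {
        this.delayMs = delayMs;
    }

    // Starts the "work" and returns immediately. No thread is blocked while
    // the timer is pending.
    public IAsyncResult BeginGetData(int value, AsyncCallback callback, object state)
    {
        var tcs = new TaskCompletionSource<string>(state);
        Timer timer = null;
        timer = new Timer(_ =>
        {
            timer.Dispose();
            tcs.SetResult(string.Format("You entered: {0}", value));
            if (callback != null) callback(tcs.Task);
        }, null, Timeout.Infinite, Timeout.Infinite);

        // Arm the timer only after the variable is assigned, so the callback
        // can safely dispose it.
        timer.Change(delayMs, Timeout.Infinite);
        return tcs.Task; // Task implements IAsyncResult
    }

    // Called once the operation has completed; returns the result.
    public string EndGetData(IAsyncResult result)
    {
        return ((Task<string>)result).Result;
    }
}
```

With this shape, 100 pending calls are 100 pending timers rather than 100 sleeping threads, so the number of calls in flight is bounded by throttles such as maxConcurrentCalls instead of by how quickly the thread pool grows.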

Summary

When you have a service that performs work that is not CPU-bound and takes a long time, you have to be aware of your thread usage. Thread pools meter how many threads they make available and that can put a speed limit on your service. By understanding how ASP.NET and WCF work with threads you can see how to keep from blocking threads. This makes the WCF throttling settings much more effective and simplifies your performance tuning.

Comments

Anonymous

June 14, 2011

Interesting Finds: June 15, 2011

Anonymous

June 24, 2011

Dustin Metzgar recently posted an entry centered around understanding how ASP .NET and WCF work in order

Anonymous

June 27, 2012

What is the name of the graphical tool you used?

Anonymous

June 28, 2012

@Vit - The tool is built in to Visual Studio 2010. It's not in all the SKUs, so I'm not sure if it's in Professional. Under the Analyze menu you should see an option to start the performance wizard. You would need to collect a concurrency profile to get this visualization tool.