Mixing Natural Language into Games

[I had intended to write up a small post about my project earlier, but didn't get the chance until now. Hopefully, you'll find at least part of it interesting. -Gary]

Being in the Natural Language Processing group here in Microsoft Research, and being someone who enjoys creating and playing games, one of my interests is to explore the intersection between these two areas.

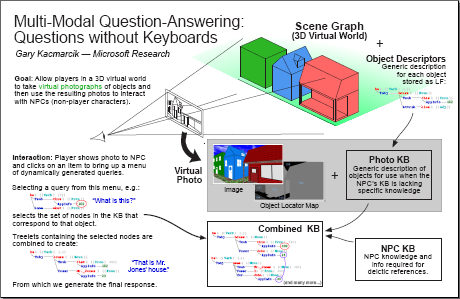

I started by experimenting with attaching semantic annotations to virtual photographs - photos taken by the player within the virtual world. The player could take a photograph and then bring the photo to an NPC in the world and have a (somewhat stunted) "conversation" about the contents of the photo. A key aspect of this work is that all of the interactions are dynamically generated at runtime - there is no scripted dialog. A basic description and overview of this work is given in "Multi-Modal Question-Answering: Questions without Keyboards" [IJCNLP 2005].

One of the problems that I encountered with this project was the high cost of creating 3D assets (where's the Content Pipeline when you need it?), so I converted to a more conventional tile-based isometric role-playing game. I still needed to create art assets, but found that it was much easier to create 2D sprites than to create models, textures and the other bits required for a decent-looking 3D world. This allowed me to spend less time on the art (which is fun, but not the key point of the game) and more on the dialog and NPC-interaction aspects of the game.

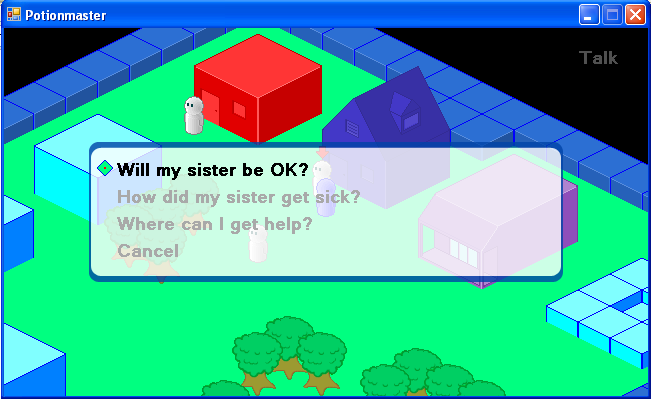

Here is a screenshot from a game demo (called "PotionMaster") showing some of the dynamically generated dialog elements (apologies for the "programmer art" ^_^):

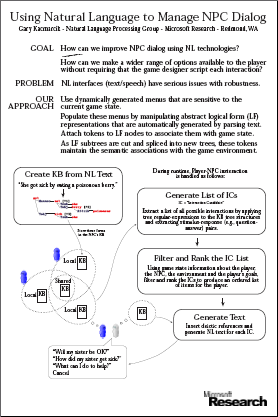

More details about this game are given in "Using Natural Language to Manage NPC Dialog" [AIIDE 2006] and (in an earlier form) in "Question-Answering in Role-Playing Games" [AAAI 2005].

Unfortunately, I cannot release this game in its complete form. It relies on some internal-only NLP tools during runtime (to dynamically generate the NPC responses from the abstract semantic representations), and those components cannot be released (not even in binary form).

To work around this limitation, I've defined an interface between the core game engine and our dialog engine. This way I can release the game engine and keep our proprietary dialog system in-house. It may seem like there is no point in releasing only half of a game, but the purpose is to let other NL researchers plug in their own dialog systems and run situated evaluations without having to create an entire game from scratch. I've also created a very simple scripted dialog plug-in that takes the place of our internal backend (so the game will be playable).
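
To make the engine/dialog split a little more concrete, here is a minimal sketch of what such a plug-in boundary could look like. It is not the actual PotionMaster interface: the names `DialogRequest`, `DialogBackend`, `ScriptedDialogBackend` and `GameEngine` are all hypothetical, and Python is used purely for illustration. The idea is only that the engine talks to an abstract backend, and a simple lookup-table backend can stand in for a full NL system.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class DialogRequest:
    """What the engine hands to a dialog backend (hypothetical shape)."""
    npc_name: str
    player_input: str
    world_facts: dict = field(default_factory=dict)


class DialogBackend(ABC):
    """Hypothetical boundary between the game engine and any dialog system."""

    @abstractmethod
    def respond(self, request: DialogRequest) -> str:
        """Return the NPC's reply for the given request."""


class ScriptedDialogBackend(DialogBackend):
    """Stand-in backend: replies come from a fixed lookup table."""

    def __init__(self, script, fallback="I don't know about that."):
        self._script = script
        self._fallback = fallback

    def respond(self, request: DialogRequest) -> str:
        # Normalize the input so lookups ignore case and stray whitespace.
        key = (request.npc_name, request.player_input.strip().lower())
        return self._script.get(key, self._fallback)


class GameEngine:
    """Toy engine that only knows about the abstract backend."""

    def __init__(self, backend: DialogBackend):
        self._backend = backend

    def talk_to(self, npc_name: str, player_input: str) -> str:
        return self._backend.respond(DialogRequest(npc_name, player_input))


# Example wiring with made-up dialog content.
script = {("Alchemist", "what is this potion?"): "That is a healing potion."}
engine = GameEngine(ScriptedDialogBackend(script))
reply = engine.talk_to("Alchemist", "What is this potion?")
unknown = engine.talk_to("Alchemist", "Where is the key?")
```

Under this sketch, a researcher would subclass `DialogBackend` with their own system and pass it to the engine in place of the scripted one; the engine code itself would not need to change.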

So, that's the project in a nutshell. When XNA was released, I expanded the scope slightly so that the game code could be used as a tutorial for creating isometric games. The XNAExtras that you've seen so far are the first of these releases.

[Update: All of my papers (including those mentioned above) are listed on my research homepage.]