Preparing and uploading datasets for HDInsight

In the previous post https://blogs.msdn.com/b/hpctrekker/archive/2013/03/30/finding-and-pre-processing-datasets-for-use-with-hdinsight.aspx we went over how to get the English-only documents from the Gutenberg DVD. We showed you the Cygwin Unix emulation environment and some simple Python code. That script took about 15 minutes to run. Asynchronous copying or some simple task scheduling would probably have saved us some time in copying about 25,000 files. In this post we continue to explore Unix utilities that are useful for data processing.

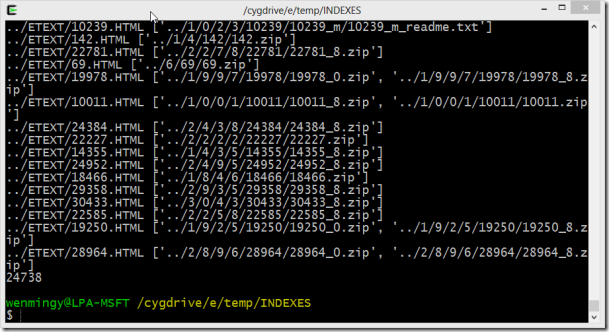

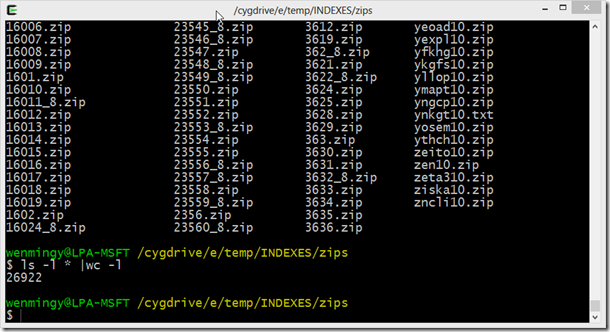

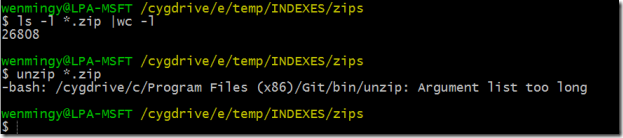

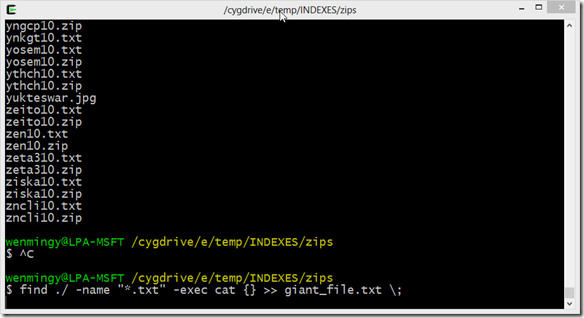

After the script finished running, we went into the zips directory and counted the total number of files.
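If you would rather do the count from Python than from the shell, here is a minimal sketch. The function name count_by_extension is our own, and it only looks at files directly inside the directory, not in subdirectories.

```python
from collections import Counter
from pathlib import Path


def count_by_extension(directory):
    """Count regular files directly inside `directory`, grouped by lowercase suffix."""
    counts = Counter()
    for entry in Path(directory).iterdir():
        if entry.is_file():
            # Files without an extension are counted under the empty string.
            counts[entry.suffix.lower()] += 1
    return counts
```

Calling count_by_extension(".") from inside the zips directory returns a Counter, so sum(counts.values()) gives the total and counts[".zip"] gives the number of zip archives.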

Some of these files are zip archives and some are plain text files. We could simply upload them to HDInsight as they are, but in general it is a bad idea to send lots of small files for Hadoop to process. A better approach is to combine all the text files into a smaller set of larger files. To do this, we first need to unzip the archives, and then combine the text into similarly sized chunks of about 250 MB each. Block sizes of 64 MB to 256 MB are common for Hadoop file systems.

Installing and using GNU Parallel

As we saw earlier, it was painfully slow to process 25,000 files serially. Parallelism might help when it comes to unzipping the files, as long as the extraction process is CPU bound. GNU Parallel is a set of Perl scripts that can help you do simple task-based parallelism. Download it from: ftp://ftp.gnu.org/gnu/parallel/ You might want to get a more recent version, from 2013.

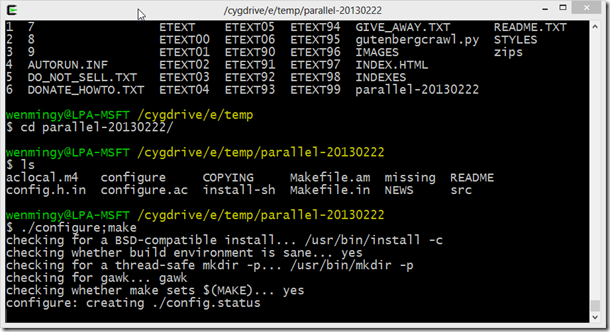

Building GNU Parallel works like building any other Unix source package: you unpack it, run configure, and then run make. Please make sure these utilities are installed under Cygwin. If they are not, you can simply re-run setup.exe from https://cygwin.com/ . You should select “make” and “wget” as well. Unfortunately, GNU Parallel is not part of the Cygwin pre-built packages.

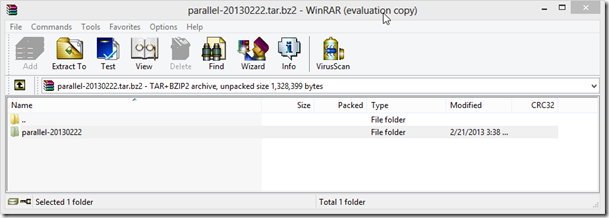

Open the GNU Parallel archive with WinRAR, select the directory, press Ctrl+C to copy it, and paste it into a temporary location, in this case e:\temp

In the Cygwin shell, navigate to the parallel package directory, and type ./configure;make

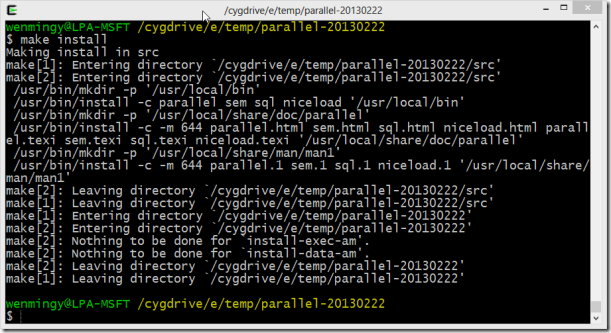

Finally, run make install. By default, most Unix source packages install everything under /usr/local/

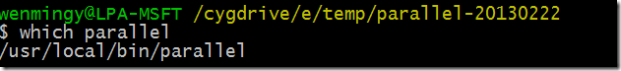

You can verify this by typing which parallel; it will show you that parallel is in /usr/local/bin (the directory for binaries).

When we have a large number of files (26,808 in total), unzip *.zip does not work properly: the shell expands the wildcard into thousands of arguments, and unzip treats only the first one as the archive and the rest as names of files to extract from it.

Instead, we can list the files and pipe the list to GNU Parallel. But first, let’s run chmod 555 *.zip to make sure unzip can read the zip files.

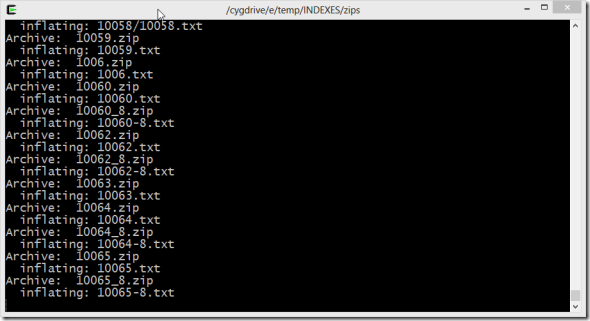

Then run the command: ls *.zip | parallel unzip

We’ll have other chances to use parallel for processing files in the future. By default, GNU Parallel spawns as many processes as the CPU has cores, in this case 4 parallel processes or tasks. How much GNU Parallel speeds up the processing depends on the actual workload: if the work is disk bound, multiple processes won’t help, but if it is CPU bound, they will. The best way to find out is to run a benchmark test with and without GNU Parallel. You can find more examples here: https://en.wikipedia.org/wiki/GNU_parallel
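To make the "benchmark with and without" idea concrete, here is a rough Python sketch using only the standard library. It is not what GNU Parallel does internally: it uses a thread pool inside one process instead of separate unzip processes, and the names extract_one, extract_all and timed are our own. Treat it as an illustration of the comparison, not a replacement for the command above.

```python
import os
import time
import zipfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def extract_one(zip_path, dest_dir):
    """Extract a single archive into dest_dir and return its path."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
    return zip_path


def extract_all(zip_dir, dest_dir, workers=None):
    """Extract every .zip file in zip_dir using `workers` threads.

    workers=1 is the serial baseline. workers=None falls back to the CPU
    count, similar to GNU Parallel's default of one job per core.
    """
    workers = workers or os.cpu_count() or 1
    # Create the destination up front so threads do not race to create it.
    Path(dest_dir).mkdir(parents=True, exist_ok=True)
    archives = sorted(Path(zip_dir).glob("*.zip"))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for all jobs and re-raises any extraction error.
        done = list(pool.map(lambda p: extract_one(p, dest_dir), archives))
    return len(done)


def timed(zip_dir, dest_dir, workers):
    """Return (number of archives extracted, elapsed seconds)."""
    start = time.perf_counter()
    count = extract_all(zip_dir, dest_dir, workers)
    return count, time.perf_counter() - start
```

Running timed(zip_dir, out_dir, 1) and then timed(zip_dir, out_dir, None) on the same set of archives gives the two numbers to compare. If the second is not noticeably smaller, the work is probably disk bound.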

The Unix “Find” Command

The Unix find command is extremely powerful, and you can use it in conjunction with GNU Parallel. In this step of the tutorial, we need to combine the roughly 27,000 text files we just extracted and prepare them as 250 MB blocks to upload to Windows Azure Blob Storage. Some of these text files are in the root directory and some are in subdirectories. The easiest way is to combine all the text files into one giant file, and then split it up. With “find”, we can accomplish this with a single command:

find ./ -name "*.txt" -exec cat {} >> giant_file.txt \;

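Note that {} is a placeholder that find replaces with the path of each matching file, \; marks the end of the -exec command, and >> means “append”. The shell applies the redirection to the whole find command, so the output of every cat ends up in giant_file.txt. Because giant_file.txt itself matches *.txt, cat may complain that its input file is its output file; writing the output somewhere outside the search tree avoids this.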
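The same concatenation can be sketched in Python, which makes the "skip the output file" step explicit. The function name concatenate_text_files is our own, and this is just one way to do it. Files are copied in binary mode so mixed text encodings pass through untouched.

```python
import shutil
from pathlib import Path


def concatenate_text_files(root, output_path, pattern="*.txt"):
    """Append every file matching `pattern` under `root` to `output_path`.

    Returns the number of files appended. The output file itself is skipped,
    so it may live inside `root` without being read back into itself.
    """
    root = Path(root)
    output_path = Path(output_path).resolve()
    appended = 0
    with open(output_path, "ab") as out:  # "ab" appends, like >> in the shell
        for path in sorted(root.rglob(pattern)):
            if not path.is_file() or path.resolve() == output_path:
                continue
            with open(path, "rb") as src:
                shutil.copyfileobj(src, out)
            appended += 1
    return appended
```

For example, concatenate_text_files(".", "giant_file.txt") run from the zips directory walks all subdirectories, much like the find command does.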

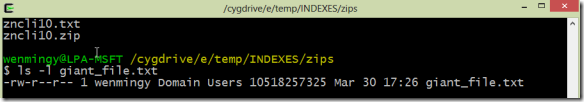

The text file is about 10GB in size.

The Unix Split Command

We often need to split a large file into smaller chunks, and split is a very useful command for this. You can split by number of lines, by size, or simply by total number of chunks. In our case, we need to split the 10 GB file into 40 files to get approximately 250 MB each.

Run mkdir split_files; cd split_files to create a directory that keeps the split files separate, and cd into it.

In the split_files directory, type split -n 40 ../giant_file.txt to start the process (use ./giant_file.txt instead if you moved the giant file into split_files).

A list of files with auto-generated file names appears in the split_files directory. The next step is to upload these files to Blob Storage.
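For comparison, here is a small Python sketch of the same idea: work out how many chunks a target size implies, then split on line boundaries so no line is cut in half. The names chunks_needed and split_file, and the chunk_000 style file names, are our own and differ from the names split generates. Reading line by line is simple but will be slower than split on a 10 GB file.

```python
from pathlib import Path


def chunks_needed(total_bytes, target_bytes):
    """Number of chunks of at most target_bytes needed to hold total_bytes."""
    return max(1, (total_bytes + target_bytes - 1) // target_bytes)


def split_file(source, dest_dir, num_chunks):
    """Split `source` into up to `num_chunks` files, cutting only after a newline.

    Returns the list of chunk paths that were written.
    """
    source = Path(source)
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    size_total = source.stat().st_size
    target = (size_total + num_chunks - 1) // num_chunks  # ceiling division
    written = []
    with open(source, "rb") as src:
        for index in range(num_chunks):
            chunk_path = dest_dir / f"chunk_{index:03d}"
            size = 0
            with open(chunk_path, "wb") as out:
                # The last chunk takes everything that remains.
                while index == num_chunks - 1 or size < target:
                    line = src.readline()
                    if not line:
                        break
                    out.write(line)
                    size += len(line)
            if size == 0:
                # Nothing left to write: drop the empty file and stop.
                chunk_path.unlink()
                break
            written.append(chunk_path)
    return written
```

With decimal units, chunks_needed(10 * 1000**3, 250 * 1000**2) gives 40, which matches the number we passed to split above.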

The Windows Azure AzCopy Utility

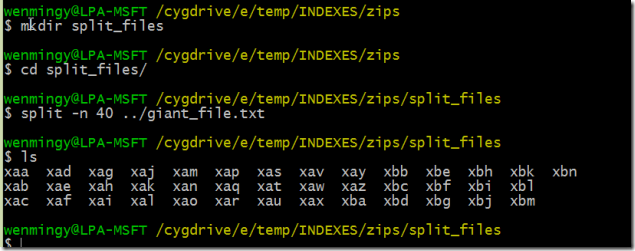

Download the Windows Azure Blob copy utility from GitHub, then copy it to c:\cygwin\usr\local\bin

The AzCopy utility can copy files to and from blob storage. You can learn more about it at: https://blogs.msdn.com/b/windowsazurestorage/archive/2012/12/03/azcopy-uploading-downloading-files-for-windows-azure-blobs.aspx Or you can get help by typing AzCopy /?

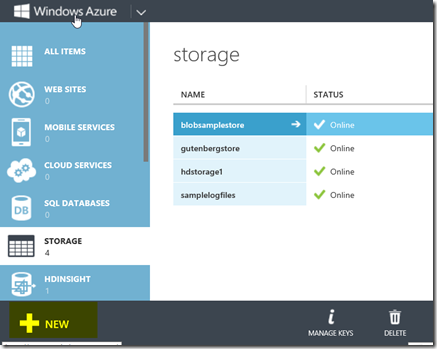

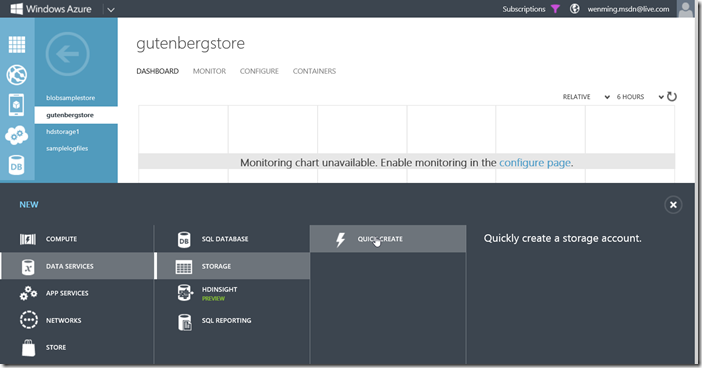

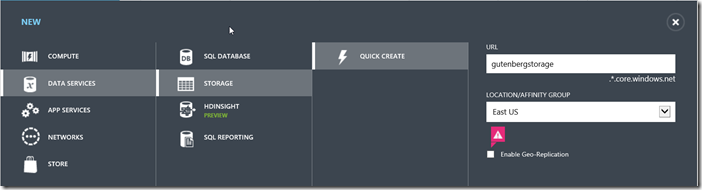

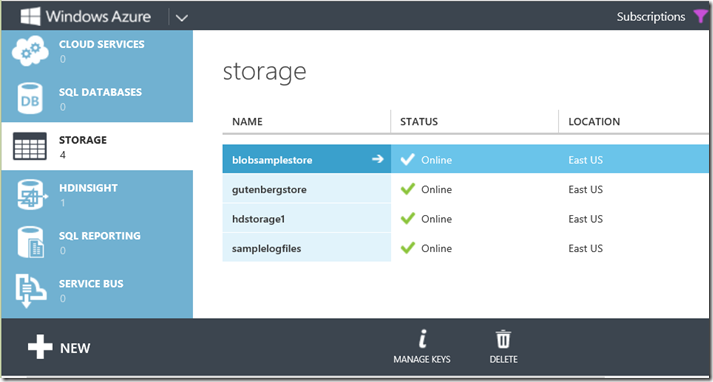

In our case, we are copying files to a blob container we created in an Azure blob storage account. To do so, you need to create a new storage account. It is recommended that you create it in the East data center, or in whichever data center your HDInsight cluster will be created in.

I recommend turning off Geo replication to keep cost low.

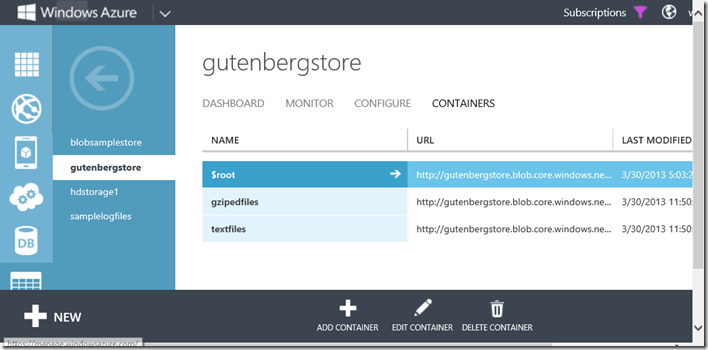

You can add or remove containers by selecting the Containers tab in the gutenbergstore storage account.

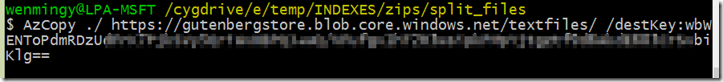

We will use the following command to upload all the files to blob storage. (Please note that you should replace yourKey in the /destKey switch with your own key.) The storage account keys are available under “Manage Keys” on the bottom toolbar.

wenmingy@LPA-MSFT /cygdrive/e/temp/INDEXES/zips/split_files

$ AzCopy ./ https://gutenbergstore.blob.core.windows.net/textfiles/ /destKey:yourKey

Note that ./ means the current directory, and the destination is the URL of the blob container.

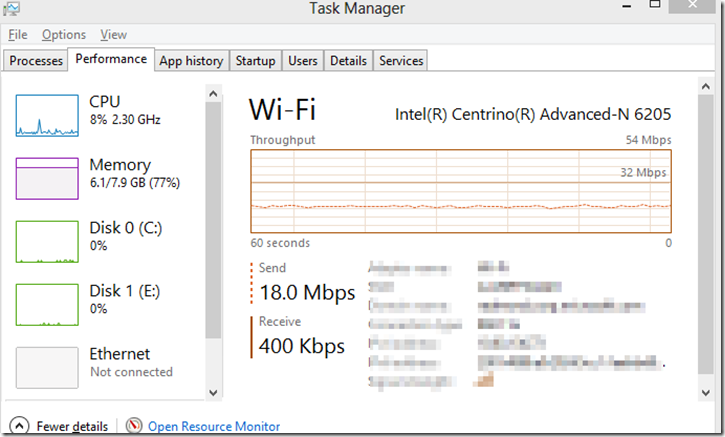

The AzCopy utility achieves excellent transfer speeds with its default configuration. We sustained 18Mbps over our Wi-Fi network. By default, AzCopy uses 8 threads per core for transfers.

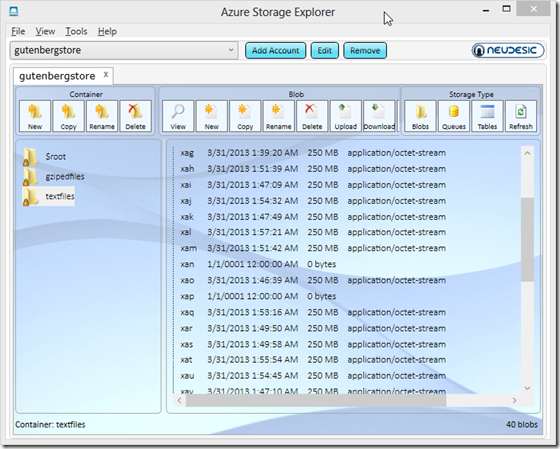

Once done, you should find the files in Blob storage by inspecting the container using Azure Storage Explorer.

Conclusion

In this part of the tutorial, we learned how to use a few useful Unix tools, including GNU Parallel, find, and split, along with the AzCopy command, to deal efficiently with large files in the Cygwin environment. It should also give you a flavor of what data scientists do on a daily basis when it comes to dealing with data files. We have not touched on how to use HDInsight to do some of these tasks in parallel. In the next post, we will cover MapReduce in detail by doing a word count on the Gutenberg files we just uploaded.