This common blocking symptom really can cause trouble if you get caught off guard.

When you process an Analysis Services object, for example a ProcessFull on a database or cube, the last phase of processing swaps out the old files and replaces them with the new files. To do that, a lock is needed at the highest level in the database. Users who are already running queries have priority until they finish.

Sometimes the users, and even the server administrator, cannot log in with Management Studio to run a new query.

An Analysis Services database is a collection of files (some XML files that point to others, and some compressed binary files) that represent the objects in the cube that you query with MDX queries. Processing is the act of refreshing those objects with a new set of data values from the relational database. It runs large relational T-SQL queries against the data sources to join and aggregate the data, then saves the compressed and aggregated data into the cube. The old copy of the AS database objects stays in place until the very end of processing. When processing is nearly done, the Commit Phase begins.

The Commit Phase needs an exclusive write lock, so users can’t be querying the objects at the moment it swaps the old version of the cube data for the new version.

Another problem is that the instance-wide Master.vmp lock is required to finish the commit from processing. This special file is a pointer to all the other database objects and their current versions, and it is critical at the point when the old database objects are swapped out for the new ones.

When the server enters phase 2 of the commit, it tries to acquire a server-level lock to update Master.vmp. If another transaction is in progress at that point, the server waits for an interval equal to the ForceCommitTimeout setting (the default is 30 seconds), then rolls back any uncommitted transactions and aborts executing queries. That server-wide lock remains in effect until the transaction completes, and it blocks any read lock request that is initiated in the meantime. When a new login or an existing user attempts to connect to the server, the connection initiates a read lock request and waits.

This tiny file is the central point of the list of databases in Analysis Services, and should never be tampered with, else your database is likely to be deleted! Look but don’t touch!

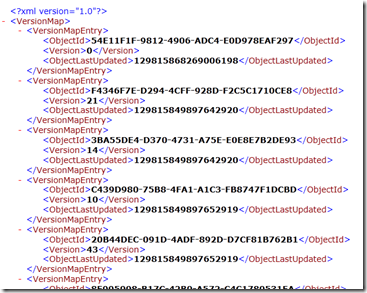

The inside of master.vmp (shown with XML formatting for clarity) lists each object, represented by a GUID value, together with its version number (an integer such as 1, 2, 3 … 43). The <version> number is incremented every time the object is processed (or synchronized) and committed by the server, and the time is updated. This is the central point of all objects in an Analysis Services instance.

So back to the story: why can’t I log in when locking happens?

Locking can be the center of the problem. Here is a visual simplification of the blocking chain that can happen that will prevent new users from getting into the database and running any query.

This is the locking pattern I saw last week: slow queries aggravated the processing commit waits, and the server became non-responsive.

The head queries in Set 1 had been running for many hours, and the Set 2 locks had been waiting for more than an hour.

Set 3 New Logins >>> Set 2 Processing Commit phase >>> Set 1 Queries

- Set 1: running queries hold database read locks (running for several hours)

- Set 2: the processing commit needs commit write locks (waiting about 1 hour or more)

- Set 3: new connections wait in line, blocked from reading the soon-to-be-committed database

Sometimes the administrator cannot even log in with Management Studio because the connection gets queued in Set 3.

When a new connection comes in from Management Studio, it typically initializes by running discover commands to read database names and object names. Those commands may get stuck in line behind the Set 2 processing commit, waiting to read the soon-to-be-committed database.

The new connections will likely run discover commands such as:

Discover on DBSCHEMA_CATALOGS

Discover on MDSCHEMA_MEMBERS

During the commit phase of the processing transaction, queries can still be sent to the object, but they will be queued until the commit is completed. For more information about locking and unlocking during processing, see https://msdn.microsoft.com/en-US/library/ms174860(v=SQL.100).aspx

What to do to fix the problem:

1. Minimize the MDX query duration. Tune the queries. The less time Set 1 takes to finish, the less conflict you will have between queries and processing.

In one example I saw, the slow query was requesting an arbitrary shape. Tune arbitrary shape queries in Set 1 to run faster, change the syntax to avoid arbitrary shapes, or configure a timeout in the application to kill long-running queries. For more on arbitrary shapes, read https://blog.kejser.org/2006/11/16/arbitrary-shapes-in-as-2005/

Add aggregations and partitions to reduce the amount of data that has to be read.

Tune any calculations that may take the formula engine a long time to work on.

Run a profiler trace to investigate the MDX query. I won’t get into the specifics, but you get the idea.

However, sometimes you cannot control the queries at all. In an ad-hoc environment where Excel pivot table users and custom MDX are allowed, you will have the occasional runaway MDX query that just takes a long time. What else can you do?

2. Avoid processing at peak hours to avoid query and processing collisions.

In one example, the Set 2 full processing happened at 11:30 AM and noon, in the middle of busy query times. There is bound to be a locking collision then, because big queries are running for the business at those hours. Avoid processing at peak times.

An aside: some folks totally avoid processing databases on the server where queries run. In other words, they scale out, doing processing on a dedicated server and connecting the users to run their read-only MDX queries on a dedicated query AS server (this can scale further to multiple query servers in tandem). To do that, you can use the Analysis Services feature called database synchronization to copy the database from server to server in an automated fashion. The same locking can apply: the users have to stop their queries before the synchronization can apply the refreshed objects, so the same kind of blocking can still happen. The synchronization best practices are discussed in this whitepaper by the SQL CAT team: https://sqlcat.com/sqlcat/b/technicalnotes/archive/2008/03/16/analysis-services-synchronization-best-practices.aspx

3. Tell the server to favor one or the other when blocking occurs. Choose a victim!

Try these two configuration settings to allow the server to attempt killing either the long queries Set 1 or the waiting processing Set 2.

- Kill the queries: Set 2 can influence the server to cancel Set 1 after a period of waiting on locks, using this setting:

  - ForceCommitTimeout is a server property that controls what happens when a processing operation is waiting to enter the commit phase. When this value is greater than zero, SSAS starts canceling prior transactions, but only after the specified number of milliseconds. However, if the read locks become available before the ForceCommitTimeout period is reached, no canceling occurs.

- Kill the processing: Set 1 can influence the server to cancel Set 2 after a period of waiting on locks, using this setting:

  - CommitTimeout: an Analysis Services processing operation needs to acquire a write lock before it can commit a transaction. To acquire a write lock, no read locks can be held by another process or query, so Analysis Services has to wait until all read locks are released. The transaction waits to acquire the write lock for the period specified by the CommitTimeout property, and then rolls back.

Sometimes the cancellation doesn’t happen immediately so even with the ForceCommitTimeout and CommitTimeout, there can be a limbo period where work is stalled.
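As a rough illustration of how the two settings interact, the sketch below is a toy Python model of the decision described above. It is not server code: the function and field names are hypothetical, it assumes both timeouts are in milliseconds, and it assumes a value of 0 disables a timeout. It also ignores the limbo period mentioned above, where cancellation is requested but has not yet taken effect.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    result: str     # "committed", "queries_cancelled" or "processing_rolled_back"
    waited_ms: int  # how long the commit waited for its write lock


def resolve_commit_wait(longest_query_remaining_ms: int,
                        force_commit_timeout_ms: int = 30_000,
                        commit_timeout_ms: int = 0) -> Outcome:
    """Toy model: which side gives way while the commit waits on read locks.

    Assumption: a timeout of 0 means that timeout never fires.
    """
    deadlines = []
    if force_commit_timeout_ms > 0:
        # ForceCommitTimeout favors processing: the queries are cancelled.
        deadlines.append((force_commit_timeout_ms, "queries_cancelled"))
    if commit_timeout_ms > 0:
        # CommitTimeout favors the queries: processing rolls back.
        deadlines.append((commit_timeout_ms, "processing_rolled_back"))

    # Whichever timeout is shorter fires first (a tie is arbitrary in this sketch).
    first = min(deadlines, key=lambda d: d[0], default=None)

    # Read locks released before any timeout fires: nothing is cancelled.
    if first is None or longest_query_remaining_ms <= first[0]:
        return Outcome("committed", longest_query_remaining_ms)
    return Outcome(first[1], first[0])


# A query with one hour left, default settings: the query is the victim.
print(resolve_commit_wait(3_600_000))
# Same query, but ForceCommitTimeout disabled and CommitTimeout set to 60 s.
print(resolve_commit_wait(3_600_000, force_commit_timeout_ms=0, commit_timeout_ms=60_000))
```

In this model, the shorter of the two timeouts decides the victim, which is the practical meaning of "favor one or the other".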

Another Variation – Multiple processing requests can block each other

If you run two or more processing batches at the same time in different transactions, a similar locking chain and deadlock may occur. This is overly simplified, but just for illustration, imagine there are 2 processing transactions that are ready near the exact same time, but get blocked waiting on a user’s long MDX query.

The locking granularity at the end of processing is quite coarse at the database level and at the master.vmp file, so it is difficult to get parallel processing to go through successfully.

For processing, start time is not as critical as end time, so aim to avoid overlap at the end of processing jobs. If two processing transactions are ready to commit at around the same time, each may hold some locks while requesting others, and that can cause a deadlock.

Adding a long-running MDX query into the mix makes a deadlock chain more likely as well, since the intermediate locks can form a cycle.

You might get this error as a Notification event in the profiler trace:

Transaction errors: Aborting transaction on session <victimsessionid>

The victim processing job will likely be cancelled with this error:

Transaction errors: While attempting to acquire one or more locks, the transaction was canceled.

Proposed Remedies for locking conflict between processing jobs

1. Schedule processing in a staggered fashion – remember that the end time matters more than the start time, because the commit phase is when the coarse-grained commit locks are needed.

Avoid peak times to avoid the locking described at the top of the blog post.

2. Combine processing into a single transaction/batch XMLA tag.

If you process objects within the scope of a single transaction, they may not collide and kill each other. You can process objects in parallel within one transaction: instead of a sequence of small commits, you get one larger commit, which reduces the window in which commit locks are taken. But remember that the single transaction takes more locks at the lower levels of granularity, so it may increase conflict with user queries.

For example, you can have multiple processing commands in a single batch.

    <Batch xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">
      <!-- Both Process commands run in parallel and commit as one transaction -->
      <Parallel>
        <Process xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:ddl2="http://schemas.microsoft.com/analysisservices/2003/engine/2" xmlns:ddl2_2="http://schemas.microsoft.com/analysisservices/2003/engine/2/2" xmlns:ddl100_100="http://schemas.microsoft.com/analysisservices/2008/engine/100/100" xmlns:ddl200="http://schemas.microsoft.com/analysisservices/2010/engine/200" xmlns:ddl200_200="http://schemas.microsoft.com/analysisservices/2010/engine/200/200" xmlns:ddl300="http://schemas.microsoft.com/analysisservices/2011/engine/300" xmlns:ddl300_300="http://schemas.microsoft.com/analysisservices/2011/engine/300/300">
          <Object>
            <DatabaseID>AdventureWorksDW2012</DatabaseID>
            <DimensionID>Dim Account</DimensionID>
          </Object>
          <Type>ProcessUpdate</Type>
          <WriteBackTableCreation>UseExisting</WriteBackTableCreation>
        </Process>
        <Process xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:ddl2="http://schemas.microsoft.com/analysisservices/2003/engine/2" xmlns:ddl2_2="http://schemas.microsoft.com/analysisservices/2003/engine/2/2" xmlns:ddl100_100="http://schemas.microsoft.com/analysisservices/2008/engine/100/100" xmlns:ddl200="http://schemas.microsoft.com/analysisservices/2010/engine/200" xmlns:ddl200_200="http://schemas.microsoft.com/analysisservices/2010/engine/200/200" xmlns:ddl300="http://schemas.microsoft.com/analysisservices/2011/engine/300" xmlns:ddl300_300="http://schemas.microsoft.com/analysisservices/2011/engine/300/300">
          <Object>
            <DatabaseID>AdventureWorksDW2012</DatabaseID>
            <DimensionID>Clustered Customers</DimensionID>
          </Object>
          <Type>ProcessFull</Type>
          <WriteBackTableCreation>UseExisting</WriteBackTableCreation>
        </Process>
      </Parallel>
    </Batch>

3. Process on one server, sync to another server. The synchronization best practices are discussed in this whitepaper by the SQL CAT team. https://sqlcat.com/sqlcat/b/technicalnotes/archive/2008/03/16/analysis-services-synchronization-best-practices.aspx
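For the staggering advice in remedy 1, a quick check of estimated end times can flag jobs whose commit phases are likely to collide. The Python sketch below is only an illustration: the job names, start times, durations, and the 10-minute margin are all hypothetical, and real durations would have to come from your own job history.

```python
from datetime import datetime, timedelta
from itertools import combinations


def commit_collisions(jobs, margin=timedelta(minutes=10)):
    """Return pairs of job names whose estimated END times fall within `margin`.

    `jobs` maps a job name to (start time, estimated duration). Start times are
    deliberately not compared: only the commit at the end needs the coarse locks.
    """
    ends = {name: start + duration for name, (start, duration) in jobs.items()}
    return [(a, b) for a, b in combinations(sorted(ends), 2)
            if abs(ends[a] - ends[b]) <= margin]


# Hypothetical nightly schedule.
jobs = {
    "sales_cube": (datetime(2013, 7, 1, 1, 0), timedelta(minutes=90)),    # ends 02:30
    "finance_cube": (datetime(2013, 7, 1, 2, 0), timedelta(minutes=35)),  # ends 02:35
    "hr_cube": (datetime(2013, 7, 1, 1, 0), timedelta(minutes=20)),       # ends 01:20
}

print(commit_collisions(jobs))  # [('finance_cube', 'sales_cube')]
```

Note that sales_cube and hr_cube start at the same time without being flagged, while sales_cube and finance_cube start an hour apart and still collide at the end.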

How do you see these locks and blocking chains? Are they visible?

Starting in SQL Server 2008 R2 Service Pack 1, there are some great profiler trace events that help you see these locks. XML tags within the text of the trace events show who’s waiting and which locks are held. Collect a profiler trace with the normal events, but add these events to see who’s blocking whom, for how long, and on which objects the locks conflict.

https://support.microsoft.com/kb/2458438

- Locks Acquired
  - LockList XML nodes
- Locks Released
  - LockList XML nodes
- Lock Waiting
  - The transaction ID
  - The LockList XML node
  - The WaitList XML node
  - The HoldList XML node

In SQL Server 2008 Analysis Services or later, you can query the dynamic management views (DMVs) from an MDX query window to see the various connections, their transactions, who has been granted locks, and who is waiting on locks (blocked).

    select * from $system.discover_connections;
    go
    select * from $system.discover_sessions;
    go
    select * from $system.discover_transactions;
    go
    select * from $system.discover_locks;
    go
    select * from $system.discover_jobs;
    go

Reference: https://dwbi1.wordpress.com/2010/01/01/ssas-dmv-dynamic-management-view/

The same thing is possible in SQL Server 2005, but an alternate (harder) XMLA syntax is required.

    <Discover xmlns="urn:schemas-microsoft-com:xml-analysis">
      <RequestType>DISCOVER_LOCKS</RequestType>
      <Restrictions>
      </Restrictions>
      <Properties>
        <PropertyList xmlns="urn:schemas-microsoft-com:xml-analysis">
          <LocaleIdentifier>1033</LocaleIdentifier>
          <Content>Data</Content>
          <Format>Tabular</Format>
        </PropertyList>
      </Properties>
    </Discover>
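Once you have the DISCOVER_LOCKS rows (from the DMV query or the XMLA Discover), a small script can pair waiters with holders. The Python sketch below is a simplified illustration, not a complete lock analysis. It assumes the rows have been exported as dictionaries with the SPID, LOCK_OBJECT_ID and LOCK_STATUS columns, and that LOCK_STATUS is 0 for a waiting lock and 1 for a granted lock; check those values against the documentation for your server version. The sample rows and object names are hypothetical.

```python
from collections import defaultdict

# Assumed LOCK_STATUS values; verify against your server's DISCOVER_LOCKS docs.
WAITING, GRANTED = 0, 1


def blocking_pairs(lock_rows):
    """Map each waiting SPID to the SPIDs holding granted locks on the same object."""
    holders = defaultdict(set)
    for row in lock_rows:
        if row["LOCK_STATUS"] == GRANTED:
            holders[row["LOCK_OBJECT_ID"]].add(row["SPID"])

    blocked = defaultdict(set)
    for row in lock_rows:
        if row["LOCK_STATUS"] == WAITING:
            # A session is never reported as blocking itself.
            blocked[row["SPID"]] |= holders[row["LOCK_OBJECT_ID"]] - {row["SPID"]}
    return {spid: sorted(spids) for spid, spids in blocked.items()}


# Hypothetical rows: SPID 101 is a long query (Set 1), 202 is the processing
# commit (Set 2), and 303 is a new connection (Set 3).
rows = [
    {"SPID": 101, "LOCK_OBJECT_ID": "db:Sales", "LOCK_STATUS": GRANTED},
    {"SPID": 202, "LOCK_OBJECT_ID": "db:Sales", "LOCK_STATUS": WAITING},
    {"SPID": 303, "LOCK_OBJECT_ID": "db:Sales", "LOCK_STATUS": WAITING},
]

print(blocking_pairs(rows))  # {202: [101], 303: [101]}
```

This only pairs waiters with sessions that already hold a granted lock on the same object, so a connection queued behind a pending write request shows up as waiting on the original holder, not on the processing session. The Lock Waiting trace event's WaitList and HoldList nodes give the fuller picture.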

More Information and Suggested References on Analysis Services Processing

These are good reads I recommend to help illuminate the complexities and best practices of processing.

AS Processing Best Practices- https://technet.microsoft.com/library/Cc966525

Analysis Services Whitepaper download - https://www.microsoft.com/en-us/download/details.aspx?id=17303 Page 60 starts the processing section

Comments

- Anonymous

July 29, 2013

How can I avoid processing blocking? Is there a good method?