For a machine learning junkie like me, there was lots to love at Build 2016! In this post, I’ll fill you in on the machine learning announcements from Build.

In summary, we announced previews of the Microsoft Bot Framework and the Microsoft Cognitive Services (formerly Project Oxford) for adding intelligence to your applications. We showcased the fun Project Murphy bot and the inspiring Seeing AI story. All of the machine learning sessions given at Build 2016 are online at https://channel9.msdn.com/Events/Build/2016?t=machine-learning.

Microsoft Bot Framework

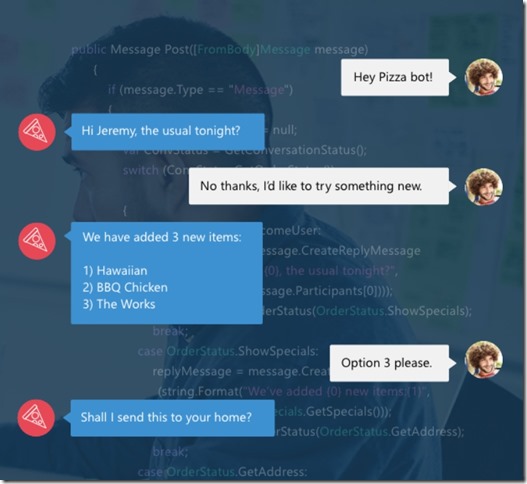

The Microsoft Bot Framework allows you to build intelligent bots that interact naturally with your users through text/SMS, Skype, Slack, Office 365 mail, and other popular services. During the keynote, they showed an example Domino’s Pizza bot that you could talk to (actually, type to) in natural language to order a pizza.

This great getting-started guide walks you through the steps of creating and publishing a bot:

1. Write your code (there is a C# template available in Visual Studio).

2. Use the Bot Framework Emulator to test your bot.

3. Publish your bot to Microsoft Azure.

4. Register your bot with the Microsoft Bot Framework.

5. Test the connection to your bot.

6. Configure the channels on which your bot should communicate.

The Bot Builder for Node.js and the Bot Builder for C# are SDKs that help you build your bot in either language.

The Bot Connector lets you connect your bot to popular conversation experiences, like GroupMe, Slack, Skype, SMS, and others.

The Bot Directory provides a way to upload and share your bots. Currently, it contains 7 sample bots from Build 2016: Summarize (to summarize web pages), Bing Image Bot (to search the web for memes, gifs, etc.), MurphyBot (to bring “What if” scenarios to life by creating mash-up images), Bing News Bot (to search the web for news), CaptionBot (to describe images), BuildBot (to navigate the Build conference), and Bing Music Bot (to search for music on the web).

For more information, there was a great deep-dive session on building a bot with the Bot Framework at Build 2016.

Microsoft Cognitive Services

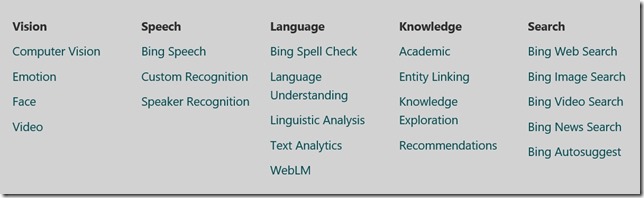

The Microsoft Cognitive Services (formerly called “Project Oxford”) are a set of 21 APIs in the areas of Vision, Speech, Language, Knowledge, and Search.

You may have already seen these APIs in action. A flurry of fun websites showcase their power: How Old, which predicts your age and gender from a photo; Twins or Not, which predicts whether two pictures show twins (or the same person); What Dog, which predicts a dog’s breed from its photo; CaptionBot, which describes images; and others at Cognitive Services applications.

At Build, demo kiosks all over the conference let you experience how these APIs can be used to build intelligent apps. Imagine the possibilities for retail if you could gauge the emotions of people looking at your products! And of course, both Project Murphy and the Seeing AI app (keep reading!) are powered by Microsoft Cognitive Services.

Learn more at https://microsoft.com/cognitive. There was also a great session by Harry Shum on the Cognitive Services at Build 2016.

Project Murphy

Project Murphy brings together the Microsoft Bot Framework and the Microsoft Cognitive Services in a very fun way. Project Murphy is a Skype Bot which answers questions like “What if Scott Hanselman was being chased by an elephant?” by doing Bing image searches to find pictures of people being chased by elephants and pictures of Scott Hanselman, and then combining those images. Needless to say, I installed it immediately and spent way too long playing with it! For example: what if I was a Disney princess?
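To make the "what if" mechanism concrete, here is a minimal sketch of how a bot like this might split a question into the two image searches described above. This is not Project Murphy's actual code: the regular expression, the `WhatIfQuestion` type, and the `parse_what_if` and `image_search_queries` helpers are all hypothetical, and the real bot surely handles far more phrasings.

```python
import re
from typing import List, NamedTuple, Optional

# Hypothetical pattern for "What if <subject> was/were <scenario>?" questions.
_WHAT_IF = re.compile(
    r"^\s*what if\s+(?P<subject>.+?)\s+(?:was|were)\s+(?P<scenario>.+?)\s*\??\s*$",
    re.IGNORECASE,
)


class WhatIfQuestion(NamedTuple):
    subject: str   # who the question is about, e.g. "Scott Hanselman"
    scenario: str  # the situation to picture, e.g. "being chased by an elephant"

    @property
    def needs_user_photo(self) -> bool:
        # "What if I..." questions: ask the user for a photo instead of searching.
        return self.subject.lower() in {"i", "me"}


def parse_what_if(text: str) -> Optional[WhatIfQuestion]:
    """Split a 'What if X was Y?' question into its subject and scenario."""
    match = _WHAT_IF.match(text)
    if match is None:
        return None
    return WhatIfQuestion(match.group("subject"), match.group("scenario"))


def image_search_queries(question: WhatIfQuestion) -> List[str]:
    """One search for the scenario, plus one for the subject unless the user supplies a photo."""
    queries = [question.scenario]
    if not question.needs_user_photo:
        queries.append(question.subject)
    return queries
```

For "What if Scott Hanselman was being chased by an elephant?", this yields one search for the scenario and one for Scott Hanselman; combining the resulting images is the hard part and is not shown here.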

There are a few interesting things to note here:

1. It supports “what if I…” questions, as you can see from the example above. If you ask “what if I…”, the bot asks you for a photo of yourself, which it stores for only 10 minutes to answer follow-up questions.

2. It asks for feedback via emojis, so it can improve its results (yay machine learning).

3. It does some work to adjust the orientation of the face if needed. I uploaded a picture of myself looking straight at the camera, and asked a question which produced an image with a face looking sideways, and it rotated my face to the correct angle.
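The 10-minute photo retention in the first point is a classic time-to-live cache. The sketch below shows one way to implement it; `ExpiringPhotoStore` and its methods are hypothetical names, and nothing here reflects how Project Murphy actually stores photos. The clock is injectable so expiry can be tested without waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple

PHOTO_TTL_SECONDS = 10 * 60  # the post says photos are kept for 10 minutes


class ExpiringPhotoStore:
    """Keeps each user's photo only until its time-to-live elapses (hypothetical)."""

    def __init__(self, ttl: float = PHOTO_TTL_SECONDS,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl
        self._clock = clock
        self._photos: Dict[str, Tuple[bytes, float]] = {}  # user id -> (photo, expiry time)

    def put(self, user_id: str, photo: bytes) -> None:
        # Storing a new photo replaces the old one and restarts the 10-minute window.
        self._photos[user_id] = (photo, self._clock() + self._ttl)

    def get(self, user_id: str) -> Optional[bytes]:
        entry = self._photos.get(user_id)
        if entry is None:
            return None
        photo, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: delete it so the photo is not kept any longer.
            del self._photos[user_id]
            return None
        return photo

    def purge_expired(self) -> None:
        # Call periodically so photos are dropped even if nobody asks for them again.
        now = self._clock()
        for user_id in [u for u, (_, exp) in self._photos.items() if now >= exp]:
            del self._photos[user_id]
```

Checking expiry on read alone is not enough for a privacy promise, which is why the sketch also has `purge_expired` for a periodic cleanup job.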

Seeing AI

At Build 2016, the Microsoft Cognitive Services stole the show at the close of the first keynote, with an inspiring video about a Microsoft employee who lost his sight at age 7 and used the Microsoft Cognitive Services along with glasses from Pivothead to help him see. The video shows several compelling scenarios:

- Using the Object Recognition API to describe the world around you. In the video, when he heard a strange sound, he touched the glasses to take a picture, and the Microsoft Cognitive Services identified a boy riding a skateboard and described the scene back to him.

- Using the Face and Emotion APIs to detect the emotions of those around you. Imagine having no visual cues while speaking to others in a meeting, and no idea whether they are interested in your ideas or falling asleep. In the video, he touched the glasses to take a picture, and the app identified his colleagues’ faces and reported their emotions.

- Using OCR to read a menu. Optical character recognition (OCR) is the ability to detect text in an image and convert it into machine-readable text. In the video, he uses his phone to take a picture of the menu, and the app then reads it aloud to him. It also uses some neat edge-detection logic to guide him while taking the photo, so that the entire menu is in the frame.
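The emotion scenario in the second bullet boils down to picking the strongest emotion per detected face and turning it into something that can be spoken. The sketch below assumes an Emotion API-style response: a JSON array with one object per face, each holding a `faceRectangle` and a `scores` map of emotion to confidence. Treat that shape as an assumption to check against the current API reference; the sample numbers are invented for illustration, and `dominant_emotions` and `spoken_summary` are hypothetical helpers, not part of any SDK.

```python
import json
from typing import List, Tuple

# Illustrative response in the assumed shape (one object per detected face);
# the numbers are invented for this example, not real API output.
SAMPLE_RESPONSE = """
[
  {"faceRectangle": {"left": 68, "top": 97, "width": 64, "height": 64},
   "scores": {"anger": 0.01, "contempt": 0.02, "disgust": 0.0, "fear": 0.0,
              "happiness": 0.85, "neutral": 0.1, "sadness": 0.01, "surprise": 0.01}},
  {"faceRectangle": {"left": 220, "top": 90, "width": 60, "height": 60},
   "scores": {"anger": 0.05, "contempt": 0.0, "disgust": 0.0, "fear": 0.0,
              "happiness": 0.0, "neutral": 0.7, "sadness": 0.2, "surprise": 0.05}}
]
"""


def dominant_emotions(response_json: str) -> List[Tuple[int, str, float]]:
    """Return (face index, strongest emotion, its score) for each detected face."""
    faces = json.loads(response_json)
    results = []
    for index, face in enumerate(faces):
        scores = face["scores"]
        emotion = max(scores, key=scores.get)  # highest-scoring emotion label
        results.append((index, emotion, scores[emotion]))
    return results


def spoken_summary(response_json: str) -> str:
    """Turn the per-face results into one line that an app could read aloud."""
    parts = [f"person {i + 1}: {emotion}" for i, emotion, _ in dominant_emotions(response_json)]
    return "; ".join(parts) if parts else "no faces detected"
```

With the sample above, `spoken_summary` produces a short per-person summary; a real app would also use the face rectangles (or the Face API) to say who is where.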

Resources

Machine learning sessions at Build 2016

Build blog post from the Machine Learning team

Microsoft Bot Framework dev portal

Comments

- Anonymous

April 22, 2016

Great summary! I've created a 15-minute video on how to create a UWP app with functionality similar to the Seeing AI app: https://elbruno.com/2016/04/20/coding4fun-whats-there-app-source-code-and-visionapi-description/ It was so easy and fun! Regards, El Bruno