IIS SEO Toolkit

Once again the IIS team are kicking butt at showing the value of Windows as a web platform, this time with the IIS SEO Toolkit (to bust the jargon: the Internet Information Services Search Engine Optimization Toolkit).

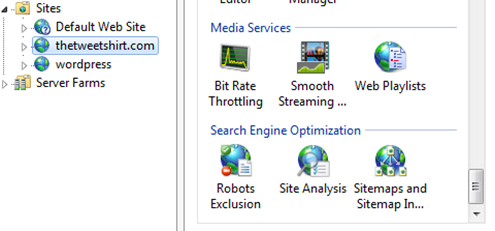

The easiest way to get it is, of course, to grab the Web Platform Installer and tick the option; it then becomes part of your IIS Manager. At this stage the Toolkit can only analyse websites hosted on your own server.

I didn’t have any sites locally to play with, so I yanked one that is constantly seeking publicity, Michael Korhdahi’s thetweetshirt.com. I sucked down the site content using Expression Web 2 and hosted it locally on my IIS server.

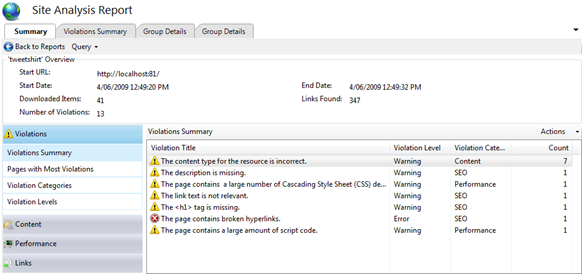

I then selected “Site Analysis” and analysed the site. That was pretty fast, since there wasn’t much content there, and it gave me a few things to look at:

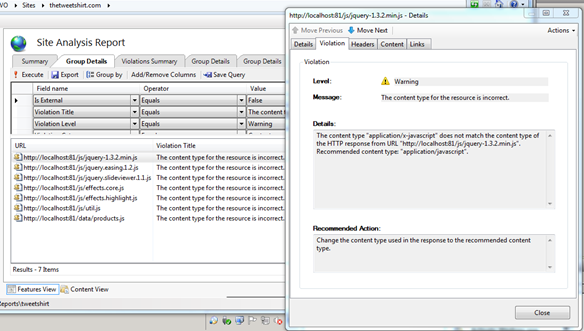

Drilling down into the first option gives you a queryable interface for finding rules, which is kinda neat, PLUS an insane amount of detail on the warning itself:

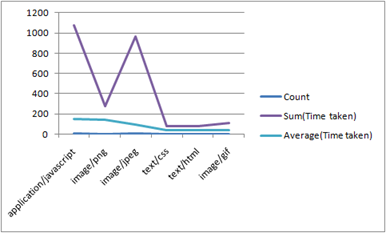

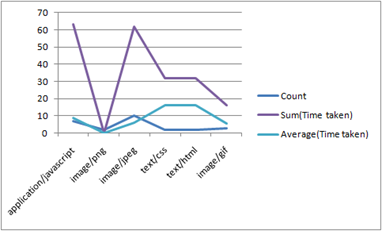

I could drill down on each detail forever, but by FAR the coolest feature is under the Performance tab, where you can analyse how fast the content loads. Even better, you can export this query as a CSV, which you can then import into something like Excel and graph.

So from this we can see that delivering the JavaScript and JPEGs is the least efficient part of the site…
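If you'd rather skip Excel, the same per-content-type breakdown can be scripted. This is a minimal sketch, assuming the export has one column with the content type and one with the load time; the column names `Content Type` and `Time Taken` are hypothetical, so check the header row of your own CSV and pass the real names in. The sample rows are made up purely to exercise the function.

```python
import csv
import io
from collections import defaultdict


def average_time_by_content_type(csv_text, type_column="Content Type",
                                 time_column="Time Taken"):
    """Average the load time per content type, slowest first.

    type_column and time_column are hypothetical defaults, not confirmed
    names from the SEO Toolkit export.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        content_type = row[type_column].strip()
        totals[content_type] += float(row[time_column])
        counts[content_type] += 1
    averages = {t: totals[t] / counts[t] for t in totals}
    # Sort by average time, descending, so the worst offenders come first
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Made-up sample rows, not results from thetweetshirt.com
    sample = (
        "URL,Content Type,Time Taken\n"
        "/index.htm,text/html,120\n"
        "/scripts/app.js,application/x-javascript,310\n"
        "/images/shirt.jpg,image/jpeg,280\n"
        "/images/back.jpg,image/jpeg,260\n"
    )
    for content_type, avg in average_time_by_content_type(sample):
        print(f"{content_type:28} {avg:7.1f}")
```

Sorting by average (rather than total) time keeps a single huge file from hiding behind many small ones; swap in a sum if total transfer time matters more to you.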

With a few tweaks I reckon I can fix that easily enough: just add some output caching…

```xml
<!-- In the site's web.config -->
<configuration>
  <system.webServer>
    <caching>
      <profiles>
        <!-- policy = user-mode output cache; kernelCachePolicy = http.sys kernel cache.
             CacheUntilChange keeps an entry until the file changes; DontCache skips caching. -->
        <add extension=".htm" policy="DontCache" kernelCachePolicy="DontCache" />
        <add extension=".gif" policy="CacheUntilChange" kernelCachePolicy="CacheUntilChange" />
        <add extension=".png" policy="CacheUntilChange" kernelCachePolicy="CacheUntilChange" />
        <add extension=".js" policy="DontCache" kernelCachePolicy="DontCache" />
        <add extension=".jpg" policy="CacheUntilChange" kernelCachePolicy="CacheUntilChange" />
      </profiles>
    </caching>
  </system.webServer>
</configuration>
```

And we do seem to get better results.

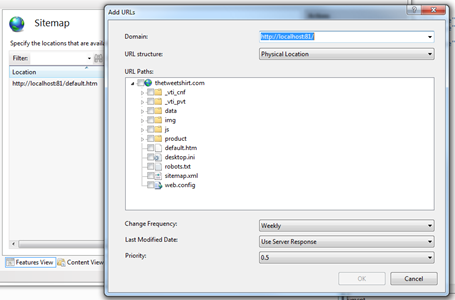
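With several profiles in play it's easy to lose track of which extensions are actually cached; in the config above, for instance, `.js` stays on `DontCache`. Here is a small sketch, using only Python's standard `xml.etree.ElementTree`, that lists the caching profiles from a web.config string. The function names are hypothetical helpers, not part of IIS or the Toolkit.

```python
import xml.etree.ElementTree as ET


def caching_profiles(web_config_text):
    """Map each extension to its (policy, kernelCachePolicy) pair."""
    root = ET.fromstring(web_config_text)
    profiles = {}
    # iter() also checks the root itself, so this works whether the text
    # starts at <configuration> or directly at <system.webServer>
    for web_server in root.iter("system.webServer"):
        for add in web_server.findall("caching/profiles/add"):
            profiles[add.get("extension")] = (
                add.get("policy"),
                add.get("kernelCachePolicy"),
            )
    return profiles


def uncached_extensions(profiles):
    """Extensions whose user-mode policy is DontCache, sorted."""
    return sorted(ext for ext, (policy, _) in profiles.items()
                  if policy == "DontCache")


if __name__ == "__main__":
    config = """<configuration><system.webServer><caching><profiles>
      <add extension=".htm" policy="DontCache" kernelCachePolicy="DontCache" />
      <add extension=".js" policy="DontCache" kernelCachePolicy="DontCache" />
      <add extension=".jpg" policy="CacheUntilChange" kernelCachePolicy="CacheUntilChange" />
    </profiles></caching></system.webServer></configuration>"""
    for ext, (policy, kernel) in sorted(caching_profiles(config).items()):
        print(f"{ext:6} user-mode={policy:17} kernel={kernel}")
```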

So that’s a very quick look at the analytics part of the package, but there’s also the ability to create a sitemap for search-engine spiders (bots) to follow:

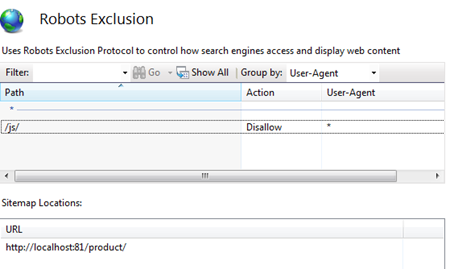
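The Toolkit generates the sitemap for you; purely to show what ends up in that file, here is a minimal Python sketch that builds a sitemaps.org-style `urlset` with one `<loc>` per page. The `localhost` URLs are hypothetical, and optional elements such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap document listing the given absolute URLs."""
    # Setting xmlns as a plain attribute keeps child tags unprefixed
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in the text for us
        ET.SubElement(url_el, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages on a locally hosted copy of the site
    print(build_sitemap(["http://localhost/", "http://localhost/about.htm"]))
```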

And then there’s the Robots Exclusion module, which lets you create the robots.txt file and set sitemap locations.
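For reference, the file the module manages is plain text: a `User-agent` group with `Disallow` rules, plus an optional `Sitemap` line pointing at the sitemap. This is a minimal sketch of that format; the `/scripts/` path and the sitemap URL in the example are hypothetical.

```python
def build_robots_txt(disallow_paths, sitemap_url=None, user_agent="*"):
    """Build a minimal robots.txt: one User-agent group plus an optional Sitemap line."""
    lines = [f"User-agent: {user_agent}"]
    if disallow_paths:
        lines.extend(f"Disallow: {path}" for path in disallow_paths)
    else:
        # An empty Disallow value means nothing is blocked for this group
        lines.append("Disallow:")
    if sitemap_url:
        # Sitemap is not tied to a User-agent group, so separate it with a blank line
        lines.append("")
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(["/scripts/"], "http://localhost/sitemap.xml"), end="")
```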

So all in all, a very neat little module that helps developers set up their sites to be ideal for search engines to index.

-jorke