Autoscaling Azure–Cloud Services

This post shows how to autoscale Azure Cloud Services using a Service Bus queue.

Background

My role as an Architect for Azure COE requires me to have many different types of discussions with customers who are designing highly scalable systems. A common discussion point is “elastic scale”, the ability to automatically scale up or down to meet resource demands. One of the keys to building scalable cloud solutions is understanding queue-centric patterns. Recognizing the opportunity to provide multiple points to independently scale an architecture ultimately protects against failures while it enables high availability.

In my previous post, I discussed Autoscaling Azure Virtual Machines where I showed how to leverage a Service Bus queue to determine if a solution requires more resources to keep up with demand. In that post, we had to pre-provision resources, manually deploy our code solution to each machine, and then use PowerShell to enable a startup task. This post shows how to create an Azure cloud service that contains a worker role, and we will see how to automatically scale the worker role based on the number of messages in a queue.

The previous post showed that we had to pre-provision virtual machines, and that the autoscale service simply turned them on and off. This post will demonstrate that autoscaling a cloud service means creating and destroying the backing virtual machines. Because data is not persisted on the local machine, we use Azure Storage to export diagnostics information, which gives us persistent storage that survives the instances.

Create the Cloud Service

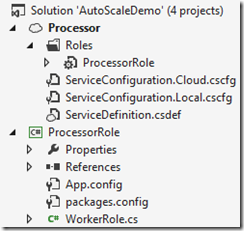

In Visual Studio, create a new Cloud Service. I named mine “Processor”.

The next screen enables you to choose from several template types. I chose a Worker Role with Service Bus Queue as it will generate most of the code that I need. I named the new role “ProcessorRole”.

Two projects are added to my solution. The first project, ProcessorRole, is a class library that contains the implementation for my worker role and contains the Service Bus boilerplate code. The second project, Processor, contains the information required to deploy my cloud service.

Some Code

The code for my cloud service is very straightforward, but definitely does not follow best practices. When the worker role starts, we output Trace messages and then listen for incoming messages from the Service Bus queue. As a message is received, we output a Trace message then wait for 3 seconds.

Note: This code is different than the code generated by Visual Studio that uses a ManualResetEvent to prevent a crash. Do not take the code below as a best practice, but rather as an admittedly lazy example used to demonstrate autoscaling based on the messages in a queue backing up.

WorkerRole.cs

    using Microsoft.ServiceBus.Messaging;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.ServiceRuntime;
    using System;
    using System.Diagnostics;
    using System.Net;

    namespace ProcessorRole
    {
        public class WorkerRole : RoleEntryPoint
        {
            // The name of your queue
            const string _queueName = "myqueue";

            // QueueClient is thread-safe. Recommended that you cache
            // rather than recreating it on every request
            QueueClient _client;

            public override void Run()
            {
                Trace.WriteLine("Starting processing of messages");

                while (true)
                {
                    // Not a best practice to use Receive synchronously.
                    // Done here as an easy way to pause the thread,
                    // in production you'd use _client.OnMessage or
                    // _client.ReceiveAsync.
                    var message = _client.Receive();

                    if (null != message)
                    {
                        Trace.WriteLine("Received " + message.MessageId + " : " + message.GetBody<string>());
                        message.Complete();
                    }

                    // Also a terrible practice... use the ManualResetEvent
                    // instead. This is shown only to control the time
                    // between receive operations
                    System.Threading.Thread.Sleep(TimeSpan.FromSeconds(3));
                }
            }

            public override bool OnStart()
            {
                // Set the maximum number of concurrent connections
                ServicePointManager.DefaultConnectionLimit = 12;

                // Initialize the connection to Service Bus Queue
                var connectionString = CloudConfigurationManager.GetSetting("Microsoft.ServiceBus.ConnectionString");
                _client = QueueClient.CreateFromConnectionString(connectionString, _queueName);

                return base.OnStart();
            }

            public override void OnStop()
            {
                // Close the connection to Service Bus Queue
                _client.Close();
                base.OnStop();
            }
        }
    }

Again, I apologize for the code sample that does not follow best practices.
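For contrast, here is a rough sketch of what the less lazy version might look like, using `_client.OnMessage` and a `ManualResetEvent` as mentioned in the note above. It is written from memory of the Visual Studio template rather than copied from it, and the `MaxConcurrentCalls = 1` setting is my own assumption for illustration. Note that without the artificial 3 second pause, this version would drain the queue much faster and the autoscale demo later in this post would not back up the same way.

```csharp
using Microsoft.ServiceBus.Messaging;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.ServiceRuntime;
using System;
using System.Diagnostics;
using System.Threading;

namespace ProcessorRole
{
    public class WorkerRole : RoleEntryPoint
    {
        const string QueueName = "myqueue";

        QueueClient _client;

        // Signaled in OnStop so that Run can return cleanly.
        readonly ManualResetEvent _completedEvent = new ManualResetEvent(false);

        public override bool OnStart()
        {
            var connectionString = CloudConfigurationManager.GetSetting("Microsoft.ServiceBus.ConnectionString");
            _client = QueueClient.CreateFromConnectionString(connectionString, QueueName);
            return base.OnStart();
        }

        public override void Run()
        {
            var options = new OnMessageOptions
            {
                AutoComplete = false,   // we call Complete ourselves after processing
                MaxConcurrentCalls = 1  // assumption: one message at a time per instance
            };

            // The callback runs on the SDK's message pump, not on this thread.
            _client.OnMessage(message =>
            {
                try
                {
                    Trace.WriteLine("Received " + message.MessageId + " : " + message.GetBody<string>());
                    message.Complete();
                }
                catch (Exception ex)
                {
                    // Release the message so it can be delivered again.
                    Trace.TraceError("Processing failed: " + ex.Message);
                    message.Abandon();
                }
            }, options);

            // Block here until OnStop signals shutdown.
            _completedEvent.WaitOne();
        }

        public override void OnStop()
        {
            // Stop receiving, then let Run return.
            _client.Close();
            _completedEvent.Set();
            base.OnStop();
        }
    }
}
```

The design point is that `Run` never spins in a loop: the message pump does the receiving, and the role simply waits on the event until the fabric controller asks it to stop.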

The Configuration

Unlike the previous approach, where we pre-provisioned virtual machines and copied the code to each one, Cloud Services will provision new virtual machine instances and then deploy the code from the Azure fabric controller to the cloud service. If the role is upgraded or maintenance is performed on the host, the underlying virtual machine is destroyed. This is a fundamental difference between roles and persistent VMs. This means we no longer RDP into each instance and make changes manually; instead, we incorporate any desired changes into the deployment package itself. The package we are creating uses desired state configuration to tell the Azure fabric controller how to provision the new role instance.

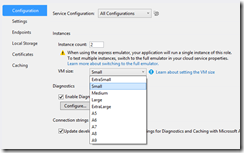

As an example, you might add the Azure Role instance to a virtual network as I showed in the post Deploy Azure Roles Joined to a VNet Using Eclipse where I edited the .cscfg file to indicate the virtual network and subnet to add the role to. Using Visual Studio, you can affect various settings such as the number of instances, the size of each virtual machine, and the diagnostics settings:

For a more detailed look at handling multiple configurations, debugging locally, and managing connection strings, see Developing and Deploying Microsoft Azure Cloud Services Using Visual Studio.

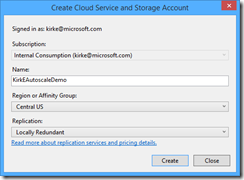

Deploying the Cloud Service

Right-click on the deployment project and choose Publish. You are prompted to create a cloud service, along with a storage account that is created automatically.

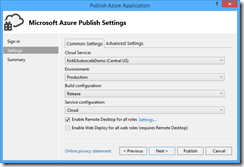

The next screen provides more settings: whether the code is being deployed to Staging or Production, whether it is a Debug or Release build, and which configuration to use. You can also enable Remote Desktop for each of the roles. I enable that, and provide the username and password used to log into each role.

Click Publish and you can watch the status in the Microsoft Azure Activity Log pane. Notice that the output shows we are uploading a package:

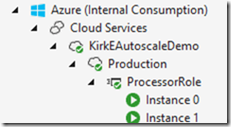

Once deployed, we can see the services are running in Visual Studio:

We can also see the deployed services in the management portal:

Managing Autoscale

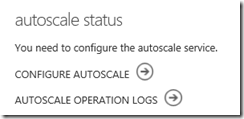

Just like we did in the article Autoscaling Azure Virtual Machines, we will use the autoscale service to scale our cloud service based on queue length. Go to the management portal, open the cloud service that you just created, and click the Dashboard tab. Then click the “Configure Autoscale” link:

On that screen you will see that we have a minimum of 2 instances because we specified 2 instances in the deployment package.

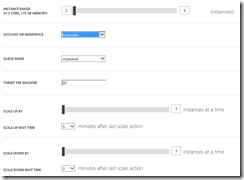

Click on the Queue option, and we can now scale between 1 and 350 instances!

OK, that’s a little much… let’s go with a minimum of 2 instances, maximum of 5 instances, and scale 1 instance at a time over 5 minutes based on messages in my Service Bus queue.
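To make those settings a bit more concrete, here is a minimal sketch of the kind of decision they describe. This is not the autoscale service's actual algorithm, just my own illustration: the `NextInstanceCount` name and the `targetPerInstance` parameter (how many queued messages one instance is expected to handle) are hypothetical.

```csharp
using System;

public static class AutoscaleSketch
{
    // Returns the instance count to move to on the next evaluation,
    // stepping by at most `step` and staying within [min, max].
    public static int NextInstanceCount(
        int current, long queueLength, int targetPerInstance,
        int min = 2, int max = 5, int step = 1)
    {
        if (targetPerInstance <= 0)
        {
            throw new ArgumentOutOfRangeException("targetPerInstance");
        }

        // Instances needed so that each one holds at most the target
        // number of messages (integer ceiling division).
        long desired = (queueLength + targetPerInstance - 1) / targetPerInstance;

        int next = current;
        if (desired > current)
        {
            next = current + step;   // scale up one step at a time
        }
        else if (desired < current)
        {
            next = current - step;   // scale down one step at a time
        }

        // Never leave the configured range.
        return Math.Max(min, Math.Min(max, next));
    }
}
```

With a hypothetical target of 20 messages per instance, `NextInstanceCount(2, 61, 20)` returns 3: four instances would be needed, but we only move one step at a time. An empty queue never takes us below the minimum of 2.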

Click save, and within seconds our configuration is saved.

Testing it Out

I wrote a quick Console application that will send messages to the queue once per second. The receiver only processes messages once every 3 seconds, so we should quickly have more messages in queue than 2 instances can handle, forcing an autoscale event to occur.
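The arithmetic behind that claim can be sketched as follows. This is a back-of-the-envelope model only: it assumes each instance handles exactly one message per 3 second pause and ignores the time spent in the receive call itself. The class and method names are mine.

```csharp
using System;

public static class BacklogEstimate
{
    // Net messages added to the queue per second.
    // Positive means the backlog grows, negative means it drains.
    public static double GrowthPerSecond(
        double sendIntervalSeconds, double processIntervalSeconds, int instances)
    {
        double arrivalRate = 1.0 / sendIntervalSeconds;          // messages sent per second
        double serviceRate = instances / processIntervalSeconds; // messages processed per second
        return arrivalRate - serviceRate;
    }

    // Smallest number of instances whose combined rate matches the sender.
    public static int InstancesToKeepUp(
        double sendIntervalSeconds, double processIntervalSeconds)
    {
        return (int)Math.Ceiling(processIntervalSeconds / sendIntervalSeconds);
    }
}
```

With one message sent per second and 2 instances each taking 3 seconds per message, the backlog grows by roughly one message every 3 seconds, or about 20 messages per minute. Under the same assumptions, a third instance would roughly break even.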

Sender

    using Microsoft.ServiceBus.Messaging;
    using Microsoft.WindowsAzure;
    using System;

    namespace Sender
    {
        class Program
        {
            static void Main(string[] args)
            {
                string connectionString =
                    CloudConfigurationManager.GetSetting("Microsoft.ServiceBus.ConnectionString");

                QueueClient client =
                    QueueClient.CreateFromConnectionString(connectionString, "myqueue");

                int i = 0;
                while (true)
                {
                    var message = new BrokeredMessage("Test " + i);
                    client.Send(message);

                    Console.WriteLine("Sent message: {0} {1}",
                        message.MessageId,
                        message.GetBody<string>());

                    // Sleep for 1 second
                    System.Threading.Thread.Sleep(TimeSpan.FromSeconds(1));
                    i++;
                }
            }
        }
    }

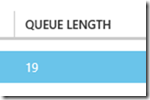

If we let the Sender program run for a while, it sends messages to the queue faster than the receivers can process them. When I go to the portal and look at the Service Bus queue, I can see that the queue length is now 61 after running for a short duration.

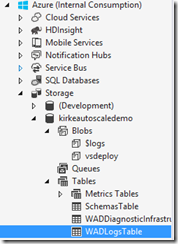

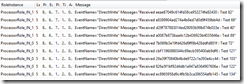

Hit refresh, and we see it is continuing to increase. Next, go to the Azure Storage Account used for deployment and look at the WADLogsTable:

Double-click and you will see that the roles are processing the messages, just not faster than the Sender program is sending them.
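If you would rather read those trace messages from code than from a table viewer, something like the following sketch could work with the classic Azure Storage client library. Treat it as an assumption-laden example: the `WadLogReader` class is hypothetical, and it relies on the diagnostics tables using partition keys of the form "0" followed by the event time in UTC ticks, and on the `RoleInstance` and `Message` columns being present.

```csharp
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Table;
using System;

public static class WadLogReader
{
    // Assumption: partition keys are "0" + UTC ticks of the event time.
    public static string ToPartitionKey(DateTime utc)
    {
        return "0" + utc.Ticks;
    }

    // Prints trace entries written during the last `window` of time.
    public static void PrintRecent(string storageConnectionString, TimeSpan window)
    {
        var account = CloudStorageAccount.Parse(storageConnectionString);
        var table = account.CreateCloudTableClient().GetTableReference("WADLogsTable");

        // Only scan partitions at or after the start of the window.
        var filter = TableQuery.GenerateFilterCondition(
            "PartitionKey",
            QueryComparisons.GreaterThanOrEqual,
            ToPartitionKey(DateTime.UtcNow - window));

        var query = new TableQuery<DynamicTableEntity>().Where(filter);

        foreach (var entity in table.ExecuteQuery(query))
        {
            EntityProperty instance, message;
            entity.Properties.TryGetValue("RoleInstance", out instance);
            entity.Properties.TryGetValue("Message", out message);

            Console.WriteLine("{0}  {1}",
                instance == null ? "?" : instance.StringValue,
                message == null ? "" : message.StringValue);
        }
    }
}
```

Calling `WadLogReader.PrintRecent(connectionString, TimeSpan.FromMinutes(10))` with the connection string of the storage account used for deployment should list which role instance logged each "Received" message.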

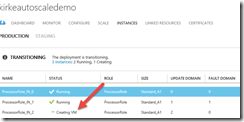

After a few minutes, the autoscale service sees that there are more messages in the queue than we configured. Because our current role instances cannot keep up with demand, a new virtual machine instance is created.

This is very different than when we used virtual machines. When using cloud services, the roles are created and destroyed as necessary. I then stop the sender program, and the number of queue messages quickly falls as our current number of instances can handle the demand:

And we wait for a few more minutes to see that the autoscale service has now destroyed the newly created virtual machine according to our autoscale rules.

This is a good thing, as the virtual machine was automatically created according to our autoscale rules as well. This should highlight the importance, then, of not simply using Remote Desktop to connect to a cloud service role instance to configure something. Those settings must be applied within the deployment package itself.

Monitoring

If we go to the operation logs we can see the deployment operation (note: some values are redacted by me):

Operation Log

    <SubscriptionOperation xmlns="https://schemas.microsoft.com/windowsazure"
        xmlns:i="https://www.w3.org/2001/XMLSchema-instance">
      <OperationId>3c8f4a65-64c4-7c6a-86db-672972e504b9</OperationId>
      <OperationObjectId>/REDACTED/services/hostedservices/kirkeautoscaledemo/deployments/REDACTED</OperationObjectId>
      <OperationName>ChangeDeploymentConfigurationBySlot</OperationName>
      <OperationParameters xmlns:d2p1="https://schemas.datacontract.org/2004/07/Microsoft.WindowsAzure.ServiceManagement">
        <OperationParameter>
          <d2p1:Name>subscriptionID</d2p1:Name>
          <d2p1:Value>REDACTED</d2p1:Value>
        </OperationParameter>
        <OperationParameter>
          <d2p1:Name>serviceName</d2p1:Name>
          <d2p1:Value>kirkeautoscaledemo</d2p1:Value>
        </OperationParameter>
        <OperationParameter>
          <d2p1:Name>deploymentSlot</d2p1:Name>
          <d2p1:Value>Production</d2p1:Value>
        </OperationParameter>
        <OperationParameter>
          <d2p1:Name>input</d2p1:Name>
          <d2p1:Value><?xml version="1.0" encoding="utf-16"?>
            <ChangeConfiguration xmlns:i="https://www.w3.org/2001/XMLSchema-instance"
                xmlns="https://schemas.microsoft.com/windowsazure">
              <Configuration>REDACTED
          </d2p1:Value>
        </OperationParameter>
      </OperationParameters>
      <OperationCaller>
        <UsedServiceManagementApi>true</UsedServiceManagementApi>
        <UserEmailAddress>Unknown</UserEmailAddress>
        <ClientIP>REDACTED</ClientIP>
      </OperationCaller>
      <OperationStatus>
        <ID>REDACTED</ID>
        <Status>InProgress</Status>
      </OperationStatus>
      <OperationStartedTime>2015-02-22T13:38:12Z</OperationStartedTime>
      <OperationKind>UpdateDeploymentOperation</OperationKind>
    </SubscriptionOperation>
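If you collect several of these entries, a small helper can pull out the fields that matter. The sketch below uses LINQ to XML and assumes a well-formed entry (the redacted sample above would need its elided parts restored first). The `OperationLogSummary` class is my own invention, and it reads the namespace from the root element rather than hard-coding it.

```csharp
using System;
using System.Xml.Linq;

public static class OperationLogSummary
{
    // Returns a one-line summary: operation name, status, and start time.
    public static string Summarize(string xml)
    {
        XElement root = XDocument.Parse(xml).Root;

        // Reuse whatever default namespace the document declares.
        XNamespace ns = root.Name.Namespace;

        string name = (string)root.Element(ns + "OperationName");
        string started = (string)root.Element(ns + "OperationStartedTime");

        XElement statusElement = root.Element(ns + "OperationStatus");
        string status = statusElement == null
            ? null
            : (string)statusElement.Element(ns + "Status");

        return string.Format("{0} [{1}] started {2}", name, status, started);
    }
}
```

For an entry like the one above, this would produce a line containing the operation name `ChangeDeploymentConfigurationBySlot`, the status `InProgress`, and the start time, which is usually enough to line up autoscale activity with what you saw in the queue.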

For More Information

Developing and Deploying Microsoft Azure Cloud Services Using Visual Studio