HAVM Cluster–Configuration Recommendations on Windows Server 2008 R2

Whether we are connecting to virtual machines or physical ones, what matters most is that the servers are available and responsive. To keep VMs available, Failover Clustering provides a number of features that add redundancy and recoverability to Hyper-V VMs.

- Live Migration – Lets us move VMs between nodes with zero downtime so that we can perform hardware and software maintenance on the Hyper-V hosts (aka the parent partition).

- Cluster Shared Volumes (CSV) – Lets every CSV-enabled cluster disk be accessible from every cluster node at the same time. It also provides us with additional redundancy in how we connect to the storage.

- Health Monitoring – The cluster can monitor the health of the VMs and take immediate action to bring them back to life if they are not responding.

All of these features work together to make your VMs highly available (HA). To maintain this HA, we must ensure that best practices are in place. Done properly, the net result is VMs that stay online, responsive and available more often.

Validate

First off, many of you have heard of Validate or perhaps have been using it for years. Windows Server 2008 marked the beginning of a new trend of “best practice” type tools being built right into the OS to check for proper configuration of various components. The Cluster Validation Wizard is a fully functional tool that tests the h/w, drivers and other components that are required to support a Failover Cluster system. It is designed to be run prior to or after deployment, and it requires that all h/w, storage connections, networking and the Failover Clustering feature be in place before it is run.

Cluster Validate is one of the most useful tools that we have put into the hands of our customers. It incorporates the kind of testing that our h/w vendors have been doing for over a decade to get their Failover Cluster solutions listed on the Microsoft HCL. Once Validate is complete, you are greeted with a detailed report of the testing that was done on your cluster system. When designing your new Hyper-V Failover Cluster, running Validate should be among your first action items.

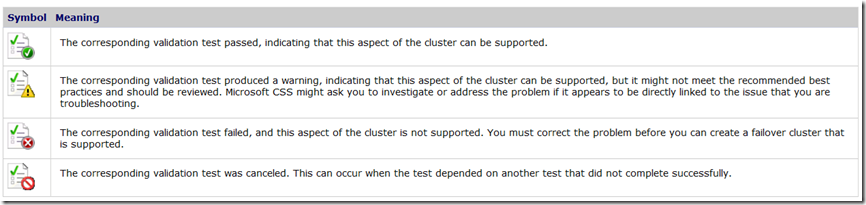

- Green check marks mean that everything is configured per Microsoft best practices and/or is working properly.

- Yellow warnings indicate that something is wrong that you should consider addressing, but it will not prevent you from building your cluster. One example is having only a single network connection for your cluster nodes to communicate over: you can technically build a cluster with only one network, but Microsoft requires a second network for the cluster to be fully supported. Another example is running Validate post-production and finding that your Quorum configuration is not set properly for the number of nodes present in the cluster.

- Red X’s are bad. If you have any red ‘X’ in your report, the cluster will not function on your setup and you must address the problem before you can continue. An example is storage that does not support SCSI-3 Persistent Reservations (PRs) or other hardware features that we require.

Validate should be run after creating your Hyper-V Virtual Networks. It will identify many common configuration issues and most importantly, any problems with storage.

More info on Validate: https://technet.microsoft.com/en-us/library/cc732035(WS.10).aspx
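
Validate can also be run from PowerShell, which is handy when you want to repeat it after a configuration change. The following is a minimal sketch, assuming the FailoverClusters module that is installed with the Failover Clustering feature on Windows Server 2008 R2. The node names are hypothetical placeholders.

```powershell
# Load the cluster cmdlets (not loaded automatically on Windows Server 2008 R2).
Import-Module FailoverClusters

# Hypothetical node names; replace with the names of your own Hyper-V hosts.
$nodes = "HV-NODE1", "HV-NODE2"

# List the available validation tests and categories without running them.
Test-Cluster -Node $nodes -List

# Run the validation tests against both nodes.
# A validation report is written when the run completes.
Test-Cluster -Node $nodes
```

Review the resulting report the same way you would review the one produced by the wizard: green, yellow and red results carry the same meaning.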

Live Migration

Live Migration, as mentioned, is the feature that allows us to move a VM between cluster nodes with ZERO downtime. We want Live Migration to just work, right? Plug the nodes in, create a few VMs, and voila, we can Live Migrate our VMs around with no problem. For this to succeed, however, some specific configurations must be in place. To begin with, the processing that the VM was doing on the original host must be able to continue on the new host. If the processors are from the same manufacturer but are different models or generations, Live Migration can fail; in that case we can enable ‘processor compatibility’ on the VMs, which essentially brings the processor features exposed to the VM down to the lowest common denominator. We do not support migrating between nodes with processors from different manufacturers.

- If the ‘virtual switches’ are not configured identically, Live Migration may fail. Ensure that every setting, even down to the naming of the actual networks, is identical from node to node.

- If any changes have been made to a VM through Hyper-V Manager, the Virtual Machine Configuration may be inconsistent and require a ‘refresh’ in Failover Cluster Manager.

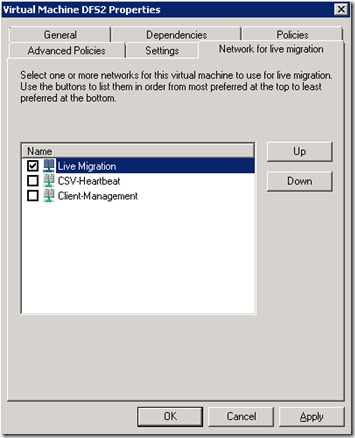

- Be sure to specify the network that you want the VMs to use for Live Migration. Also, set a secondary network if you have one as a backup in case the preferred network fails.

- IPSec is fine to use on all of the cluster networks. However, for performance reasons, we recommend keeping it off the Live Migration network unless it is required for security.
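
The refresh and the migration itself can be scripted as well. This is a rough sketch, assuming the FailoverClusters module on Windows Server 2008 R2. The VM, configuration resource and node names are hypothetical, and the exact name to pass to `Update-ClusterVirtualMachineConfiguration` is an assumption that you should confirm against the output of `Get-ClusterResource` on your own cluster.

```powershell
Import-Module FailoverClusters

# Hypothetical names; adjust to match your cluster.
$vmGroupName  = "VM1"                               # clustered VM (group) name
$vmConfigName = "Virtual Machine Configuration VM1" # assumed resource name; verify first
$targetNode   = "HV-NODE2"

# Show the resources in the VM's group so the names above can be verified.
Get-ClusterGroup $vmGroupName | Get-ClusterResource

# Refresh the clustered VM configuration after changes made in Hyper-V Manager.
Update-ClusterVirtualMachineConfiguration -Name $vmConfigName

# Move the running VM to the target node.
Move-ClusterVirtualMachineRole -Name $vmGroupName -Node $targetNode
```

Running the refresh before the move keeps the cluster's view of the VM in step with what Hyper-V Manager shows.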

Cluster Shared Volume

The CSV network will be the one that has the highest priority in the cluster. You will determine this by looking at the metric that is set in the network properties. The lowest metric value indicates the ‘highest’ priority. If the network that you intend to use for CSV traffic is not already set to the highest priority, you will want to run a PowerShell command to modify the metric value.

- To determine the current metric for the cluster networks:

- Get-ClusterNetwork | ft Name, Metric, AutoMetric, Role

- To modify the metric value of one of the cluster networks:

- (Get-ClusterNetwork "Cluster Network").Metric = 900

Additional Details at: https://technet.microsoft.com/en-us/library/ff182335(WS.10).aspx
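
Putting the two commands together, the check and the change might look like the sketch below. It assumes the FailoverClusters module on Windows Server 2008 R2. The network name is hypothetical, and subtracting 100 from the lowest existing metric is just one way to arrive at a lower value.

```powershell
Import-Module FailoverClusters

# Hypothetical name of the network intended for CSV traffic.
$csvNetworkName = "Cluster Network 3"

# Show current priorities; the network with the lowest metric is preferred.
Get-ClusterNetwork | Sort-Object Metric | Format-Table Name, Metric, AutoMetric, Role

$csvNetwork  = Get-ClusterNetwork $csvNetworkName
$lowestOther = Get-ClusterNetwork |
    Where-Object { $_.Name -ne $csvNetworkName } |
    Measure-Object -Property Metric -Minimum

# Only change the metric if some other network has an equal or lower value.
if ($lowestOther.Count -gt 0 -and $csvNetwork.Metric -ge $lowestOther.Minimum) {
    # Pick a value below every other network (never below 1).
    $csvNetwork.Metric = [Math]::Max(1, $lowestOther.Minimum - 100)
}

# Confirm the result. Setting Metric by hand turns AutoMetric off for that network.
Get-ClusterNetwork | Sort-Object Metric | Format-Table Name, Metric, AutoMetric, Role
```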

- NTLM must be enabled on all nodes

- Ensure identical HBA/Storage/Drivers/Firmware across all nodes

- The CSV feature uses SMB, so Client for Microsoft Networks and File and Printer Sharing must be enabled.

  - This is a little different for those of you who are used to disabling these on the heartbeat networks.

- Be aware of available space on the CSV volumes; VMs will stop or be unable to start if there is no free space.

  - Alternatively, use fixed disks, which allocate their full size up front and so cannot grow and exhaust the volume.

  - https://blogs.msdn.com/b/clustering/archive/2010/06/19/10027366.aspx
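
To keep an eye on free space, the CSV volumes can be queried from PowerShell. This is a minimal sketch, assuming the FailoverClusters module on Windows Server 2008 R2. The 15 percent threshold is an arbitrary, hypothetical value.

```powershell
Import-Module FailoverClusters

# Hypothetical warning threshold, in percent free.
$minPercentFree = 15

Get-ClusterSharedVolume | ForEach-Object {
    $volumeName = $_.Name
    # A CSV can report more than one volume, so walk each entry.
    foreach ($info in $_.SharedVolumeInfo) {
        New-Object PSObject -Property @{
            Name        = $volumeName
            Path        = $info.FriendlyVolumeName
            FreeGB      = [Math]::Round($info.Partition.FreeSpace / 1GB, 1)
            PercentFree = [Math]::Round($info.Partition.PercentFree, 1)
        }
    }
} |
    # Keep only the volumes that are below the threshold.
    Where-Object { $_.PercentFree -lt $minPercentFree } |
    Format-Table Name, Path, FreeGB, PercentFree -AutoSize
```

If this returns no rows, every CSV volume is above the threshold. Scheduling something like this gives you warning before dynamically expanding disks fill a volume.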

Networking

Networking is absolutely critical in a Hyper-V cluster system. Depending on how many network adapters you have in your nodes, you have some options as to how to configure them. Although it can be done with fewer, the ideal situation is to have four networks, plus a fifth if you are using iSCSI.

- Virtual Machine Access

  - The first network is used by your virtual machines to get out onto your client network.

- Management

  - This network is set aside for management: Hyper-V management, SCVMM, Failover Cluster, etc.

- Cluster and CSV

  - Inter-node communications/heartbeating and CSV traffic happen over the third network. This network is used to access the CSV volumes in redirected access mode or when we are doing other maintenance on CSV volumes, including backup operations. As mentioned, this will be your highest priority network.

- Live Migration

  - This network is used to transfer the memory pages of the VM during the Live Migration process. If possible, always provide a dedicated network for Live Migration.

- iSCSI

  - A fifth network would be in place if we were also using iSCSI.

If you do not have a network to dedicate to Live Migration, you will need to configure a QoS policy to limit the bandwidth used for Live Migration purposes.

More details on networking can be found at: https://technet.microsoft.com/en-us/library/ff428137(WS.10).aspx
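
Once the networks are in place, it helps to confirm how the cluster sees each one. The sketch below assumes the FailoverClusters module on Windows Server 2008 R2. The network name "iSCSI" is hypothetical, and excluding the iSCSI network from cluster use is shown only as an illustration of changing a role, not as a setting taken from this article.

```powershell
Import-Module FailoverClusters

# Role values reported by the cluster:
#   0 = not used by the cluster
#   1 = cluster (internal) communication only
#   3 = cluster communication and client access
Get-ClusterNetwork | Format-Table Name, Role, Metric, Address -AutoSize

# Illustration only: keep cluster traffic off a storage network.
# "iSCSI" is a hypothetical network name.
(Get-ClusterNetwork "iSCSI").Role = 0
```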

Load Balancing

One of the biggest issues that I see people run into with Failover Clustering is properly distributing the load of VMs across the cluster nodes. Take special care to ensure that each node can handle the load of all the VMs that it may host at one time. With the release of SP1 and the new Dynamic Memory feature, administration will be made simpler, but performance baselining should still be completed to ensure the cluster nodes are not over-extended. SCVMM, in conjunction with SCOM, may be used to assist with proper load balancing of the VMs.
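
As a quick first look at how VMs are spread out, you can count the virtual machine resources per node. This is a minimal sketch, assuming the FailoverClusters module on Windows Server 2008 R2. A VM count is only a rough proxy and does not replace baselining CPU and memory.

```powershell
Import-Module FailoverClusters

# Count clustered VMs per owning node.
Get-ClusterResource |
    Where-Object { $_.ResourceType.Name -eq "Virtual Machine" } |
    Group-Object -Property { $_.OwnerNode.Name } |
    Sort-Object Count -Descending |
    Format-Table Name, Count -AutoSize
```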

In my next blog, we will look at the issues of, and how to overcome, collecting performance data from CSV volumes. Thank you! –Kaitlin
