Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

This blog post is authored by Omar Alonso, a Senior Data Scientist Lead at Microsoft. To subscribe to this blog, click here.

Every successful chef will tell you that a key part of preparing a great meal is how you source your ingredients. The same advice applies to supervised or semi-supervised machine learning solutions: the quality of labels makes a huge difference. There is a wide range of ML algorithms and they all need good input data for you to avoid a “garbage in, garbage out” situation.

Common examples of applications that require labels are content moderation, information extraction, search engine relevance, entity resolution, text classification, and multimedia processing. In this post we briefly describe the main areas that impact the overall quality of labels.

What is a Label?

Label corpora may be used to train and evaluate a wide range of learning algorithms. Assigning a label is considered a judgment task performed by a human (worker, judge, expert, annotator, etc.) using an internal tool or with some of the crowdsourcing platforms available on the Web. Often perceived as a boring and time consuming activity, collecting high quality labels is a fundamental part of the ML process that requires full attention so it is important to own it end-to-end.

To illustrate the process, let’s see how labels are collected and used in search engines (Figure 1). Because the Web is so large it is not possible to use humans to label the entire collection. Instead, a representative sample is labeled by hand and predictive features are identified on this subset so an ML approach can be used to process the rest of the Web. Often, one or more judges will label the same document with the goal of obtaining high agreement. In aggregate, using some voting scheme, the final label is produced and it will be used in the learning phase. This model is then used to predict the labels for the rest of the collection that have not been part of this judgment task. Finally, we need to be able to compare the performance of the system. As we can see, every part of the workflow heavily depends on good judgments and good labels.

Figure 1. The importance of labels: from document assessment to performance evaluation.

How to Ask Questions

At some point, all tasks involving the collection of labels require asking a question to a human being, so that they can provide the best possible answer. E.g. is this document relevant for this topic? It this query navigational or informational? Does this image contain adult content? Asking questions that are clear and precise is not an easy exercise. In addition to using plain and understandable English, the information necessary to answer the question should be made available within a self-contained task. Good content (data) also keeps workers engaged.

Let’s examine a few examples. In Figure 2, the task is about video annotation. Unfortunately, the task is unclear, there are too many requests, too many “do not’s” and “reject” clauses, and the task is poorly presented:

Figure 2. How not to ask questions.

Figure 3 shows a task about data collection from ads. The problem with this design is that there are many steps that require the worker to go to one place or another, copy, enter, count, etc. making the task a difficult one for them to succeed on.

Figure 3. How not to ask questions.

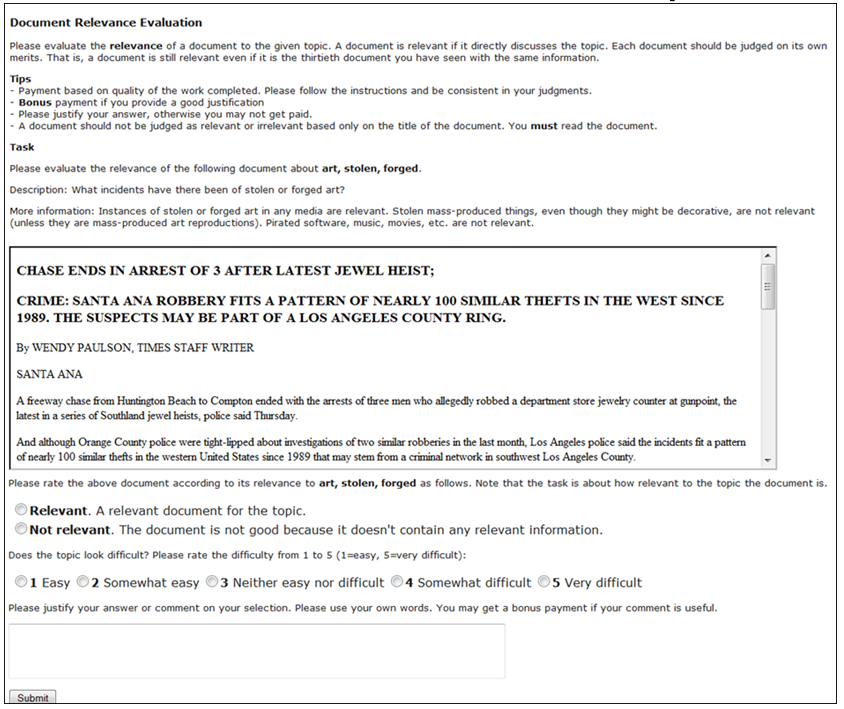

In contrast, Figure 4 shows how to ask a worker to assess the relevance of a topic to a web page. First, there’s a clear description of the task and a few tips provided. Next, the web page is rendered in the context of the task itself (i.e. there is no need to navigate someplace else). Finally, the question of relevance is asked in the context of a topic and web page that have been provided.

Figure 4. How to ask relevance evaluation questions.

How to Debug Tasks

Debugging solutions that use human computation is a somewhat difficult task. How would we know if our task is working? Or why our labels have a low agreement. If the task is problematic, how do we debug it? One solution is to sequentially zoom into each of the following factors and measure progress via an inter-rater agreement:

Data. The first step is to look at our data. Is the data problematic? Are there certain factors that can cause bias in the workers?

Workers. Human-based tasks are completed by humans and thus certain errors are expected. How are the workers performing in our tasks? Can we identify spammers from the ones that make honest mistakes? Can we detect good workers?

Task. If we are still having problems then the issue may be of task design. Maybe the task is so unclear that not even an expert can provide us with the right answer. Time to go back to the drawing board and rethink.

Measuring the level of agreement among judges is useful for assessing how reliable the label is. While it is very difficult to get perfect agreement, low agreement among workers is an indication that there is something wrong. Standard statistics for measuring inter-rater agreement produce values between 1 and -1. A value of 1 indicates perfect agreement among workers, a value of 0 indicates that workers are assigning labels randomly, and a negative value indicates that disagreements are systematic. Examples of such statistics are Cohen’s kappa (2 raters), Fleiss’ kappa (n raters), and Krippendorff’s alpha (n raters with missing values). These and many more inter-rater statistics are available in modern statistical packages like R. The advice is simple: use one that fits your need and don’t rely on percentage agreement.

How to Assess Work Quality

Quality control is an on-going activity that should be done before a task goes into production, during the time the task is being executed, and after the task is completed. Each check point allows us to manage the quality and get the best possible result.

There are algorithms for managing work quality and they all depend on the specific domain. Using majority voting works well and it is cheap to implement. More sophisticated approaches such as EM-based, maximum likelihood and explore-exploit provide other alternatives.

Conclusion

Labels are crucial. Avoid cutting corners and rushing this step simply because you want to play with the latest learners. Your data gets used for creating training sets, modeling and evaluation. Many different skills are needed to create quality labels, including behavioral science, algorithms, statistics, human factors and more. To create successful production ML systems, you must be willing to work with other teams and invest the time necessary for this important area.

Omar

Follow me on Twitter

A few references, for those of you interested to read further on this topic:

[1] O. Alonso. “Implementing Crowdsourcing-based Relevance Experimentation: An Industrial Perspective”, Information Retrieval, 16(2), 2013.

[2] O. Alonso, C. Marshall, and M. Najork. “Debugging a Crowdsourced Task with Low Inter-rater Agreement”, JCDL 2015.

[3] E. Law and L. von Ahn. Human Computation. Morgan & Claypool, 2011.

Comments

- Anonymous

July 04, 2015

In some respects, this is almost more about UI design than anything else!