Exploring Design Options With The Exchange Server Role Requirements Calculator

As an Exchange consultant with Microsoft Consulting Services, I count the Exchange Server Role Requirements Calculator among the primary tools in our tool belt. While I’ve been working with Exchange for a decade and a half, dating back to Exchange 5.5, until two years ago I was mostly working in a support capacity and didn’t dig too deeply into the design aspects of Exchange. As such, really getting into the nuances of the calculator has been something new for me.

What I want to talk about in this post are some of the lessons I’ve learned while using the calculator, some tricks I’ve discovered, and the general approach I take to using it. I’ll do this by walking through several calculator scenarios and describing the decisions I make along the way. This is not intended to be a definitive guide to the calculator, as there are many possible approaches to take, and no single right answer (although probably many wrong answers!).

When sizing an environment, one of the most important factors for me is having options to choose from. I don’t want to run through the calculator just once, or even just a couple of times: even though a design may look good at first glance, there may be more economical or practical options that I haven’t discovered. Instead, I prefer to run through the calculator many times and, to a reasonable extent, try to find every combination of hardware that could work for the environment. Once I’ve identified all possible designs, I can put their major components and requirements together into a single table for easy comparison.

To find the different options available for a design, I like to think of the options in the calculator as a slider. At one end of the slider, you have the fewest servers possible. Because the number of servers is small, the amount of hardware required per server (CPU, memory, storage) is high. At the other end, you have the least storage per server possible (and consequently, the least CPU and memory). Because there’s less storage per server, the number of servers in the environment is high. Then there are all the options in between the two extremes, where moving the slider in either direction directly affects all required resources. The ideal design is probably somewhere within this range, although you won’t know until you can look at all the options side by side.

The options I describe above are what I consider the first layer of design options. All of the options in this layer apply to designs where, regardless of the number of DAGs, every DAG has the same number of nodes (for example, 8 nodes per DAG). The next layer of design options is found by repeating the above process with a different number of nodes per DAG (for example, 12 nodes per DAG). For the purposes of this post, I will only walk through finding the options for a single DAG size, but the concepts are easily applied to DAGs of any size.

Getting Started

Before I start walking through all possible options, the first thing I like to do is plug all of my static design requirements (those that will not change for any model) into the calculator and save that as a template. That way, when I start a new model, all I have to do is make a copy of the template, and I don’t have to plug in common requirements repeatedly.

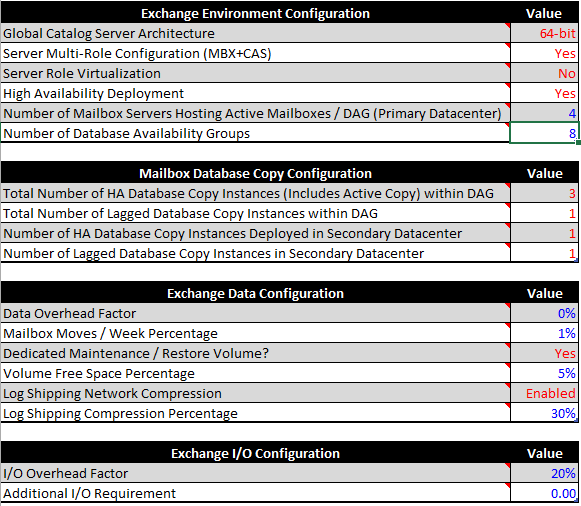

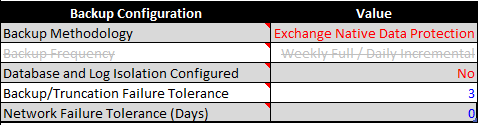

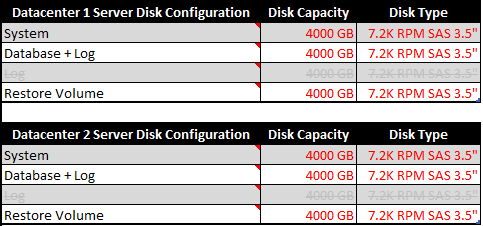

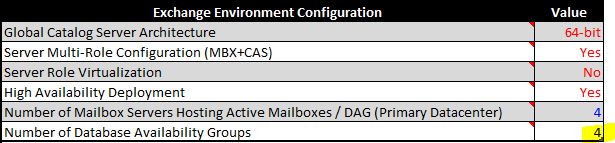

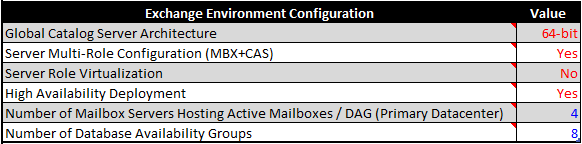

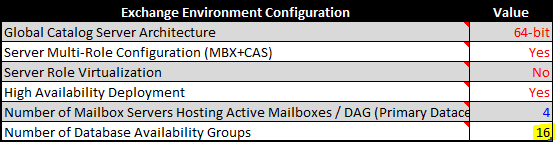

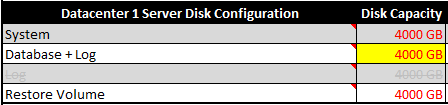

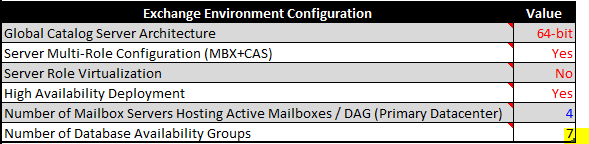

For the examples I will be walking through, I am using a mostly default copy of the calculator. Notable design decisions and/or changes are as follows:

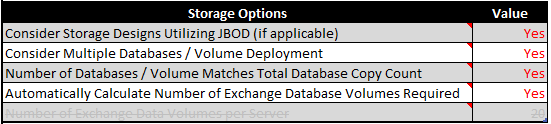

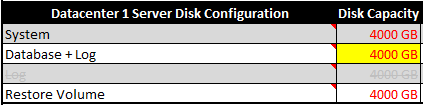

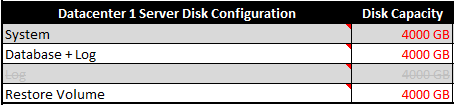

All servers will use JBOD with 4TB 7.2K RPM SAS disks

A two disk mirror will be used for the System Disk and Exchange Binaries

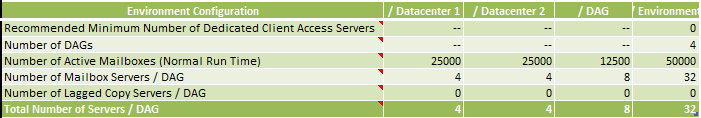

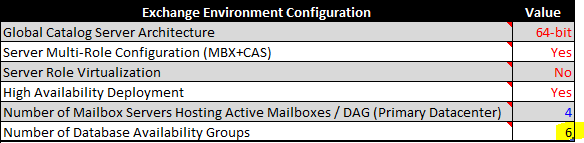

The DAG model is Active/Active (Single DAG)

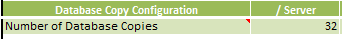

There are 8 nodes per DAG

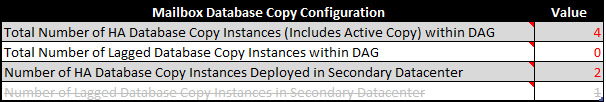

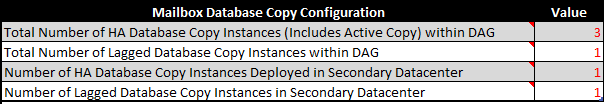

There are 4 copies of each database: 2 HA in the primary datacenter, and 1 HA and 1 LAG in the secondary datacenter

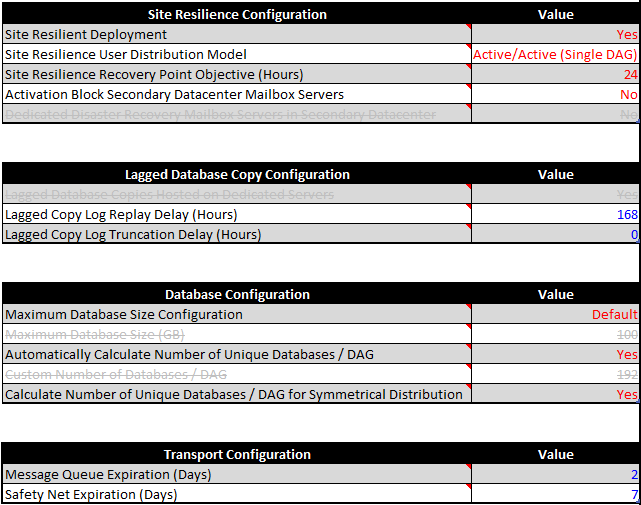

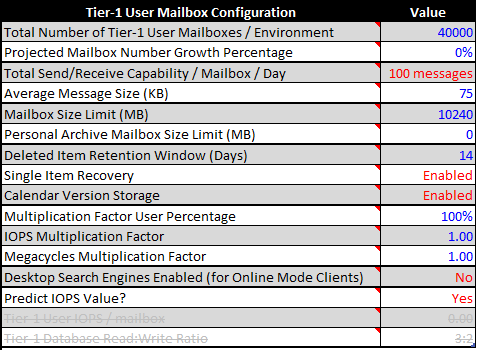

There are two tiers of users, who only differ in their mailbox sizes:

- 40,000 10GB mailboxes

- 10,000 25GB mailboxes

All common input into the calculator which will be present in the template is shown below:


Lastly, before I walk through the various options, I want to cover one more design goal for these exercises: to use both storage enclosure and server rack space as efficiently as possible. It’s most likely not cost-effective to purchase a 12-disk DAS enclosure if you’re only going to put 4 disks in it. The servers I am designing for will be 2U servers capable of housing 12 LFF disks. DAS enclosures will also be 2U and capable of housing 12 LFF disks. Note that I’m not specifically endorsing these form factors for your servers and DAS enclosures; I just chose them for the purposes of this post, as they’re common and easy to work with.
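The enclosure and rack-space arithmetic behind this goal is easy to script. Here is a minimal Python sketch, assuming the form factors above (a 2U server with 12 LFF bays, plus 2U DAS enclosures with 12 LFF bays each) and that every disk, including the system mirror, lives in the server or its enclosures. The `footprint` function is a hypothetical helper, not part of the calculator.

```python
import math

SLOTS_PER_CHASSIS = 12  # LFF bays in the 2U server and in each 2U DAS enclosure
UNITS_PER_CHASSIS = 2   # rack units per server or enclosure


def footprint(disks_per_server: int, servers: int) -> dict:
    """Return DAS enclosure count, rack units, and empty bays for a design."""
    # Number of 12-bay chassis (server + enclosures) each server needs.
    chassis_per_server = math.ceil(disks_per_server / SLOTS_PER_CHASSIS)
    # The server's own bays hold the first 12 disks; the rest go in enclosures.
    enclosures_per_server = chassis_per_server - 1
    return {
        "das_enclosures": enclosures_per_server * servers,
        "rack_units": chassis_per_server * UNITS_PER_CHASSIS * servers,
        "empty_bays_per_server": chassis_per_server * SLOTS_PER_CHASSIS - disks_per_server,
    }
```

For example, 36 disks per server across 32 servers works out to 64 enclosures and 192U with no empty bays, while 37 disks per server would add a third enclosure to each server and leave 11 bays empty.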

Ok, on to the options!

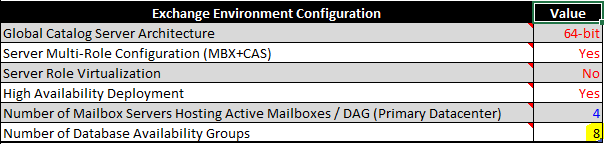

Option 1: Least Number of Servers / Maximum Resources Per Server

For this first option, I’m going to crank the slider all the way to one side and try to minimize the number of servers required for the design. The first thing I want to do is tweak the calculator to the point where I get the most CPU consumption out of a server without going over the maximum recommended CPU limit, which is 80%. Since I’m sticking with an 8-node DAG for all designs, the only way to adjust CPU usage is to change the number of servers, which means increasing or decreasing the number of DAGs. Here’s how things stand when I start out:

Input Tab:

Role Requirements Tab:

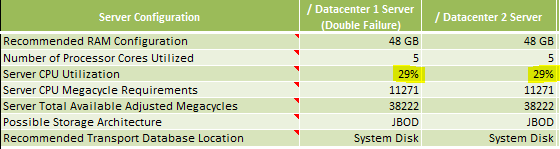

If I keep lowering the number of DAGs, I find that at 3 DAGs, CPU usage is just under 80%:

Input Tab:

Role Requirements Tab:

What about the LAGs?

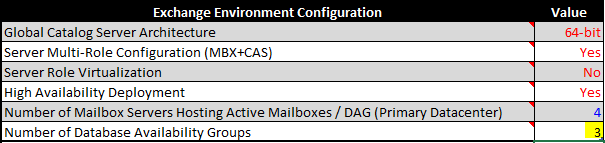

Now here is a minor (or potentially major) gotcha. With the configuration we are using, the calculator does not plan on the LAGs ever being activated, and does not account for that scenario when calculating CPU and memory requirements. This scenario could occur if a datacenter failure took you down to 2 available copies, and another server failure then brought you down to 1 available copy: the LAG. To get the calculator to tell us the requirements for activation in this scenario, we have to tell it there will be no LAGs. We do this by temporarily setting the copy configuration to 4 HA copies, with 2 per site.

Input Tab:

Role Requirements Tab:
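To make the failure sequence concrete, here is a small Python sketch that models the four copies of one database in this design and removes them the way the scenario above describes. The `Copy` type and `surviving` function are hypothetical illustrations, not part of the calculator, and the sketch assumes that extra server failures hit non-lagged copies first (the worst case for this question).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    site: str
    lagged: bool


# Copy layout from this design: 2 HA copies in the primary datacenter,
# 1 HA copy and 1 lagged copy in the secondary datacenter.
LAYOUT = [
    Copy("primary", False),
    Copy("primary", False),
    Copy("secondary", False),
    Copy("secondary", True),
]


def surviving(copies, failed_site=None, extra_failures=0):
    """Copies left after losing a whole site, then `extra_failures` more servers."""
    left = [c for c in copies if c.site != failed_site]
    # Sort non-lagged copies first so additional failures remove them first.
    left.sort(key=lambda c: c.lagged)
    return left[extra_failures:]
```

Losing the primary datacenter leaves two copies; one more server failure leaves only the lagged copy, which is exactly the case the calculator has to be told to plan for.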

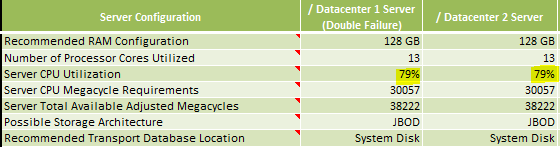

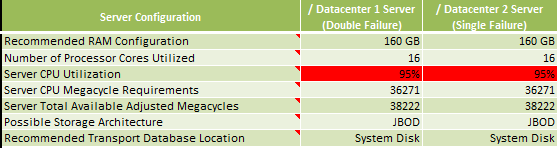

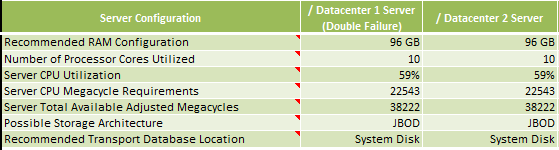

Making this change has put us well over the acceptable limit of 80% CPU usage. If I ever want to be able to rely on my LAGs in a worst-case scenario, this design is unacceptable (however, if I never planned on activating LAGs, 79% usage would probably be fine). To allow for LAG activation, I’m going to dial it back and increase the number of DAGs by one. This lowers the CPU usage to 71%:

Input Tab:

Role Requirements Tab:

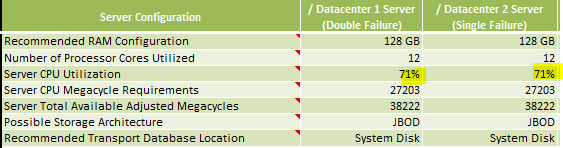

If I turn the LAG configuration back on, I get my final CPU and memory numbers:

Input Tab:

Role Requirements Tab:

I can also see the recommended number of servers on the Role Requirements Tab:

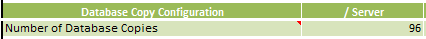

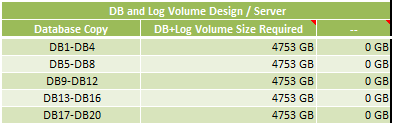

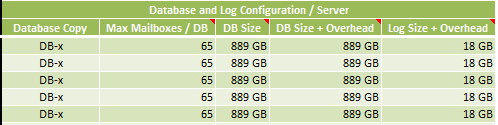
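The search in this section boils down to one rule: take the smallest number of DAGs whose worst case, including LAG activation, stays at or under 80% CPU. A minimal Python sketch of that rule, assuming you transcribe the CPU figures from the Role Requirements tab by hand for each DAG count you try; `fewest_dags` is a hypothetical helper.

```python
CPU_LIMIT = 80  # maximum recommended CPU utilization, in percent


def fewest_dags(readings, limit=CPU_LIMIT):
    """Return the smallest DAG count whose worst-case CPU stays within `limit`.

    `readings` maps a DAG count to a tuple of CPU percentages, one per
    scenario checked (e.g. the default figure and the figure with LAGs
    temporarily modeled as HA copies). Returns None if no count qualifies.
    """
    for dags in sorted(readings):
        if max(readings[dags]) <= limit:
            return dags
    return None
```

With 79% at 3 DAGs, a placeholder of 90% for the 3-DAG LAG-activation case (the post only says it is well over 80%), and 59% and 71% at 4 DAGs, `fewest_dags({3: (79, 90), 4: (59, 71)})` returns 4.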

Now that the number of servers is locked down, I need to look for problems in other parts of the calculator. Starting on the Input tab, I notice that the Database + Log volume is highlighted in yellow, indicating a problem. Whenever I see disks highlighted in yellow, the first place I look for an explanation is the Volume Requirements tab. There, I see that the calculator is recommending 4753GB of space per disk, which obviously will not fit on a 4TB disk. On the Role Requirements tab, I see that the number of database copies per server is 96, which is close to the maximum of 100 per server. This tells me that the calculator can’t drive the database size down any further, as that would require more database copies, and the copy limit prevents that. Finally, I notice that we’re trying to put 4 rather large databases (889GB each) on each disk.

Input Tab:

Role Requirements Tab:

Volume Requirements Tab:
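The reasoning here, and the contrasting case in Option 2, can be summarized as a small decision rule. This is a rough Python sketch, not anything the calculator exposes: the 90% "near the copy limit" threshold is an arbitrary assumption, and 3,725GB is the per-disk figure the calculator accepts for a 4TB disk.

```python
DISK_LIMIT_GB = 3725          # usable per-disk size the calculator accepts for 4TB disks
MAX_COPIES_PER_SERVER = 100   # database copy limit per server


def diagnose(required_gb_per_disk, copies_per_server,
             disk_limit_gb=DISK_LIMIT_GB, near_fraction=0.9):
    """Suggest a remedy for a Database + Log volume highlighted in yellow."""
    if required_gb_per_disk <= disk_limit_gb:
        return "fits"
    if copies_per_server >= near_fraction * MAX_COPIES_PER_SERVER:
        # More, smaller databases would break the copy limit, so grow them instead.
        return "increase database size"
    # The copy count has room, so the data needs more servers to spread across.
    return "add servers"
```

Option 1’s figures (4753GB per disk, 96 copies per server) lead to "increase database size", while Option 2’s (7130GB per disk, 32 copies per server) lead to "add servers".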

Since we’re currently constrained by the number of copies, there’s only one way out of this situation: increase the size of the databases. Doing this will help, as larger databases mean fewer databases. As for increasing the database size, there are a few possible routes in the calculator:

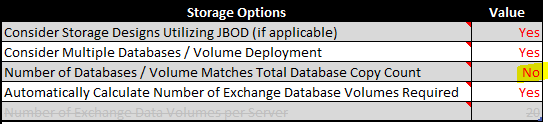

Route 1 is to force the calculator to only use 2 databases per volume instead of 4, which is done by telling it that the number of databases per volume does not match the copy count.

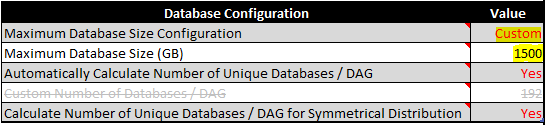

Route 2 is to manually specify the size that we want each database to be. If the specified database size is large enough, the calculator will automatically drop from 4 copies per volume to 2.

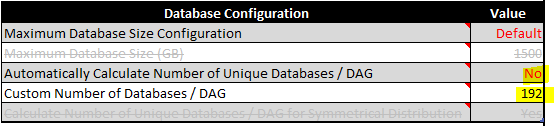

Route 3 is to manually specify how many total database copies we want in a DAG.

All three routes are available on the Input tab:

Route 1 and Route 3:

Route 2:

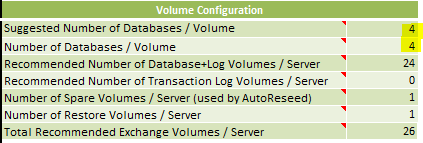

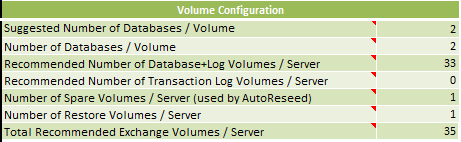

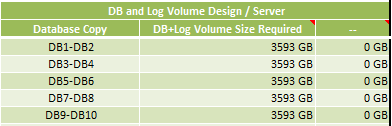

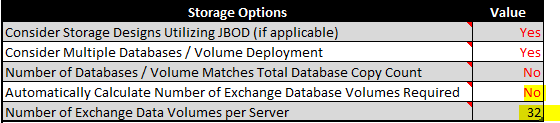

For the sake of brevity, I’m only going to explore Route 1; however, I recommend trying all three routes to find your perfect design. After following Route 1 and setting “Number of Databases / Volume Matches Total Database Copy Count” to No, the Volume Requirements tab shows that the number of databases per volume has dropped to 2, and the maximum required space per disk is now 3593GB. Additionally, the disks on the Input tab (not shown) are no longer yellow, which means the calculator likes this design.

I am going to make one final tweak to this design. Currently, the total number of required disks for the database, spare, and restore volumes is 35 disks. If I add in the two disks for the System and Exchange Binaries, we require 37, which means we would require another DAS enclosure for just a single disk. In an attempt to optimize this, I’m going to try dropping the total number of required database volumes to 32 by manually specifying “Number of Exchange Data Volumes Per Server” on the input tab:

Input Tab:

Volume Requirements Tab:

This final change results in an even spread of the data across the available disks, and also allows for us to completely fill up the server and storage enclosures with disks.
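The tweak above generalizes: trim the data-volume count until the whole server, overhead disks included, fills a whole number of 12-bay chassis. A minimal Python sketch, assuming one spare and one restore disk plus the two-disk system mirror per server (the same overhead Option 2 uses); `trim_to_full_chassis` is a hypothetical helper, and whether the smaller volume count still fits the data is for the calculator to confirm.

```python
SLOTS_PER_CHASSIS = 12       # LFF bays per 2U server or DAS enclosure
OVERHEAD_DISKS = 2 + 1 + 1   # system/binaries mirror, spare, restore (assumed one each)


def trim_to_full_chassis(data_volumes: int) -> int:
    """Largest data-volume count not above `data_volumes` that leaves no empty bays."""
    total = data_volumes + OVERHEAD_DISKS
    full_chassis = total // SLOTS_PER_CHASSIS
    if full_chassis == 0:
        raise ValueError("fewer disks than a single chassis holds")
    return full_chassis * SLOTS_PER_CHASSIS - OVERHEAD_DISKS
```

Starting from the 37-disk layout (33 data volumes under the assumption above), `trim_to_full_chassis(33)` returns 32, giving 36 disks: the server plus two full enclosures.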

Option 2: Minimum Amount of Storage Per Server / Large Number of Servers

For this next option, I’m going to crank the slider all the way to the other side, and try to come up with a design using (within reason) the smallest amount of storage per server possible. Specifically, I’m going to aim for each server having 12 total disks, including the system, spare, and recovery volumes, so that all disks can fit in a single server chassis.

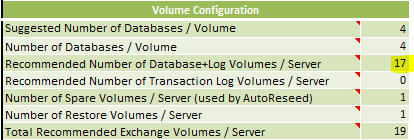

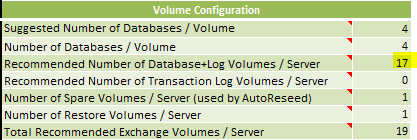

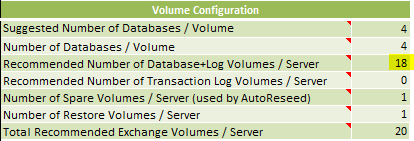

Starting off with a fresh copy of the template and going straight to the Volume Requirements tab, I see that the calculator is currently recommending 17 volumes per server.

Input Tab:

Volume Requirements Tab:

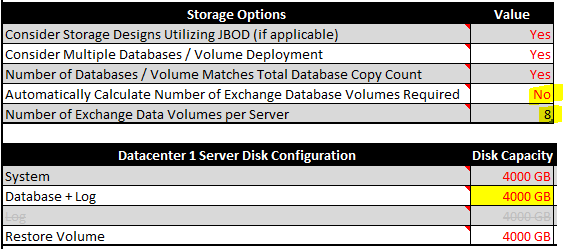

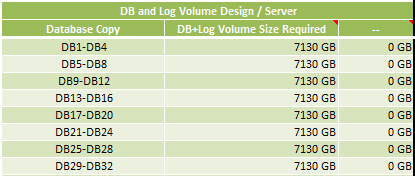

To fit all required disks in a single chassis, I need to get the number of Database + Log volumes per server down to 8, which leaves room for 1 spare, 1 recovery, and 2 system disks. To accomplish this, I’m going to go to the Input tab and set “Number of Exchange Data Volumes per Server” to 8. After making this change, I see that the Database + Log volume is highlighted in yellow, and the Volume Requirements tab shows I need 7130GB of space per disk.

Input Tab:

Volume Requirements Tab and Role Requirements Tab:

Unlike in Option 1, however, the number of database copies per server is well under the 100-copy limit, at 32. The problem this time is that there simply aren’t enough servers to spread out the required storage when the number of database volumes is limited to 8 per server.
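Before stepping the DAG count one at a time, a rough first guess can narrow the search. This Python sketch assumes the per-disk requirement scales inversely with the number of servers (and therefore with the number of DAGs, since nodes per DAG is fixed); the calculator’s own overhead factors make this approximate. `estimate_dags` is a hypothetical helper, and 3,725GB is the per-disk figure the calculator accepts for a 4TB disk.

```python
import math


def estimate_dags(required_gb_per_disk, current_dags, disk_limit_gb=3725):
    """Rough DAG count needed to bring per-disk usage down to the limit.

    Assumes per-disk GB is inversely proportional to the DAG count, so
    new_dags = current_dags * required / limit, rounded up.
    """
    return math.ceil(current_dags * required_gb_per_disk / disk_limit_gb)
```

If the template started at 8 DAGs (an assumption; the post does not state the starting count), `estimate_dags(7130, 8)` returns 16. As a sanity check on the inverse-scaling assumption, half of 7,130GB is exactly the 3,565GB reported at 16 DAGs.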

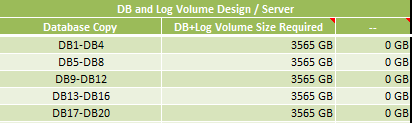

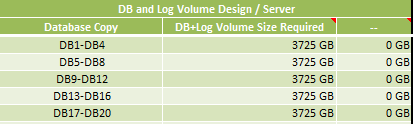

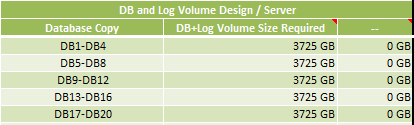

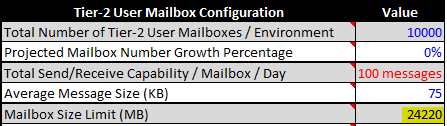

To fix this, I’m going to start increasing the number of DAGs, in turn increasing the number of servers, until the “DB + Log Volume Size Required” on the Volume Requirements tab drops to an acceptable value and the Database + Log volume on the Input tab is no longer yellow. Having worked with 4TB disks in the calculator before, I know that the magic number for DB + Log Volume Size to make the calculator happy is 3725GB: essentially the formatted capacity of a 4TB disk.

Input Tab:

Volume Requirements Tab:
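The 3,725GB figure appears to come from a unit mismatch: drive vendors quote 4TB in decimal bytes (10^12 per TB), while the calculator, like Windows, reports binary gigabytes (2^30 bytes). A quick Python check, which ignores file-system metadata overhead; `formatted_capacity_gb` is a hypothetical helper.

```python
DECIMAL_TB = 10**12  # bytes per TB as quoted by drive vendors
BINARY_GB = 2**30    # bytes per GB as reported in binary units


def formatted_capacity_gb(vendor_tb: float) -> int:
    """Approximate usable size of a drive in binary GB, truncated to a whole number."""
    return int(vendor_tb * DECIMAL_TB / BINARY_GB)
```

`formatted_capacity_gb(4)` returns 3725, matching the calculator’s limit.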

After gradually bringing the number of DAGs up, I find that I need 16 DAGs (128 servers). This drops the DB + Log Volume Size to 3565GB, which is under the maximum allowed size of 3725GB. That does bring up another problem, however. If we leave the configuration as is, there will be 160GB of wasted space per disk. Ideally, we want to get as much use out of our storage as possible. The best way to reclaim this storage is to give larger mailbox quotas to one or both of the user tiers until we hit the 3725GB per disk limit. One way to do this would be to simply increase the quotas and stop using the even values of 10GB and 25GB. Another way would be to find the optimal balance of 10GB to 25GB mailboxes to use up all available storage. So how do we go about doing that?

Algebra to the rescue!

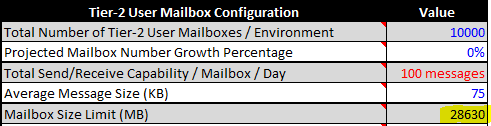

To figure out the optimal balance of mailboxes, we can use a simple system of equations. This is definitely one of the few times I’ve had to use algebra while working with Exchange (or in my career), so I’ll walk through the entire process for those whose algebra is rusty. Getting started, I first need to figure out the maximum amount of data that the entire system can handle, which means I need to push the calculator to the 3725GB per disk limit. To do that, I’m going to increase the quota on one of the mailbox tiers until I hit the limit. I’m going to choose Tier 2 to increase the quota on, since it has a smaller number of users, thus making it possible to increase the total requirements in smaller increments.

By gradually increasing the quota on Tier 2, I find that 28,630MB is the magic number that brings disks to the 3725GB limit:

Input Tab:

Volume Requirements Tab:

Next I’m going to figure out the total number of megabytes required by the entire system. I do so with the following formula:

(Tier 1 Mailbox Count * Tier 1 Quota) + (Tier 2 Mailbox Count * Tier 2 Quota) + … + (Tier N Mailbox Count * Tier N Quota) = Total Megabytes Consumed

Plugging the newly adjusted mailbox quotas into the above equation, I get:

(40,000 * 10,240) + (10,000 * 28,630) = 695,900,000MB
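The formula above translates directly into code. A minimal Python sketch, with each tier given as a (mailbox count, quota in MB) pair; `total_mb` is a hypothetical helper name.

```python
def total_mb(tiers):
    """Sum of mailbox count * quota (MB) over all tiers."""
    return sum(count * quota_mb for count, quota_mb in tiers)
```

`total_mb([(40_000, 10_240), (10_000, 28_630)])` returns 695,900,000, the figure above.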

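Now I need to set up a system of equations, where x is the number of 10GB (10,240MB) mailboxes and y is the number of 25GB (25,600MB) mailboxes. The first equation says how many 10GB and 25GB users it takes to equal 695,900,000MB, and the second says how many total users there are.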

10,240x + 25,600y = 695,900,000

x + y = 50,000

The easiest way to solve this is to use an online system-of-equations calculator. I won’t link to one, but they’re easy to find. You can also do it manually. I’ll do this one using the substitution method:

First I’ll get a value for y in terms of x:

y = 50,000 - x

Next, I’ll substitute that value into the first equation and solve for x:

10,240x + 25,600(50,000 - x) = 695,900,000

10,240x + 1,280,000,000 - 25,600x = 695,900,000

-15,360x = -584,100,000

x = 38,027.34375

Now that I have x, I can solve for y using the second equation:

y = 50,000 - 38,027.34375 = 11,972.65625

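Since mailbox counts must be whole numbers, I round the 25GB count down (which keeps the total within the available storage), giving an optimum distribution of 10GB to 25GB mailboxes of: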

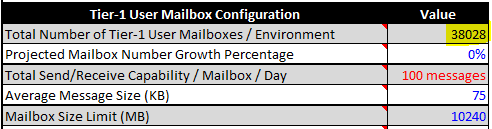

10GB: 38,028

25GB: 11,972
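The whole solve-and-round step can be scripted. This Python sketch solves the same two equations by substitution (solving for y rather than x, which gives the same answer), then rounds the larger-quota count down so the result never exceeds the storage budget. The rounding rule is an inference, but it reproduces every user split in the results table at the end of this post. `split_users` is a hypothetical helper.

```python
import math


def split_users(total_mb, small_quota_mb, large_quota_mb, users):
    """Split `users` between two quota tiers so their storage matches `total_mb`.

    Solves  small*x + large*y = total_mb  and  x + y = users  by substituting
    x = users - y, then rounds y down so the total stays within total_mb.
    """
    y_exact = (total_mb - small_quota_mb * users) / (large_quota_mb - small_quota_mb)
    y = math.floor(y_exact)
    return users - y, y
```

`split_users(695_900_000, 10_240, 25_600, 50_000)` returns `(38028, 11972)`, matching the split above.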

Now I’ll plug these new user counts into their respective tiers, also remembering to reset the Tier 2 quota back to 25GB. Lastly, I want to confirm that the DB+Log Volume Size remains at 3725GB, and the Database + Log section on the Input tab is not yellow. In this case everything looks good, so I’m done with this option.

Input Tab (make sure to reset the Mailbox Size Limit):

Volume Requirements Tab and Input Tab:

Option 3: Happy Medium

For the final option, I’m going to aim for what I like to call the “Happy Medium”; somewhere in between the two extremes we’ve already done. Since the first option ended up with 36 disks per server, and the second option ended up with 12 disks per server, I’m going to aim for 24 disks per server.

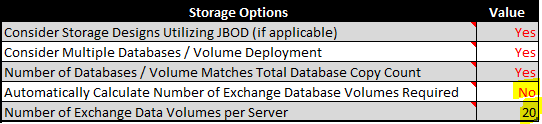

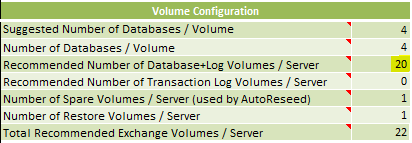

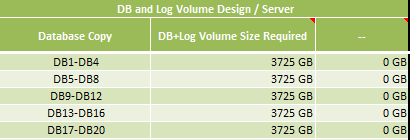

Again starting with a fresh copy of the template, the first thing I’m going to do is set “Number of Exchange Data Volumes per Server” to 20, which, together with 1 spare, 1 recovery, and 2 system disks, brings each server to 24 disks.

Input Tab:

Volume Requirements Tab:

When I make this change, I notice that the number of Database+Log volumes is only 17. What this means is that even though we specified that we wanted 20 volumes, the calculator has determined that it doesn’t actually need that many to fit the required storage. This is a result of having too many servers. To increase the number of volumes per server, we’ll have to decrease the total number of servers. I’ll do this by lowering the number of DAGs.

If I go down to 6 DAGs, I finally get to 20 disks per server; however, the Database + Log section has gone yellow. If I raise it back up to 7 DAGs, the number of volumes per server drops to 18.

6 DAGs:

Input Tab:

Volume Requirements Tab:

7 DAGs:

Input Tab:

Volume Requirements Tab:

Moving forward, I could just choose one or the other and try to adjust, but instead, I will split them out into separate sub-options. Because we like options, right?

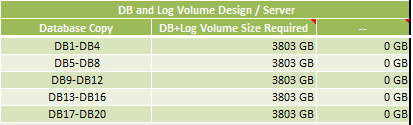

Option 3a: 6 DAGs

Looking at this sub-option a little further, I see that the reason the Database + Log section was yellow is because the calculator is trying to put 3803GB on each disk, which is higher than the 3725GB per disk limit.

Input Tab:

Volume Requirements Tab:

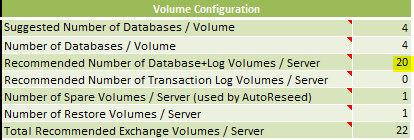

To resolve this, I need to do the reverse of the algebra-based process used in Option 2. First, I’m going to lower the quota on Tier 2 until we get to the 3725GB per disk limit.

Input Tab:

Volume Requirements Tab:

Next, using the adjusted Tier 2 quota of 24,220MB, I’ll figure out the total amount of storage in the system:

(40,000 * 10,240) + (10,000 * 24,220) = 651,800,000MB

I’ll set up a new system of equations:

10,240x + 25,600y = 651,800,000

x + y = 50,000

Solving the system, I get:

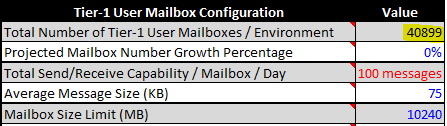

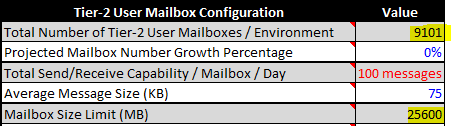

10GB: 40,899

25GB: 9,101

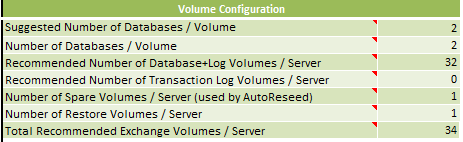

Finally, I’ll plug in the new user counts, set the Tier 2 mailbox size limit back to 25GB, and verify that the volume requirements are met.

Input Tab:

Input Tab:

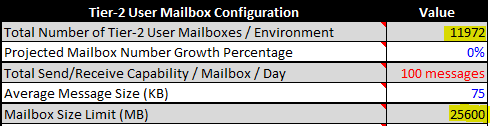

Option 3b: 7 DAGs

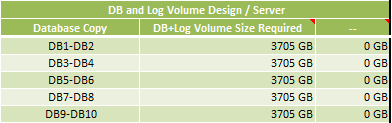

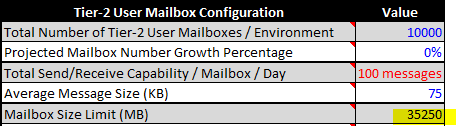

Since the calculator is only using 18 disks when 7 DAGs are configured, there is not enough total storage in use in the system to necessitate 20 disks. If we want to consume 20 disks, we’ll need to increase quotas. To do so, we’ll follow the same algebra-based process as in Option 2, increasing the quota on Tier 2 until 20 disks are in use and the size per disk is 3725GB. Doing so gives us:

Input Tab:

Volume Requirements Tab:

I won’t show the equations again, but the total storage available is 762,100,000MB, and the split of users is:

10GB: 33,718

25GB: 16,282

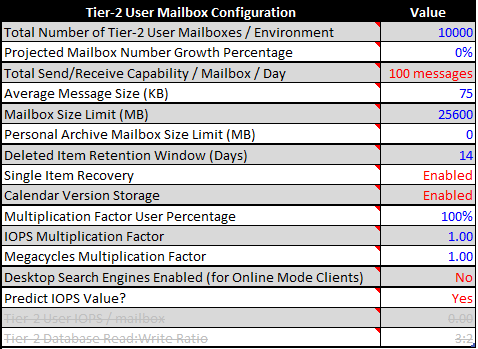

Conclusion:

The goal of this exercise was to come up with as many options as possible, and we ended up with four (five, including an optimized version of Option 1 added after the fact). If I were sizing an environment for real, I would repeat the process against DAGs of a couple of different sizes to come up with even more options. Once I’ve done so, I summarize all the options in a single table, which makes them easy to compare on cost.

| Option | Server Count | CPU% (DF) | Memory GB (DF) | CPU% (LA) | Memory GB (LA) | Disk Count | DAS Enclosures | Rack Units | 10GB Users | 25GB Users | Total Storage (MB) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 32 | 59 | 96 | 73 | 128 | 1152 | 64 | 192 | 40000 | 10000 | 665,600,000 |
| 1o | - | - | - | - | - | - | - | - | 39766 | 10234 | 669,200,000 |
| 2 | 128 | 15 | 24 | 18 | 32 | 1536 | 0 | 256 | 38028 | 11972 | 695,900,000 |
| 3a | 48 | 39 | 64 | 48 | 96 | 1152 | 48 | 192 | 40899 | 9101 | 651,800,000 |
| 3b | 56 | 34 | 48 | 41 | 96 | 1344 | 56 | 224 | 33718 | 16282 | 762,100,000 |

DF = Double Failure

LA = LAG Activation (Triple Failure)

1o = Retroactively optimized version of Option 1

In this particular scenario, options 1 and 3a look like the cheapest, and they also consume the fewest rack units. Depending on the size of mailboxes and the number of mailbox tiers, this will not always be the case, so I recommend exploring all available options before making a decision.
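Once the comparison table exists, a first-pass ranking is easy to automate. This Python sketch orders the options by rack units and then by server count, as a rough stand-in for cost; real pricing for servers, enclosures, and disks is not modeled and would have to come from your hardware quotes. The figures are copied from the comparison table (Option 1o has no hardware figures of its own), and `rank_by_footprint` is a hypothetical helper.

```python
# Hardware figures from the comparison table.
OPTIONS = {
    "1":  {"servers": 32,  "das": 64, "rack_units": 192},
    "2":  {"servers": 128, "das": 0,  "rack_units": 256},
    "3a": {"servers": 48,  "das": 48, "rack_units": 192},
    "3b": {"servers": 56,  "das": 56, "rack_units": 224},
}


def rank_by_footprint(options):
    """Order option names by rack units, then by server count."""
    return sorted(options, key=lambda name: (options[name]["rack_units"],
                                             options[name]["servers"]))
```

`rank_by_footprint(OPTIONS)` puts options 1 and 3a first, in line with the conclusion above.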

Happy calculating!

Comments

- Anonymous, January 01, 2003: thank you Mike. Great.

- Anonymous, June 10, 2014: Very helpful.... Thanks!

- Anonymous, October 18, 2014: Thanks alot