Quick tips to improve the accuracy of your metrics for deployments

One topic that comes up with a lot of my SCCM customers is that their management looks at reports on things like update deployments or software deployments and sees low success rates. Getting 100% success is rare in any large deployment, but each company should know what a reasonable target is, say 90% or 95%.

For any given deployment there are many things that can and do go wrong, but many failures could be considered “false alarms”: they serve only to drag down your metrics and distract you from the real problem clients. I like to start by splitting all your machines into two categories: manageable vs. non-manageable. A non-manageable machine could be corrupted, offline, or thrown away. The possible causes are a series of discussions on their own, and they lead into a conversation about the PFE client health and remediation service.
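To see how much non-manageable machines distort the headline number, the arithmetic can be sketched as below. The `Machine` record, its `manageable` and `succeeded` fields, and the fleet counts are hypothetical illustrations, not data from a real deployment or an SCCM API.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    # Hypothetical record; real values would come from SCCM deployment reports.
    name: str
    manageable: bool  # e.g. client installed and active
    succeeded: bool   # deployment reported success


def success_rate(machines):
    """Percentage of machines that reported success (0.0 for an empty list)."""
    if not machines:
        return 0.0
    return 100.0 * sum(m.succeeded for m in machines) / len(machines)


def split_rates(machines):
    """Return (rate over all targeted machines, rate over manageable machines only)."""
    manageable = [m for m in machines if m.manageable]
    return success_rate(machines), success_rate(manageable)


# Hypothetical fleet: 1,000 targeted machines, 80 of which are offline,
# corrupted, or retired and therefore can never report success.
fleet = (
    [Machine(f"ok{i}", True, True) for i in range(860)]
    + [Machine(f"fail{i}", True, False) for i in range(60)]
    + [Machine(f"dead{i}", False, False) for i in range(80)]
)

overall, manageable_only = split_rates(fleet)
print(f"All targeted machines: {overall:.1f}%")
print(f"Manageable only:       {manageable_only:.1f}%")
```

In this made-up example the same 860 successes read as 86.0% against every targeted machine but about 93.5% against the 920 manageable ones, which is the difference between missing and clearing a 90% target.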

Tip 1 – Manageable machines only

There are several ways to do this:

- For each collection, add a query rule requirement so that only machines with Client = 1 are included:

  - select SMS_R_System.Name from SMS_R_System where SMS_R_System.Client = "1"

  - Set up your “Clear install flag” maintenance task to change this flag to No after a reasonable period of inactivity.

- Create a collection of all machines where the client flag = Yes:

  - Limit your other software deployment collections to this collection.

  - Set up your “Clear install flag” maintenance task to change this flag to No after a reasonable period of inactivity.

- (SCCM 2012 only) Do either of the above, but use the client activity flag instead of the client installed flag:

  - select SMS_R_System.Name from SMS_R_System inner join SMS_G_System_CH_ClientSummary on SMS_G_System_CH_ClientSummary.ResourceId = SMS_R_System.ResourceId where SMS_G_System_CH_ClientSummary.ClientActiveStatus = 1
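The combined effect of the Client = 1 filter and the “Clear install flag” task can be modeled roughly as below. This is a simplified model, not how the site server implements the task: I'm assuming inactivity is judged from the last heartbeat time, and the `SystemRecord` type, its field names, and the 21-day threshold are hypothetical.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta


@dataclass(frozen=True)
class SystemRecord:
    # Hypothetical stand-in for an SMS_R_System row.
    name: str
    client: int               # 1 = client installed flag set
    last_heartbeat: datetime  # assumed basis for "inactive"


def clear_install_flag(records, now, inactive_days):
    """Model of the maintenance task: clear the flag on machines silent too long."""
    cutoff = now - timedelta(days=inactive_days)
    return [
        replace(r, client=0) if r.client == 1 and r.last_heartbeat < cutoff else r
        for r in records
    ]


def manageable_collection(records):
    """Mirrors the Tip 1 WQL rule: Name from SMS_R_System where Client = 1."""
    return [r.name for r in records if r.client == 1]


now = datetime(2015, 12, 1)
records = [
    SystemRecord("PC-A", 1, now - timedelta(days=2)),
    SystemRecord("PC-B", 1, now - timedelta(days=45)),  # went silent
    SystemRecord("PC-C", 0, now - timedelta(days=1)),   # never had a client
]

records = clear_install_flag(records, now, inactive_days=21)
print(manageable_collection(records))  # ['PC-A']
```

The design point the sketch illustrates is that the inactivity threshold lives in one place (the maintenance task), so every collection that filters on the flag shares the same definition of “inactive”.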

The above helps you deal with machines that were manageable at one time but are no longer in a manageable state. However, it only separates the bad records from the good ones already in your SCCM database. Keeping the bad records out of the SCCM database in the first place also helps, and that means not discovering objects from AD that can’t be managed.

Tip 2 – Avoid the “garbage” from AD

In SCCM 2007 or 2012

- Clean AD

  - Take steps like I suggest in one of my older posts.

In SCCM 2012 only

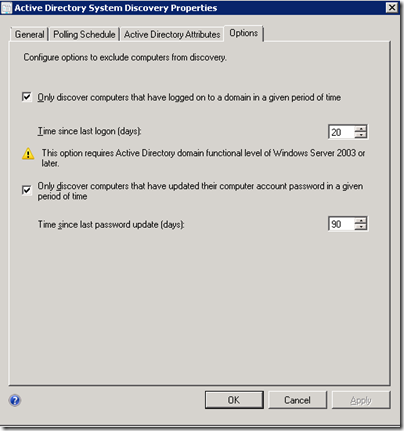

- Filter your discovery process
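A rough model of filtering discovery follows. I'm assuming the SCCM 2012 filters in question are the Active Directory System Discovery options that only discover computers which have logged on to the domain, or updated their computer account password, within a given number of days. The `AdComputer` type, its fields, the 90-day values, and the choice to require both conditions are hypothetical illustrations, not the discovery agent's code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AdComputer:
    # Hypothetical view of an AD computer object.
    name: str
    last_logon: datetime
    password_last_set: datetime


def discover(computers, now, logon_days=90, password_days=90):
    """Keep computers that logged on AND changed their account password recently.

    Requiring both conditions is an assumption made for this sketch.
    """
    logon_cutoff = now - timedelta(days=logon_days)
    password_cutoff = now - timedelta(days=password_days)
    return [
        c.name
        for c in computers
        if c.last_logon >= logon_cutoff and c.password_last_set >= password_cutoff
    ]


now = datetime(2015, 12, 1)
computers = [
    AdComputer("PC-A", now - timedelta(days=3), now - timedelta(days=20)),
    AdComputer("OLD-LAPTOP", now - timedelta(days=400), now - timedelta(days=400)),
    AdComputer("PC-C", now - timedelta(days=10), now - timedelta(days=200)),
]
print(discover(computers, now))  # ['PC-A']
```

Stale objects such as OLD-LAPTOP never reach the database, so they never show up as failures in a deployment report.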

Using those two tips, you should be able to get most deployments above 90% success. If not, you likely have a more systemic issue, such as missing content or a bad command line.

Comments

- Anonymous

December 01, 2015

Hi Mike,

Great article. I agree completely and try to follow these practices.

I am constantly having this conversation with my "Upper Management". They once claimed that either Gartner or Microsoft had posted 85% or some similar value as the deployment success rate, and that it should be the standard all the time, every time. Yet none of them can find me the link to that site.

So, in trying to put an end to this conversation, have you ever heard of an industry standard value for application or patch deployment success rates?

~Rich

- Anonymous

December 08, 2015

Rich - I have never heard of a hard and fast "standard" for this. More is always better. When the question comes up as to why things aren't at 100%, I typically have a conversation about what it takes to get there. Most companies I have worked with consider 90-95% to be an acceptable range. What it really comes down to is looking at your current success numbers, finding out what it would take to improve them, and then asking whether the improvement is worth the effort. Often it is not. If you aren't sure what it would take to improve the number, it is good to get an outside perspective, such as a ConfigMgr RaaS or something similar.