Disclaimer: Due to changes in the MSFT corporate blogging policy, I’m moving all of my content to the following location. Please reference all future content from that location. Thanks.

This is a fun little story, but I got to see firsthand how our security management pack works during a real, non-simulated attack, and I was pleasantly surprised by the results. For the record, I keep a couple of labs. One is internal and blocked from the outside world, and I spend most of my time in that lab. I recently started a lab in Azure, since Azure is something of an internal requirement right now given how big a deal it is in the IT world. Because of that, I made that lab do double duty as a SCOM 2016 environment and a second test environment. Given time constraints, as well as genuine curiosity, I did not put much effort into securing it. It would serve as a nice little honeypot to see whether I could detect the bad guys, and as it happened, it worked.

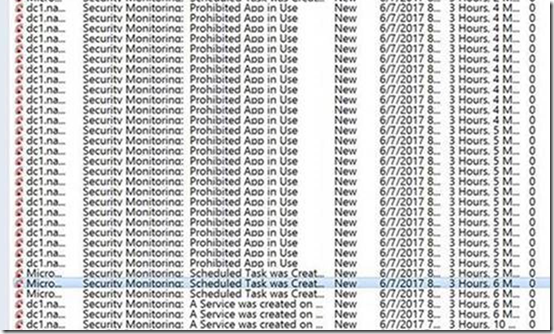

I posted a screenshot from this lab in an earlier piece about a month ago, where I specifically noted the failed RDP logons and why it is a bad idea to expose RDP to the world. As of this writing, my guess is that this is how the attacker got in. As the screenshot above shows, our management pack lit up almost immediately.
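Failed RDP logons are exactly the signal a brute-force detection keys on. Here is a minimal sketch of that idea. It is not how the security management pack implements it. It assumes failed-logon records (Windows Security event 4625, "An account failed to log on") have already been collected and parsed. The `FailedLogon` type, the `find_brute_force_sources` function, the 10-failure threshold, and the 5-minute window are all hypothetical choices for illustration. The sketch flags any source address that fails too often within a sliding window:

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FailedLogon:
    """Hypothetical parsed form of a Security event 4625 (failed logon)."""
    time: datetime
    source_ip: str
    account: str


def find_brute_force_sources(events, threshold=10, window=timedelta(minutes=5)):
    """Return the set of source IPs with at least `threshold` failed logons
    inside any sliding `window`. Both defaults are illustrative, not tuned."""
    recent = defaultdict(deque)  # source_ip -> failure times still in the window
    flagged = set()
    for event in sorted(events, key=lambda e: e.time):
        times = recent[event.source_ip]
        times.append(event.time)
        # Forget failures older than the window, relative to this event.
        while event.time - times[0] > window:
            times.popleft()
        if len(times) >= threshold:
            flagged.add(event.source_ip)
    return flagged


# Usage: one address guessing every 10 seconds, plus one user's single typo.
start = datetime(2017, 6, 1, 3, 0)
events = [FailedLogon(start + timedelta(seconds=10 * i), "203.0.113.7", "administrator")
          for i in range(30)]
events.append(FailedLogon(start, "198.51.100.20", "alice"))
print(find_brute_force_sources(events))  # {'203.0.113.7'}
```

Grouping by source address rather than by account is a deliberate choice here. A brute-force run from one host across many account names still trips the threshold, while a single user mistyping a password does not.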

As you can see from the screenshot, this was likely nothing more than a script kiddie, but when you leave RDP exposed to the world, you make it very easy for them to get in, and they succeeded. In this particular case, it was what I call a lazy attacker. I had AppLocker audit rules configured with the hashes of known versions of Mimikatz. My attacker, considering him or herself clever, had simply renamed Mimikatz to “Chrome” and put it in a Program Files directory called “google”. Renaming a file does not change its hash, so AppLocker did its thing. This won’t catch a pro, since pros recompile their tools so the binaries have different signatures and avoid AV detection. As such, I wouldn’t rely on hash rules alone and call myself secure.

As the screenshot also shows, this was not the only thing we picked up. The attacker also created scheduled tasks to launch their malware and started a few services on my domain controller. These checks are more valuable, because even the pros do this. With a good alert management process in place, a security team can confirm that the operations team was not responsible and respond while the attack is still under way, potentially stopping the attacker before any data is lost.
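Why renaming failed while recompiling would have worked comes down to what a hash rule matches. It matches the file's contents, not its name or folder. The sketch below illustrates that under some assumptions; it is not AppLocker itself. It uses a plain SHA-256 over the file's bytes (AppLocker's own hash calculation differs in detail) and made-up stand-in bytes rather than a real tool. The `sha256_of` and `rename_vs_rebuild` helpers are hypothetical.

```python
import hashlib
import os
import shutil
import tempfile


def sha256_of(path):
    """Plain SHA-256 over the file's bytes, read in 64 KiB chunks.
    (A simplification: AppLocker computes its own file hash.)"""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def rename_vs_rebuild():
    """Return (renamed_copy_matches, rebuilt_copy_matches) against a
    hypothetical hash-based audit list."""
    with tempfile.TemporaryDirectory() as workdir:
        # Made-up stand-in bytes for a known tool, not a real binary.
        original = os.path.join(workdir, "tool.exe")
        with open(original, "wb") as f:
            f.write(b"MZ" + b"\x00" * 1022)
        audited_hashes = {sha256_of(original)}  # what a hash rule would match

        # Lazy evasion: identical bytes under a new name and folder.
        os.makedirs(os.path.join(workdir, "google"))
        renamed = os.path.join(workdir, "google", "chrome.exe")
        shutil.copy(original, renamed)

        # Pro evasion: a "recompiled" tool where even one byte differs.
        rebuilt = os.path.join(workdir, "rebuilt.exe")
        with open(rebuilt, "wb") as f:
            f.write(b"MZ" + b"\x00" * 1021 + b"\x01")

        return (sha256_of(renamed) in audited_hashes,
                sha256_of(rebuilt) in audited_hashes)


renamed_hit, rebuilt_hit = rename_vs_rebuild()
print(renamed_hit)  # True: renaming does not change the hash
print(rebuilt_hit)  # False: different bytes, different hash
```

This is also why behavioral checks, such as new scheduled tasks or services, hold up better than hash lists. They key on what the attacker does rather than on the exact bytes of the tool.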

At this point, we will be doing some forensics to see what else they did or did not do. The Azure security team was also on top of this and stopped the attack in its tracks, so it will be interesting to see what I didn’t detect. Hopefully, we will come away with a few more nuggets to watch for.

Comments

- Anonymous

June 13, 2017

Please keep up the good work. Really, really interested in what the Azure security team comes up with.

- Anonymous

July 11, 2017

Ultimately, it wasn't too exciting. The attacker got in via brute force, which is not really a surprise, but other than creating a couple of standard user accounts as a back door, they remained dormant for a few months. I don't monitor account creation with the security MP, since that would generate far too much noise, so I didn't catch the initial event. What we caught was when they went active. Both the Azure team and the security monitoring MP picked up on those signatures when the attacker attempted to establish persistence: they started using Mimikatz and set it up to run as a scheduled task.

- Anonymous

September 26, 2018

Good job on this project, Nathan.

P.S. Good to run into a posting by you during a random search on Security Management Packs, lol.

Regards,
Rich Badu