Working with FTP

| Caution |

| Test the script(s), processes and/or data file(s) thoroughly in a test environment, and customize them to meet the requirements of your organization before attempting to use it in a production capacity. (See the legal notice here) |

Note: The workflow sample mentioned in this article can be downloaded from the Opalis project on CodePlex: https://opalis.codeplex.com |

Overview

The “Working with FTP” samples have been designed to demonstrate typical automation one could build leveraging FTP as a mechanism for Electronic Data Interchange (EDI). Some might find it surprising, but this type of use-case (FTP for data interchange) is actually not uncommon. These types of automation generally take the form of scheduled jobs that run in conjunction with a calendar. It’s very typical to see FTP used both for inbound data as well as outgoing. Additionally, it’s not unusual to see these automations leverage PGP encryption and file compression.

There are quite a few noteworthy features of the “Working with FTP” samples:

**

Single-Stage vs Dual-Stage Workflow – The Inbound sample shows a single-stage job, meaning that the entire process is orchestrated in a single top-line workflow. The Outbound sample, in contrast, is a two-stage job meaning that it is composed of two top-line processes. The first process prepares files for outgoing FTP and places the files in a staging area. These second process monitors the staging area and sends the files out. Both types of workflows are very typical for such automations.

Credential Management – The sample shows how child workflows and published data can be used to deal with scenarios where user ID/password information might be dynamic. One doesn’t want to have to edit a workflow every time a password change on an FTP server is required. Simply updating the credentials and have his dynamically provided to the workflow is a much better approach.

Service Testing – Service Testing would involve verification of a service state prior to execution of future process steps. This sample shows two methods of Service Testing. First, activities use “Looping” to test (and re-test) a service to make certain it’s available. Example: Try to FTP a file and if the transfer fails wait 5 minutes and try again, giving up after three attempts. The second approach uses child workflows as well as looping. Complex decision making is involved in deciding whether a primary or secondary FTP site should be used. Hence Service Testing along with complex business logic work together to provide an environment for the success of the workflow.

“Integration” with SSIS – It’s fairly common to see workflows that integrate FTP jobs with MS SQL Server Integration Services. There are activities in the Inbound and Outbound examples that show how to launch these jobs as part of the overall process. Some tweaking of the workflow might be required to make these sections work, but the basic pattern of how one would accomplish this is integrated into the workflow.

Branching and Multi/Single Instance Data – Shown in this sample is a unique problem not specific to the FTP use-case but worth noting nevertheless. It’s not uncommon for a workflow to require multi-instance data, for example to process a list of files, however a separate branch may require a single instance of data (example: Email the success of a workflow, which one might only want to run once). The “Working with FTP” sample shows how one can branch and integrate single/multi instance data effectively.

Top-Level Workflows

There are two top-level workflows that can be found in the “Working with FTP” folder.

“1. FTP – Inbound Data Transfer”

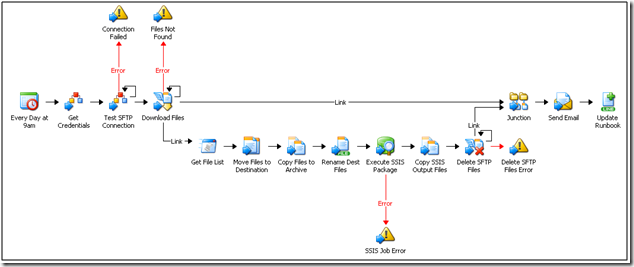

The Inbound sample is a fairly linear workflow. Every morning at 9am it collects files from an FTP site, unpacks them and runs an SSIS job that processes the files.

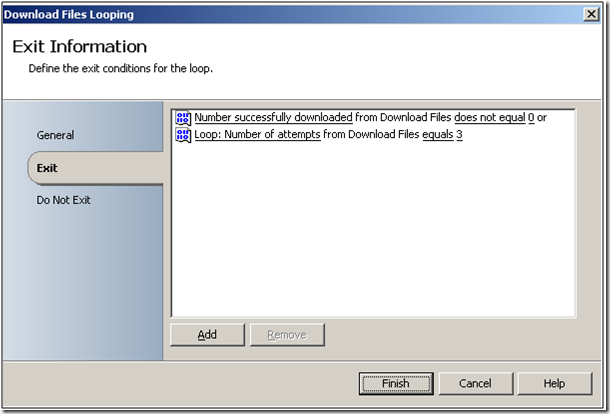

“Looping” is used in a few portions of the workflow to make the job more robust to issues with the FTP site. For example, the “Download Files” activity is configured attempt to download the files once every 600 seconds. It will try to download the files three times before giving up and throwing an exception.

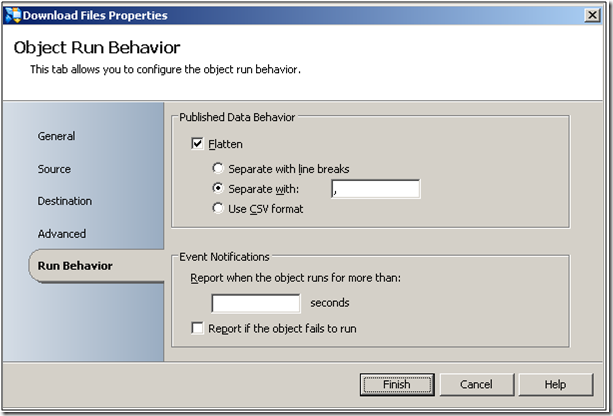

Additionally, this workflow shows how one can leverage multi-instance data in a complex branching scenario. Referring to the snapshot of the full workflow, notice how the “Download Files” activity branches to a junction. This is done since we want to process all of the files downloaded, but we also want to send an email when we are done processing the files. Only one email message is required (not one email message per file processed) . This is addressed by collapsing the “Download Files” multi-instance data into a single piece of published data using the “Flatten” option as shown below:

This causes the list of files and directories to be published as a single CSV line (a comma is used as a delimiter since it’s not permitted in either a path or a file name). Rather than getting one piece of Published Data for every file we now get a single piece of Published Data for all the files.

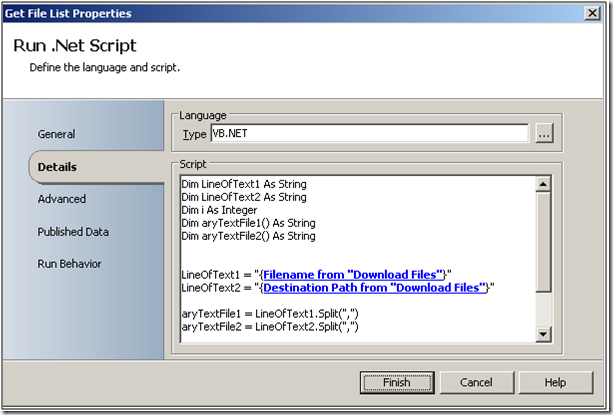

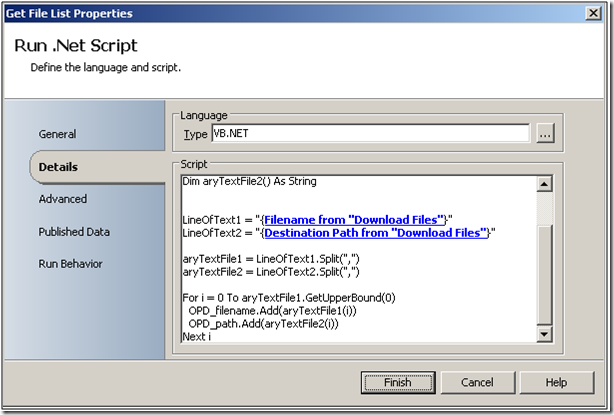

How then does the workflow process all the files if there is only one piece if Published Data produced for each file? Following the lower branch to the “Get File List” activity, one can see that a VB script has been used to “break out” the CSV file list back into multi-instance data.

Notice how the VB script accepts as input the CSV lists for the files and paths of the downloaded files. However, the data is “Split” into individual values that are stored in a pair of arrays. These arrays have been configured in the “Published Data” tab so Opalis knows to pick up their contents and publish it as Published Data.

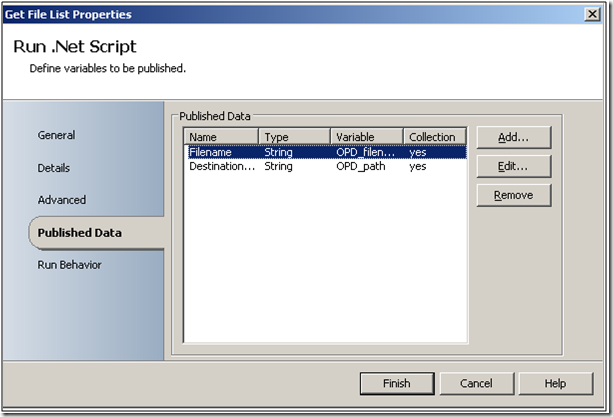

Opalis knows this is an array of multi-instance data since we told it OPD_filename and OPD_path were “collections” rather than pieces of single-value data. This can be seen in the “Published Data” tab where the “Collection” property is set to “Yes”.

The net effect is that all the files are processed via multi-instance data, however a single-value path of data is permitted past the junction, so only one email message is sent once all the files are processed.

“2. FTP – Outbound Data Transfer”

The Outbound sample is an example of a two-stage workflow. The first workflow runs according to a schedule and processes a list of files through SSIS. The second workflow monitors a “staging area” and processes files deposited by the first workflow. This approach is fairly typical since it allows the processing of data (which might need to be re-run) from the transfer of the data. It also allows a transfer workflow to be re-used for other data processing scenarios (hence making the process more modular).

“1. Outbound FTP Job – Stage Files”

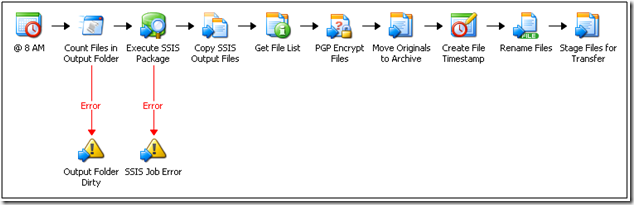

The first of the two workflows launches daily at 8am. Similar to the Inbound use-case, it checks an output folder to count the number of files it contains (via a VB script). The files are run through an SSIS job before the output is encrypted. The original files are “backed up” in an archive. Before the files are moved to the staging area for transfer (by the second workflow) a time-stamped filename is generated for each file to make it unique from the files from the previous day.

“2. Outbound FTP Job – Transfer Files”

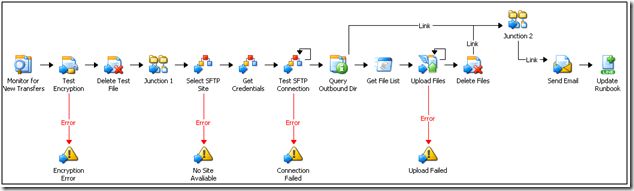

The second Outbound job monitors the staging area used by the first job. When files appear in the directory, the workflow first verifies that the files can be decrypted before transferring them to an external FTO site. Similar to the Inbound example, a multi-instance stream of uploaded files is “collapsed” into a single instance stream so one doesn’t send an email message multiple times once the workflow terminates (in this case by updating a log file).

Child Workflows

There are 3 child workflows that support the top-line processes. These are small workflows called from the top-line workflow as needed to complete the transaction. They all perform some small but useful task and could possibly be re-used in other workflows. It is possible that these workflows might need to be edited to deal with local variations in the environment where the sample is run:

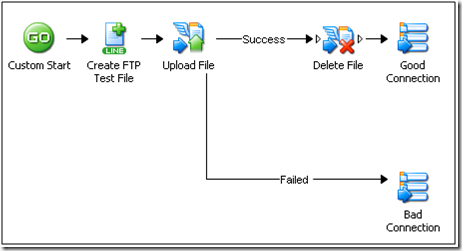

Get Credentials

This is a simple example of how one can perform credential management for workflows. The child workflow consists of a “Map Published Data” foundation activity that contains the list of usernames and passwords associated with a specific server names. Essentially, it’s a keyed lookup-table. If a password changes, the only workflow that needs to be edited is the Get Credentials workflow (the Inbound and Outbound samples all call this workflow so their username and password use is dynamically linked to this child workflow).

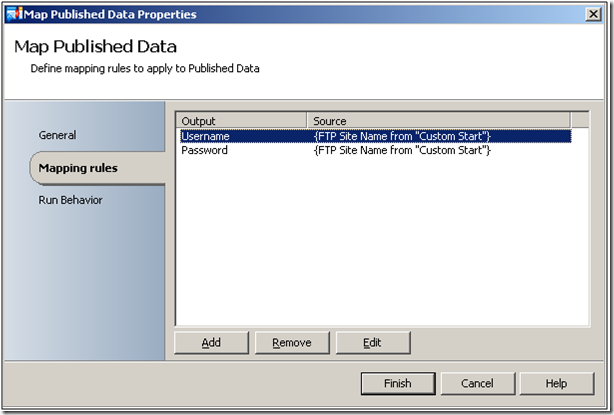

Looking into the Map Published Data activity, one can see that the Username and Password are the outputs it will produce.

Opening up one of the outputs (the Username in this case) one can see that the FTP Site Name is the key that is used and the “Pattern” is the site name. This maps to the FTP User Account that would be used for this FTP server. The Password is dealt with in the same fashion.

Of course, one could replace the Map Published Data activity with a different system for managing credentials. For example, a secure MS SQL Server could be used to store the information.

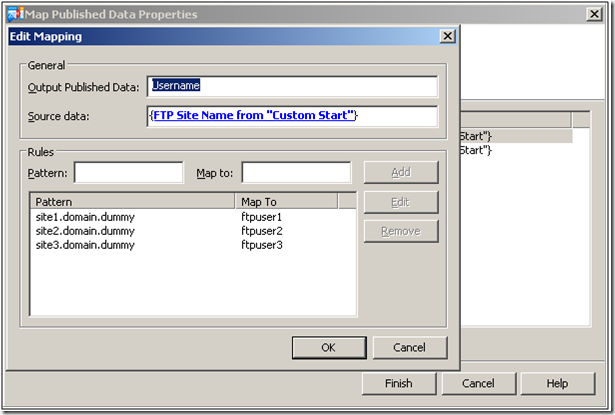

Test FTP Connection

This is a very simply workflow that accepts as input FTP site information. It verifies that the FTP site can be used for a transfer by putting a simple file onto the site and then downloading the file. Once the file has been downloaded it’s deleted from the FTP server. This workflow is called from many different places in the Working with FTP sample.

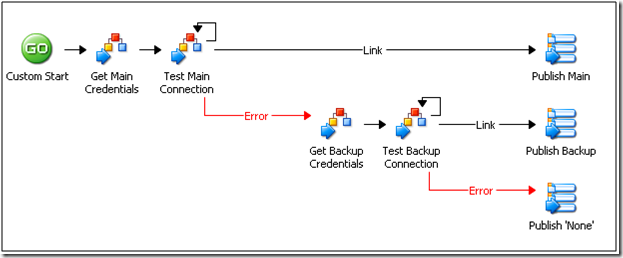

Choose SFTP Site

This workflow makes heavy use of the “Test FTP Connection” child workflow. It accepts as input a primary and secondary FTP site name. It gets the credentials for the primary and tries to connect (several times if the first attempt should fail, hence the “Looping” one can see in the workflow). If the primary site fails after several attempts, the backup site is tested. The site that works first (primary or backup) is what is returned to the parent workflow.

| Share this post : |  |