Should I Virtualise My Root CA?

Hey Everyone, today I wanted to post a note about virtualising offline Certificate Authorities. This is something I come across a lot with customers, and there is much debate in the industry about whether it is a valid approach. I’d like to share my thoughts on this, with the caveat that they are just my personal opinions, albeit based on more than six years of PKI implementation experience, including in many secure environments.

TLDR

Yes, we can set up a secured virtual environment and configure elaborate solutions for our CA to meet the required audit compliance or security requirements, but why bother? Just because you can do something does not mean you should. So my advice is DON’T DO IT!

The rest of this post will explain my reasoning for this.

The Proposal

In many environments a PKI underpins the security of the network, the user identities and access to systems and data. It can be the most trusted system an organisation owns and, if deployed correctly, will provide extremely robust authentication for devices, systems and users. It can also provide digital signature capability for signing code, emails and other documents, giving assurance around the integrity of that code or those documents. If implemented correctly, a PKI can also provide non-repudiation for signatures. Non-repudiation means the signer of a document or other piece of data cannot deny that they were the original signer, i.e. I couldn’t send an email, sign it with my private key and then claim that the email originated from someone else or that they had impersonated me. This is crucial for obtaining correct attribution for actions, and signatures used in this way can, under some circumstances, be held up in a court of law. I’ll have another post in the coming weeks about non-repudiation; it takes much more process and policy than just a check in a box on a certificate template, and therefore I think it requires its own post.

Don’t get me wrong, PKI can be implemented in an enterprise environment to deploy certificates in which we place a low assurance value. This is absolutely fine where the organisation knows this and isn’t relying on those certificates to provide authentication to High Value Assets, secure critical networks or provide robust signatures as stated above. You need to understand the purpose of your PKI and why you deployed it. For example, it’s no good implementing an accessible, fully online PKI with no process or policy, issuing smartcards for authentication from it and then thinking they provide highly robust authentication for your users. This is absolutely not the case, and if not done correctly it can even provide a lower level of security than a long random password (I also have some thoughts on smartcards pending, which I will write up soon).

For more information about securing a PKI please see the Microsoft whitepaper at https://aka.ms/securingpki.

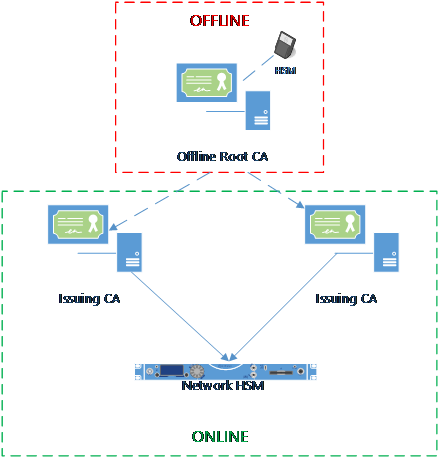

So, we have now set the scene for why PKI is important. Now down to design and implementation and the crux of why I put this post together. Many administrators when building a PKI design a simple two-tier deployment (my recommendation for 99% of customers) as per the following diagram:

The best practice is to have the Root CA offline and not connected to any networks to protect the sanctity of this server. This is a well understood practice and adhered to by many PKI administrators. It is also recommended to use a hardware device for protection of the private key material on the CA, i.e. to use a Hardware Security Module or HSM. In the typical scenario above the offline server would be disconnected from the network and usually stored on a physical server or other hardware such as a desktop PC or laptop. It would also have a HSM connected to it, either via a PCIe card or today we can use a USB connected HSM. The reasoning for this is all down to security and lowering the surface area for attack while also providing access control and robust auditing measures. The issuing servers are usually fully online and can be hosted on a virtual platform (where that platform is secured and managed to the same security tier as the CAs – usually tier 0) and are generally connected to a network based HSM.
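The design rules in the paragraph above can be written down as a small checklist. What follows is a minimal Python sketch rather than a real tool: the `CertificateAuthority` class, its field names and the `design_findings` function are all hypothetical names invented for illustration, and the checks cover only the points made above (Root CA offline with a locally attached HSM, issuing CAs online with a network based HSM).

```python
from dataclasses import dataclass
from enum import Enum


class HsmKind(Enum):
    NONE = "none"
    LOCAL = "local"      # PCIe card or USB connected HSM
    NETWORK = "network"  # network based HSM


@dataclass(frozen=True)
class CertificateAuthority:
    # Hypothetical model of the design above, not an API of any product.
    name: str
    is_root: bool
    network_connected: bool
    hsm: HsmKind


def design_findings(ca: CertificateAuthority) -> list[str]:
    """List the ways a CA departs from the two-tier design described above."""
    findings = []
    if ca.hsm is HsmKind.NONE:
        findings.append(f"{ca.name}: private key is not protected by an HSM")
    if ca.is_root and ca.network_connected:
        findings.append(f"{ca.name}: Root CA is connected to a network")
    if ca.is_root and ca.hsm is HsmKind.NETWORK:
        findings.append(f"{ca.name}: Root CA depends on a network based HSM")
    return findings


offline_root = CertificateAuthority("Root CA", True, False, HsmKind.LOCAL)
virtual_root = CertificateAuthority("Virtual Root CA", True, True, HsmKind.NETWORK)
issuing_ca = CertificateAuthority("Issuing CA", False, True, HsmKind.NETWORK)

for ca in (offline_root, virtual_root, issuing_ca):
    print(ca.name, design_findings(ca) or "matches the design")
```

In this sketch only the virtualised Root CA produces findings, because it is both network connected and reliant on a network based HSM. The issuing CA passes because being online is expected for that tier.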

So at this point you may be asking yourself “what is this guy’s problem then?”. Ok, so my problem is that we have this very well understood implementation methodology, based loosely on the design principles above, which has been around since the dawn of PKI implementation, yet traditional admins want to move away from this tried and tested methodology and come up with elaborate solutions for virtualising the Root CA. This is normally because the organisation does not want to buy a separate HSM for the Root CA, but would rather use one network based HSM for both online and offline environments. This can be achieved as most network based HSMs have two interfaces with no network connectivity between the two. My issue with this approach is twofold: firstly, the complexity of the solution; secondly, the fact that the CA needs to be connected to some network, private or not, to use its private key material, which increases its surface area for attack. You can use a USB based HSM in some virtual environments which support direct USB pass-through (not over the RDP channel as far as I’m aware), but I would ask all PKI administrators the question: why?

The below diagram is a simple design of the above mentioned solution, there are many variations on this design but nearly all involve connecting the offline CA to its own “private” HSM interface:

Taking Issue

The fundamental question we need to ask ourselves is, what is the problem we are trying to solve in virtualising the offline CA? Is it cost, hardware independence or a love for all things virtual? I’d like to do some debunking here on the virtualisation approach and put forward a case as to why I think the traditional method is still the best.

As mentioned, my main gripe is complexity. Let’s take a look at what we might need to do to secure our virtual CA such that we can state it has equivalent security to an offline CA.

Firstly, we need to ensure the virtual host has the same security as what our offline CA would give us, most notably the physical host server would need to be protected to the same impact level of a normal offline CA. This would involve housing the virtual host server in a secure rack with physical access controls and have the policies and processes to ensure only designated personnel have access to the CA. All media introduced to the CA would need to be controlled and malware checked. If the CA is hosted on another platform other than Hyper-V, you may also require an administrator workstation to connect in to the host server so you can manage the guest, this workstation would need to be built from a known good image and used exclusively for the CA management. This isn’t completely unachievable but does become unworkable when you need elegant, easy to follow scripted procedures for Key Signing Ceremonies.

Secondly, by the very nature of the CA being virtualised you will need to connect it to a network and a network based HSM. This will require infrastructure and means that the HSM to which the offline and online CAs are connected would need to be protected to the highest impact level of all the connected assets. So, in this instance the HSM would need to be stored in the secure rack with the same access control procedures as defined for the Root CA. In the scenario where the Root CA is completely offline we still need to protect the online CA HSM, but we can define a separate security zone as these assets are online and need to be network connectable, which provides distinct separation and allows us to split up the management of the two platforms. Remember our Root CA private key is the crown jewels we are trying to protect and we don’t want to unduly expose it by having network connectivity to the area where it is stored from a lower level security zone or to lower level administrators.
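The rule in the paragraph above, that a shared component has to be protected to the highest impact level of anything connected to it, is simple enough to state in a few lines of code. This is a hedged sketch only: the numeric impact levels and the `required_protection` helper are assumptions made for illustration and are not taken from any standard or product.

```python
def required_protection(connected_impact_levels: dict[str, int]) -> int:
    """A shared device inherits the highest impact level among connected assets.

    Higher number means higher impact level (an assumed convention for this sketch).
    """
    if not connected_impact_levels:
        raise ValueError("the device has no connected assets")
    return max(connected_impact_levels.values())


# Hypothetical levels: the Root CA is the crown jewels, issuing CAs sit below it.
ROOT_CA_LEVEL = 3
ISSUING_CA_LEVEL = 2

# Shared network HSM: both the Root CA and the issuing CAs connect to it.
shared_hsm = required_protection(
    {"Root CA": ROOT_CA_LEVEL, "Issuing CA": ISSUING_CA_LEVEL}
)

# Separate HSMs: the online HSM only ever serves the issuing CAs.
online_hsm = required_protection({"Issuing CA": ISSUING_CA_LEVEL})

print(f"shared HSM must be protected at level {shared_hsm}")
print(f"online-only HSM must be protected at level {online_hsm}")
```

With a shared HSM the whole device is pulled up to the Root CA's level, whereas with separate HSMs the online one can sit in its own, lower security zone.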

All of this network setup and thinking of assets crossing security zones just screams too much complexity to me and seems like over-engineering for a solution which really doesn’t need to be complex. With this being said, I have had customers propose a “simpler” approach to the above problem where the Root CA is stored on their production virtualisation platform where their online CAs are stored, the offline CA is then connected with private virtual switches, host NICs and cabling to the HSM. The root CA would then be taken offline by dismounting the virtual machine when not in use and encrypting the virtual hard disks such that the CA cannot be started. To bring it online a process would exist whereby the disks are decrypted and the virtual machine is mounted temporarily. Again, I would ask why? Why engineer the solution to be this complex? What is the problem you are trying to solve? Don’t make the design of your PKI overly elaborate when it doesn’t need to be.

Now to debunk some of the reasons for the virtualisation design:

Cost

The cost of a USB or PCIe HSM card is very reasonable today, and although I can’t give you exact figures it is somewhere in the region of £2.5K - £5K depending on the supplier, vendor and model you go for. This may initially look like a lot of money, but when you take into consideration the extra administration overhead you have with the virtual solution, the cost of the extra infrastructure and the pain you will go through to achieve the security you need, it’s actually a drop in the ocean. You can buy a cheapish laptop and USB HSM, store them in a protected safe when they are not in use and achieve a high level of data separation and security for relatively low cost. This will give you a high level of confidence in the integrity of your Root CA without over-engineering the solution. So, no, I don’t take cost as an argument for the virtualisation of the Root CA.

Hardware Independence

Another argument that people sometimes use is around hardware independence, such that if you had a system failure you could very easily restore the VM to any other physical server with the same hypervisor technology. The argument is that you aren’t reliant on the same physical hardware for system state restores, you get flexibility in your recovery approach and you disentangle yourself from specific hardware components. I would however still have a retort to this: if you have a robust backup and restore process following a componentised model, like the one I documented in this post, this argument becomes irrelevant. In my opinion the component based restore is elegant and not only allows you to restore your service but also to migrate it to a new hardware platform or even a new up-level operating system, such as during a Windows Server upgrade. The component based restore on a dedicated physical platform is also cost effective.

Summary

This post ended up being more of a rant than I wanted it to be, but we can sometimes get carried away with designing and implementing IT systems fixing problems that don’t exist and I’m a big believer in keeping things simple. The age old saying of “complexity is the enemy of security” rings true for this scenario. Virtualisation absolutely has a place at centre stage in our environments today, but in my opinion, it does not have a place when it comes to offline CAs. I can speak from experience of being in many Key Signing Ceremonies with third party auditors looking over your shoulder and will say that the last thing you want in this scenario is too much complexity as something is bound to go wrong and cause you lots of pain and questions from the auditor.

Alas, I am a bit of a PKI purist though, some may say a curmudgeon, so my views are slightly skewed. So with all of this being said, assess the needs of your PKI, what assets you are protecting and what level of trust you are placing in the certificates it issues. It may be the case that you don’t need extravagant security controls and protection mechanisms and therefore can ignore all of my advice :)

A good friend of mine, David Wozny, wrote an excellent whitepaper for Thales on Offline CA design principles which can be found here, and he covers off what I have spoken about in this post a lot more succinctly. I encourage you to read his whitepaper and this blog post together before you make any decisions on your offline Root CA implementation.

Comments

- Anonymous

October 04, 2015

very good article/articles

- Anonymous

October 16, 2015

A Snippet

- Anonymous

January 12, 2016

Love it.

- Anonymous

April 05, 2017

Great article Chris.

- Anonymous

October 03, 2017

Very good articles Thanks!

- Anonymous

March 18, 2018

You failed to take into account how expensive it would be to license a single standalone server for this purpose. It is now even more expensive to do this than it was when this article was originally posted. Server 2016 requires licensing for 16 cores minimum even if you loaded it on a cheap lowend mini PC or laptop that you could lock away in a safe.

If you install the Offline Root on an existing Hyper-V Server running Windows Server Data Center as a VM, licensing would be $0 additional cost.

Why not install a VM with no networking to configure it and then export the VM onto a USB stick or external drive and then stick that drive in a safe to keep it offline and air gapped? Much more cost effective.

- Anonymous

March 19, 2018

Hi Matthew,

Thanks for the feedback. Indeed, this article does not consider cost. However I would posit that it is not expensive for a standalone 2016 license, there is a minimum core count of 16 per server however the list price for the OS for the base license is $882. This covers you for the minimum outlined and also does not take in to consideration any enterprise or volume license agreements a customer might have in place. The problem with the approach you outline is that firstly you will have to network the CA to use a HSM or plug a USB HSM into the host and redirect to the guest. Neither of these approaches is ideal in my opinion. Secondly, you have the issue whereby the Root CA is exposed on a VM host, albeit for a limited time, but anyone who has admin rights to the host can then fully compromise that CA. This isn't just about the insider threat but admins who may have had their credentials compromised. This is why we recommend that domain controllers and CA servers exist on their own physical hosts in tier 0 away from tier 1 servers - see http://aka.ms/privsec for information on this. Lastly, the operational procedures around this become more complicated, you may argue that they are not but I have witnessed this type of process causing major issues with a PKI deployment, keeping it simple is definitely the approach to take.

Ultimately, the choice of design is down to the organisation and costs should certainly be accounted for based on risk profile. If you are investing in HSMs then I would say that you could probably stretch to the cost of a server license. The outlined approach is not for every organisation but I would suggest fits most enterprise requirements.

Chris